1. Spring Batch Introduction

Many applications within the enterprise domain require bulk processing to perform business operations in mission critical environments. These business operations include:

- Automated, complex processing of large volumes of information that is most efficiently processed without user interaction. These operations typically include time-based events (such as month-end calculations, notices, or correspondence).
- Periodic application of complex business rules processed repetitively across very large data sets (for example, insurance benefit determination or rate adjustments).
- Integration of information that is received from internal and external systems that typically requires formatting, validation, and processing in a transactional manner into the system of record. Batch processing is used to process billions of transactions every day for enterprises.

Spring Batch is a lightweight, comprehensive batch framework designed to enable the development of robust batch applications vital for the daily operations of enterprise systems. Spring Batch builds upon the characteristics of the Spring Framework that people have come to expect (productivity, POJO-based development approach, and general ease of use), while making it easy for developers to access and leverage more advanced enterprise services when necessary. Spring Batch is not a scheduling framework. There are many good enterprise schedulers (such as Quartz, Tivoli, Control-M, etc.) available in both the commercial and open source spaces. Spring Batch is intended to work in conjunction with a scheduler, not to replace one.

Spring Batch provides reusable functions that are essential in processing large volumes of records, including logging/tracing, transaction management, job processing statistics, job restart, skip, and resource management. It also provides more advanced technical services and features that enable extremely high-volume and high-performance batch jobs through optimization and partitioning techniques. Spring Batch can be used both in simple use cases (such as reading a file into a database or running a stored procedure) and in complex, high-volume use cases (such as moving high volumes of data between databases, transforming it, and so on). High-volume batch jobs can leverage the framework in a highly scalable manner to process significant volumes of information.

1.1. Background

While open source software projects and associated communities have focused greater attention on web-based and microservices-based architecture frameworks, there has been a notable lack of focus on reusable architecture frameworks to accommodate Java-based batch processing needs, despite the continued need to handle such processing within enterprise IT environments. The lack of a standard, reusable batch architecture has resulted in the proliferation of many one-off, in-house solutions developed within client enterprise IT functions.

SpringSource (now Pivotal) and Accenture collaborated to change this. Accenture’s hands-on industry and technical experience in implementing batch architectures, SpringSource’s depth of technical experience, and Spring’s proven programming model together made a natural and powerful partnership to create high-quality, market-relevant software aimed at filling an important gap in enterprise Java. Both companies worked with a number of clients who were solving similar problems by developing Spring-based batch architecture solutions. This provided some useful additional detail and real-life constraints that helped to ensure the solution can be applied to the real-world problems posed by clients.

Accenture contributed previously proprietary batch processing architecture frameworks to the Spring Batch project, along with committer resources to drive support, enhancements, and the existing feature set. Accenture’s contribution was based upon decades of experience in building batch architectures with the last several generations of platforms: COBOL/Mainframe, C++/Unix, and now Java/anywhere.

The collaborative effort between Accenture and SpringSource aimed to promote the standardization of software processing approaches, frameworks, and tools that can be consistently leveraged by enterprise users when creating batch applications. Companies and government agencies desiring to deliver standard, proven solutions to their enterprise IT environments can benefit from Spring Batch.

1.2. Usage Scenarios

A typical batch program generally:

- Reads a large number of records from a database, file, or queue.
- Processes the data in some fashion.
- Writes back data in a modified form.

Spring Batch automates this basic batch iteration, providing the capability to process similar transactions as a set, typically in an offline environment without any user interaction. Batch jobs are part of most IT projects, and Spring Batch is the only open source framework that provides a robust, enterprise-scale solution.
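To make the read-process-write cycle concrete, here is a minimal, framework-independent sketch in plain Java. It is not Spring Batch's API: the class name ChunkLoop and its parameters are hypothetical. It reads items one at a time, lets the processor drop an item by returning null, and writes the results in chunks, where each write stands in for one commit.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;

/** Hypothetical, framework-independent sketch of a chunk-oriented read-process-write loop. */
final class ChunkLoop {
    private ChunkLoop() {}

    /** Returns the number of chunks written (one write stands in for one commit). */
    static <I, O> int run(Iterator<I> reader,
                          Function<I, O> processor,
                          Consumer<List<O>> writer,
                          int chunkSize) {
        if (chunkSize <= 0) throw new IllegalArgumentException("chunkSize must be positive");
        int commits = 0;
        int readInChunk = 0;
        List<O> chunk = new ArrayList<>(chunkSize);
        while (reader.hasNext()) {
            I item = reader.next();                 // read
            readInChunk++;
            O out = processor.apply(item);          // process; null filters the item out
            if (out != null) chunk.add(out);
            if (readInChunk == chunkSize) {         // chunk boundary reached
                writer.accept(new ArrayList<>(chunk)); // write, then "commit"
                commits++;
                chunk.clear();
                readInChunk = 0;
            }
        }
        if (readInChunk > 0) {                      // flush the final partial chunk
            writer.accept(new ArrayList<>(chunk));
            commits++;
        }
        return commits;
    }
}
```

Counting the chunk boundary by items read, rather than items written, keeps the commit size predictable even when many items are filtered out.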

Business Scenarios

- Commit batch process periodically
- Concurrent batch processing: parallel processing of a job
- Staged, enterprise message-driven processing
- Massively parallel batch processing
- Manual or scheduled restart after failure
- Sequential processing of dependent steps (with extensions to workflow-driven batches)
- Partial processing: skip records (for example, on rollback)
- Whole-batch transaction, for cases with a small batch size or existing stored procedures/scripts

Technical Objectives

- Batch developers use the Spring programming model: Concentrate on business logic and let the framework take care of infrastructure.
- Clear separation of concerns between the infrastructure, the batch execution environment, and the batch application.
- Provide common, core execution services as interfaces that all projects can implement.
- Provide simple and default implementations of the core execution interfaces that can be used 'out of the box'.
- Easy to configure, customize, and extend services by leveraging the Spring Framework in all layers.
- All existing core services should be easy to replace or extend, without any impact to the infrastructure layer.
- Provide a simple deployment model, with the architecture JARs completely separate from the application, built using Maven.

1.3. Spring Batch Architecture

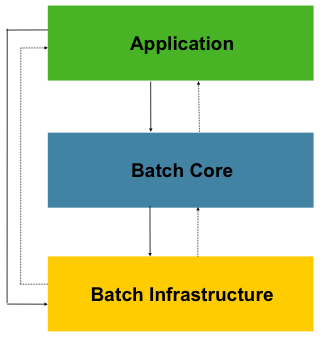

Spring Batch is designed with extensibility and a diverse group of end users in mind. The figure below shows the layered architecture that supports the extensibility and ease of use for end-user developers.

This layered architecture highlights three major high-level components: Application, Core, and Infrastructure. The application contains all batch jobs and custom code written by developers using Spring Batch. The Batch Core contains the core runtime classes necessary to launch and control a batch job. It includes implementations for JobLauncher, Job, and Step. Both Application and Core are built on top of a common infrastructure. This infrastructure contains common readers and writers and services (such as the RetryTemplate), which are used both by application developers (readers and writers, such as ItemReader and ItemWriter) and by the core framework itself (retry, which is its own library).

1.4. General Batch Principles and Guidelines

The following key principles, guidelines, and general considerations should be kept in mind when building a batch solution.

- Remember that a batch architecture typically affects on-line architecture and vice versa. Design with both architectures and environments in mind, using common building blocks when possible.
- Simplify as much as possible and avoid building complex logical structures in single batch applications.
- Keep the processing and storage of data physically close together (in other words, keep your data where your processing occurs).
- Minimize system resource use, especially I/O. Perform as many operations as possible in internal memory.
- Review application I/O (analyze SQL statements) to ensure that unnecessary physical I/O is avoided. In particular, look for the following four common flaws:
  - Reading data for every transaction when the data could be read once and cached or kept in the working storage.
  - Rereading data for a transaction where the data was read earlier in the same transaction.
  - Causing unnecessary table or index scans.
  - Not specifying key values in the WHERE clause of an SQL statement.
- Do not do things twice in a batch run. For instance, if you need data summarization for reporting purposes, you should (if possible) increment stored totals when data is being initially processed, so your reporting application does not have to reprocess the same data.
- Allocate enough memory at the beginning of a batch application to avoid time-consuming reallocation during the process.
- Always assume the worst with regard to data integrity. Insert adequate checks and record validation to maintain data integrity.
- Implement checksums for internal validation where possible. For example, flat files should have a trailer record stating the total number of records in the file and an aggregate of the key fields.
- Plan and execute stress tests as early as possible in a production-like environment with realistic data volumes.
- In large batch systems, backups can be challenging, especially if the system is running concurrently with on-line processing on a 24-7 basis. Database backups are typically well taken care of in the on-line design, but file backups should be considered just as important. If the system depends on flat files, file backup procedures should not only be in place and documented but also be regularly tested.
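The trailer-record checksum recommended above can be sketched as follows. The file layout (detail lines of the form key,amount and a final trailer line TRL,count,keySum) and the class name are assumptions made for illustration; a real layout would be defined by the interface agreement with the sending system.

```java
import java.util.List;

/**
 * Hypothetical trailer-record check. Assumed layout: detail lines "key,amount",
 * last line "TRL,<recordCount>,<sumOfKeys>". Malformed numbers throw
 * NumberFormatException, which a caller would treat as a validation failure.
 */
final class TrailerCheck {
    private TrailerCheck() {}

    static boolean isValid(List<String> lines) {
        if (lines.isEmpty()) return false;
        String[] trailer = lines.get(lines.size() - 1).split(",");
        if (trailer.length != 3 || !trailer[0].equals("TRL")) return false;
        long expectedCount = Long.parseLong(trailer[1]);
        long expectedKeySum = Long.parseLong(trailer[2]);

        long count = 0;
        long keySum = 0;
        for (String line : lines.subList(0, lines.size() - 1)) {
            String[] fields = line.split(",");
            count++;                             // total number of detail records
            keySum += Long.parseLong(fields[0]); // aggregate of the key field
        }
        return count == expectedCount && keySum == expectedKeySum;
    }
}
```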

1.5. Batch Processing Strategies

To help design and implement batch systems, basic batch application building blocks and patterns should be provided to the designers and programmers in the form of sample structure charts and code shells. When starting to design a batch job, the business logic should be decomposed into a series of steps that can be implemented using the following standard building blocks:

- Conversion Applications: For each type of file supplied by or generated to an external system, a conversion application must be created to convert the transaction records supplied into a standard format required for processing. This type of batch application can partly or entirely consist of translation utility modules (see Basic Batch Services).
- Validation Applications: Validation applications ensure that all input/output records are correct and consistent. Validation is typically based on file headers and trailers, checksums and validation algorithms, and record level cross-checks.
- Extract Applications: An application that reads a set of records from a database or input file, selects records based on predefined rules, and writes the records to an output file.
- Extract/Update Applications: An application that reads records from a database or an input file and makes changes to a database or an output file driven by the data found in each input record.
- Processing and Updating Applications: An application that performs processing on input transactions from an extract or a validation application. The processing usually involves reading a database to obtain data required for processing, potentially updating the database and creating records for output processing.
- Output/Format Applications: Applications that read an input file, restructure data from each record according to a standard format, and produce an output file for printing or transmission to another program or system.

Additionally, a basic application shell should be provided for business logic that cannot be built using the previously mentioned building blocks.

In addition to the main building blocks, each application may use one or more standard utility steps, such as:

- Sort: A program that reads an input file and produces an output file where records have been re-sequenced according to a sort key field in the records. Sorts are usually performed by standard system utilities.
- Split: A program that reads a single input file and writes each record to one of several output files based on a field value. Splits can be tailored or performed by parameter-driven standard system utilities.
- Merge: A program that reads records from multiple input files and produces one output file with combined data from the input files. Merges can be tailored or performed by parameter-driven standard system utilities.
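A split step can be sketched in plain Java by grouping records on one field. The comma-separated layout and the class name SplitUtility are assumptions; in-memory lists stand in for the output files, keyed by the field value that selects each file.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical split utility: routes each comma-separated record by one field's value. */
final class SplitUtility {
    private SplitUtility() {}

    static Map<String, List<String>> split(List<String> records, int fieldIndex) {
        // LinkedHashMap keeps output "files" in the order their keys first appear.
        Map<String, List<String>> outputs = new LinkedHashMap<>();
        for (String line : records) {
            String key = line.split(",")[fieldIndex];
            outputs.computeIfAbsent(key, k -> new ArrayList<>()).add(line);
        }
        return outputs;
    }
}
```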

Batch applications can additionally be categorized by their input source:

- Database-driven applications are driven by rows or values retrieved from the database.
- File-driven applications are driven by records or values retrieved from a file.
- Message-driven applications are driven by messages retrieved from a message queue.

The foundation of any batch system is the processing strategy. Factors affecting the selection of the strategy include estimated batch system volume, concurrency with on-line systems or with other batch systems, and available batch windows. (Note that, with more enterprises wanting to be up and running 24x7, clear batch windows are disappearing.)

Typical processing options for batch are (in increasing order of implementation complexity):

- Normal processing during a batch window in off-line mode.
- Concurrent batch or on-line processing.
- Parallel processing of many different batch runs or jobs at the same time.
- Partitioning (processing of many instances of the same job at the same time).
- A combination of the preceding options.

Some or all of these options may be supported by a commercial scheduler.

The following section discusses these processing options in more detail. It is important to note that, as a rule of thumb, the commit and locking strategy adopted by batch processes depends on the type of processing performed and that the on-line locking strategy should also use the same principles. Therefore, the batch architecture cannot be simply an afterthought when designing an overall architecture.

The locking strategy can be to use only normal database locks or to implement an additional custom locking service in the architecture. The locking service would track database locking (for example, by storing the necessary information in a dedicated db-table) and give or deny permissions to the application programs requesting a db operation. Retry logic could also be implemented by this architecture to avoid aborting a batch job in case of a lock situation.

1. Normal processing in a batch window

For simple batch processes running in a separate batch window where the data being updated is not required by on-line users or other batch processes, concurrency is not an issue and a single commit can be done at the end of the batch run.

In most cases, a more robust approach is more appropriate. Keep in mind that batch systems have a tendency to grow as time goes by, both in terms of complexity and the data volumes they handle. If no locking strategy is in place and the system still relies on a single commit point, modifying the batch programs can be painful. Therefore, even with the simplest batch systems, consider the need for commit logic for restart-recovery options as well as the information concerning the more complex cases described later in this section.
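The following sketch illustrates commit logic for restart-recovery under simple assumptions: an in-memory list stands in for the system of record, and an integer checkpoint stands in for persisted restart data. The class and field names are hypothetical. The key point is that the committed data and the restart position are updated together, so a failure loses only the current, uncommitted chunk.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of periodic commits with a restart checkpoint. */
final class RestartableRun {
    final List<String> committed = new ArrayList<>(); // stands in for the system of record
    int checkpoint = 0; // index of the next record to process; persisted with each commit

    /** Processes input from the checkpoint; failAt simulates a crash (-1 for none). */
    void run(List<String> input, int commitInterval, int failAt) {
        if (commitInterval <= 0) throw new IllegalArgumentException("commitInterval must be positive");
        List<String> pending = new ArrayList<>(); // uncommitted work of the current chunk
        for (int i = checkpoint; i < input.size(); i++) {
            if (i == failAt) {
                // Whatever is in `pending` is discarded, as a rollback would do.
                throw new IllegalStateException("simulated failure at record " + i);
            }
            pending.add(input.get(i).toUpperCase()); // the "processing"
            if (pending.size() == commitInterval || i == input.size() - 1) {
                committed.addAll(pending); // commit the chunk...
                checkpoint = i + 1;        // ...and the restart position together
                pending.clear();
            }
        }
    }
}
```

After the simulated failure at record 3, a second call resumes from the checkpoint and does not reprocess the records that were already committed.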

2. Concurrent batch or on-line processing

Batch applications processing data that can be simultaneously updated by on-line users should not lock any data (either in the database or in files) which could be required by on-line users for more than a few seconds. Also, updates should be committed to the database at the end of every few transactions. This minimizes the portion of data that is unavailable to other processes and the elapsed time the data is unavailable.

Another option to minimize physical locking is to have logical row-level locking implemented with either an Optimistic Locking Pattern or a Pessimistic Locking Pattern.

- Optimistic locking assumes a low likelihood of record contention. It typically means inserting a timestamp column in each database table used concurrently by both batch and on-line processing. When an application fetches a row for processing, it also fetches the timestamp. As the application then tries to update the processed row, the update uses the original timestamp in the WHERE clause. If the timestamp matches, the data and the timestamp are updated. If the timestamp does not match, this indicates that another application has updated the same row between the fetch and the update attempt. Therefore, the update cannot be performed.
- Pessimistic locking is any locking strategy that assumes there is a high likelihood of record contention and therefore either a physical or logical lock needs to be obtained at retrieval time. One type of pessimistic logical locking uses a dedicated lock-column in the database table. When an application retrieves the row for update, it sets a flag in the lock column. With the flag in place, other applications attempting to retrieve the same row logically fail. When the application that sets the flag updates the row, it also clears the flag, enabling the row to be retrieved by other applications. The integrity of data must also be maintained between the initial fetch and the setting of the flag, for example by using db locks (such as SELECT FOR UPDATE). Note also that this method suffers from the same downside as physical locking, except that it is somewhat easier to build a time-out mechanism that releases the lock if the user goes to lunch while the record is locked.

These patterns are not necessarily suitable for batch processing, but they might be used for concurrent batch and on-line processing (such as in cases where the database does not support row-level locking). As a general rule, optimistic locking is more suitable for on-line applications, while pessimistic locking is more suitable for batch applications. Whenever logical locking is used, the same scheme must be used for all applications accessing data entities protected by logical locks.

Note that both of these solutions only address locking a single record. Often, we may need to lock a logically related group of records. With physical locks, you have to manage these very carefully in order to avoid potential deadlocks. With logical locks, it is usually best to build a logical lock manager that understands the logical record groups you want to protect and that can ensure that locks are coherent and non-deadlocking. This logical lock manager usually uses its own tables for lock management, contention reporting, time-out mechanism, and other concerns.
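The optimistic locking pattern described above can be sketched in memory as follows. A version stamp stands in for the timestamp column, and the update method mirrors an UPDATE statement whose WHERE clause includes the originally fetched timestamp. The class is hypothetical and synchronized only to keep the sketch thread-safe; in a database, the atomicity comes from the single UPDATE statement.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical in-memory table illustrating the optimistic locking pattern. */
final class OptimisticTable {
    static final class Row {
        final String data;
        final long stamp; // stands in for the timestamp column
        Row(String data, long stamp) {
            this.data = data;
            this.stamp = stamp;
        }
    }

    private final Map<Integer, Row> rows = new HashMap<>();
    private long clock = 0; // monotonically increasing stamp source

    synchronized void insert(int id, String data) {
        rows.put(id, new Row(data, ++clock));
    }

    synchronized Row fetch(int id) {
        return rows.get(id);
    }

    /**
     * Mirrors: UPDATE t SET data = ?, ts = ? WHERE id = ? AND ts = ?
     * Returns false when another writer changed the row after it was fetched.
     */
    synchronized boolean update(int id, String newData, long stampReadAtFetch) {
        Row current = rows.get(id);
        if (current == null || current.stamp != stampReadAtFetch) return false;
        rows.put(id, new Row(newData, ++clock));
        return true;
    }
}
```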

3. Parallel Processing

Parallel processing allows multiple batch runs or jobs to run in parallel to minimize the total elapsed batch processing time. This is not a problem as long as the jobs are not sharing the same files, db-tables, or index spaces. If they do, this service should be implemented using partitioned data. Another option is to build an architecture module for maintaining interdependencies by using a control table. A control table should contain a row for each shared resource and whether it is in use by an application or not. The batch architecture or the application in a parallel job would then retrieve information from that table to determine if it can get access to the resource it needs or not.

If the data access is not a problem, parallel processing can be implemented through the use of additional threads to process in parallel. In the mainframe environment, parallel job classes have traditionally been used, in order to ensure adequate CPU time for all the processes. Regardless, the solution has to be robust enough to ensure time slices for all the running processes.

Other key issues in parallel processing include load balancing and the availability of general system resources such as files, database buffer pools, and so on. Also note that the control table itself can easily become a critical resource.
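A control table for shared resources, as described above, can be sketched with a concurrent map in place of the database table. Each entry maps a resource name to the job currently using it; the class and method names are hypothetical. putIfAbsent gives the atomic check-and-claim that a real implementation would get from a conditional UPDATE or INSERT.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

/** Hypothetical control table: one entry per shared resource that is currently in use. */
final class ControlTable {
    private final ConcurrentMap<String, String> inUseBy = new ConcurrentHashMap<>();

    /** Claims the resource for jobId; returns false if another job already holds it. */
    boolean tryAcquire(String resource, String jobId) {
        String holder = inUseBy.putIfAbsent(resource, jobId); // atomic check-and-claim
        return holder == null || holder.equals(jobId);
    }

    /** Releases the resource, but only if jobId is the current holder. */
    boolean release(String resource, String jobId) {
        return inUseBy.remove(resource, jobId);
    }
}
```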

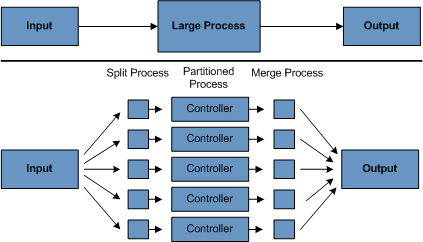

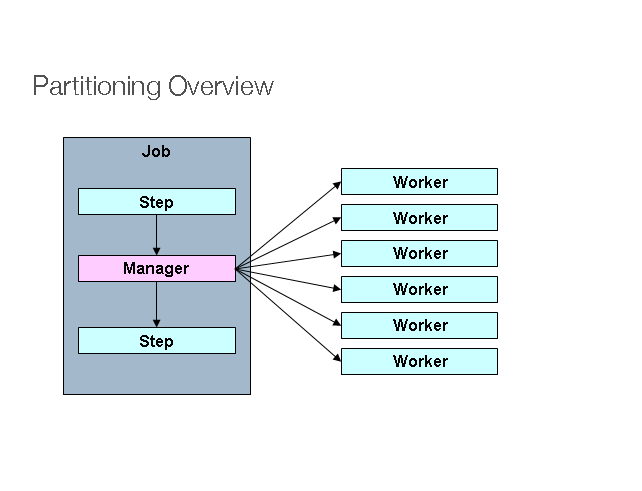

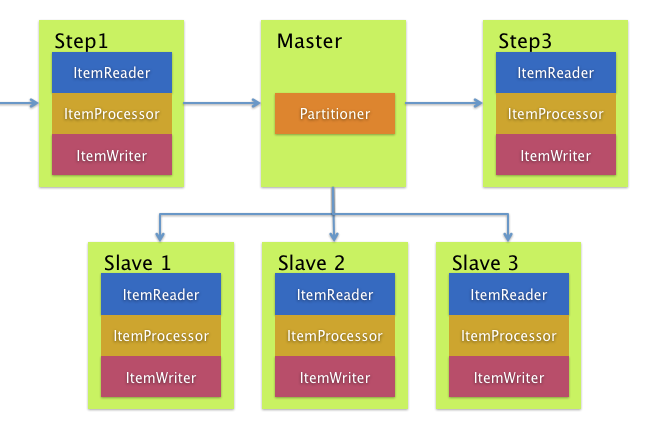

4. Partitioning

Using partitioning allows multiple versions of large batch applications to run concurrently. The purpose of this is to reduce the elapsed time required to process long batch jobs. Processes that can be successfully partitioned are those where the input file can be split and/or the main database tables partitioned to allow the application to run against different sets of data.

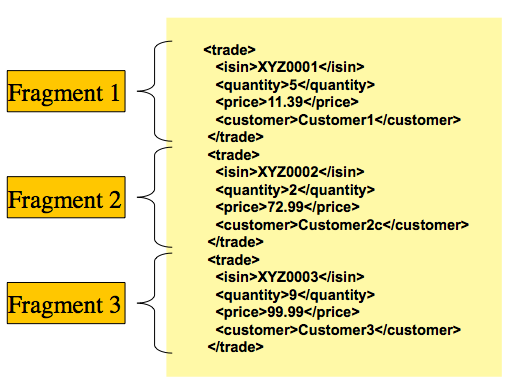

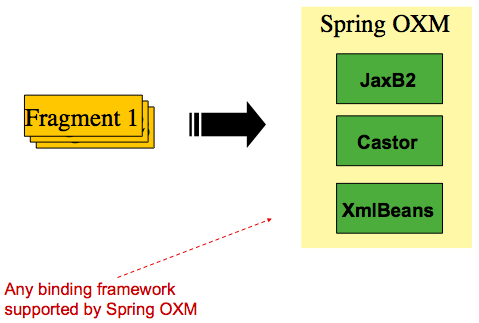

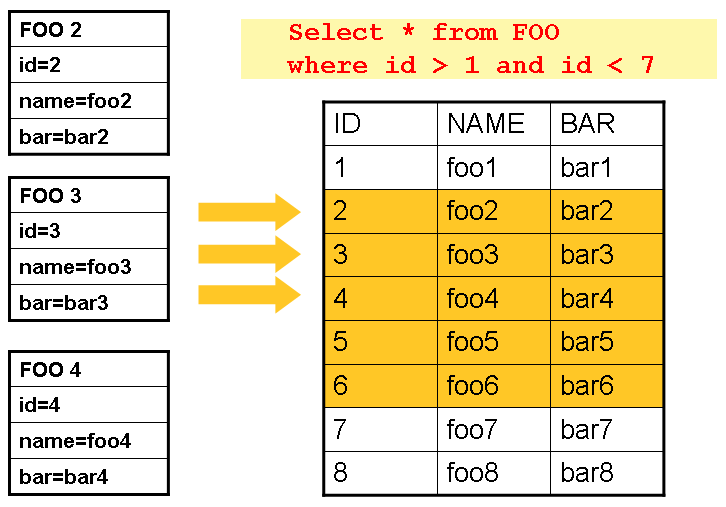

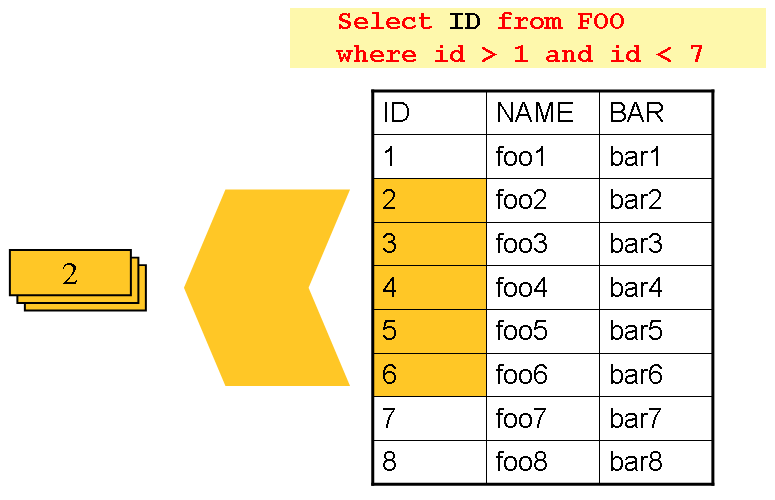

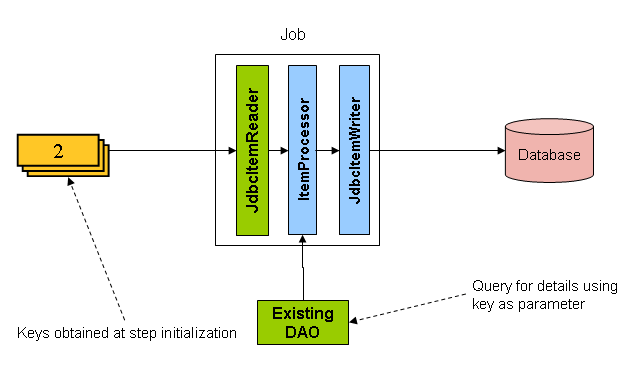

In addition, processes which are partitioned must be designed to only process their assigned data set. A partitioning architecture has to be closely tied to the database design and the database partitioning strategy. Note that database partitioning does not necessarily mean physical partitioning of the database, although in most cases this is advisable. The following picture illustrates the partitioning approach:

The architecture should be flexible enough to allow dynamic configuration of the number of partitions. Both automatic and user controlled configuration should be considered. Automatic configuration may be based on parameters such as the input file size and the number of input records.

4.1 Partitioning Approaches

Selecting a partitioning approach has to be done on a case-by-case basis. The following list describes some of the possible partitioning approaches:

1. Fixed and Even Break-Up of Record Set

This involves breaking the input record set into a fixed number of equal portions (for example, 10, where each portion has exactly 1/10th of the entire record set). Each portion is then processed by one instance of the batch/extract application.

In order to use this approach, preprocessing is required to split the record set up. The result of this split will be a lower and upper bound placement number which can be used as input to the batch/extract application in order to restrict its processing to only its portion.

Preprocessing could be a large overhead, as it has to calculate and determine the bounds of each portion of the record set.
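The bound calculation performed by the preprocessing step can be sketched as follows, assuming records are addressed by 1-based position. When the total does not divide evenly (an assumption beyond the exact 1/10th example above), the first portions each receive one extra record. The class name is hypothetical.

```java
/** Hypothetical helper computing inclusive [low, high] bounds for a fixed, even break-up. */
final class FixedBreakUp {
    private FixedBreakUp() {}

    static long[][] bounds(long total, int partitions) {
        if (partitions <= 0) throw new IllegalArgumentException("partitions must be positive");
        long[][] result = new long[partitions][2];
        long base = total / partitions;      // records every portion gets
        long remainder = total % partitions; // first `remainder` portions get one more
        long next = 1;                       // 1-based position of the next unassigned record
        for (int p = 0; p < partitions; p++) {
            long size = base + (p < remainder ? 1 : 0);
            result[p][0] = next;             // lower bound
            result[p][1] = next + size - 1;  // upper bound (inclusive)
            next += size;
        }
        return result;
    }
}
```

Each instance of the batch/extract application would then receive one [low, high] pair as input and restrict its processing to that portion.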

2. Break up by a Key Column

This involves breaking up the input record set by a key column, such as a location code, and assigning data from each key to a batch instance. In order to achieve this, column values can be either:

- Assigned to a batch instance by a partitioning table (described later in this section).
- Assigned to a batch instance by a portion of the value (such as 0000-0999, 1000-1999, and so on).

Under option 1, adding new values means a manual reconfiguration of the batch/extract to ensure that the new value is added to a particular instance.

Under option 2, this ensures that all values are covered via an instance of the batch job. However, the number of values processed by one instance is dependent on the distribution of column values (there may be a large number of locations in the 0000-0999 range, and few in the 1000-1999 range). Under this option, the data range should be designed with partitioning in mind.

Under both options, the optimal even distribution of records to batch instances cannot be realized. There is no dynamic configuration of the number of batch instances used.
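Option 2 (assignment by a portion of the value) can be sketched as a lookup over inclusive key ranges. The ranges and class names are illustrative; in an architecture with a partition table, the ranges would be read from that table rather than hard-coded. A key outside every range returns -1, which makes uncovered values visible instead of silently dropping them.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical key-range assignment of key values to batch instances. */
final class KeyRangeAssigner {
    static final class Range {
        final int instance;
        final int low;  // inclusive
        final int high; // inclusive
        Range(int instance, int low, int high) {
            this.instance = instance;
            this.low = low;
            this.high = high;
        }
    }

    private final List<Range> ranges;

    KeyRangeAssigner(List<Range> ranges) {
        this.ranges = new ArrayList<>(ranges);
    }

    /** Returns the instance that owns the key, or -1 if no range covers it. */
    int instanceFor(int key) {
        for (Range r : ranges) {
            if (key >= r.low && key <= r.high) return r.instance;
        }
        return -1;
    }
}
```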

3. Breakup by Views

This approach is basically breakup by a key column but on the database level. It involves breaking up the record set into views. These views are used by each instance of the batch application during its processing. The breakup is done by grouping the data.

With this option, each instance of a batch application has to be configured to hit a particular view (instead of the master table). Also, with the addition of new data values, this new group of data has to be included into a view. There is no dynamic configuration capability, as a change in the number of instances results in a change to the views.

4. Addition of a Processing Indicator

This involves the addition of a new column to the input table, which acts as an indicator. As a preprocessing step, all indicators are marked as being non-processed. During the record fetch stage of the batch application, records are read on the condition that they are marked as being non-processed, and once they are read (with lock), they are marked as being in processing. When a record is completed, its indicator is updated to either complete or error. Many instances of a batch application can be started without a change, as the additional column ensures that a record is only processed once.

With this option, I/O on the table increases dynamically. In the case of an updating batch application, this impact is reduced, as a write must occur anyway.
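The processing-indicator approach can be sketched in memory as follows. The status values mirror the ones described above (non-processed, in processing, complete, error); the enum and class names are hypothetical. The synchronized claimNext method plays the role of the locked read-and-mark step, which is what guarantees that two instances never claim the same record.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

/** Hypothetical in-memory stand-in for an input table with a processing-indicator column. */
final class IndicatorTable {
    enum Status { NEW, IN_PROCESS, COMPLETE, ERROR }

    private final Map<Integer, Status> status = new LinkedHashMap<>();

    /** Preprocessing: every record starts as non-processed. */
    synchronized void add(int id) {
        status.put(id, Status.NEW);
    }

    /** Read-with-lock and mark as in processing, so each record is claimed only once. */
    synchronized Optional<Integer> claimNext() {
        for (Map.Entry<Integer, Status> e : status.entrySet()) {
            if (e.getValue() == Status.NEW) {
                e.setValue(Status.IN_PROCESS);
                return Optional.of(e.getKey());
            }
        }
        return Optional.empty();
    }

    synchronized void finish(int id, boolean ok) {
        status.put(id, ok ? Status.COMPLETE : Status.ERROR);
    }

    synchronized Status statusOf(int id) {
        return status.get(id);
    }
}
```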

5. Extract Table to a Flat File

This involves the extraction of the table into a file. This file can then be split into multiple segments and used as input to the batch instances.

With this option, the additional overhead of extracting the table into a file and splitting it may cancel out the effect of multi-partitioning. Dynamic configuration can be achieved by changing the file splitting script.

6. Use of a Hashing Column

This scheme involves the addition of a hash column (key/index) to the database tables used to retrieve the driver record. This hash column has an indicator to determine which instance of the batch application processes this particular row. For example, if there are three batch instances to be started, then an indicator of 'A' marks a row for processing by instance 1, an indicator of 'B' marks a row for processing by instance 2, and an indicator of 'C' marks a row for processing by instance 3.

The procedure used to retrieve the records would then have an additional WHERE clause to select all rows marked by a particular indicator. The inserts in this table would involve the addition of the marker field, which would be defaulted to one of the instances (such as 'A').

A simple batch application would be used to update the indicators, such as to redistribute the load between the different instances. When a sufficiently large number of new rows have been added, this batch can be run (anytime, except in the batch window) to redistribute the new rows to other instances.

Adding instances of the batch application requires only running the redistribution batch application described in the preceding paragraph, so that the indicators are redistributed across the new number of instances.
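One way to derive the indicator, assuming it is computed from a hash of the row's key rather than assigned by hand, is to take the hash modulo the number of instances and map 0 to 'A', 1 to 'B', and so on. This is a hypothetical helper, not part of any framework; rerunning it with a new instance count is the redistribution step described above.

```java
/** Hypothetical helper deriving a hashing-column indicator ('A' = instance 1, 'B' = instance 2, ...). */
final class HashIndicator {
    private HashIndicator() {}

    static char indicatorFor(String key, int instances) {
        if (instances < 1 || instances > 26) {
            throw new IllegalArgumentException("between 1 and 26 instances are supported");
        }
        // floorMod keeps the bucket non-negative even when hashCode() is negative.
        int bucket = Math.floorMod(key.hashCode(), instances);
        return (char) ('A' + bucket);
    }
}
```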

4.2 Database and Application Design Principles

An architecture that supports multi-partitioned applications which run against partitioned database tables using the key column approach should include a central partition repository for storing partition parameters. This provides flexibility and ensures maintainability. The repository generally consists of a single table, known as the partition table.

Information stored in the partition table is static and, in general, should be maintained by the DBA. The table should consist of one row of information for each partition of a multi-partitioned application. The table should have columns for Program ID Code, Partition Number (logical ID of the partition), Low Value of the db key column for this partition, and High Value of the db key column for this partition.

On program start-up, the program id and partition number should be passed to the application from the architecture (specifically, from the Control Processing Tasklet). If a key column approach is used, these variables are used to read the partition table in order to determine what range of data the application is to process. In addition, the partition number must be used throughout the processing to:

- Add to the output files/database updates in order for the merge process to work properly.
- Report normal processing to the batch log and any errors to the architecture error handler.

4.3 Minimizing Deadlocks

When applications run in parallel or are partitioned, contention in database resources and deadlocks may occur. It is critical that the database design team eliminates potential contention situations as much as possible as part of the database design.

Also, the developers must ensure that the database index tables are designed with deadlock prevention and performance in mind.

Deadlocks or hot spots often occur in administration or architecture tables, such as log tables, control tables, and lock tables. The implications of these should be taken into account as well. A realistic stress test is crucial for identifying the possible bottlenecks in the architecture.

To minimize the impact of conflicts on data, the architecture should provide services such as wait-and-retry intervals when attaching to a database or when encountering a deadlock. This means a built-in mechanism to react to certain database return codes and, instead of issuing an immediate error, waiting a predetermined amount of time and retrying the database operation.
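A wait-and-retry service of the kind described above can be sketched with JDBC's SQLException, whose getSQLState() method exposes the SQLSTATE code returned by the database. Which codes should be retried is database-specific; the sketch takes them as a parameter, and the test uses 40001 (serialization failure) only as an example. The class name and the fixed wait interval are assumptions.

```java
import java.sql.SQLException;
import java.util.Set;
import java.util.concurrent.Callable;

/** Hypothetical wait-and-retry wrapper for database operations. */
final class WaitAndRetry {
    private WaitAndRetry() {}

    static <T> T call(Callable<T> operation, Set<String> retryableStates,
                      int maxAttempts, long waitMillis) throws Exception {
        for (int attempt = 1; ; attempt++) {
            try {
                return operation.call();
            } catch (SQLException e) {
                boolean retryable = retryableStates.contains(e.getSQLState());
                if (!retryable || attempt >= maxAttempts) {
                    throw e; // not a lock/deadlock code, or out of attempts: report the error
                }
                Thread.sleep(waitMillis); // wait a predetermined time, then retry
            }
        }
    }
}
```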

4.4 Parameter Passing and Validation

The partition architecture should be relatively transparent to application developers. The architecture should perform all tasks associated with running the application in a partitioned mode, including:

- Retrieving partition parameters before application start-up.
- Validating partition parameters before application start-up.
- Passing parameters to the application at start-up.

The validation should include checks to ensure that:

- The application has sufficient partitions to cover the whole data range.
- There are no gaps between partitions.

If the database is partitioned, some additional validation may be necessary to ensure that a single partition does not span database partitions.
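The coverage and gap checks listed above can be sketched over the rows of the partition table (partition number, low value, high value). The class is hypothetical, and the overlap check is an addition beyond the two checks named in the text. Errors are collected rather than thrown so that all problems in the partition table are reported at once.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Hypothetical validator for partition-table rows. */
final class PartitionValidator {
    static final class Partition {
        final int number;
        final long low;  // low value of the key column (inclusive)
        final long high; // high value of the key column (inclusive)
        Partition(int number, long low, long high) {
            this.number = number;
            this.low = low;
            this.high = high;
        }
    }

    private PartitionValidator() {}

    static List<String> validate(List<Partition> partitions, long rangeLow, long rangeHigh) {
        List<String> errors = new ArrayList<>();
        if (partitions.isEmpty()) {
            errors.add("no partitions defined");
            return errors;
        }
        List<Partition> sorted = new ArrayList<>(partitions);
        sorted.sort(Comparator.comparingLong((Partition p) -> p.low));

        Partition first = sorted.get(0);
        if (first.low > rangeLow) errors.add("gap before partition " + first.number);
        long coveredTo = first.high; // highest key covered so far
        for (Partition cur : sorted.subList(1, sorted.size())) {
            if (cur.low > coveredTo + 1) {
                errors.add("gap before partition " + cur.number);
            } else if (cur.low <= coveredTo) {
                errors.add("overlap at partition " + cur.number);
            }
            coveredTo = Math.max(coveredTo, cur.high);
        }
        if (coveredTo < rangeHigh) errors.add("gap after the last partition");
        return errors;
    }
}
```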

Also, the architecture should take into consideration the consolidation of partitions. Key questions include:

- Must all the partitions be finished before going into the next job step?
- What happens if one of the partitions aborts?

2. What’s New in Spring Batch 4.3

This release comes with a number of new features, performance improvements, dependency updates and API deprecations. This section describes the most important changes. For a complete list of changes, please refer to the release notes.

2.1. New features

2.1.1. New synchronized ItemStreamWriter

Similar to the SynchronizedItemStreamReader, this release introduces a SynchronizedItemStreamWriter. This feature is useful in multi-threaded steps where concurrent threads need to be synchronized so that they do not overwrite each other’s writes.
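The idea behind a synchronized writer, serializing calls to a delegate that is not itself thread-safe, can be illustrated with a small JDK-only decorator. The ChunkWriter interface and SynchronizedWriter class here are hypothetical stand-ins, far simpler than Spring Batch's ItemStreamWriter and its open/update/close lifecycle; they show the locking pattern only.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical, simplified writer contract (not Spring Batch's ItemWriter). */
interface ChunkWriter<T> {
    void write(List<? extends T> items);
}

/** Decorator that lets only one thread at a time reach the delegate. */
final class SynchronizedWriter<T> implements ChunkWriter<T> {
    private final ChunkWriter<T> delegate;
    private final Object lock = new Object();

    SynchronizedWriter(ChunkWriter<T> delegate) {
        this.delegate = delegate;
    }

    @Override
    public void write(List<? extends T> items) {
        synchronized (lock) { // serializes concurrent chunk writes
            delegate.write(items);
        }
    }
}
```

Wrapping an ArrayList-backed writer, which is not thread-safe on its own, is enough to see the effect: with the decorator, no writes are lost when several threads write concurrently.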

2.1.2. New JpaQueryProvider for named queries

This release introduces a new JpaNamedQueryProvider next to the JpaNativeQueryProvider to ease the configuration of JPA named queries when using the JpaPagingItemReader:

```java
JpaPagingItemReader<Foo> reader = new JpaPagingItemReaderBuilder<Foo>()
        .name("fooReader")
        .queryProvider(new JpaNamedQueryProvider("allFoos", Foo.class))
        // set other properties on the reader
        .build();
```

2.1.3. New JpaCursorItemReader Implementation

JPA 2.2 added the ability to stream results as a cursor instead of only paging. This release introduces a new JPA item reader that uses this feature to stream results in a cursor-based fashion similar to the JdbcCursorItemReader and HibernateCursorItemReader.

2.1.4. New JobParametersIncrementer implementation

Similar to the RunIdIncrementer, this release adds a new JobParametersIncrementer that is based on a DataFieldMaxValueIncrementer from Spring Framework.
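The principle of such an incrementer can be sketched without Spring: each launch asks an external sequence for its next value and stores it under a fixed key in the job parameters. In this sketch a LongSupplier stands in for the sequence (Spring's DataFieldMaxValueIncrementer exposes nextLongValue() for this purpose), a plain map stands in for JobParameters, and the key run.id mirrors the one used by RunIdIncrementer. The class name is hypothetical.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.LongSupplier;

/** Hypothetical sequence-backed incrementer; a map stands in for JobParameters. */
final class SequenceIncrementer {
    private final LongSupplier sequence; // stands in for a database sequence
    private final String key;

    SequenceIncrementer(LongSupplier sequence, String key) {
        this.sequence = sequence;
        this.key = key;
    }

    /** Copies the previous parameters and sets the key to the next sequence value. */
    Map<String, Object> getNext(Map<String, Object> previous) {
        Map<String, Object> next = previous == null ? new HashMap<>() : new HashMap<>(previous);
        next.put(key, sequence.getAsLong()); // each launch gets a fresh, unique value
        return next;
    }
}
```

Because the value comes from a shared sequence rather than from the previous run's parameters, two launchers cannot derive the same value even if they start from the same previous parameters.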

2.1.5. GraalVM Support

This release adds initial support to run Spring Batch applications on GraalVM. The support is still experimental and will be improved in future releases.

2.1.6. Java records Support

This release adds support to use Java records as items in chunk-oriented steps.

The newly added RecordFieldSetMapper supports data mapping from flat files to

Java records, as shown in the following example:

```java
@Bean
public FlatFileItemReader<Person> itemReader() {
    return new FlatFileItemReaderBuilder<Person>()
            .name("personReader")
            .resource(new FileSystemResource("persons.csv"))
            .delimited()
            .names("id", "name")
            .fieldSetMapper(new RecordFieldSetMapper<>(Person.class))
            .build();
}
```

In this example, the Person type is a Java record defined as follows:

```java
public record Person(int id, String name) { }
```

The FlatFileItemReader uses the new RecordFieldSetMapper to map data from the persons.csv file to records of type Person.

2.2. Performance improvements

2.2.1. Use bulk writes in RepositoryItemWriter

Up to version 4.2, in order to use CrudRepository#saveAll in RepositoryItemWriter, it was required to extend the writer and override write(List). In this release, the RepositoryItemWriter has been updated to use CrudRepository#saveAll by default.

2.2.2. Use bulk writes in MongoItemWriter

The MongoItemWriter used MongoOperations#save() in a for loop to save items to the database. In this release, this writer has been updated to use org.springframework.data.mongodb.core.BulkOperations instead.

2.2.3. Job start/restart time improvement

The implementation of JobRepository#getStepExecutionCount() used to load all job executions and step executions in memory to do the count on the framework side. In this release, the implementation has been changed to do a single call to the database with a SQL count query in order to count step executions.

2.3. Dependency updates

This release updates dependent Spring projects to the following versions:

-

Spring Framework 5.3

-

Spring Data 2020.0

-

Spring Integration 5.4

-

Spring AMQP 2.3

-

Spring for Apache Kafka 2.6

-

Micrometer 1.5

2.4. Deprecations

2.4.1. API deprecation

The following is a list of APIs that have been deprecated in this release:

-

org.springframework.batch.core.repository.support.MapJobRepositoryFactoryBean

-

org.springframework.batch.core.explore.support.MapJobExplorerFactoryBean

-

org.springframework.batch.core.repository.dao.MapJobInstanceDao

-

org.springframework.batch.core.repository.dao.MapJobExecutionDao

-

org.springframework.batch.core.repository.dao.MapStepExecutionDao

-

org.springframework.batch.core.repository.dao.MapExecutionContextDao

-

org.springframework.batch.item.data.AbstractNeo4jItemReader

-

org.springframework.batch.item.file.transform.Alignment

-

org.springframework.batch.item.xml.StaxUtils

-

org.springframework.batch.core.launch.support.ScheduledJobParametersFactory

-

org.springframework.batch.item.file.MultiResourceItemReader#getCurrentResource()

-

org.springframework.batch.core.JobExecution#stop()

Suggested replacements can be found in the Javadoc of each deprecated API.

2.4.2. SQLFire support deprecation

SQLFire has been end-of-life (EOL) since November 1st, 2014. This release deprecates support for using SQLFire as a job repository and schedules it for removal in version 5.0.

3. The Domain Language of Batch

To any experienced batch architect, the overall concepts of batch processing used in Spring Batch should be familiar and comfortable. There are "Jobs" and "Steps" and developer-supplied processing units called ItemReader and ItemWriter. However, because of the Spring patterns, operations, templates, callbacks, and idioms, there are opportunities for the following:

-

Significant improvement in adherence to a clear separation of concerns.

-

Clearly delineated architectural layers and services provided as interfaces.

-

Simple and default implementations that allow for quick adoption and ease of use out-of-the-box.

-

Significantly enhanced extensibility.

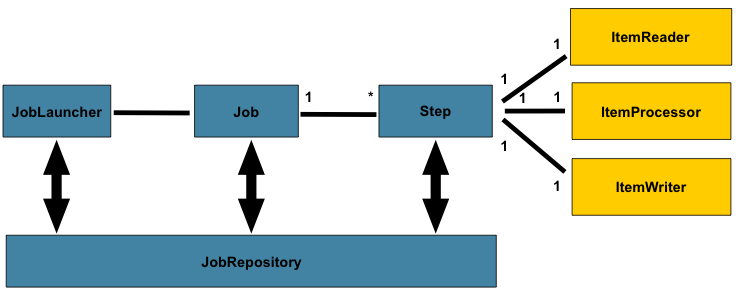

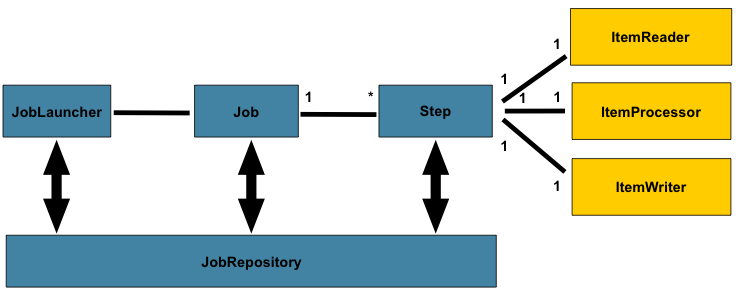

The following diagram is a simplified version of the batch reference architecture that has been used for decades. It provides an overview of the components that make up the domain language of batch processing. This architecture framework is a blueprint that has been proven through decades of implementations on the last several generations of platforms (COBOL/Mainframe, C/Unix, and now Java/anywhere). JCL and COBOL developers are likely to be as comfortable with the concepts as C, C#, and Java developers. Spring Batch provides a physical implementation of the layers, components, and technical services commonly found in the robust, maintainable systems that are used to address the creation of simple to complex batch applications, with the infrastructure and extensions to address very complex processing needs.

The preceding diagram highlights the key concepts that make up the domain language of Spring Batch. A Job has one to many steps, each of which has exactly one ItemReader, one ItemProcessor, and one ItemWriter. A job needs to be launched (with JobLauncher), and metadata about the currently running process needs to be stored (in JobRepository).

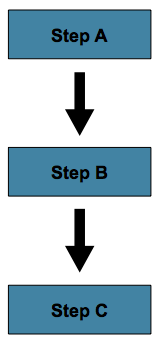

3.1. Job

This section describes stereotypes relating to the concept of a batch job. A Job is an

entity that encapsulates an entire batch process. As is common with other Spring

projects, a Job is wired together with either an XML configuration file or Java-based

configuration. This configuration may be referred to as the "job configuration". However,

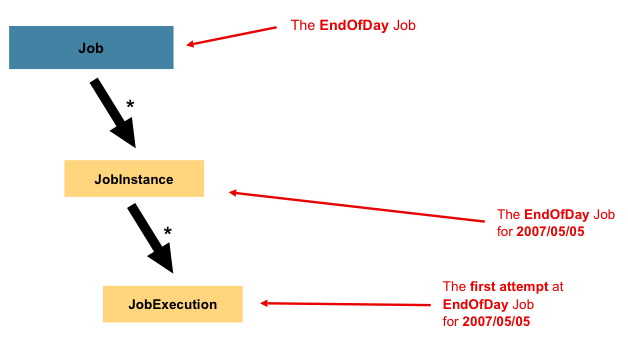

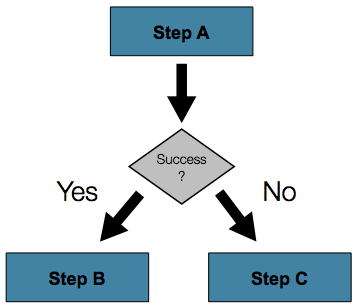

Job is just the top of an overall hierarchy, as shown in the following diagram:

In Spring Batch, a Job is simply a container for Step instances. It combines multiple steps that belong logically together in a flow and allows for configuration of properties global to all steps, such as restartability. The job configuration contains:

-

The simple name of the job.

-

Definition and ordering of Step instances.

-

Whether or not the job is restartable.

For those who use Java configuration, Spring Batch provides a default implementation of the Job interface in the form of the SimpleJob class, which creates some standard functionality on top of Job. When using Java-based configuration, a collection of builders is made available for the instantiation of a Job, as shown in the following example:

```java
@Bean
public Job footballJob() {
    return this.jobBuilderFactory.get("footballJob")
                     .start(playerLoad())
                     .next(gameLoad())
                     .next(playerSummarization())
                     .build();
}
```

For those who use XML configuration, Spring Batch provides a default implementation of the Job interface in the form of the SimpleJob class, which creates some standard functionality on top of Job. However, the batch namespace abstracts away the need to instantiate it directly. Instead, the <job> element can be used, as shown in the following example:

<job id="footballJob">

<step id="playerload" next="gameLoad"/>

<step id="gameLoad" next="playerSummarization"/>

<step id="playerSummarization"/>

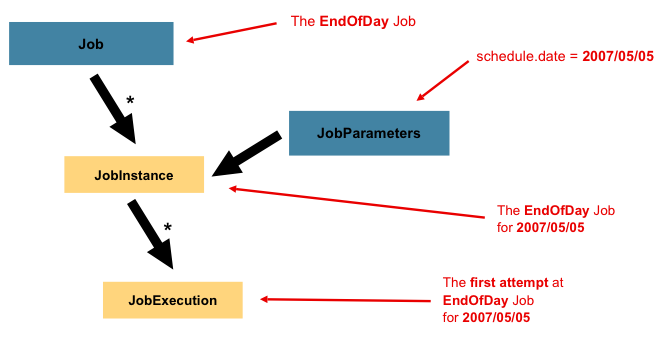

</job>3.1.1. JobInstance

A JobInstance refers to the concept of a logical job run. Consider a batch job that should be run once at the end of the day, such as the 'EndOfDay' Job from the preceding diagram. There is one 'EndOfDay' job, but each individual run of the Job must be tracked separately. In the case of this job, there is one logical JobInstance per day. For example, there is a January 1st run, a January 2nd run, and so on. If the January 1st run fails the first time and is run again the next day, it is still the January 1st run. (Usually, this corresponds with the data it is processing as well, meaning the January 1st run processes data for January 1st.) Therefore, each JobInstance can have multiple executions (JobExecution is discussed in more detail later in this chapter), and only one JobInstance corresponding to a particular Job and identifying JobParameters can run at a given time.

The definition of a JobInstance has absolutely no bearing on the data to be loaded. It is entirely up to the ItemReader implementation to determine how data is loaded. For example, in the EndOfDay scenario, there may be a column on the data that indicates the 'effective date' or 'schedule date' to which the data belongs. So, the January 1st run would load only data from the 1st, and the January 2nd run would use only data from the 2nd. Because this determination is likely to be a business decision, it is left up to the ItemReader to decide. However, using the same JobInstance determines whether or not the 'state' (that is, the ExecutionContext, which is discussed later in this chapter) from previous executions is used. Using a new JobInstance means 'start from the beginning', and using an existing instance generally means 'start from where you left off'.

3.1.2. JobParameters

Having discussed JobInstance and how it differs from Job, the natural question to ask is: "How is one JobInstance distinguished from another?" The answer is: JobParameters. A JobParameters object holds a set of parameters used to start a batch job. They can be used for identification or even as reference data during the run, as shown in the following image:

In the preceding example, where there are two instances, one for January 1st and another for January 2nd, there is really only one Job, but it has two JobParameter objects: one that was started with a job parameter of 01-01-2017 and another that was started with a parameter of 01-02-2017. Thus, the contract can be defined as: JobInstance = Job + identifying JobParameters. This allows a developer to effectively control how a JobInstance is defined, since they control what parameters are passed in.

Not all job parameters are required to contribute to the identification of a JobInstance. By default, they do so. However, the framework also allows the submission of a Job with parameters that do not contribute to the identity of a JobInstance.
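A small toy model can illustrate the contract JobInstance = Job + identifying JobParameters. Param and InstanceKey are hypothetical classes, not Spring Batch types, although Spring Batch's own JobParameter carries a similar identifying flag. The key keeps only the identifying parameters. Adding a non-identifying parameter therefore leaves the instance unchanged, while changing the schedule date produces a new one.

```java
import java.util.Map;
import java.util.Objects;
import java.util.TreeMap;

// Hypothetical parameter holder: a value plus whether it contributes to identity.
final class Param {
    final Object value;
    final boolean identifying;

    Param(Object value, boolean identifying) {
        this.value = value;
        this.identifying = identifying;
    }
}

// Hypothetical identity of a job instance: job name + identifying parameters only.
final class InstanceKey {
    private final String jobName;
    private final Map<String, Object> identifyingParams;

    private InstanceKey(String jobName, Map<String, Object> identifyingParams) {
        this.jobName = jobName;
        this.identifyingParams = identifyingParams;
    }

    static InstanceKey of(String jobName, Map<String, Param> params) {
        Map<String, Object> identifying = new TreeMap<>();
        for (Map.Entry<String, Param> entry : params.entrySet()) {
            if (entry.getValue().identifying) {
                identifying.put(entry.getKey(), entry.getValue().value);
            }
        }
        return new InstanceKey(jobName, identifying);
    }

    @Override
    public boolean equals(Object other) {
        if (this == other) {
            return true;
        }
        if (!(other instanceof InstanceKey)) {
            return false;
        }
        InstanceKey that = (InstanceKey) other;
        return jobName.equals(that.jobName) && identifyingParams.equals(that.identifyingParams);
    }

    @Override
    public int hashCode() {
        return Objects.hash(jobName, identifyingParams);
    }
}
```

In this model, re-running EndOfDayJob for 2017-01-01 with an extra non-identifying parameter yields the same key. A run for 2017-01-02 yields a different key.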


3.1.3. JobExecution

A JobExecution refers to the technical concept of a single attempt to run a Job. An execution may end in failure or success, but the JobInstance corresponding to a given execution is not considered to be complete unless the execution completes successfully. Using the EndOfDay Job described previously as an example, consider a JobInstance for 01-01-2017 that failed the first time it was run. If it is run again with the same identifying job parameters as the first run (01-01-2017), a new JobExecution is created. However, there is still only one JobInstance.

A Job defines what a job is and how it is to be executed, and a JobInstance is a purely organizational object to group executions together, primarily to enable correct restart semantics. A JobExecution, however, is the primary storage mechanism for what actually happened during a run and contains many more properties that must be controlled and persisted, as shown in the following table:

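| Property | Definition |
|---|---|
| Status | A BatchStatus object that indicates the status of the execution. While running, it is BatchStatus#STARTED. If it fails, it is BatchStatus#FAILED. If it finishes successfully, it is BatchStatus#COMPLETED. |
| startTime | A java.util.Date representing the current system time when the execution was started. This field is empty if the job has yet to start. |
| endTime | A java.util.Date representing the current system time when the execution finished, regardless of whether or not it was successful. This field is empty if the job has yet to finish. |
| exitStatus | The ExitStatus, indicating the result of the run. It contains an exit code that is returned to the caller. This field is empty if the job has yet to finish. |
| createTime | A java.util.Date representing the current system time when the JobExecution was first persisted. The job may not have been started yet (and thus has no start time), but it always has a createTime. |
| lastUpdated | A java.util.Date representing the last time a JobExecution was persisted. This field is empty if the job has yet to start. |
| executionContext | The "property bag" containing any user data that needs to be persisted between executions. |
| failureExceptions | The list of exceptions encountered during the execution of a Job. |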

These properties are important because they are persisted and can be used to completely determine the status of an execution. For example, if the EndOfDay job for 01-01 is executed at 9:00 PM and fails at 9:30, the following entries are made in the batch metadata tables:

BATCH_JOB_INSTANCE

| JOB_INST_ID | JOB_NAME |
|---|---|
| 1 | EndOfDayJob |

BATCH_JOB_EXECUTION_PARAMS

| JOB_EXECUTION_ID | TYPE_CD | KEY_NAME | DATE_VAL | IDENTIFYING |
|---|---|---|---|---|
| 1 | DATE | schedule.Date | 2017-01-01 | TRUE |

BATCH_JOB_EXECUTION

| JOB_EXEC_ID | JOB_INST_ID | START_TIME | END_TIME | STATUS |
|---|---|---|---|---|
| 1 | 1 | 2017-01-01 21:00 | 2017-01-01 21:30 | FAILED |

Column names may have been abbreviated or removed for the sake of clarity and formatting.

Now that the job has failed, assume that it took the entire night for the problem to be determined, so that the 'batch window' is now closed. Further assuming that the window starts at 9:00 PM, the job is kicked off again for 01-01, starting where it left off and completing successfully at 9:30. Because it is now the next day, the 01-02 job must be run as well, and it is kicked off just afterwards at 9:31 and completes in its normal one-hour time at 10:30. There is no requirement that one JobInstance be kicked off after another, unless there is potential for the two jobs to attempt to access the same data, causing issues with locking at the database level. It is entirely up to the scheduler to determine when a Job should be run. Since they are separate JobInstances, Spring Batch makes no attempt to stop them from being run concurrently. (Attempting to run the same JobInstance while another is already running results in a JobExecutionAlreadyRunningException being thrown.) There should now be one extra entry in the JobInstance table and two extra entries in each of the JobParameters and JobExecution tables, as shown in the following tables:

BATCH_JOB_INSTANCE

| JOB_INST_ID | JOB_NAME |
|---|---|
| 1 | EndOfDayJob |
| 2 | EndOfDayJob |

BATCH_JOB_EXECUTION_PARAMS

| JOB_EXECUTION_ID | TYPE_CD | KEY_NAME | DATE_VAL | IDENTIFYING |
|---|---|---|---|---|
| 1 | DATE | schedule.Date | 2017-01-01 00:00:00 | TRUE |
| 2 | DATE | schedule.Date | 2017-01-01 00:00:00 | TRUE |
| 3 | DATE | schedule.Date | 2017-01-02 00:00:00 | TRUE |

BATCH_JOB_EXECUTION

| JOB_EXEC_ID | JOB_INST_ID | START_TIME | END_TIME | STATUS |
|---|---|---|---|---|
| 1 | 1 | 2017-01-01 21:00 | 2017-01-01 21:30 | FAILED |
| 2 | 1 | 2017-01-02 21:00 | 2017-01-02 21:30 | COMPLETED |
| 3 | 2 | 2017-01-02 21:31 | 2017-01-02 22:29 | COMPLETED |

Column names may have been abbreviated or removed for the sake of clarity and formatting.
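A toy, in-memory model can replay the bookkeeping shown in the tables above. ToyJobRepository, RunStatus, and the string instance keys are hypothetical. The real JobRepository persists JobInstance and JobExecution rows and signals problems with JobExecutionAlreadyRunningException or JobInstanceAlreadyCompleteException; this sketch substitutes IllegalStateException for both. In the model, a restart adds an execution to an existing instance, a new date creates a new instance, and an instance counts as complete only once one of its executions has completed.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

enum RunStatus { STARTED, FAILED, COMPLETED }

// Toy repository: instance key (job name + identifying parameters) -> execution statuses.
class ToyJobRepository {
    private final Map<String, List<RunStatus>> executions = new HashMap<>();

    // Creates a new execution for the instance and returns its index within that instance.
    int start(String instanceKey) {
        List<RunStatus> history = executions.computeIfAbsent(instanceKey, key -> new ArrayList<>());
        if (history.contains(RunStatus.STARTED)) {
            // The real framework reports this case with JobExecutionAlreadyRunningException.
            throw new IllegalStateException("Already running: " + instanceKey);
        }
        if (history.contains(RunStatus.COMPLETED)) {
            // The real framework reports this case with JobInstanceAlreadyCompleteException.
            throw new IllegalStateException("Already complete: " + instanceKey);
        }
        history.add(RunStatus.STARTED);
        return history.size() - 1;
    }

    // Records the outcome of a previously started execution.
    void finish(String instanceKey, int execution, RunStatus outcome) {
        executions.get(instanceKey).set(execution, outcome);
    }

    int instanceCount() {
        return executions.size();
    }

    int executionCount(String instanceKey) {
        return executions.getOrDefault(instanceKey, List.of()).size();
    }

    // An instance is complete only if one of its executions completed successfully.
    boolean isComplete(String instanceKey) {
        return executions.getOrDefault(instanceKey, List.of()).contains(RunStatus.COMPLETED);
    }
}
```

The scenario from the tables can be replayed in three steps: a failed 01-01 run, its successful restart, and then the 01-02 run. Afterward, the model holds two instances, with two executions recorded against the first.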

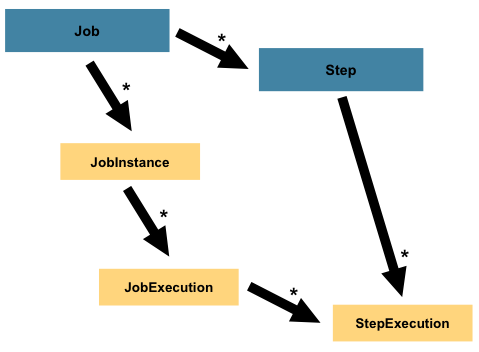

3.2. Step

A Step is a domain object that encapsulates an independent, sequential phase of a batch job. Therefore, every Job is composed entirely of one or more steps. A Step contains all of the information necessary to define and control the actual batch processing. This is a necessarily vague description because the contents of any given Step are at the discretion of the developer writing a Job. A Step can be as simple or complex as the developer desires. A simple Step might load data from a file into the database, requiring little or no code (depending upon the implementations used). A more complex Step may have complicated business rules that are applied as part of the processing. As with a Job, a Step has an individual StepExecution that correlates with a unique JobExecution, as shown in the following image:

3.2.1. StepExecution

A StepExecution represents a single attempt to execute a Step. A new StepExecution is created each time a Step is run, similar to JobExecution. However, if a step fails to execute because the step before it fails, no execution is persisted for it. A StepExecution is created only when its Step is actually started.

Step executions are represented by objects of the StepExecution class. Each execution contains a reference to its corresponding step and JobExecution and transaction-related data, such as commit and rollback counts and start and end times. Additionally, each step execution contains an ExecutionContext, which contains any data a developer needs to have persisted across batch runs, such as statistics or state information needed to restart. The following table lists the properties for StepExecution:

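| Property | Definition |
|---|---|
| Status | A BatchStatus object that indicates the status of the execution. While running, the status is BatchStatus.STARTED. If it fails, the status is BatchStatus.FAILED. If it finishes successfully, the status is BatchStatus.COMPLETED. |
| startTime | A java.util.Date representing the current system time when the execution was started. This field is empty if the step has yet to start. |
| endTime | A java.util.Date representing the current system time when the execution finished, regardless of whether or not it was successful. This field is empty if the step has yet to exit. |
| exitStatus | The ExitStatus indicating the result of the execution. This field is empty if the step has yet to exit. |
| executionContext | The "property bag" containing any user data that needs to be persisted between executions. |
| readCount | The number of items that have been successfully read. |
| writeCount | The number of items that have been successfully written. |
| commitCount | The number of transactions that have been committed for this execution. |
| rollbackCount | The number of times the business transaction controlled by the Step has been rolled back. |
| readSkipCount | The number of times read has failed, resulting in a skipped item. |
| processSkipCount | The number of times process has failed, resulting in a skipped item. |
| filterCount | The number of items that have been 'filtered' by the ItemProcessor. |
| writeSkipCount | The number of times write has failed, resulting in a skipped item. |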

3.3. ExecutionContext

An ExecutionContext represents a collection of key/value pairs that are persisted and controlled by the framework in order to allow developers a place to store persistent state that is scoped to a StepExecution object or a JobExecution object. For those familiar with Quartz, it is very similar to JobDataMap. The best usage example is to facilitate restart. Using flat file input as an example, while processing individual lines, the framework periodically persists the ExecutionContext at commit points. Doing so allows the ItemReader to store its state in case a fatal error occurs during the run or even if the power goes out. All that is needed is to put the current number of lines read into the context, as shown in the following example, and the framework will do the rest:

```java
executionContext.putLong(getKey(LINES_READ_COUNT), reader.getPosition());
```

Using the EndOfDay example from the Job Stereotypes section, assume there is one step, 'loadData', that loads a file into the database. After the first failed run, the metadata tables would look like the following example:

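BATCH_JOB_INSTANCE

| JOB_INST_ID | JOB_NAME |
|---|---|
| 1 | EndOfDayJob |

BATCH_JOB_EXECUTION_PARAMS

| JOB_INST_ID | TYPE_CD | KEY_NAME | DATE_VAL |
|---|---|---|---|
| 1 | DATE | schedule.Date | 2017-01-01 |

BATCH_JOB_EXECUTION

| JOB_EXEC_ID | JOB_INST_ID | START_TIME | END_TIME | STATUS |
|---|---|---|---|---|
| 1 | 1 | 2017-01-01 21:00 | 2017-01-01 21:30 | FAILED |

BATCH_STEP_EXECUTION

| STEP_EXEC_ID | JOB_EXEC_ID | STEP_NAME | START_TIME | END_TIME | STATUS |
|---|---|---|---|---|---|
| 1 | 1 | loadData | 2017-01-01 21:00 | 2017-01-01 21:30 | FAILED |

BATCH_STEP_EXECUTION_CONTEXT

| STEP_EXEC_ID | SHORT_CONTEXT |
|---|---|
| 1 | {piece.count=40321} |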

In the preceding case, the Step ran for 30 minutes and processed 40,321 'pieces', which would represent lines in a file in this scenario. This value is updated just before each commit by the framework and can contain multiple rows corresponding to entries within the ExecutionContext. Being notified before a commit requires one of the various StepListener implementations (or an ItemStream), which are discussed in more detail later in this guide. As with the previous example, it is assumed that the Job is restarted the next day. When it is restarted, the values from the ExecutionContext of the last run are reconstituted from the database. When the ItemReader is opened, it can check to see if it has any stored state in the context and initialize itself from there, as shown in the following example:

```java
if (executionContext.containsKey(getKey(LINES_READ_COUNT))) {
    log.debug("Initializing for restart. Restart data is: " + executionContext);

    long lineCount = executionContext.getLong(getKey(LINES_READ_COUNT));

    LineReader reader = getReader();

    Object record = "";
    while (reader.getPosition() < lineCount && record != null) {
        record = readLine();
    }
}
```

In this case, after the above code runs, the current line is 40,322, allowing the Step to start again from where it left off. The ExecutionContext can also be used for statistics that need to be persisted about the run itself. For example, if a flat file contains orders for processing that exist across multiple lines, it may be necessary to store how many orders have been processed (which is much different from the number of lines read), so that an email can be sent at the end of the Step with the total number of orders processed in the body. The framework handles storing this for the developer, in order to correctly scope it with an individual JobInstance.

It can be very difficult to know whether an existing ExecutionContext should be used or not. For example, using the 'EndOfDay' example from above, when the 01-01 run starts again for the second time, the framework recognizes that it is the same JobInstance and, on an individual Step basis, pulls the ExecutionContext out of the database and hands it (as part of the StepExecution) to the Step itself. Conversely, for the 01-02 run, the framework recognizes that it is a different instance, so an empty context must be handed to the Step. There are many of these types of determinations that the framework makes for the developer, to ensure the state is given to them at the correct time.

It is also important to note that exactly one ExecutionContext exists per StepExecution at any given time. Clients of the ExecutionContext should be careful, because this creates a shared keyspace. As a result, care should be taken when putting values in to ensure no data is overwritten. However, the Step stores absolutely no data in the context, so there is no way to adversely affect the framework.
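The restart pattern from the two snippets above can be condensed into a self-contained toy: save the position before each commit, then skip ahead when the reader is opened. RestartableLineReader is hypothetical, a plain Map stands in for ExecutionContext, and the key name is invented for the sketch. Its open and update methods only mirror the roles of ItemStream#open and ItemStream#update.

```java
import java.util.List;
import java.util.Map;

// Toy restartable reader over in-memory lines; a Map stands in for ExecutionContext.
class RestartableLineReader {
    static final String LINES_READ_COUNT = "reader.lines.read.count"; // hypothetical key

    private final List<String> lines;
    private int position;

    RestartableLineReader(List<String> lines) {
        this.lines = lines;
    }

    // Like ItemStream#open: resume from the saved position if restart data exists.
    void open(Map<String, Object> context) {
        Object saved = context.get(LINES_READ_COUNT);
        position = saved == null ? 0 : ((Long) saved).intValue();
    }

    // Like ItemReader#read: returns null once the input is exhausted.
    String read() {
        return position < lines.size() ? lines.get(position++) : null;
    }

    // Like ItemStream#update: record progress just before a commit.
    void update(Map<String, Object> context) {
        context.put(LINES_READ_COUNT, (long) position);
    }
}
```

If a first run reads three lines and saves its context, a second reader opened with that context starts at the fourth line. Because the position is written only in update(), which runs before each commit, the saved value always corresponds to committed work. Anything read after the last commit is read again on restart.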

It is also important to note that there is at least one ExecutionContext per JobExecution and one for every StepExecution. For example, consider the following code snippet:

```java
ExecutionContext ecStep = stepExecution.getExecutionContext();
ExecutionContext ecJob = jobExecution.getExecutionContext();
//ecStep does not equal ecJob
```

As noted in the comment, ecStep does not equal ecJob. They are two different ExecutionContexts. The one scoped to the Step is saved at every commit point in the Step, whereas the one scoped to the Job is saved in between every Step execution.

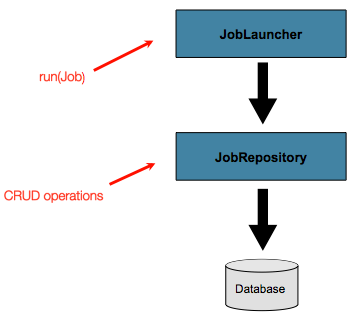

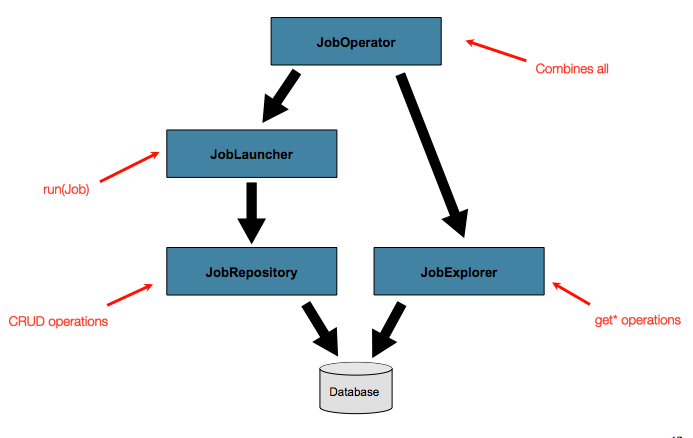

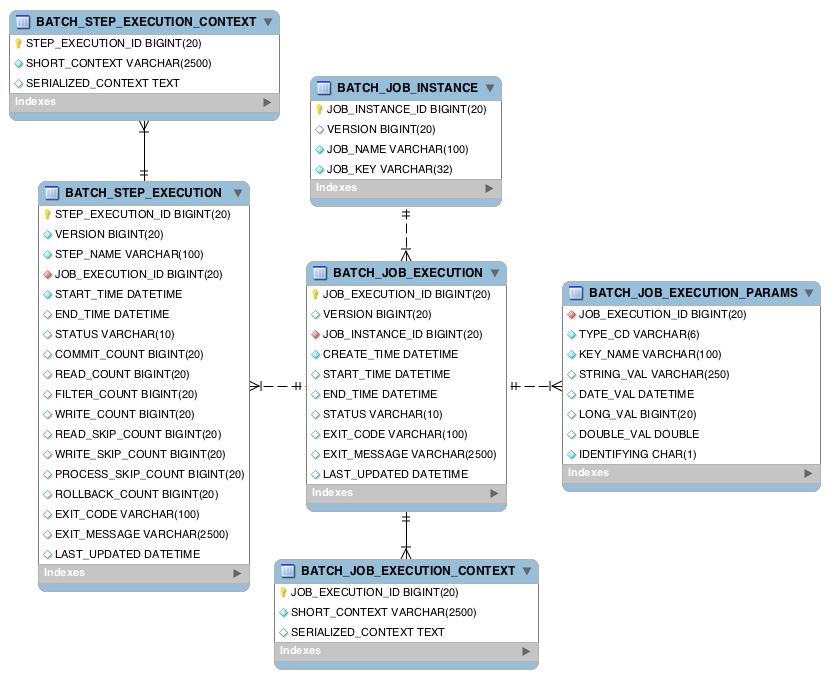

3.4. JobRepository

JobRepository is the persistence mechanism for all of the stereotypes mentioned above. It provides CRUD operations for JobLauncher, Job, and Step implementations. When a Job is first launched, a JobExecution is obtained from the repository, and, during the course of execution, StepExecution and JobExecution implementations are persisted by passing them to the repository.

The Spring Batch XML namespace provides support for configuring a JobRepository instance with the <job-repository> tag, as shown in the following example:

```xml
<job-repository id="jobRepository"/>
```

When using Java configuration, the @EnableBatchProcessing annotation provides a JobRepository as one of the components automatically configured out of the box.

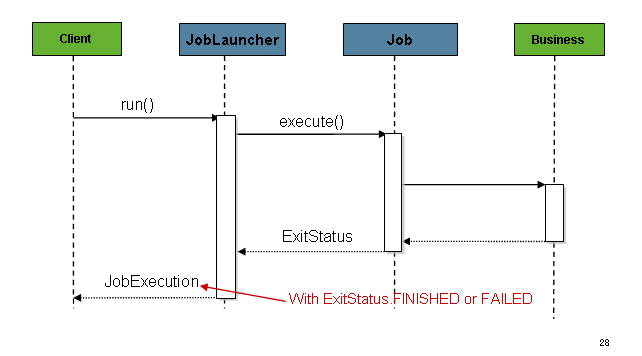

3.5. JobLauncher

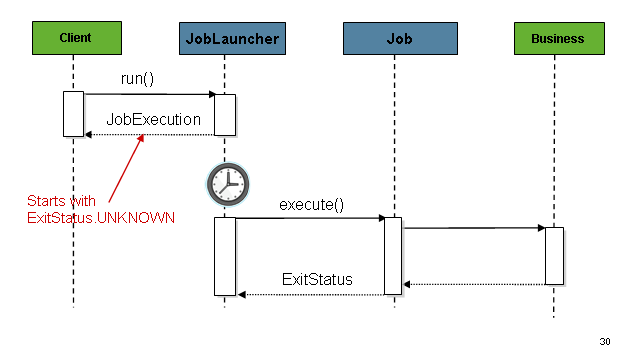

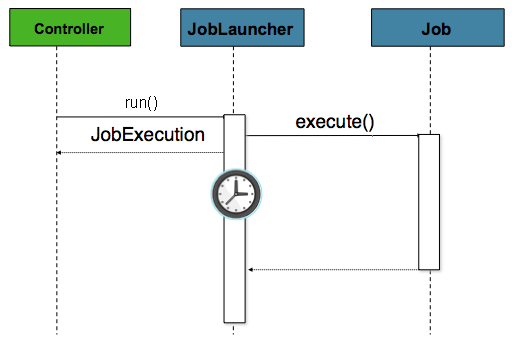

JobLauncher represents a simple interface for launching a Job with a given set of JobParameters, as shown in the following example:

```java
public interface JobLauncher {

    public JobExecution run(Job job, JobParameters jobParameters)
            throws JobExecutionAlreadyRunningException, JobRestartException,
                   JobInstanceAlreadyCompleteException, JobParametersInvalidException;
}
```

It is expected that implementations obtain a valid JobExecution from the JobRepository and execute the Job.

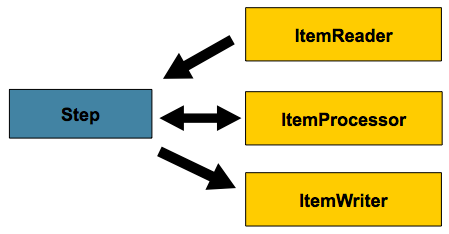

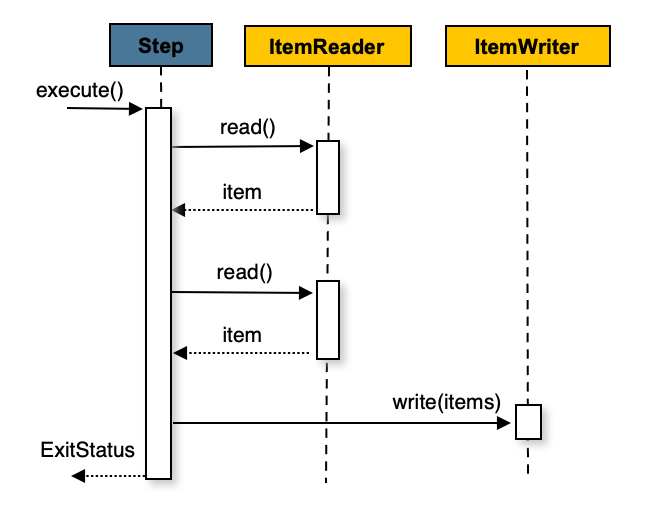

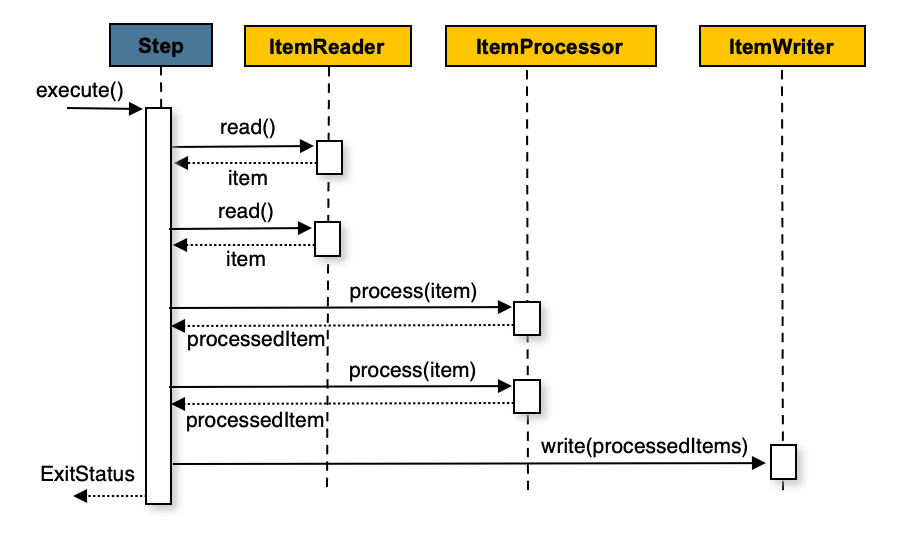

3.6. Item Reader

ItemReader is an abstraction that represents the retrieval of input for a Step, one item at a time. When the ItemReader has exhausted the items it can provide, it indicates this by returning null. More details about the ItemReader interface and its various implementations can be found in Readers And Writers.

3.7. Item Writer

ItemWriter is an abstraction that represents the output of a Step, one batch or chunk of items at a time. Generally, an ItemWriter has no knowledge of the input it should receive next and knows only the items that were passed in its current invocation. More details about the ItemWriter interface and its various implementations can be found in Readers And Writers.

3.8. Item Processor

ItemProcessor is an abstraction that represents the business processing of an item. While the ItemReader reads one item, and the ItemWriter writes them, the ItemProcessor provides an access point to transform or apply other business processing. If, while processing the item, it is determined that the item is not valid, returning null indicates that the item should not be written out. More details about the ItemProcessor interface can be found in Readers And Writers.
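Taken together, the three abstractions form the chunk-oriented loop that the commit-interval attribute in the next section configures. The following sketch is a toy. ChunkLoop is hypothetical, and plain java.util.function types stand in for ItemReader, ItemProcessor, and ItemWriter. The loop reads up to commitInterval items, treating a null from the reader as the end of input. A null from the processor marks a filtered item. The surviving items go to the writer as one chunk. Transactions, retries, and skips are deliberately left out.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Supplier;

// Toy chunk-oriented loop; returns how many items the processor filtered out.
class ChunkLoop {
    static <I, O> int run(Supplier<I> reader, Function<I, O> processor,
                          Consumer<List<O>> writer, int commitInterval) {
        int filtered = 0;
        while (true) {
            // Read phase: stop at commitInterval items or when the reader returns null.
            List<I> inputs = new ArrayList<>();
            I item;
            while (inputs.size() < commitInterval && (item = reader.get()) != null) {
                inputs.add(item);
            }
            if (inputs.isEmpty()) {
                return filtered;
            }
            // Process phase: a null result means "do not write this item".
            List<O> outputs = new ArrayList<>();
            for (I input : inputs) {
                O output = processor.apply(input);
                if (output == null) {
                    filtered++;
                } else {
                    outputs.add(output);
                }
            }
            // Write phase: one call per chunk (this toy skips chunks that were fully filtered);
            // a real step would commit its transaction after this point.
            if (!outputs.isEmpty()) {
                writer.accept(outputs);
            }
        }
    }

    // Adapts a list to the "return null when exhausted" reader contract.
    static <T> Supplier<T> readerOver(List<T> items) {
        Iterator<T> iterator = items.iterator();
        return () -> iterator.hasNext() ? iterator.next() : null;
    }
}
```

Consider the items 1 through 5, a processor that drops even numbers and multiplies the rest by 10, and a commit interval of 2. The writer receives [10], [30], and [50], and two items are counted as filtered. In this model, the commit interval counts items read, not items written.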

3.9. Batch Namespace

Many of the domain concepts listed previously need to be configured in a Spring ApplicationContext. While there are implementations of the interfaces above that can be used in a standard bean definition, a namespace has been provided for ease of configuration, as shown in the following example:

```xml
<beans:beans xmlns="http://www.springframework.org/schema/batch"
     xmlns:beans="http://www.springframework.org/schema/beans"
     xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
     xsi:schemaLocation="
           http://www.springframework.org/schema/beans
           https://www.springframework.org/schema/beans/spring-beans.xsd
           http://www.springframework.org/schema/batch
           https://www.springframework.org/schema/batch/spring-batch.xsd">

<job id="ioSampleJob">
    <step id="step1">
        <tasklet>
            <chunk reader="itemReader" writer="itemWriter" commit-interval="2"/>
        </tasklet>
    </step>
</job>

</beans:beans>
```

As long as the batch namespace has been declared, any of its elements can be used. More information on configuring a Job can be found in Configuring and Running a Job. More information on configuring a Step can be found in Configuring a Step.

4. Configuring and Running a Job

In the domain section, the overall architecture design was discussed, using the following diagram as a guide:

While the Job object may seem like a simple container for steps, there are many configuration options of which a developer must be aware. Furthermore, there are many considerations for how a Job will be run and how its metadata will be stored during that run. This chapter explains the various configuration options and runtime concerns of a Job.

4.1. Configuring a Job

There are multiple implementations of the Job interface. However, builders abstract away the difference in configuration, as shown in the following example:

```java
@Bean
public Job footballJob() {
    return this.jobBuilderFactory.get("footballJob")
                     .start(playerLoad())
                     .next(gameLoad())
                     .next(playerSummarization())
                     .build();
}
```

A Job (and typically any Step within it) requires a JobRepository. The configuration of the JobRepository is handled via the BatchConfigurer.

The preceding example illustrates a Job that consists of three Step instances. The job-related builders can also contain other elements that help with parallelization (Split), declarative flow control (Decision), and externalization of flow definitions (Flow).

Whether you use Java or XML, there are multiple implementations of the Job interface. However, the namespace abstracts away the differences in configuration. It has only three required dependencies: a name, a JobRepository, and a list of Step instances.

```xml
<job id="footballJob">
    <step id="playerload" parent="s1" next="gameLoad"/>
    <step id="gameLoad" parent="s2" next="playerSummarization"/>
    <step id="playerSummarization" parent="s3"/>
</job>
```

The examples here use a parent bean definition to create the steps. See the section on step configuration for more options for declaring specific step details inline. The XML namespace defaults to referencing a repository with an id of 'jobRepository', which is a sensible default. However, this can be overridden explicitly:

```xml
<job id="footballJob" job-repository="specialRepository">
    <step id="playerload" parent="s1" next="gameLoad"/>
    <step id="gameLoad" parent="s2" next="playerSummarization"/>
    <step id="playerSummarization" parent="s3"/>
</job>
```

In addition to steps, a job configuration can contain other elements that help with parallelization (<split>), declarative flow control (<decision>), and externalization of flow definitions (<flow/>).

4.1.1. Restartability

One key issue when executing a batch job concerns the behavior of a Job when it is restarted. The launching of a Job is considered to be a 'restart' if a JobExecution already exists for the particular JobInstance. Ideally, all jobs should be able to start up where they left off, but there are scenarios where this is not possible. It is entirely up to the developer to ensure that a new JobInstance is created in this scenario. However, Spring Batch does provide some help. If a Job should never be restarted but should always be run as part of a new JobInstance, then the restartable property may be set to 'false'.

The following example shows how to set the restartable field to false in XML:

```xml
<job id="footballJob" restartable="false">
    ...
</job>
```

The following example shows how to set the restartable field to false in Java:

```java
@Bean
public Job footballJob() {
    return this.jobBuilderFactory.get("footballJob")
                     .preventRestart()
                     ...
                     .build();
}
```

To phrase it another way, setting restartable to false means “this Job does not support being started again”. Restarting a Job that is not restartable causes a JobRestartException to be thrown.

```java
// setRestartable is declared on AbstractJob (which SimpleJob extends), not on the Job interface
SimpleJob job = new SimpleJob();
job.setRestartable(false);

JobParameters jobParameters = new JobParameters();

JobExecution firstExecution = jobRepository.createJobExecution(job, jobParameters);
jobRepository.saveOrUpdate(firstExecution);

try {
    jobRepository.createJobExecution(job, jobParameters);
    fail();
}
catch (JobRestartException e) {
    // expected
}
```

This snippet of JUnit code shows that creating a JobExecution for a non-restartable job succeeds the first time. However, the second attempt throws a JobRestartException.

4.1.2. Intercepting Job Execution

During the course of the execution of a Job, it may be useful to be notified of various events in its lifecycle so that custom code may be executed. The SimpleJob allows for this by calling a JobExecutionListener at the appropriate time:

```java
public interface JobExecutionListener {

    void beforeJob(JobExecution jobExecution);

    void afterJob(JobExecution jobExecution);
}
```

JobExecutionListener instances can be added to a SimpleJob by setting listeners on the job.

The following example shows how to add a listener element to an XML job definition:

```xml
<job id="footballJob">
    <step id="playerload" parent="s1" next="gameLoad"/>
    <step id="gameLoad" parent="s2" next="playerSummarization"/>
    <step id="playerSummarization" parent="s3"/>
    <listeners>
        <listener ref="sampleListener"/>
    </listeners>
</job>
```

The following example shows how to add a listener method to a Java job definition:

```java
@Bean
public Job footballJob() {
    return this.jobBuilderFactory.get("footballJob")
                     .listener(sampleListener())
                     ...
                     .build();
}
```

It should be noted that the afterJob method is called regardless of the success or failure of the Job. If success or failure needs to be determined, it can be obtained from the JobExecution, as follows:

```java
public void afterJob(JobExecution jobExecution){
    if (jobExecution.getStatus() == BatchStatus.COMPLETED ) {
        //job success
    }
    else if (jobExecution.getStatus() == BatchStatus.FAILED) {
        //job failure
    }
}
```

The annotations corresponding to this interface are:

-

@BeforeJob

-

@AfterJob
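The guarantee that afterJob runs on failure as well as on success amounts to a try/finally around the job body. The following toy sketch illustrates that guarantee. ToyJobListener, ToyJobRunner, RecordingListener, and ToyBatchStatus are hypothetical simplifications in which a job name and a status replace the real JobExecution argument. The sketch does not reproduce SimpleJob's actual implementation.

```java
import java.util.ArrayList;
import java.util.List;

enum ToyBatchStatus { COMPLETED, FAILED }

// Simplified mirror of JobExecutionListener: a name and a status replace JobExecution.
interface ToyJobListener {
    void beforeJob(String jobName);

    void afterJob(String jobName, ToyBatchStatus status);
}

class ToyJobRunner {
    // Runs the job body; afterJob is invoked whether the body succeeds or throws.
    static ToyBatchStatus run(String jobName, Runnable body, ToyJobListener listener) {
        listener.beforeJob(jobName);
        ToyBatchStatus status = ToyBatchStatus.FAILED;
        try {
            body.run();
            status = ToyBatchStatus.COMPLETED;
        } catch (RuntimeException e) {
            // A real job would record e among the execution's failure exceptions.
        } finally {
            listener.afterJob(jobName, status);
        }
        return status;
    }
}

// Listener that just records the callbacks it receives.
class RecordingListener implements ToyJobListener {
    final List<String> events = new ArrayList<>();

    @Override
    public void beforeJob(String jobName) {
        events.add("before:" + jobName);
    }

    @Override
    public void afterJob(String jobName, ToyBatchStatus status) {
        events.add("after:" + jobName + ":" + status);
    }
}
```

Running a body that throws and then one that returns normally produces matching before/after pairs, with FAILED for the first run and COMPLETED for the second. The listener inspects the status exactly as the afterJob example above does.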

4.1.3. Inheriting from a Parent Job

If a group of Jobs share similar, but not identical, configurations, then it may be helpful to define a "parent" Job from which the concrete Jobs may inherit properties. Similar to class inheritance in Java, the "child" Job will combine its elements and attributes with the parent’s.

In the following example, "baseJob" is an abstract Job definition that defines only a list of listeners. The Job "job1" is a concrete definition that inherits the list of listeners from "baseJob" and merges it with its own list of listeners to produce a Job with two listeners and one Step, "step1".

```xml
<job id="baseJob" abstract="true">
    <listeners>
        <listener ref="listenerOne"/>
    </listeners>
</job>

<job id="job1" parent="baseJob">
    <step id="step1" parent="standaloneStep"/>

    <listeners merge="true">
        <listener ref="listenerTwo"/>
    </listeners>
</job>
```

See the section on Inheriting from a Parent Step for more detailed information.

4.1.4. JobParametersValidator

A job declared in the XML namespace, or one that uses any subclass of AbstractJob, can optionally declare a validator for the job parameters at runtime. This is useful when, for instance, you need to assert that a job is started with all its mandatory parameters. You can use the DefaultJobParametersValidator to constrain combinations of simple mandatory and optional parameters. For more complex constraints, you can implement the interface yourself.
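The following plain-Java sketch approximates the rule that DefaultJobParametersValidator applies, based on its documented behavior. Every required key must be present. If any optional keys are declared, every key must be either required or optional. Job parameters are modeled as a Map<String, String>. `SimpleParametersValidator` is a hypothetical class, not the Spring Batch API, which throws JobParametersInvalidException rather than IllegalArgumentException.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical simplified validator; parameters are modeled as a plain map.
class SimpleParametersValidator {
    private final Set<String> requiredKeys;
    private final Set<String> optionalKeys;

    SimpleParametersValidator(Set<String> requiredKeys, Set<String> optionalKeys) {
        this.requiredKeys = requiredKeys;
        this.optionalKeys = optionalKeys;
    }

    void validate(Map<String, String> parameters) {
        // If optional keys are declared, every key must be required or optional.
        if (!optionalKeys.isEmpty()) {
            Set<String> unexpected = new TreeSet<>();
            for (String key : parameters.keySet()) {
                if (!requiredKeys.contains(key) && !optionalKeys.contains(key)) {
                    unexpected.add(key);
                }
            }
            if (!unexpected.isEmpty()) {
                throw new IllegalArgumentException("Unexpected keys: " + unexpected);
            }
        }
        // Every required key must be present.
        Set<String> missing = new TreeSet<>(requiredKeys);
        missing.removeAll(parameters.keySet());
        if (!missing.isEmpty()) {
            throw new IllegalArgumentException("Missing required keys: " + missing);
        }
    }
}
```

For constraints that go beyond required and optional keys, such as checking that one date parameter precedes another, a custom implementation of the interface is the intended extension point.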

The XML namespace supports configuring a validator through a child element of the job, as shown in the following example:

<job id="job1" parent="baseJob3">
    <step id="step1" parent="standaloneStep"/>
    <validator ref="parametersValidator"/>
</job>

The validator can be specified as a reference (as shown earlier) or as a nested bean definition in the beans namespace.

The Java builders also support configuring a validator, as shown in the following example:

@Bean
public Job job1() {
    return this.jobBuilderFactory.get("job1")
                     .validator(parametersValidator())
                     ...
                     .build();
}

4.2. Java Config

Spring 3 brought the ability to configure applications through Java instead of XML. As of Spring Batch 2.2.0, batch jobs can be configured using the same Java configuration. The Java-based configuration has two components: the @EnableBatchProcessing annotation and two builders.

The @EnableBatchProcessing annotation works similarly to the other @Enable* annotations in the Spring family. In this case, @EnableBatchProcessing provides a base configuration for building batch jobs. Within this base configuration, an instance of StepScope is created, in addition to a number of beans made available to be autowired:

- JobRepository: bean name "jobRepository"
- JobLauncher: bean name "jobLauncher"
- JobRegistry: bean name "jobRegistry"
- PlatformTransactionManager: bean name "transactionManager"
- JobBuilderFactory: bean name "jobBuilders"
- StepBuilderFactory: bean name "stepBuilders"

The core interface for this configuration is the BatchConfigurer. The default implementation provides the beans mentioned above and requires a DataSource to be provided as a bean within the context. This data source is used by the JobRepository. You can customize any of these beans by creating a custom implementation of the BatchConfigurer interface. Typically, it is sufficient to extend the DefaultBatchConfigurer (which is provided if no BatchConfigurer is found) and override the required getter. However, you may need to implement your own from scratch. The following example shows how to provide a custom transaction manager:

@Bean
public BatchConfigurer batchConfigurer(DataSource dataSource) {
    return new DefaultBatchConfigurer(dataSource) {
        @Override
        public PlatformTransactionManager getTransactionManager() {
            return new MyTransactionManager();
        }
    };
}

Only one configuration class needs to have the …

With the base configuration in place, a user can use the provided builder factories to configure a job. The following example shows a two-step job configured with the JobBuilderFactory and the StepBuilderFactory:

@Configuration
@EnableBatchProcessing
@Import(DataSourceConfiguration.class)
public class AppConfig {

    @Autowired
    private JobBuilderFactory jobs;

    @Autowired
    private StepBuilderFactory steps;

    @Bean
    public Job job(@Qualifier("step1") Step step1, @Qualifier("step2") Step step2) {
        return jobs.get("myJob").start(step1).next(step2).build();
    }

    @Bean
    protected Step step1(ItemReader<Person> reader,
                         ItemProcessor<Person, Person> processor,
                         ItemWriter<Person> writer) {
        return steps.get("step1")
                    .<Person, Person> chunk(10)
                    .reader(reader)
                    .processor(processor)
                    .writer(writer)
                    .build();
    }

    @Bean
    protected Step step2(Tasklet tasklet) {
        return steps.get("step2")
                    .tasklet(tasklet)
                    .build();
    }
}

4.3. Configuring a JobRepository

When using @EnableBatchProcessing, a JobRepository is provided out of the box for you. This section addresses configuring your own.

As described earlier, the JobRepository is used for basic CRUD operations on the various persisted domain objects within Spring Batch, such as JobExecution and StepExecution. Many of the major framework features require it, such as the JobLauncher, Job, and Step.

The batch namespace abstracts away many of the implementation details of the JobRepository implementations and their collaborators. However, there are still a few configuration options available, as shown in the following example:

<job-repository id="jobRepository"
    data-source="dataSource"
    transaction-manager="transactionManager"
    isolation-level-for-create="SERIALIZABLE"
    table-prefix="BATCH_"
    max-varchar-length="1000"/>

None of the configuration options listed above are required except the id. They are shown for awareness purposes. If they are not set, the defaults shown above are used, except for max-varchar-length. That option defaults to 2500, the length of the long VARCHAR columns in the sample schema scripts, rather than the 1000 shown in the example.

When using Java configuration, a JobRepository is provided for you: a JDBC-based one if a DataSource is provided, and a Map-based one if not. However, you can customize the configuration of the JobRepository through an implementation of the BatchConfigurer interface.

...
// This would reside in your BatchConfigurer implementation
@Override
protected JobRepository createJobRepository() throws Exception {
    JobRepositoryFactoryBean factory = new JobRepositoryFactoryBean();
    factory.setDataSource(dataSource);
    factory.setTransactionManager(transactionManager);
    factory.setIsolationLevelForCreate("ISOLATION_SERIALIZABLE");
    factory.setTablePrefix("BATCH_");
    factory.setMaxVarCharLength(1000);
    return factory.getObject();
}
...

None of the configuration options listed above are required except the dataSource and transactionManager. They are shown for awareness purposes. If they are not set, the defaults shown above are used, except for the max varchar length. That value defaults to 2500, the length of the long VARCHAR columns in the sample schema scripts, rather than the 1000 shown in the example.

4.3.1. Transaction Configuration for the JobRepository

If the namespace or the provided FactoryBean is used, transactional advice is automatically created around the repository. This ensures that the batch meta-data, including the state needed for restarts after a failure, is persisted correctly. The behavior of the framework is not well defined if the repository methods are not transactional.

The isolation level in the create* method attributes is specified separately. This ensures that, if two processes try to launch the same job at the same time, only one succeeds. The default isolation level for that method is SERIALIZABLE, which is quite aggressive. READ_COMMITTED would work just as well. READ_UNCOMMITTED would be fine if two processes are not likely to collide in this way. Because a call to the create* method is quite short, SERIALIZABLE is unlikely to cause problems, as long as the database platform supports it. If needed, you can override the isolation level.
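The "only one succeeds" guarantee amounts to making a check-then-insert atomic. The following plain-Java sketch models that idea without a database. The hypothetical `LaunchRegistry` uses ConcurrentHashMap.putIfAbsent as the atomic step, standing in for what the create* transaction's isolation level provides. Its `race` method releases two threads at once to register the same job key.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical model of "create a job execution": only the first caller for a
// given job key wins, because putIfAbsent performs the check and the insert atomically.
class LaunchRegistry {
    private final ConcurrentHashMap<String, String> launched = new ConcurrentHashMap<>();

    boolean tryLaunch(String jobKey, String launcher) {
        return launched.putIfAbsent(jobKey, launcher) == null;
    }

    // Races two launchers for the same key and returns how many succeeded.
    static int race(String jobKey) {
        LaunchRegistry registry = new LaunchRegistry();
        AtomicInteger successes = new AtomicInteger();
        CountDownLatch start = new CountDownLatch(1);
        Thread[] launchers = new Thread[2];
        for (int i = 0; i < launchers.length; i++) {
            String name = "process-" + i;
            launchers[i] = new Thread(() -> {
                try {
                    start.await(); // release both threads at the same moment
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                if (registry.tryLaunch(jobKey, name)) {
                    successes.incrementAndGet();
                }
            });
            launchers[i].start();
        }
        start.countDown();
        for (Thread t : launchers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return successes.get();
    }
}
```

Replacing putIfAbsent with a separate containsKey followed by put would reintroduce the race, which is the database analogue of running create* at too weak an isolation level when collisions are likely.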

The following example shows how to override the isolation level in XML:

<job-repository id="jobRepository"
                isolation-level-for-create="REPEATABLE_READ" />

The following example shows how to override the isolation level in Java:

// This would reside in your BatchConfigurer implementation
@Override
protected JobRepository createJobRepository() throws Exception {
    JobRepositoryFactoryBean factory = new JobRepositoryFactoryBean();
    factory.setDataSource(dataSource);
    factory.setTransactionManager(transactionManager);
    factory.setIsolationLevelForCreate("ISOLATION_REPEATABLE_READ");
    return factory.getObject();
}

If the namespace or factory beans are not used, it is also essential to configure the transactional behavior of the repository using AOP.
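The following plain-Java sketch shows what such transactional advice does. It wraps a repository in a JDK dynamic proxy that brackets every method call with begin and commit, and it rolls back if the call throws. `SimpleRepository`, `TransactionalProxySketch`, and the string log are hypothetical stand-ins. Real transactions come from Spring's transaction infrastructure, configured as described in this section.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.List;

// Hypothetical repository interface standing in for JobRepository.
interface SimpleRepository {
    void update(String execution);
}

class TransactionalProxySketch {
    // Wraps every method of the target in begin/commit and rolls back on failure.
    // The "transaction" is only a log of events; no real resources are involved.
    static SimpleRepository wrap(SimpleRepository target, List<String> log) {
        InvocationHandler handler = (proxy, method, args) -> {
            log.add("begin " + method.getName());
            try {
                Object result = method.invoke(target, args);
                log.add("commit");
                return result;
            } catch (InvocationTargetException e) {
                log.add("rollback");
                throw e.getCause(); // rethrow the repository's original exception
            }
        };
        return (SimpleRepository) Proxy.newProxyInstance(
                SimpleRepository.class.getClassLoader(),
                new Class<?>[] {SimpleRepository.class},
                handler);
    }
}
```

Callers see only the SimpleRepository interface. This is why the transactional behavior can be added around the repository without changing its implementation.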

The following example shows how to configure the transactional behavior of the repository in XML:

<aop:config>
    <aop:advisor
           pointcut="execution(* org.springframework.batch.core..*Repository+.*(..))"
           advice-ref="txAdvice" />
</aop:config>

<tx:advice id="txAdvice" transaction-manager="transactionManager">
    <tx:attributes>
        <tx:method name="*" />
    </tx:attributes>
</tx:advice>

The preceding fragment can be used almost as is. Remember also to include the appropriate namespace declarations and to make sure spring-tx and spring-aop (or the whole of Spring) are on the classpath.

The following example shows how to configure the transactional behavior of the repository in Java:

@Bean
public TransactionProxyFactoryBean baseProxy() {
    TransactionProxyFactoryBean transactionProxyFactoryBean = new TransactionProxyFactoryBean();
    Properties transactionAttributes = new Properties();
    transactionAttributes.setProperty("*", "PROPAGATION_REQUIRED");
    transactionProxyFactoryBean.setTransactionAttributes(transactionAttributes);
    transactionProxyFactoryBean.setTarget(jobRepository());
    transactionProxyFactoryBean.setTransactionManager(transactionManager());
    return transactionProxyFactoryBean;
}

4.3.2. Changing the Table Prefix

Another modifiable property of the JobRepository is the table prefix of the meta-data tables. By default, they are all prefaced with BATCH_. BATCH_JOB_EXECUTION and BATCH_STEP_EXECUTION are two examples. However, there are reasons you might want to change this prefix. You need to change the table prefix if schema names must be prepended to the table names, or if more than one set of meta-data tables is needed within the same schema.

The following example shows how to change the table prefix in XML:

<job-repository id="jobRepository"
                table-prefix="SYSTEM.TEST_" />

The following example shows how to change the table prefix in Java:

// This would reside in your BatchConfigurer implementation
@Override
protected JobRepository createJobRepository() throws Exception {
    JobRepositoryFactoryBean factory = new JobRepositoryFactoryBean();
    factory.setDataSource(dataSource);
    factory.setTransactionManager(transactionManager);
    factory.setTablePrefix("SYSTEM.TEST_");
    return factory.getObject();
}

Given the preceding changes, every query to the meta-data tables is prefixed with SYSTEM.TEST_. BATCH_JOB_EXECUTION is referred to as SYSTEM.TEST_JOB_EXECUTION.

Only the table prefix is configurable. The table and column names are not.
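Roughly speaking, the JDBC DAOs in Spring Batch write their SQL as templates that contain a %PREFIX% placeholder, and the configured table prefix replaces that placeholder. This is also why only the prefix, and not the rest of a table name, can be changed. The following plain-Java sketch shows that substitution. `TablePrefixSketch` is hypothetical, and the query template is illustrative rather than copied from Spring Batch.

```java
// Hypothetical sketch of table-prefix substitution.
class TablePrefixSketch {
    static final String PLACEHOLDER = "%PREFIX%";

    // Only the prefix varies; the rest of each table name is fixed text in the template.
    static String resolve(String template, String tablePrefix) {
        return template.replace(PLACEHOLDER, tablePrefix);
    }
}
```

Resolving "SELECT JOB_EXECUTION_ID FROM %PREFIX%JOB_EXECUTION" with "SYSTEM.TEST_" yields a query against SYSTEM.TEST_JOB_EXECUTION, which matches the renaming described above.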

4.3.3. In-Memory Repository

There are scenarios in which you may not want to persist your domain objects to the database. One reason may be speed, because storing domain objects at each commit point takes extra time. Another reason may be that you just don’t need to persist status for a particular job. For these cases, Spring Batch provides an in-memory Map version of the job repository.
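The trade-off is that nothing outlives the process. The following plain-Java sketch keeps execution statuses in a map. `InMemoryExecutionStore` is hypothetical and much simpler than what MapJobRepositoryFactoryBean produces. Recording is cheap because nothing is written to a database. However, a fresh instance, such as one created after a JVM restart, has no record of earlier executions, so the information needed for a restart is lost.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory store: job name -> statuses of its executions, in launch order.
// Nothing is written to a database, so the history disappears with the object.
class InMemoryExecutionStore {
    private final Map<String, List<String>> executions = new HashMap<>();

    void record(String jobName, String status) {
        executions.computeIfAbsent(jobName, k -> new ArrayList<>()).add(status);
    }

    // Returns the most recent status, or null if the job was never recorded.
    String lastStatus(String jobName) {
        List<String> history = executions.get(jobName);
        return history == null ? null : history.get(history.size() - 1);
    }
}
```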

The following example shows the inclusion of MapJobRepositoryFactoryBean in XML:

<bean id="jobRepository"
  class="org.springframework.batch.core.repository.support.MapJobRepositoryFactoryBean">
    <property name="transactionManager" ref="transactionManager"/>
</bean>

The following example shows the inclusion of MapJobRepositoryFactoryBean in Java:

// This would reside in your BatchConfigurer implementation

@Override

protected JobRepository createJobRepository() throws Exception {

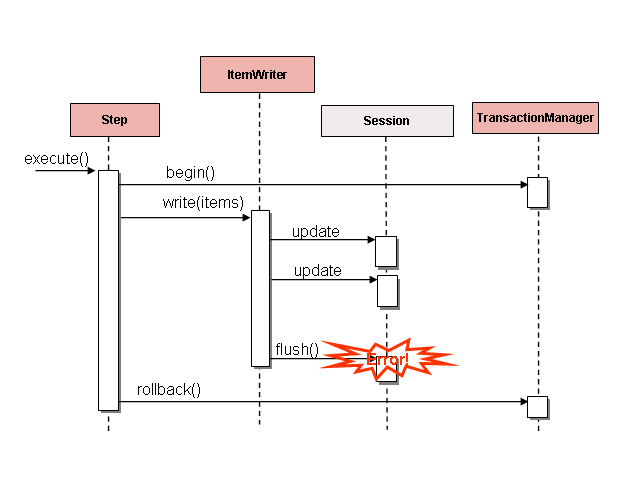

MapJobRepositoryFactoryBean factory = new MapJobRepositoryFactoryBean();