Conditional execution is expressed using a double ampersand symbol &&.
This allows each task in the sequence to be launched only if the previous task
completed successfully. For example:

task create my-composed-task --definition "foo && bar"

When the composed task my-composed-task is launched, it launches the
task foo and, if foo completes successfully, then launches the task bar.
If the foo task fails, the task bar will not launch.
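The launch rule described above can be sketched in a few lines of Python. This is only an illustrative model, not Spring Cloud Data Flow code: the function name run_conditional and the representation of a task as a (name, callable) pair are assumptions made for the example.

```python
from typing import Callable, List, Tuple

# Hypothetical model: a task is a (name, callable) pair whose callable
# returns True when the task completes successfully.
Task = Tuple[str, Callable[[], bool]]


def run_conditional(tasks: List[Task]) -> List[str]:
    """Launch tasks in order and return the names of the tasks launched."""
    launched = []
    for name, run in tasks:
        launched.append(name)
        if not run():
            break  # a failed task stops the rest of the sequence
    return launched


# Models "foo && bar" when both tasks succeed.
print(run_conditional([("foo", lambda: True), ("bar", lambda: True)]))   # ['foo', 'bar']
# Models "foo && bar" when foo fails: bar is never launched.
print(run_conditional([("foo", lambda: False), ("bar", lambda: True)]))  # ['foo']
```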

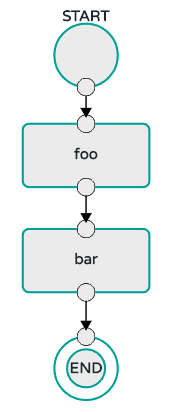

You can also use the Spring Cloud Data Flow Dashboard to create your conditional execution. By using the designer to drag and drop applications that are required, and connecting them together to create your directed graph. For example:

The diagram above is a screen capture of the directed graph as it being created using the Spring Cloud Data Flow Dashboard. We see that are 4 components in the diagram that comprise a conditional execution:

- Start icon - All directed graphs start from this symbol. There will only be one.

- Task icon - Represents each task in the directed graph.

- End icon - Represents the termination of a directed graph.

- Solid line arrow - Represents the conditional execution flow between:

  - Two applications

  - The start control node and an application

  - An application and the end control node

Note: You can view a diagram of your directed graph by clicking the detail button next to the composed task definition on the definitions tab.

The DSL supports fine-grained control over the transitions taken during the
execution of the directed graph. Transitions are specified by providing a
condition for equality based on the exit status of the previous task.

A task transition is represented by the following symbol ->.

A basic transition would look like the following:

task create my-transition-composed-task --definition "foo 'FAILED' -> bar 'COMPLETED' -> baz"

In the example above, foo would launch and, if it had an exit status of FAILED,
the bar task would launch. If the exit status of foo was COMPLETED,
baz would launch. Any other status returned by foo would have no effect,
and the composed task would terminate normally.
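The equality check behind a transition can be modeled as a simple lookup from exit status to target task. The sketch below is a hedged illustration only: the function next_task and the dictionary representation are assumptions for this example and are not part of the DSL or of any Spring Cloud Data Flow API.

```python
from typing import Dict, Optional


def next_task(exit_status: str, transitions: Dict[str, str]) -> Optional[str]:
    """Return the task to launch for this exit status, or None when no
    transition matches and the composed task simply terminates."""
    return transitions.get(exit_status)


# Models: foo 'FAILED' -> bar 'COMPLETED' -> baz
transitions = {"FAILED": "bar", "COMPLETED": "baz"}

for status in ("FAILED", "COMPLETED", "UNKNOWN"):
    print(status, "->", next_task(status, transitions))
```

In this model, a status with no matching entry (UNKNOWN here) yields None, which corresponds to the case where no transition is taken.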

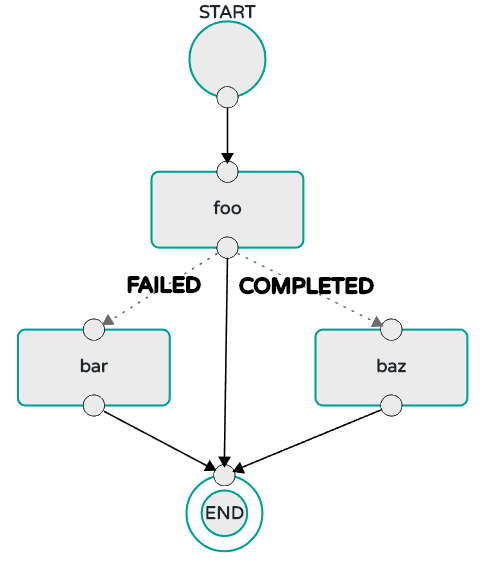

Using the Spring Cloud Data Flow Dashboard to create the same "basic transition" would look like:

The diagram above is a screen capture of the directed graph as it being created using the Spring Cloud Data Flow Dashboard. Notice that there are 2 different types of connectors:

- Dashed line - Represents a transition from the application to one of the possible destination applications.

- Solid line - Connects applications in a conditional execution, or connects an application to a control node (start or end).

When creating a transition, link the application to each possible destination using the connector. Once complete, select each connection by clicking it. A bolt icon should appear. Click that icon and enter the exit status required for that connector. The solid line for that connector will turn into a dashed line.

The DSL supports wildcards for transitions. For example:

task create my-transition-composed-task --definition "foo 'FAILED' -> bar '*' -> baz"

In the example above, foo would launch and, if it had an exit status of FAILED,
the bar task would launch. For any exit status of foo other than FAILED,
baz would launch.
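One way to picture the wildcard is as a fallback entry that is consulted only when no explicit exit status matches. The following sketch rests on that assumption, which is consistent with the example above but is not taken from the Spring Cloud Data Flow implementation. The name resolve_target is hypothetical.

```python
from typing import Dict, Optional


def resolve_target(exit_status: str, transitions: Dict[str, str]) -> Optional[str]:
    """Pick the target task for an exit status.

    Assumption for this sketch: an explicit status match takes precedence,
    and the '*' entry catches every other status.
    """
    if exit_status in transitions:
        return transitions[exit_status]
    return transitions.get("*")


# Models: foo 'FAILED' -> bar '*' -> baz
transitions = {"FAILED": "bar", "*": "baz"}

print(resolve_target("FAILED", transitions))     # bar
print(resolve_target("COMPLETED", transitions))  # baz
```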

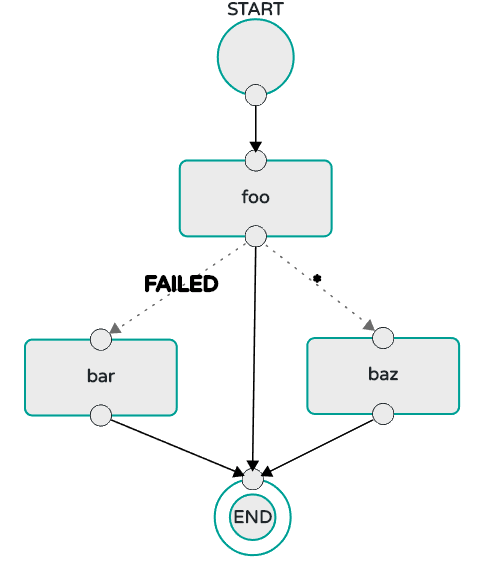

Using the Spring Cloud Data Flow Dashboard to create the same "transition with wildcard" would look like:

A transition can be followed by a conditional execution so long as the wildcard is not used. For example:

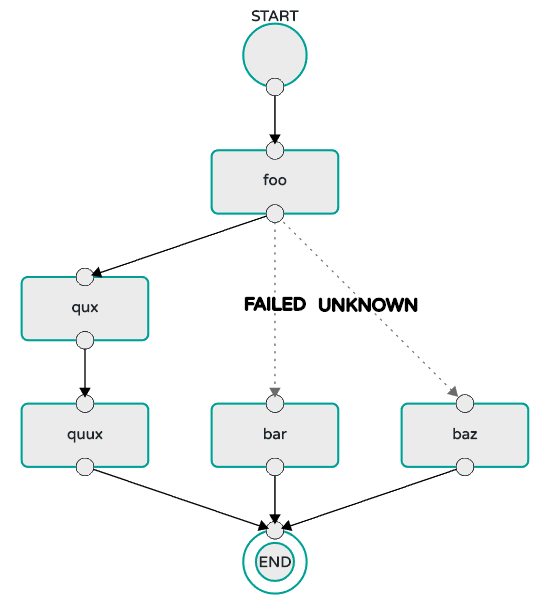

task create my-transition-conditional-execution-task --definition "foo 'FAILED' -> bar 'UNKNOWN' -> baz && qux && quux"

In the example above foo would launch and if it had an exit status of FAILED,

then the bar task would launch. If foo had an exit status of UNKNOWN then

baz would launch. Any exit status of foo other than FAILED or UNKNOWN

then qux would launch and upon successful completion quux would launch.

Using the Spring Cloud Data Flow Dashboard to create the same "transition with conditional execution" would look like:

Note: In this diagram we see the dashed line (transition) connecting the

Splits allow multiple tasks within a composed task to be run in parallel.
A split is denoted by using angle brackets <> to group the tasks and flows
that are to be run in parallel. These tasks and flows are separated by the
double pipe ||. For example:

task create my-split-task --definition "<foo || bar || baz>"

The example above will launch tasks foo, bar and baz in parallel.

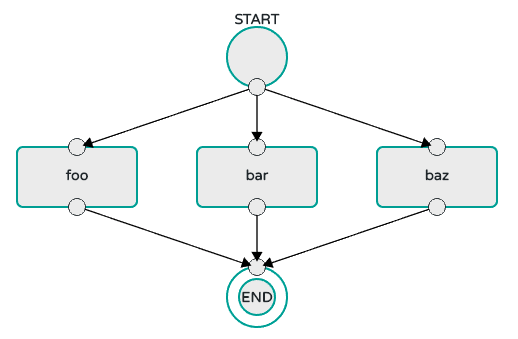

Using the Spring Cloud Data Flow Dashboard to create the same "split execution" would look like:

With the task DSL a user may also execute multiple split groups in succession. For example:

task create my-split-task --definition "<foo || bar || baz> && <qux || quux>"

In the example above, tasks foo, bar, and baz will be launched in parallel.
Once they all complete, tasks qux and quux will be launched in parallel.
Once they complete, the composed task will end. However, if foo, bar, or
baz fails, the split containing qux and quux will not launch.
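The behavior of consecutive splits can be approximated with a thread pool: every task in a split is started together, the split waits for all of them, and the next split starts only if none failed. This is a minimal sketch under those assumptions. The helper names run_split and run_splits are hypothetical, and the real composed task runner is not implemented this way as far as this guide states.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# Hypothetical model: a split maps task names to callables that return
# True when the task completes successfully.
Split = Dict[str, Callable[[], bool]]


def run_split(split: Split) -> bool:
    """Run every task of one split in parallel and wait for all of them.
    Returns True only if every task succeeded."""
    with ThreadPoolExecutor(max_workers=len(split)) as pool:
        futures = [pool.submit(run) for run in split.values()]
        results = [future.result() for future in futures]
    return all(results)


def run_splits(splits: List[Split]) -> List[str]:
    """Run splits in succession and return the names of launched tasks."""
    launched: List[str] = []
    for split in splits:
        launched.extend(split.keys())
        if not run_split(split):
            break  # a failure in this split prevents the next split
    return launched


# Models "<foo || bar || baz> && <qux || quux>" with bar failing.
first = {"foo": lambda: True, "bar": lambda: False, "baz": lambda: True}
second = {"qux": lambda: True, "quux": lambda: True}
print(run_splits([first, second]))  # ['foo', 'bar', 'baz']
```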

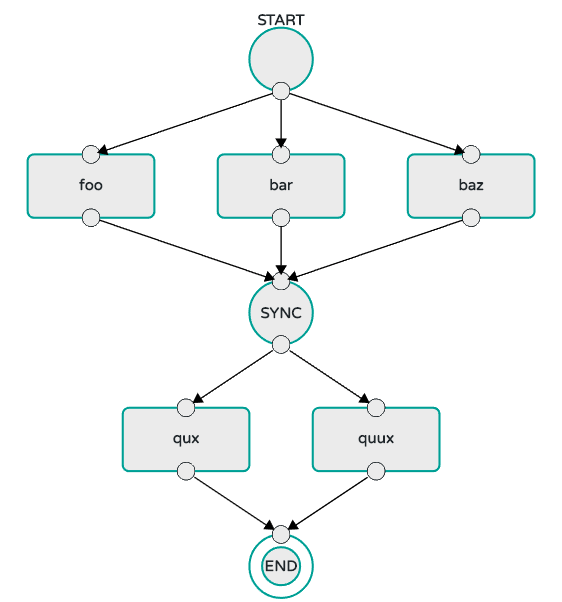

Using the Spring Cloud Data Flow Dashboard to create the same "split with multiple groups" would look like:

Notice that there is a SYNC control node that is by the designer when

connecting two consecutive splits.

A split can also have a conditional execution within the angle brackets. For example:

task create my-split-task --definition "<foo && bar || baz>"

In the example above, foo and baz will be launched in parallel.
However, bar will not launch until foo completes successfully.
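A split whose branches are themselves conditional flows can be modeled by running each branch sequentially on its own worker. The sketch below is illustrative only: run_flow and run_split_of_flows are hypothetical names, and the (name, callable) task representation is an assumption for the example.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple

# Hypothetical model: a task is a (name, callable) pair whose callable
# returns True when the task completes successfully.
Task = Tuple[str, Callable[[], bool]]


def run_flow(flow: List[Task]) -> List[str]:
    """Run one branch sequentially, stopping at the first failure."""
    launched = []
    for name, run in flow:
        launched.append(name)
        if not run():
            break
    return launched


def run_split_of_flows(flows: List[List[Task]]) -> List[List[str]]:
    """Run each branch in parallel; results keep the order of the branches."""
    with ThreadPoolExecutor(max_workers=len(flows)) as pool:
        return list(pool.map(run_flow, flows))


# Models "<foo && bar || baz>" with foo failing: bar is never launched,
# while baz runs independently in the other branch.
flows = [
    [("foo", lambda: False), ("bar", lambda: True)],
    [("baz", lambda: True)],
]
print(run_split_of_flows(flows))  # [['foo'], ['baz']]
```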

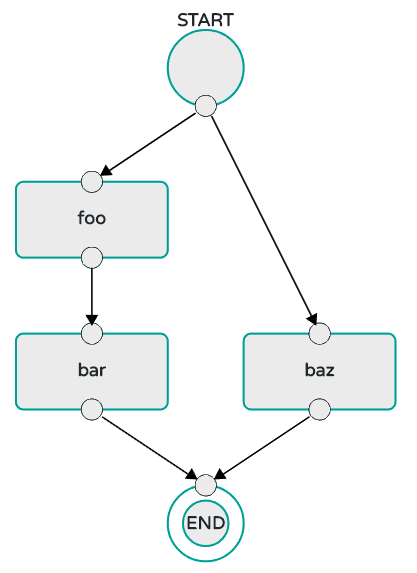

Using the Spring Cloud Data Flow Dashboard to create the same "split containing conditional execution" would look like: