Darwin.RELEASE

Copyright © 2013-2016 Pivotal Software, Inc.

Table of Contents

- I. Reference Guide

- II. Starters

- 2. Sources

- 2.1. File Source

- 2.2. FTP Source

- 2.3. Gemfire Source

- 2.4. Gemfire-CQ Source

- 2.5. Http Source

- 2.6. JDBC Source

- 2.7. JMS Source

- 2.8. Load Generator Source

- 2.9. Mail Source

- 2.10. MongoDB Source

- 2.11. MQTT Source

- 2.12. RabbitMQ Source

- 2.13. Amazon S3 Source

- 2.14. SFTP Source

- 2.15. SYSLOG Source

- 2.16. TCP

- 2.17. TCP Client as a Source which connects to a TCP server and receives data

- 2.18. Time Source

- 2.19. Trigger Source

- 2.20. TriggerTask Source

- 2.21. Twitter Stream Source

- 3. Processors

- 3.1. Aggregator Processor

- 3.2. Bridge Processor

- 3.3. Filter Processor

- 3.4. Groovy Filter Processor

- 3.5. Groovy Transform Processor

- 3.6. gRPC Processor

- 3.7. Header Enricher Processor

- 3.8. Http Client Processor

- 3.9. PMML Processor

- 3.10. Python Http Processor

- 3.11. Jython Processor

- 3.12. Scripable Transform Processor

- 3.13. Splitter Processor

- 3.14. TCP Client as a processor which connects to a TCP server, sends data to it and also receives data.

- 3.15. Transform Processor

- 3.16. TensorFlow Processor

- 3.17. Twitter Sentiment Analysis Processor

- 4. Sinks

- 4.1. Aggregate Counter Sink

- 4.2. Cassandra Sink

- 4.3. Counter Sink

- 4.4. Field Value Counter Sink

- 4.5. File Sink

- 4.6. FTP Sink

- 4.7. Gemfire Sink

- 4.8. Gpfdist Sink

- 4.9. HDFS Sink

- 4.10. Jdbc Sink

- 4.11. Log Sink

- 4.12. RabbitMQ Sink

- 4.13. MongoDB Sink

- 4.14. MQTT Sink

- 4.15. Pgcopy Sink

- 4.16. Redis Sink

- 4.17. Router Sink

- 4.18. Amazon S3 Sink

- 4.19. SFTP Sink

- 4.20. TCP Sink

- 4.21. Throughput Sink

- 4.22. Websocket Sink

- III. Appendices

This section will provide you with a detailed overview of Spring Cloud Stream Application Starters, their purpose, and how to use them. It assumes familiarity with general Spring Cloud Stream concepts, which can be found in the Spring Cloud Stream reference documentation.

Spring Cloud Stream Application Starters provide you with out-of-the-box Spring Cloud Stream uility applications that you can run independently or with Spring Cloud Data Flow. You can also use the starters as a basis for creating your own applications.

They include:

- connectors (sources, processors, and sinks) for a variety of middleware technologies including message brokers, storage (relational, non-relational, filesystem);

- adapters for various network protocols;

- generic processors that can be customized via Spring Expression Language (SpEL) or scripting.

You can find a detailed listing of all the starters and as their options in the corresponding section of this guide.

You can find all available app starter repositories in this GitHub Organization.

As a user of Spring Cloud Stream Application Starters you have access to two types of artifacts.

Starters are libraries that contain the complete configuration of a Spring Cloud Stream application with a specific role (e.g. an HTTP source that receives HTTP POST requests and forwards the data on its output channel to downstream Spring Cloud Stream applications). Starters are not executable applications, and are intended to be included in other Spring Boot applications, along with an available Binder implementation of your choice.

Out-of-the-box applications are Spring Boot applications that include the starters and a Binder implementation - a fully functional uber-jar. These uber-jar’s include minimal code required to execute standalone. For each starter application, the project provides a prebuilt version for Apache Kafka and Rabbit MQ Binders.

![[Note]](images/note.png) | Note |

|---|---|

Only starters are present in the source code of the project. Prebuilt applications are generated according to the stream apps generator maven plugin. |

Based on their target application type, starters can be either:

- a source that connects to an external resource to poll and receive data that is published to the default "output" channel;

- a processor that receives data from an "input" channel and processes it, sending the result on the default "output" channel;

- a sink that connects to an external resource to send the received data to the default "input" channel.

You can easily identify the type and functionality of a starter based on its name.

All starters are named following the convention spring-cloud-starter-stream-<type>-<functionality>.

For example spring-cloud-starter-stream-source-file is a starter for a file source that polls a directory and sends file data on the output channel (read the reference documentation of the source for details).

Conversely, spring-cloud-starter-stream-sink-cassandra is a starter for a Cassandra sink that writes the data that it receives on the input channel to Cassandra (read the reference documentation of the sink for details).

The prebuilt applications follow a naming convention too: <functionality>-<type>-<binder>. For example, cassandra-sink-kafka-10 is a Cassandra sink using the Kafka binder that is running with Kafka version 0.10.

You either get access to the artifacts produced by Spring Cloud Stream Application Starters via Maven, Docker, or building the artifacts yourself.

Starters are available as Maven artifacts in the Spring repositories. You can add them as dependencies to your application, as follows:

<dependency> <groupId>org.springframework.cloud.stream.app</groupId> <artifactId>spring-cloud-starter-stream-sink-cassandra</artifactId> <version>1.0.0.BUILD-SNAPSHOT</version> </dependency>

From this, you can infer the coordinates for other starters found in this guide.

While the version may vary, the group will always remain org.springframework.cloud.stream.app and the artifact id follows the naming convention spring-cloud-starter-stream-<type>-<functionality> described previously.

Prebuilt applications are available as Maven artifacts too.

It is not encouraged to use them directly as dependencies, as starters should be used instead.

Following the typical Maven <group>:<artifactId>:<version> convention, they can be referenced for example as:

org.springframework.cloud.stream.app:cassandra-sink-rabbit:1.0.0.BUILD-SNAPSHOT

You can download the executable jar artifacts from the Spring Maven repositories. The root directory of the Maven repository that hosts release versions is repo.spring.io/release/org/springframework/cloud/stream/app/. From there you can navigate to the latest release version of a specific app, for example log-sink-rabbit-1.1.1.RELEASE.jar. Use the Milestone and Snapshot repository locations for Milestone and Snapshot executuable jar artifacts.

The Docker versions of the applications are available in Docker Hub, at hub.docker.com/r/springcloudstream/. Naming and versioning follows the same general conventions as Maven, e.g.

docker pull springcloudstream/cassandra-sink-kafka-10will pull the latest Docker image of the Cassandra sink with the Kafka binder that is running with Kafka version 0.10.

You can build the project and generate the artifacts (including the prebuilt applications) on your own. This is useful if you want to deploy the artifacts locally or add additional features.

First, you need to generate the prebuilt applications. This is done by running the application generation Maven plugin. You can do so by simply invoking the maven build with the generateApps profile and install lifecycle.

mvn clean install -PgenerateApps

Each of the prebuilt applciation will contain:

pom.xmlfile with the required dependencies (starter and binder)- a class that contains the

mainmethod of the application and imports the predefined configuration - generated integration test code that validates the component against the configured binder.

For example, spring-cloud-starter-stream-sink-cassandra will generate cassandra-sink-rabbit and cassandra-sink-kafka-10 as completely functional applications.

Apart from accessing the sources, sinks and processors already provided by the project, in this section we will describe how to:

- Use a different binder than Kafka or Rabbit

- Create your own applications

- Customize dependencies such as Hadoop distributions or JDBC drivers

Prebuilt applications are provided for both kafka and rabbit binders. But if you want to connect to a different middleware system, and you have a binder for it, you will need to create new artifacts.

<dependencies> <!- other dependencies --> <dependency> <groupId>org.springframework.cloud.stream.app</groupId> <artifactId>spring-cloud-starter-stream-sink-cassandra</artifactId> <version>1.0.0.BUILD-SNAPSHOT</version> </dependency> <dependency> <groupId>org.springframework.cloud</groupId> <artifactId>spring-cloud-stream-binder-gemfire</artifactId> <version>1.0.0.BUILD-SNAPSHOT</version> </dependency> </dependencies>

The next step is to create the project’s main class and import the configuration provided by the starter.

package org.springframework.cloud.stream.app.cassandra.sink.rabbit; import org.springframework.boot.SpringApplication; import org.springframework.boot.autoconfigure.SpringBootApplication; import org.springframework.cloud.stream.app.cassandra.sink.CassandraSinkConfiguration; import org.springframework.context.annotation.Import; @SpringBootApplication @Import(CassandraSinkConfiguration.class) public class CassandraSinkGemfireApplication { public static void main(String[] args) { SpringApplication.run(CassandraSinkGemfireApplication.class, args); } }

Spring Cloud Stream Application Starters consists of regular Spring Boot applications with some additional conventions that facilitate generating prebuilt applications with the preconfigured binders. Sometimes, your solution may require additional applications that are not in the scope of out-of-the-box Spring Cloud Stream Application Starters, or require additional tweaks and enhancements. In this section we will show you how to create custom applications that can be part of your solution, along with Spring Cloud Stream application starters. You have the following options:

- create new Spring Cloud Stream applications;

- use the starters to create customized versions;

If you want to add your own custom applications to your solution, you can simply create a new Spring Cloud Stream app project with the binder of your choice and run it the same way as the applications provided by Spring Cloud Stream Application Starters, independently or via Spring Cloud Data Flow. The process is described in the Getting Started Guide of Spring Cloud Stream.

An alternative way to bootstrap your application is to go to the Spring Initializr and choose a Spring Cloud Stream Binder of your choice. This way you already have the necessary infrastructure ready to go and mainly focus on the specifics of the application.

The following requirements need to be followed when you go with this option:

- a single inbound channel named

inputfor sources - the simplest way to do so is by using the predefined interfaceorg.spring.cloud.stream.messaging.Source; - a single outbound channel named

outputfor sinks - the simplest way to do so is by using the predefined interfaceorg.spring.cloud.stream.messaging.Sink; - both an inbound channel named

inputand an outbound channel namedoutputfor processors - the simplest way to do so is by using the predefined interfaceorg.spring.cloud.stream.messaging.Processor.

You can also reuse the starters provided by Spring Cloud Stream Application Starters to create custom components, enriching the behavior of the application.

For example, you can add a Spring Security layer to your HTTP source, add additional configurations to the ObjectMapper used for JSON transformation wherever that happens, or change the JDBC driver or Hadoop distribution that the application is using.

In order to do this, you should set up your project following a process similar to customizing a binder.

In fact, customizing the binder is the simplest form of creating a custom component.

As a reminder, this involves:

- adding the starter to your project

- choosing the binder

- adding the main class and importing the starter configuration.

After doing so, you can simply add the additional configuration for the extra features of your application.

If you’re looking to patch the pre-built applications to accommodate the addition of new dependencies, you can use the following example as the reference. Let’s review the steps to add mysql driver to jdbc-sink application.

- Go to: start-scs.cfapps.io/

- Select the application and binder dependencies [`JDBC sink` and `Rabbit binder starter`]

- Generate and load the project in an IDE

- Add

mysqljava-driver dependency

<dependencies> <dependency> <groupId>mysql</groupId> <artifactId>mysql-connector-java</artifactId> <version>5.1.37</version> </dependency> <dependency> <groupId>org.springframework.cloud</groupId> <artifactId>spring-cloud-stream-binder-rabbit</artifactId> </dependency> <dependency> <groupId>org.springframework.cloud.stream.app</groupId> <artifactId>spring-cloud-starter-stream-sink-jdbc</artifactId> </dependency> <dependency> <groupId>org.springframework.boot</groupId> <artifactId>spring-boot-starter-test</artifactId> <scope>test</scope> </dependency> </dependencies>

- Import the respective configuration class to the generated Spring Boot application. In the case of

jdbcsink, it is:@Import(org.springframework.cloud.stream.app.jdbc.sink.JdbcSinkConfiguration.class). You can find the configuration class for other applications in their respective repositories.

@SpringBootApplication @Import(org.springframework.cloud.stream.app.jdbc.sink.JdbcSinkConfiguration.class) public class DemoApplication { public static void main(String[] args) { SpringApplication.run(DemoApplication.class, args); } }

- Build and install the application to desired maven repository

- The patched copy of

jdbc-sinkapplication now includesmysqldriver in it - This application can be run as a standalone uberjar

In this section, we will explain how to develop a custom source/sink/processor application and then generate maven and docker artifacts for it with the necessary middleware bindings using the existing tooling provided by the spring cloud stream app starter infrastructure. For explanation purposes, we will assume that we are creating a new source application for a technology named foobar.

- Create a repository called foobar in your local github account

- The root artifact (something like foobar-app-starters-build) must inherit from

app-starters-build

Please follow the instructions above for designing a proper Spring Cloud Stream Source. You may also look into the existing

starters for how to structure a new one. The default naming for the main @Configuration class is

FoobarSourceConfiguration and the default package for this @Configuration

is org.springfamework.cloud.stream.app.foobar.source. If you have a different class/package name, see below for

overriding that in the app generator. The technology/functionality name for which you create

a starter can be a hyphenated stream of strings such as in scriptable-transform which is a processor type in the

module spring-cloud-starter-stream-processor-scriptable-transform.

The starters in spring-cloud-stream-app-starters are slightly different from the other starters in spring-boot and

spring-cloud in that here we don’t provide a way to auto configure any configuration through spring factories mechanism.

Rather, we delegate this responsibility to the maven plugin that is generating the binder based apps. Therefore, you don’t

have to provide a spring.factories file that lists all your configuration classes.

- The starter module needs to inherit from the parent (

foobar-app-starters-build) - Add the new foobar source module to the root pom of the new repository

- In the pom.xml for the source module, add the following in the

buildsection. This will add the necessary plugin configuration for app generation as well as generating proper documentation metadata. Please ensure that your root pom inherits app-starters-build as the base configuration for the plugins is specified there.

<build> <plugins> <plugin> <groupId>org.springframework.cloud</groupId> <artifactId>spring-cloud-app-starter-doc-maven-plugin</artifactId> </plugin> <plugin> <groupId>org.springframework.cloud.stream.app.plugin</groupId> <artifactId>spring-cloud-stream-app-maven-plugin</artifactId> <configuration> <generatedProjectHome>${session.executionRootDirectory}/apps</generatedProjectHome> <generatedProjectVersion>${project.version}</generatedProjectVersion> <bom> <name>scs-bom</name> <groupId>org.springframework.cloud.stream.app</groupId> <artifactId>foobar-app-dependencies</artifactId> <version>${project.version}</version> </bom> <generatedApps> <foobar-source/> </generatedApps> </configuration> </plugin> </plugins> </build>

More information about the maven plugin used above to generate the apps can be found here: github.com/spring-cloud/spring-cloud-stream-app-maven-plugin

If you did not follow the default convention expected by the plugin for where it is looking for the main configuration

class, which is org.springfamework.cloud.stream.app.foobar.source.FoobarSourceConfiguration, you can override that in

the configuration for the plugin. For example, if your main configuration class is foo.bar.SpecialFooBarConfiguration.class,

this is how you can tell the plugin to override the default.

<foobar-source> <autoConfigClass>foo.bar.SpecialFooBarConfiguration.class</autoConfigClass> </foobar-source>

- Create a new module to manage dependencies for foobar (

foobar-app-dependencies). This is the bom (bill of material) for this project. It is advised that this bom is inherited fromspring-cloud-dependencies-parent. Please see other starter repositories for guidelines. - You need to add the new starter dependency to the BOM in the dependency management section. For example,

<dependencyManagement> ... ... <dependency> <groupId>org.springframework.cloud.stream.app</groupId> <artifactId>spring-cloud-starter-stream-source-foobar</artifactId> <version>1.0.0.BUILD-SNAPSHOT</version> </dependency> ... ...

- At the root of the repository build, install and generate the apps:

./mvnw clean install -PgenerateApps

This will generate the binder based foobar source apps in a directory named apps at the root of the repository.

If you want to change the location where the apps are generated, for instance `/tmp/scs-apps, you can do it in the

configuration section of the plugin.

<configuration> ... <generatedProjectHome>/tmp/scs-apps</generatedProjectHome> ... </configuration

By default, we generate apps for both Kafka 09/10 and Rabbitmq binders - spring-cloud-stream-binder-kafka and

spring-cloud-stream-binder-rabbit. Say, if you have a custom binder you created for some middleware (say JMS),

which you need to generate apps for foobar source, you can add that binder to the binders list in the configuration

section as in the following.

<binders> <jms /> </binders>

Please note that this would only work, as long as there is a binder with the maven coordinates of

org.springframework.cloud.stream as group id and spring-cloud-stream-binder-jms as artifact id.

This artifact needs to be specified in the BOM above and available through a maven repository as well.

If you have an artifact that is only available through a private internal maven repository (may be an enterprise wide Nexus repo that you use globally across teams), and you need that for your app, you can define that as part of the maven plugin configuration.

For example,

<configuration> ... <extraRepositories> <repository> <id>private-internal-nexus</id> <url>.../</url> <name>...</name> <snapshotEnabled>...</snapshotEnabled> </repository> </extraRepositories> </configuration>

Then you can define this as part of your app tag:

<foobar-source> <extraRepositories> <private-internal-nexus /> </extraRepositories> </foobar-source>

- cd into the directory where you generated the apps (

appsat the root of the repository by default, unless you changed it elsewhere as described above).

Here you will see foobar-source-kafka-09, foobar-source-kafka-10 and foobar-source-rabbit.

If you added more binders as described above, you would see that app as well here - for example foobar-source-jms.

You can import these apps directly into your IDE of choice if you further want to do any customizations on them. Each of them is a self contained spring boot application project.

For the generated apps, the parent is spring-boot-starter-parent as required by the underlying Spring Initializr library.

You can cd into these custom foobar-source directories and do the following to build the apps:

cd foo-source-kafka-10

mvn clean install

This would install the foo-source-kafka-10 into your local maven cache (~/.m2 by default).

The app generation phase adds an integration test to the app project that is making sure that all the spring

components and contexts are loaded properly. However, these tests are not run by default when you do a mvn install.

You can force the running of these tests by doing the following:

mvn clean install -DskipTests=false

One important note about running these tests in generated apps:

If your application’s spring beans need to interact with

some real services out there or expect some properties to be present in the context, these tests will fail unless you make

those things available. An example would be a Twitter Source, where the underlying spring beans are trying to create a

twitter template and will fail if it can’t find the credentials available through properties. One way to solve this and

still run the generated context load tests would be to create a mock class that provides these properties or mock beans

(for example, a mock twitter template) and tell the maven plugin about its existence. You can use the existing module

app-starters-test-support for this purpose and add the mock class there.

See the class org.springframework.cloud.stream.app.test.twitter.TwitterTestConfiguration for reference.

You can create a similar class for your foobar source - FoobarTestConfiguration and add that to the plugin configuration.

You only need to do this if you run into this particular issue of spring beans are not created properly in the

integration test in the generated apps.

<foobar-source> <extraTestConfigClass>org.springframework.cloud.stream.app.test.foobar.FoobarTestConfiguration.class</extraTestConfigClass> </foobar-source>

When you do the above, this test configuration will be automatically imported into the context of your test class.

Also note that, you need to regenerate the apps each time you make a configuration change in the plugin.

- Now that you built the applications, they are available under the

targetdirectories of the respective apps and also as maven artifacts in your local maven repository. Go to thetargetdirectory and run the following:

java -jar foobar-source-kafa-10.jar [Ensure that you have kafka running locally when you do this]

It should start the application up.

- The generated apps also support the creation of docker images. You can cd into one of the foobar-source* app and do the following:

mvn clean package docker:build

This creates the docker image under the target/docker/springcloudstream directory. Please ensure that the Docker

container is up and running and DOCKER_HOST environment variable is properly set before you try docker:build.

All the generated apps from the various app repositories are uploaded to Docker Hub

However, for a custom app that you build, this won’t be uploaded to docker hub under springcloudstream repository.

If you think that there is a general need for this app, you should try contributing this starter as a new repository to Spring Cloud Stream App Starters.

Upon review, this app then can be eventually available through the above location in docker hub.

If you still need to push this to docker hub under a different repository (may be an enterprise repo that you manage for your organization) you can take the following steps.

Go to the pom.xml of the generated app [ example - foo-source-kafka/pom.xml]

Search for springcloudstream. Replace with your repository name.

Then do this:

mvn clean package docker:build docker:push -Ddocker.username=[provide your username] -Ddocker.password=[provide password]

This would upload the docker image to the docker hub in your custom repository.

In the following sections, you can find a brief faq on various things that we discussed above and a few other infrastructure related topics.

- What are Spring Cloud Stream Application Starters? Spring Cloud Stream Application Starters are Spring Boot based Spring Integration applications that provide integration with external systems. GitHub: github.com/spring-cloud-stream-app-starters Project page: cloud.spring.io/spring-cloud-stream-app-starters/

What is the parent for stream app starters? The parent for all app starters is

app-starters-buildwhich is coming from the core project. github.com/spring-cloud-stream-app-starters/core For example:<parent> <groupId>org.springframework.cloud.stream.app</groupId> <artifactId>app-starters-build</artifactId> <version>2.0.0.RELEASE</version> <relativePath/> </parent>

- Why is there a BOM in the core proejct?

Core defines a BOM which contains all the dependency management for common artifacts. This BOM is named as

app-starters-core-dependencies. We need this bom during app generation to pull down all the core dependencies. - What are the contents of the core BOM? In addition to the common artifacts in core, the app-starters-core-dependencies BOM also adds dependency management for spring-cloud-dependencies which will include spring-cloud-stream transitively.

- Where is the core BOM used?

There are two places where the core BOM is used. It is used to provide compile time dependency management for all the starters.

This is defined in the

app-starters-buildartfiact. This same BOM is referenced through the maven plugin configuration for the app generation. The generated apps thus will include this bom also in their pom.xml files. What spring cloud stream artifacts does the parent artifact (

app-starters-build) include?- spring-cloud-stream

- Spring-cloud-stream-test-support-internal

- spring-cloud-stream-test-support

What other artfiacts are available through the parent

app-starters-buildand where are they coming from? In addition to the above artifacts, the artifacts below also included inapp-starters-buildby default.- json-path

- spring-integration-xml

- spring-boot-starter-logging

- spring boot-starter-security Spring-cloud-build is the parent for app-starters-build. Spring-cloud-build imports spring-boot-dependencies and that is from where these artifacts are coming from.

- I did not see any other Spring Integration components used in the above 2 lists. Where are those dependencies coming from for individual starters? Spring-integration bom is imported in the spring-boot-dependencies bom and this is where the default SI dependencies are coming for SCSt app starters.

Can you summarize all the BOM’s that SCSt app starters depend on? All SCSt app starters have access to dependencies defined in the following BOM’s and other dependencies from any other BOM’s these three boms import transitively as in the case of Spring Integration:

- app-starters-core-dependencies

- spring-cloud-dependencies

- spring-boot-dependencies

- Each app starter has

app-starter-buildas the parent which in turn hasspring-cloud-buildas parent. The above documentation states that the generated apps havespring-boot-starteras the parent. Why the mismatch? There is no mismatch per se, but a slight subtlety. As the question frames, each app starter has access to artifacts managed all the way throughspring-cloud-buildat compile time. However, this is not the case for the generated apps at runtime. Generated apps are managed by boot. Their parent isspring-boot-starterthat importsspring-boot-dependenciesbom that includes a majority of the components that these apps need. The additional dependencies that the generated application needs are managed by including a BOM specific to each application starter. - Why is there an app starter specific BOM in each app starer repositories? For example,

time-app-dependencies. This is an important BOM. At runtime, the generated apps get the versions used in their dependencies through a BOM that is managing the dependencies. Since all the boms that we specified above only for the helper artifacts, we need a place to manage the starters themselves. This is where the app specific BOM comes into play. In addition to this need, as it becomes clear below, there are other uses for this BOM such as dependency overrides etc. But in a nutshell, all the starter dependencies go to this BOM. For instance, take TCP repo as an example. It has a starter for source, sink, client processor etc. All these dependencies are managed through the app specifictcp-app-dependenciesbom. This bom is provided to the app generator maven plugin in addition to the core bom. This app specific bom hasspring-cloud-dependencies-parentas parent. - How do I create a new app starter project?

If you have a general purpose starter that can be provided as an of of the box app, create an issue for that in app-starters-release.

If there is a consensus, then a repository can be created in the

spring-cloud-stream-app-startersorganization where you can start contributing the starters and other components. - I created a new starter according to the guidelines above, now how do I generate binder specific apps for the new starters? By default, the app-starters-build in core is configured with the common configuration needed for the app generator maven plugin. It is configured for generating apps for kafka-09, kafka-10 and rabbitmq binders. In your starter you already have the configuration specified for the plugin from the parent. Modify the configuration for your starter accordingly. Refer to an existing starter for guidelines. Here is an example of modifying such a configuration : github.com/spring-cloud-stream-app-starters/time/blob/master/spring-cloud-starter-stream-source-time/pom.xml Look for spring-cloud-stream-app-maven-plugin in the plugins section under build. You generate binder based apps using the generateApps maven profile. You need the maven install lifecycle to generate the apps.

How do I override Spring Integration version that is coming from spring-boot-dependencies by default? The following solution only works if the versions you want to override are available through a new Spring Integration BOM. Go to your app starter specific bom. Override the property as following:

<spring-integration.version>VERSION GOES HERE</spring-integration.version>

Then add the following in the dependencies management section in the BOM.

<dependency> <groupId>org.springframework.integration</groupId> <artifactId>spring-integration-bom</artifactId> <version>${spring-integration.version}</version> <scope>import</scope> <type>pom</type> </dependency>How do I override spring-cloud-stream artifacts coming by default in spring-cloud-dependencies defined in core BOM? The following solution only works if the versions you want to override are available through a new Spring-Cloud-Dependencies BOM. Go to your app starter specific bom. Override the property as following:

<spring-cloud-dependencies.version>VERSION GOES HERE</spring-cloud-dependencies.version>

Then add the following in the dependencies management section in the BOM.

<dependency> <groupId>org.springframework.cloud</groupId> <artifactId>spring-cloud-dependencies</artifactId> <version>${spring-cloud-dependencies.version}</version> <scope>import</scope> <type>pom</type> </dependency>What if there is no spring-cloud-dependencies BOM available that contains my versions of spring-cloud-stream, but there is a spring-cloud-stream BOM available? Go to your app starter specific BOM. Override the property as below.

<spring-cloud-stream.version>VERSION GOES HERE</spring-cloud-stream.version>

Then add the following in the dependencies management section in the BOM.

<dependency> <groupId>org.springframework.cloud</groupId> <artifactId>spring-cloud-stream-dependencies</artifactId> <version>${spring-cloud-stream.version}</version> <scope>import</scope> <type>pom</type> </dependency>What if I want to override a single artifact that is provided through a bom? For example spring-integration-java-dsl? Go to your app starter BOM and add the following property with the version you want to override:

<spring-integration-java-dsl.version>VERSION GOES HERE</spring-integration-java-dsl.version>

Then in the dependency management section add the following:

<dependency> <groupId>org.springframework.integration</groupId> <artifactId>spring-integration-java-dsl</artifactId> <version>${spring-integration-java-dsl.version}</version> </dependency>How do I override the boot version used in a particular app? When you generate the app, override the boot version as follows.

./mvnw clean install -PgenerateApps -DbootVersion=<boot version to override>

For example: ./mvnw clean install -PgenerateApps -DbootVersion=2.0.0.BUILD-SNAPSHOT

You can also override the boot version more permanently by overriding the following property in your starter pom.

<bootVersion>2.0.0.BUILD-SNAPSHOT</bootVersion>

This application polls a directory and sends new files or their contents to the output channel.

The file source provides the contents of a File as a byte array by default.

However, this can be customized using the --mode option:

- ref Provides a

java.io.Filereference - lines Will split files line-by-line and emit a new message for each line

- contents The default. Provides the contents of a file as a byte array

When using --mode=lines, you can also provide the additional option --withMarkers=true.

If set to true, the underlying FileSplitter will emit additional start-of-file and end-of-file marker messages before and after the actual data.

The payload of these 2 additional marker messages is of type FileSplitter.FileMarker. The option withMarkers defaults to false if not explicitly set.

Content-Type: application/octet-streamfile_originalFile: <java.io.File>file_name: <file name>

Content-Type: text/plainfile_originalFile: <java.io.File>file_name: <file name>correlationId: <UUID>(same for each line)sequenceNumber: <n>sequenceSize: 0(number of lines is not know until the file is read)

The file source has the following options:

- file.consumer.markers-json

- When 'fileMarkers == true', specify if they should be produced

as FileSplitter.FileMarker objects or JSON. (Boolean, default:

true) - file.consumer.mode

- The FileReadingMode to use for file reading sources.

Values are 'ref' - The File object,

'lines' - a message per line, or

'contents' - the contents as bytes. (FileReadingMode, default:

<none>, possible values:ref,lines,contents) - file.consumer.with-markers

- Set to true to emit start of file/end of file marker messages before/after the data.

Only valid with FileReadingMode 'lines'. (Boolean, default:

<none>) - file.directory

- The directory to poll for new files. (String, default:

<none>) - file.filename-pattern

- A simple ant pattern to match files. (String, default:

<none>) - file.filename-regex

- A regex pattern to match files. (Pattern, default:

<none>) - file.prevent-duplicates

- Set to true to include an AcceptOnceFileListFilter which prevents duplicates. (Boolean, default:

true) - trigger.cron

- Cron expression value for the Cron Trigger. (String, default:

<none>) - trigger.date-format

- Format for the date value. (String, default:

<none>) - trigger.fixed-delay

- Fixed delay for periodic triggers. (Integer, default:

1) - trigger.initial-delay

- Initial delay for periodic triggers. (Integer, default:

0) - trigger.max-messages

- Maximum messages per poll, -1 means infinity. (Long, default:

-1) - trigger.time-unit

- The TimeUnit to apply to delay values. (TimeUnit, default:

SECONDS, possible values:NANOSECONDS,MICROSECONDS,MILLISECONDS,SECONDS,MINUTES,HOURS,DAYS)

The ref option is useful in some cases in which the file contents are large and it would be more efficient to send the file path.

$ ./mvnw clean install -PgenerateApps $ cd apps You can find the corresponding binder based projects here. You can then cd into one of the folders and build it: $ ./mvnw clean package

This source application supports transfer of files using the FTP protocol.

Files are transferred from the remote directory to the local directory where the app is deployed.

Messages emitted by the source are provided as a byte array by default. However, this can be

customized using the --mode option:

- ref Provides a

java.io.Filereference - lines Will split files line-by-line and emit a new message for each line

- contents The default. Provides the contents of a file as a byte array

When using --mode=lines, you can also provide the additional option --withMarkers=true.

If set to true, the underlying FileSplitter will emit additional start-of-file and end-of-file marker messages before and after the actual data.

The payload of these 2 additional marker messages is of type FileSplitter.FileMarker. The option withMarkers defaults to false if not explicitly set.

Content-Type: application/octet-streamfile_orginalFile: <java.io.File>file_name: <file name>

Content-Type: text/plainfile_orginalFile: <java.io.File>file_name: <file name>correlationId: <UUID>(same for each line)sequenceNumber: <n>sequenceSize: 0(number of lines is not know until the file is read)

The ftp source has the following options:

- file.consumer.markers-json

- When 'fileMarkers == true', specify if they should be produced

as FileSplitter.FileMarker objects or JSON. (Boolean, default:

true) - file.consumer.mode

- The FileReadingMode to use for file reading sources.

Values are 'ref' - The File object,

'lines' - a message per line, or

'contents' - the contents as bytes. (FileReadingMode, default:

<none>, possible values:ref,lines,contents) - file.consumer.with-markers

- Set to true to emit start of file/end of file marker messages before/after the data.

Only valid with FileReadingMode 'lines'. (Boolean, default:

<none>) - ftp.auto-create-local-dir

- Set to true to create the local directory if it does not exist. (Boolean, default:

true) - ftp.delete-remote-files

- Set to true to delete remote files after successful transfer. (Boolean, default:

false) - ftp.factory.cache-sessions

- <documentation missing> (Boolean, default:

<none>) - ftp.factory.client-mode

- The client mode to use for the FTP session. (ClientMode, default:

<none>, possible values:ACTIVE,PASSIVE) - ftp.factory.host

- <documentation missing> (String, default:

<none>) - ftp.factory.password

- <documentation missing> (String, default:

<none>) - ftp.factory.port

- The port of the server. (Integer, default:

21) - ftp.factory.username

- <documentation missing> (String, default:

<none>) - ftp.filename-pattern

- A filter pattern to match the names of files to transfer. (String, default:

<none>) - ftp.filename-regex

- A filter regex pattern to match the names of files to transfer. (Pattern, default:

<none>) - ftp.local-dir

- The local directory to use for file transfers. (File, default:

<none>) - ftp.preserve-timestamp

- Set to true to preserve the original timestamp. (Boolean, default:

true) - ftp.remote-dir

- The remote FTP directory. (String, default:

/) - ftp.remote-file-separator

- The remote file separator. (String, default:

/) - ftp.tmp-file-suffix

- The suffix to use while the transfer is in progress. (String, default:

.tmp) - trigger.cron

- Cron expression value for the Cron Trigger. (String, default:

<none>) - trigger.date-format

- Format for the date value. (String, default:

<none>) - trigger.fixed-delay

- Fixed delay for periodic triggers. (Integer, default:

1) - trigger.initial-delay

- Initial delay for periodic triggers. (Integer, default:

0) - trigger.max-messages

- Maximum messages per poll, -1 means infinity. (Long, default:

-1) - trigger.time-unit

- The TimeUnit to apply to delay values. (TimeUnit, default:

SECONDS, possible values:NANOSECONDS,MICROSECONDS,MILLISECONDS,SECONDS,MINUTES,HOURS,DAYS)

$ ./mvnw clean install -PgenerateApps $ cd apps

You can find the corresponding binder based projects here. You can then cd into one one of the folders and build it:

$ ./mvnw clean package

This source allows you to subscribe to any creates or updates to a Gemfire region. The application configures a client cache and client region, along with the necessary subscriptions enabled. By default the payload contains the updated entry value, but may be controlled by passing in a SpEL expression that uses the EntryEvent as the evaluation context.

The gemfire source supports the following configuration properties:

- gemfire.cache-event-expression

- SpEL expression to extract fields from a cache event. (Expression, default:

<none>) - gemfire.pool.connect-type

- Specifies connection type: 'server' or 'locator'. (ConnectType, default:

<none>, possible values:locator,server) - gemfire.pool.host-addresses

- Specifies one or more Gemfire locator or server addresses formatted as [host]:[port]. (InetSocketAddress[], default:

<none>) - gemfire.pool.subscription-enabled

- Set to true to enable subscriptions for the client pool. Required to sync updates to the client cache. (Boolean, default:

false) - gemfire.region.region-name

- The region name. (String, default:

<none>) - gemfire.security.password

- The cache password. (String, default:

<none>) - gemfire.security.username

- The cache username. (String, default:

<none>)

$ ./mvnw clean install -PgenerateApps $ cd apps

You can find the corresponding binder based projects here. You can then cd into one of the folders and build it:

$ ./mvnw clean package

Continuous query allows client applications to create a GemFire query using Object Query Language (OQL) and to register a CQ listener which subscribes to the query and is notified every time the query’s result set changes. The gemfire-cq source registers a CQ which will post CQEvent messages to the stream.

The gemfire-cq source supports the following configuration properties:

- gemfire.cq-event-expression

- SpEL expression to use to extract data from a cq event. (Expression, default:

<none>) - gemfire.pool.connect-type

- Specifies connection type: 'server' or 'locator'. (ConnectType, default:

<none>, possible values:locator,server) - gemfire.pool.host-addresses

- Specifies one or more Gemfire locator or server addresses formatted as [host]:[port]. (InetSocketAddress[], default:

<none>) - gemfire.pool.subscription-enabled

- Set to true to enable subscriptions for the client pool. Required to sync updates to the client cache. (Boolean, default:

false) - gemfire.query

- The OQL query (String, default:

<none>) - gemfire.security.password

- The cache password. (String, default:

<none>) - gemfire.security.username

- The cache username. (String, default:

<none>)

$ ./mvnw clean install -PgenerateApps $ cd apps

You can find the corresponding binder based projects here. You can then cd into one of the folders and build it:

$ ./mvnw clean package

A source application that listens for HTTP requests and emits the body as a message payload.

If the Content-Type matches text/* or application/json, the payload will be a String,

otherwise the payload will be a byte array.

The http source supports the following configuration properties:

- http.cors.allow-credentials

- Whether the browser should include any cookies associated with the domain of the request being annotated. (Boolean, default:

<none>) - http.cors.allowed-headers

- List of request headers that can be used during the actual request. (String[], default:

<none>) - http.cors.allowed-origins

- List of allowed origins, e.g. "http://domain1.com". (String[], default:

<none>) - http.enable-csrf

- The security CSRF enabling flag. Makes sense only if 'enableSecurity = true'. (Boolean, default:

false) - http.enable-security

- The security enabling flag. (Boolean, default:

false) - http.mapped-request-headers

- Headers that will be mapped. (String[], default:

<none>) - http.path-pattern

- An Ant-Style pattern to determine which http requests will be captured. (String, default:

/) - server.port

- Server HTTP port. (Integer, default:

8080)

![[Note]](images/note.png) | Note |

|---|---|

Security is disabled for this application by default.

To enable it, you should use the mentioned above |

$ ./mvnw clean install -PgenerateApps $ cd apps

You can find the corresponding binder based projects here. You can then cd into one one of the folders and build it:

$ ./mvnw clean package

This source polls data from an RDBMS.

This source is fully based on the DataSourceAutoConfiguration, so refer to the

Spring Boot JDBC Support for more

information.

The jdbc source has the following options:

- jdbc.max-rows-per-poll

- Max numbers of rows to process for each poll. (Integer, default:

0) - jdbc.query

- The query to use to select data. (String, default:

<none>) - jdbc.split

- Whether to split the SQL result as individual messages. (Boolean, default:

true) - jdbc.update

- An SQL update statement to execute for marking polled messages as 'seen'. (String, default:

<none>) - spring.datasource.data

- Data (DML) script resource references. (List<String>, default:

<none>) - spring.datasource.driver-class-name

- Fully qualified name of the JDBC driver. Auto-detected based on the URL by default. (String, default:

<none>) - spring.datasource.initialization-mode

- Initialize the datasource using available DDL and DML scripts. (DataSourceInitializationMode, default:

embedded, possible values:ALWAYS,EMBEDDED,NEVER) - spring.datasource.password

- Login password of the database. (String, default:

<none>) - spring.datasource.schema

- Schema (DDL) script resource references. (List<String>, default:

<none>) - spring.datasource.url

- JDBC url of the database. (String, default:

<none>) - spring.datasource.username

- Login username of the database. (String, default:

<none>) - trigger.cron

- Cron expression value for the Cron Trigger. (String, default:

<none>) - trigger.date-format

- Format for the date value. (String, default:

<none>) - trigger.fixed-delay

- Fixed delay for periodic triggers. (Integer, default:

1) - trigger.initial-delay

- Initial delay for periodic triggers. (Integer, default:

0) - trigger.max-messages

- Maximum messages per poll, -1 means infinity. (Long, default:

1) - trigger.time-unit

- The TimeUnit to apply to delay values. (TimeUnit, default:

<none>, possible values:NANOSECONDS,MICROSECONDS,MILLISECONDS,SECONDS,MINUTES,HOURS,DAYS)

Also see the Spring Boot Documentation

for addition DataSource properties and TriggerProperties and MaxMessagesProperties for polling options.

$ ./mvnw clean install -PgenerateApps $ cd apps

You can find the corresponding binder based projects here. You can then cd into one one of the folders and build it:

$ ./mvnw clean package

The "jms" source enables receiving messages from JMS.

The jms source has the following options:

- jms.client-id

- Client id for durable subscriptions. (String, default:

<none>) - jms.destination

- The destination from which to receive messages (queue or topic). (String, default:

<none>) - jms.message-selector

- A selector for messages; (String, default:

<none>) - jms.session-transacted

- True to enable transactions and select a DefaultMessageListenerContainer, false to

select a SimpleMessageListenerContainer. (Boolean, default:

true) - jms.subscription-durable

- True for a durable subscription. (Boolean, default:

<none>) - jms.subscription-name

- The name of a durable or shared subscription. (String, default:

<none>) - jms.subscription-shared

- True for a shared subscription. (Boolean, default:

<none>) - spring.jms.jndi-name

- Connection factory JNDI name. When set, takes precedence to others connection

factory auto-configurations. (String, default:

<none>) - spring.jms.listener.acknowledge-mode

- Acknowledge mode of the container. By default, the listener is transacted with

automatic acknowledgment. (AcknowledgeMode, default:

<none>, possible values:AUTO,CLIENT,DUPS_OK) - spring.jms.listener.auto-startup

- Start the container automatically on startup. (Boolean, default:

true) - spring.jms.listener.concurrency

- Minimum number of concurrent consumers. (Integer, default:

<none>) - spring.jms.listener.max-concurrency

- Maximum number of concurrent consumers. (Integer, default:

<none>) - spring.jms.pub-sub-domain

- Whether the default destination type is topic. (Boolean, default:

false)

![[Note]](images/note.png) | Note |

|---|---|

Spring boot broker configuration is used; refer to the

Spring Boot Documentation for more information.

The |

$ ./mvnw clean install -PgenerateApps $ cd apps

You can find the corresponding binder based projects here. You can then cd into one of the folders and build it:

$ ./mvnw clean package

A source that sends generated data and dispatches it to the stream. This is to provide a method for users to identify the performance of Spring Cloud Data Flow in different environments and deployment types.

The load-generator source has the following options:

- load-generator.generate-timestamp

- <documentation missing> (Boolean, default:

false) - load-generator.message-count

- <documentation missing> (Integer, default:

1000) - load-generator.message-size

- <documentation missing> (Integer, default:

1000) - load-generator.producers

- <documentation missing> (Integer, default:

1)

$ ./mvnw clean install -PgenerateApps $ cd apps

You can find the corresponding binder based projects here. You can then cd into one one of the folders and build it:

$ ./mvnw clean package

A source module that listens for Emails and emits the message body as a message payload.

The mail source supports the following configuration properties:

- mail.charset

- The charset for byte[] mail-to-string transformation. (String, default:

UTF-8) - mail.delete

- Set to true to delete email after download. (Boolean, default:

false) - mail.expression

- Configure a SpEL expression to select messages. (String, default:

true) - mail.idle-imap

- Set to true to use IdleImap Configuration. (Boolean, default:

false) - mail.java-mail-properties

- JavaMail properties as a new line delimited string of name-value pairs, e.g.

'foo=bar\n baz=car'. (Properties, default:

<none>) - mail.mark-as-read

- Set to true to mark email as read. (Boolean, default:

false) - mail.url

- Mail connection URL for connection to Mail server e.g.

'imaps://username:[email protected]:993/Inbox'. (URLName, default:

<none>) - mail.user-flag

- The flag to mark messages when the server does not support \Recent (String, default:

<none>) - trigger.cron

- Cron expression value for the Cron Trigger. (String, default:

<none>) - trigger.date-format

- Format for the date value. (String, default:

<none>) - trigger.fixed-delay

- Fixed delay for periodic triggers. (Integer, default:

1) - trigger.initial-delay

- Initial delay for periodic triggers. (Integer, default:

0) - trigger.max-messages

- Maximum messages per poll, -1 means infinity. (Long, default:

1) - trigger.time-unit

- The TimeUnit to apply to delay values. (TimeUnit, default:

<none>, possible values:NANOSECONDS,MICROSECONDS,MILLISECONDS,SECONDS,MINUTES,HOURS,DAYS)

$ ./mvnw clean install -PgenerateApps $ cd apps

You can find the corresponding binder based projects here. You can then cd into one of the folders and build it:

$ ./mvnw clean package

This source polls data from MongoDB.

This source is fully based on the MongoDataAutoConfiguration, so refer to the

Spring Boot MongoDB Support

for more information.

The mongodb source has the following options:

- mongodb.collection

- The MongoDB collection to query (String, default:

<none>) - mongodb.query

- The MongoDB query (String, default:

{ }) - mongodb.query-expression

- The SpEL expression in MongoDB query DSL style (Expression, default:

<none>) - mongodb.split

- Whether to split the query result as individual messages. (Boolean, default:

true) - spring.data.mongodb.authentication-database

- Authentication database name. (String, default:

<none>) - spring.data.mongodb.database

- Database name. (String, default:

<none>) - spring.data.mongodb.field-naming-strategy

- Fully qualified name of the FieldNamingStrategy to use. (Class<?>, default:

<none>) - spring.data.mongodb.grid-fs-database

- GridFS database name. (String, default:

<none>) - spring.data.mongodb.host

- Mongo server host. Cannot be set with URI. (String, default:

<none>) - spring.data.mongodb.password

- Login password of the mongo server. Cannot be set with URI. (Character[], default:

<none>) - spring.data.mongodb.port

- Mongo server port. Cannot be set with URI. (Integer, default:

<none>) - spring.data.mongodb.uri

- Mongo database URI. Cannot be set with host, port and credentials. (String, default:

<none>) - spring.data.mongodb.username

- Login user of the mongo server. Cannot be set with URI. (String, default:

<none>) - trigger.cron

- Cron expression value for the Cron Trigger. (String, default:

<none>) - trigger.date-format

- Format for the date value. (String, default:

<none>) - trigger.fixed-delay

- Fixed delay for periodic triggers. (Integer, default:

1) - trigger.initial-delay

- Initial delay for periodic triggers. (Integer, default:

0) - trigger.max-messages

- Maximum messages per poll, -1 means infinity. (Long, default:

-1) - trigger.time-unit

- The TimeUnit to apply to delay values. (TimeUnit, default:

SECONDS, possible values:NANOSECONDS,MICROSECONDS,MILLISECONDS,SECONDS,MINUTES,HOURS,DAYS)

Also see the Spring Boot Documentation for additional MongoProperties properties.

See and TriggerProperties for polling options.

$ ./mvnw clean install -PgenerateApps $ cd apps

You can find the corresponding binder based projects here. You can then cd into one of the folders and build it:

$ ./mvnw clean package

The "mqtt" source enables receiving messages from MQTT.

The mqtt source has the following options:

- mqtt.binary

- true to leave the payload as bytes (Boolean, default:

false) - mqtt.charset

- the charset used to convert bytes to String (when binary is false) (String, default:

UTF-8) - mqtt.clean-session

- whether the client and server should remember state across restarts and reconnects (Boolean, default:

true) - mqtt.client-id

- identifies the client (String, default:

stream.client.id.source) - mqtt.connection-timeout

- the connection timeout in seconds (Integer, default:

30) - mqtt.keep-alive-interval

- the ping interval in seconds (Integer, default:

60) - mqtt.password

- the password to use when connecting to the broker (String, default:

guest) - mqtt.persistence

- 'memory' or 'file' (String, default:

memory) - mqtt.persistence-directory

- Persistence directory (String, default:

/tmp/paho) - mqtt.qos

- the qos; a single value for all topics or a comma-delimited list to match the topics (int[], default:

[0]) - mqtt.topics

- the topic(s) (comma-delimited) to which the source will subscribe (String[], default:

[stream.mqtt]) - mqtt.url

- location of the mqtt broker(s) (comma-delimited list) (String[], default:

[tcp://localhost:1883]) - mqtt.username

- the username to use when connecting to the broker (String, default:

guest)

$ ./mvnw clean install -PgenerateApps $ cd apps

You can find the corresponding binder based projects here. You can then cd into one one of the folders and build it:

$ ./mvnw clean package

The "rabbit" source enables receiving messages from RabbitMQ.

The queue(s) must exist before the stream is deployed; they are not created automatically. You can easily create a Queue using the RabbitMQ web UI.

The rabbit source has the following options:

- rabbit.enable-retry

- true to enable retry. (Boolean, default:

false) - rabbit.initial-retry-interval

- Initial retry interval when retry is enabled. (Integer, default:

1000) - rabbit.mapped-request-headers

- Headers that will be mapped. (String[], default:

[STANDARD_REQUEST_HEADERS]) - rabbit.max-attempts

- The maximum delivery attempts when retry is enabled. (Integer, default:

3) - rabbit.max-retry-interval

- Max retry interval when retry is enabled. (Integer, default:

30000) - rabbit.own-connection

- When true, use a separate connection based on the boot properties. (Boolean, default:

false) - rabbit.queues

- The queues to which the source will listen for messages. (String[], default:

<none>) - rabbit.requeue

- Whether rejected messages should be requeued. (Boolean, default:

true) - rabbit.retry-multiplier

- Retry backoff multiplier when retry is enabled. (Double, default:

2) - rabbit.transacted

- Whether the channel is transacted. (Boolean, default:

false) - spring.rabbitmq.addresses

- Comma-separated list of addresses to which the client should connect. (String, default:

<none>) - spring.rabbitmq.connection-timeout

- Connection timeout. Set it to zero to wait forever. (Duration, default:

<none>) - spring.rabbitmq.host

- RabbitMQ host. (String, default:

localhost) - spring.rabbitmq.password

- Login to authenticate against the broker. (String, default:

guest) - spring.rabbitmq.port

- RabbitMQ port. (Integer, default:

5672) - spring.rabbitmq.publisher-confirms

- Whether to enable publisher confirms. (Boolean, default:

false) - spring.rabbitmq.publisher-returns

- Whether to enable publisher returns. (Boolean, default:

false) - spring.rabbitmq.requested-heartbeat

- Requested heartbeat timeout; zero for none. If a duration suffix is not specified,

seconds will be used. (Duration, default:

<none>) - spring.rabbitmq.username

- Login user to authenticate to the broker. (String, default:

guest) - spring.rabbitmq.virtual-host

- Virtual host to use when connecting to the broker. (String, default:

<none>)

Also see the Spring Boot Documentation for addition properties for the broker connections and listener properties.

![[Note]](images/note.png) | Note |

|---|---|

With the default ackMode (AUTO) and requeue (true) options, failed message deliveries will be retried

indefinitely.

Since there is not much processing in the rabbit source, the risk of failure in the source itself is small, unless

the downstream |

$ ./mvnw clean install -PgenerateApps $ cd apps

You can find the corresponding binder based projects here. You can then cd into one of the folders and build it:

$ ./mvnw clean package

This source app supports transfer of files using the Amazon S3 protocol.

Files are transferred from the remote directory (S3 bucket) to the local directory where the application is deployed.

Messages emitted by the source are provided as a byte array by default. However, this can be

customized using the --mode option:

- ref Provides a

java.io.Filereference - lines Will split files line-by-line and emit a new message for each line

- contents The default. Provides the contents of a file as a byte array

When using --mode=lines, you can also provide the additional option --withMarkers=true.

If set to true, the underlying FileSplitter will emit additional start-of-file and end-of-file marker messages before and after the actual data.

The payload of these 2 additional marker messages is of type FileSplitter.FileMarker. The option withMarkers defaults to false if not explicitly set.

Content-Type: application/octet-streamfile_orginalFile: <java.io.File>file_name: <file name>

Content-Type: text/plainfile_orginalFile: <java.io.File>file_name: <file name>correlationId: <UUID>(same for each line)sequenceNumber: <n>sequenceSize: 0(number of lines is not know until the file is read)

The s3 source has the following options:

- file.consumer.markers-json

- When 'fileMarkers == true', specify if they should be produced

as FileSplitter.FileMarker objects or JSON. (Boolean, default:

true) - file.consumer.mode

- The FileReadingMode to use for file reading sources.

Values are 'ref' - The File object,

'lines' - a message per line, or

'contents' - the contents as bytes. (FileReadingMode, default:

<none>, possible values:ref,lines,contents) - file.consumer.with-markers

- Set to true to emit start of file/end of file marker messages before/after the data.

Only valid with FileReadingMode 'lines'. (Boolean, default:

<none>) - s3.auto-create-local-dir

- Create or not the local directory. (Boolean, default:

true) - s3.delete-remote-files

- Delete or not remote files after processing. (Boolean, default:

false) - s3.filename-pattern

- The pattern to filter remote files. (String, default:

<none>) - s3.filename-regex

- The regexp to filter remote files. (Pattern, default:

<none>) - s3.local-dir

- The local directory to store files. (File, default:

<none>) - s3.preserve-timestamp

- To transfer or not the timestamp of the remote file to the local one. (Boolean, default:

true) - s3.remote-dir

- AWS S3 bucket resource. (String, default:

bucket) - s3.remote-file-separator

- Remote File separator. (String, default:

/) - s3.tmp-file-suffix

- Temporary file suffix. (String, default:

.tmp) - trigger.cron

- Cron expression value for the Cron Trigger. (String, default:

<none>) - trigger.date-format

- Format for the date value. (String, default:

<none>) - trigger.fixed-delay

- Fixed delay for periodic triggers. (Integer, default:

1) - trigger.initial-delay

- Initial delay for periodic triggers. (Integer, default:

0) - trigger.max-messages

- Maximum messages per poll, -1 means infinity. (Long, default:

-1) - trigger.time-unit

- The TimeUnit to apply to delay values. (TimeUnit, default:

SECONDS, possible values:NANOSECONDS,MICROSECONDS,MILLISECONDS,SECONDS,MINUTES,HOURS,DAYS)

The Amazon S3 Source (as all other Amazon AWS applications) is based on the Spring Cloud AWS project as a foundation, and its auto-configuration classes are used automatically by Spring Boot. Consult their documentation regarding required and useful auto-configuration properties.

Some of them are about AWS credentials:

- cloud.aws.credentials.accessKey

- cloud.aws.credentials.secretKey

- cloud.aws.credentials.instanceProfile

- cloud.aws.credentials.profileName

- cloud.aws.credentials.profilePath

Other are for AWS Region definition:

- cloud.aws.region.auto

- cloud.aws.region.static

And for AWS Stack:

- cloud.aws.stack.auto

- cloud.aws.stack.name

$ ./mvnw clean install -PgenerateApps $ cd apps

You can find the corresponding binder based projects here. You can then cd into one one of the folders and build it:

$ ./mvnw clean package

This source app supports transfer of files using the SFTP protocol.

Files are transferred from the remote directory to the local directory where the application is deployed.

Messages emitted by the source are provided as a byte array by default. However, this can be

customized using the --mode option:

- ref Provides a

java.io.Filereference - lines Will split files line-by-line and emit a new message for each line

- contents The default. Provides the contents of a file as a byte array

When using --mode=lines, you can also provide the additional option --withMarkers=true.

If set to true, the underlying FileSplitter will emit additional start-of-file and end-of-file marker messages before and after the actual data.

The payload of these 2 additional marker messages is of type FileSplitter.FileMarker. The option withMarkers defaults to false if not explicitly set.

When configuring the sftp.factory.known-hosts-expression option, the root object of the evaluation is the application context, an example might be sftp.factory.known-hosts-expression = @systemProperties['user.home'] + '/.ssh/known_hosts'.

Content-Type: application/octet-streamfile_originalFile: <java.io.File>file_name: <file name>

Content-Type: text/plainfile_originalFile: <java.io.File>file_name: <file name>correlationId: <UUID>(same for each line)sequenceNumber: <n>sequenceSize: 0(number of lines is not know until the file is read)

The sftp source has the following options:

- file.consumer.markers-json

- When 'fileMarkers == true', specify if they should be produced

as FileSplitter.FileMarker objects or JSON. (Boolean, default:

true) - file.consumer.mode

- The FileReadingMode to use for file reading sources.

Values are 'ref' - The File object,

'lines' - a message per line, or

'contents' - the contents as bytes. (FileReadingMode, default:

<none>, possible values:ref,lines,contents) - file.consumer.with-markers

- Set to true to emit start of file/end of file marker messages before/after the data.

Only valid with FileReadingMode 'lines'. (Boolean, default:

<none>) - sftp.auto-create-local-dir

- Set to true to create the local directory if it does not exist. (Boolean, default:

true) - sftp.batch.batch-resource-uri

- The URI of the batch artifact to be applied to the TaskLaunchRequest. (String, default:

<empty string>) - sftp.batch.data-source-password

- The datasource password to be applied to the TaskLaunchRequest. (String, default:

<none>) - sftp.batch.data-source-url

- The datasource url to be applied to the TaskLaunchRequest. Defaults to h2 in-memory

JDBC datasource url. (String, default:

jdbc:h2:tcp://localhost:19092/mem:dataflow) - sftp.batch.data-source-user-name

- The datasource user name to be applied to the TaskLaunchRequest. Defaults to "sa" (String, default:

sa) - sftp.batch.deployment-properties

- Comma delimited list of deployment properties to be applied to the

TaskLaunchRequest. (String, default:

<none>) - sftp.batch.environment-properties

- Comma delimited list of environment properties to be applied to the

TaskLaunchRequest. (String, default:

<none>) - sftp.batch.job-parameters

- Comma separated list of optional job parameters in key=value format. (List<String>, default:

<none>) - sftp.batch.local-file-path-job-parameter-name

- Value to use as the local file job parameter name. Defaults to "localFilePath". (String, default:

localFilePath) - sftp.batch.local-file-path-job-parameter-value

- The file path to use as the local file job parameter value. Defaults to "java.io.tmpdir". (String, default:

<none>) - sftp.batch.remote-file-path-job-parameter-name

- Value to use as the remote file job parameter name. Defaults to "remoteFilePath". (String, default:

remoteFilePath) - sftp.delete-remote-files

- Set to true to delete remote files after successful transfer. (Boolean, default:

false) - sftp.factory.allow-unknown-keys

- True to allow an unknown or changed key. (Boolean, default:

false) - sftp.factory.cache-sessions

- Cache sessions (Boolean, default:

<none>) - sftp.factory.host

- The host name of the server. (String, default:

localhost) - sftp.factory.known-hosts-expression

- A SpEL expression resolving to the location of the known hosts file. (Expression, default:

<none>) - sftp.factory.pass-phrase

- Passphrase for user's private key. (String, default:

<empty string>) - sftp.factory.password

- The password to use to connect to the server. (String, default:

<none>) - sftp.factory.port

- The port of the server. (Integer, default:

22) - sftp.factory.private-key

- Resource location of user's private key. (String, default:

<empty string>) - sftp.factory.username

- The username to use to connect to the server. (String, default:

<none>) - sftp.filename-pattern

- A filter pattern to match the names of files to transfer. (String, default:

<none>) - sftp.filename-regex

- A filter regex pattern to match the names of files to transfer. (Pattern, default:

<none>) - sftp.list-only

- Set to true to return file metadata without the entire payload. (Boolean, default:

false) - sftp.local-dir

- The local directory to use for file transfers. (File, default:

<none>) - sftp.metadata.redis.key-name

- The key name to use when storing file metadata. Defaults to "sftpSource". (String, default:

sftpSource) - sftp.preserve-timestamp

- Set to true to preserve the original timestamp. (Boolean, default:

true) - sftp.remote-dir

- The remote FTP directory. (String, default:

/) - sftp.remote-file-separator

- The remote file separator. (String, default:

/) - sftp.stream

- Set to true to stream the file rather than copy to a local directory. (Boolean, default:

false) - sftp.task-launcher-output

- Set to true to create output suitable for a task launch request. (Boolean, default:

false) - sftp.tmp-file-suffix

- The suffix to use while the transfer is in progress. (String, default:

.tmp) - trigger.cron

- Cron expression value for the Cron Trigger. (String, default:

<none>) - trigger.date-format

- Format for the date value. (String, default:

<none>) - trigger.fixed-delay

- Fixed delay for periodic triggers. (Integer, default:

1) - trigger.initial-delay

- Initial delay for periodic triggers. (Integer, default:

0) - trigger.max-messages

- Maximum messages per poll, -1 means infinity. (Long, default:

-1) - trigger.time-unit

- The TimeUnit to apply to delay values. (TimeUnit, default:

SECONDS, possible values:NANOSECONDS,MICROSECONDS,MILLISECONDS,SECONDS,MINUTES,HOURS,DAYS)

$ ./mvnw clean install -PgenerateApps $ cd apps

You can find the corresponding binder based projects here. You can then cd into one one of the folders and build it:

$ ./mvnw clean package

The syslog source receives SYSLOG packets over UDP, TCP, or both. RFC3164 (BSD) and RFC5424 formats are supported.

The syslog source has the following options:

- syslog.buffer-size

- the buffer size used when decoding messages; larger messages will be rejected. (Integer, default:

2048) - syslog.nio

- whether or not to use NIO (when supporting a large number of connections). (Boolean, default:

false) - syslog.port

- The port to listen on. (Integer, default:

1514) - syslog.protocol

- tcp or udp (String, default:

tcp) - syslog.reverse-lookup

- whether or not to perform a reverse lookup on the incoming socket. (Boolean, default:

false) - syslog.rfc

- '5424' or '3164' - the syslog format according the the RFC; 3164 is aka 'BSD' format. (String, default:

3164) - syslog.socket-timeout

- the socket timeout. (Integer, default:

0)

$ ./mvnw clean install -PgenerateApps $ cd apps

You can find the corresponding binder based projects here. You can then cd into one of the folders and build it:

$ ./mvnw clean package

The tcp source acts as a server and allows a remote party to connect to it and submit data over a raw tcp socket.

TCP is a streaming protocol and some mechanism is needed to frame messages on the wire. A number of decoders are available, the default being 'CRLF' which is compatible with Telnet.

Messages produced by the TCP source application have a byte[] payload.

- tcp.buffer-size

- The buffer size used when decoding messages; larger messages will be rejected. (Integer, default:

2048) - tcp.decoder

- The decoder to use when receiving messages. (Encoding, default:

<none>, possible values:CRLF,LF,NULL,STXETX,RAW,L1,L2,L4) - tcp.nio

- Whether or not to use NIO. (Boolean, default:

false) - tcp.port

- The port on which to listen; 0 for the OS to choose a port. (Integer, default:

1234) - tcp.reverse-lookup

- Perform a reverse DNS lookup on the remote IP Address; if false,

just the IP address is included in the message headers. (Boolean, default:

false) - tcp.socket-timeout

- The timeout (ms) before closing the socket when no data is received. (Integer, default:

120000) - tcp.use-direct-buffers

- Whether or not to use direct buffers. (Boolean, default:

false)

Text Data

- CRLF (default)

- text terminated by carriage return (0x0d) followed by line feed (0x0a)

- LF

- text terminated by line feed (0x0a)

- NULL

- text terminated by a null byte (0x00)

- STXETX

- text preceded by an STX (0x02) and terminated by an ETX (0x03)

Text and Binary Data

- RAW

- no structure - the client indicates a complete message by closing the socket

- L1

- data preceded by a one byte (unsigned) length field (supports up to 255 bytes)

- L2

- data preceded by a two byte (unsigned) length field (up to 216-1 bytes)

- L4

- data preceded by a four byte (signed) length field (up to 231-1 bytes)

$ ./mvnw clean install -PgenerateApps $ cd apps

You can find the corresponding binder based projects here. You can then cd into one one of the folders and build it:

$ ./mvnw clean package

The tcp-client source has the following options:

- tcp.buffer-size

- The buffer size used when decoding messages; larger messages will be rejected. (Integer, default:

2048) - tcp.charset

- The charset used when converting from bytes to String. (String, default:

UTF-8) - tcp.decoder

- The decoder to use when receiving messages. (Encoding, default:

<none>, possible values:CRLF,LF,NULL,STXETX,RAW,L1,L2,L4) - tcp.host

- The host to which this client will connect. (String, default:

localhost) - tcp.nio

- Whether or not to use NIO. (Boolean, default:

false) - tcp.port

- The port on which to listen; 0 for the OS to choose a port. (Integer, default:

1234) - tcp.retry-interval

- Retry interval (in milliseconds) to check the connection and reconnect. (Long, default:

60000) - tcp.reverse-lookup

- Perform a reverse DNS lookup on the remote IP Address; if false,

just the IP address is included in the message headers. (Boolean, default:

false) - tcp.socket-timeout

- The timeout (ms) before closing the socket when no data is received. (Integer, default:

120000) - tcp.use-direct-buffers

- Whether or not to use direct buffers. (Boolean, default:

false)

$ ./mvnw clean install -PgenerateApps $ cd apps

You can find the corresponding binder based projects here. You can then cd into one one of the folders and build it:

$ ./mvnw clean package

The time source will simply emit a String with the current time every so often.

The time source has the following options:

- trigger.cron

- Cron expression value for the Cron Trigger. (String, default:

<none>) - trigger.date-format

- Format for the date value. (String, default:

<none>) - trigger.fixed-delay

- Fixed delay for periodic triggers. (Integer, default:

1) - trigger.initial-delay

- Initial delay for periodic triggers. (Integer, default:

0) - trigger.max-messages

- Maximum messages per poll, -1 means infinity. (Long, default:

1) - trigger.time-unit

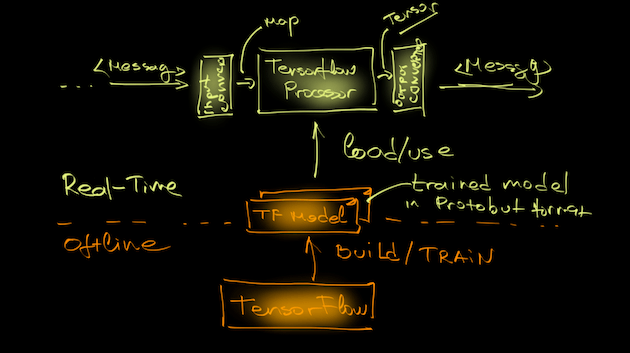

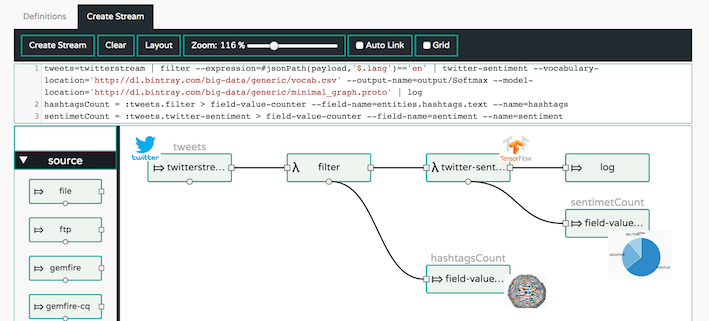

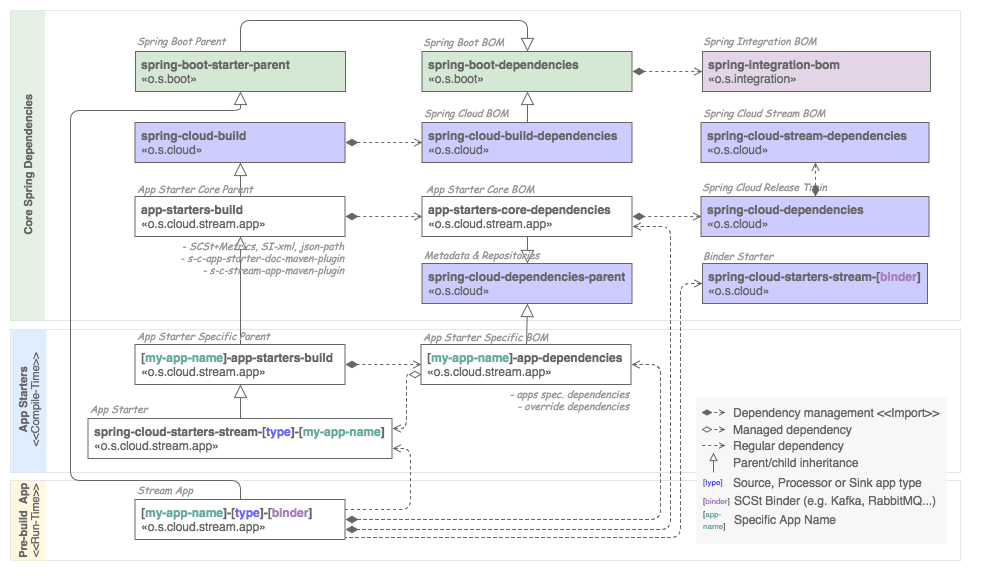

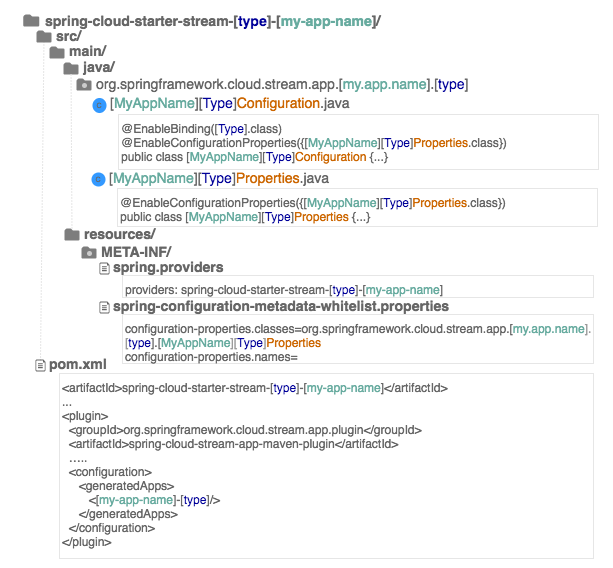

- The TimeUnit to apply to delay values. (TimeUnit, default: