3.2.10

Preface

A Brief History of Spring’s Data Integration Journey

Spring’s journey on Data Integration started with Spring Integration. With its programming model, it provided a consistent developer experience to build applications that can embrace Enterprise Integration Patterns to connect with external systems such as, databases, message brokers, and among others.

Fast forward to the cloud-era, where microservices have become prominent in the enterprise setting. Spring Boot transformed the way how developers built Applications. With Spring’s programming model and the runtime responsibilities handled by Spring Boot, it became seamless to develop stand-alone, production-grade Spring-based microservices.

To extend this to Data Integration workloads, Spring Integration and Spring Boot were put together into a new project. Spring Cloud Stream was born.

With Spring Cloud Stream, developers can:

-

Build, test and deploy data-centric applications in isolation.

-

Apply modern microservices architecture patterns, including composition through messaging.

-

Decouple application responsibilities with event-centric thinking. An event can represent something that has happened in time, to which the downstream consumer applications can react without knowing where it originated or the producer’s identity.

-

Port the business logic onto message brokers (such as RabbitMQ, Apache Kafka, Amazon Kinesis).

-

Rely on the framework’s automatic content-type support for common use-cases. Extending to different data conversion types is possible.

-

and many more. . .

Quick Start

You can try Spring Cloud Stream in less than 5 min even before you jump into any details by following this three-step guide.

We show you how to create a Spring Cloud Stream application that receives messages coming from the messaging middleware of your choice (more on this later) and logs received messages to the console.

We call it LoggingConsumer.

While not very practical, it provides a good introduction to some of the main concepts

and abstractions, making it easier to digest the rest of this user guide.

The three steps are as follows:

Creating a Sample Application by Using Spring Initializr

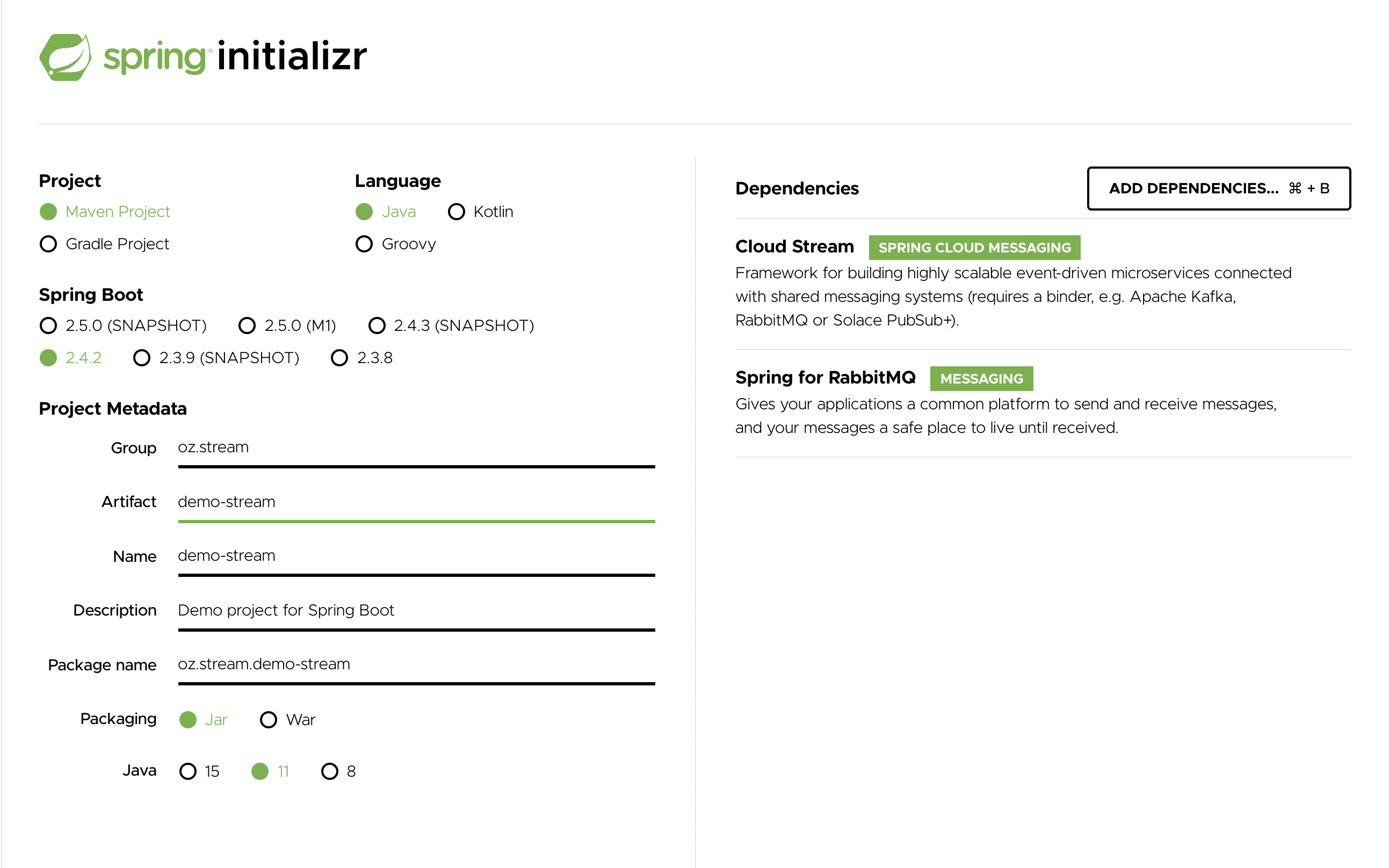

To get started, visit the Spring Initializr. From there, you can generate our LoggingConsumer application. To do so:

-

In the Dependencies section, start typing

stream. When the “Cloud Stream” option should appears, select it. -

Start typing either 'kafka' or 'rabbit'.

-

Select “Kafka” or “RabbitMQ”.

Basically, you choose the messaging middleware to which your application binds. We recommend using the one you have already installed or feel more comfortable with installing and running. Also, as you can see from the Initilaizer screen, there are a few other options you can choose. For example, you can choose Gradle as your build tool instead of Maven (the default).

-

In the Artifact field, type 'logging-consumer'.

The value of the Artifact field becomes the application name. If you chose RabbitMQ for the middleware, your Spring Initializr should now be as follows:

-

Click the Generate Project button.

Doing so downloads the zipped version of the generated project to your hard drive.

-

Unzip the file into the folder you want to use as your project directory.

| We encourage you to explore the many possibilities available in the Spring Initializr. It lets you create many different kinds of Spring applications. |

Importing the Project into Your IDE

Now you can import the project into your IDE. Keep in mind that, depending on the IDE, you may need to follow a specific import procedure. For example, depending on how the project was generated (Maven or Gradle), you may need to follow specific import procedure (for example, in Eclipse or STS, you need to use File → Import → Maven → Existing Maven Project).

Once imported, the project must have no errors of any kind. Also, src/main/java should contain com.example.loggingconsumer.LoggingConsumerApplication.

Technically, at this point, you can run the application’s main class. It is already a valid Spring Boot application. However, it does not do anything, so we want to add some code.

Adding a Message Handler, Building, and Running

Modify the com.example.loggingconsumer.LoggingConsumerApplication class to look as follows:

@SpringBootApplication

public class LoggingConsumerApplication {

public static void main(String[] args) {

SpringApplication.run(LoggingConsumerApplication.class, args);

}

@Bean

public Consumer<Person> log() {

return person -> {

System.out.println("Received: " + person);

};

}

public static class Person {

private String name;

public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

public String toString() {

return this.name;

}

}

}

As you can see from the preceding listing:

-

We are using functional programming model (see Spring Cloud Function support) to define a single message handler as

Consumer. -

We are relying on framework conventions to bind such handler to the input destination binding exposed by the binder.

Doing so also lets you see one of the core features of the framework: It tries to automatically convert incoming message payloads to type Person.

You now have a fully functional Spring Cloud Stream application that does listens for messages.

From here, for simplicity, we assume you selected RabbitMQ in step one.

Assuming you have RabbitMQ installed and running, you can start the application by running its main method in your IDE.

You should see following output:

--- [ main] c.s.b.r.p.RabbitExchangeQueueProvisioner : declaring queue for inbound: input.anonymous.CbMIwdkJSBO1ZoPDOtHtCg, bound to: input

--- [ main] o.s.a.r.c.CachingConnectionFactory : Attempting to connect to: [localhost:5672]

--- [ main] o.s.a.r.c.CachingConnectionFactory : Created new connection: rabbitConnectionFactory#2a3a299:0/SimpleConnection@66c83fc8. . .

. . .

--- [ main] o.s.i.a.i.AmqpInboundChannelAdapter : started inbound.input.anonymous.CbMIwdkJSBO1ZoPDOtHtCg

. . .

--- [ main] c.e.l.LoggingConsumerApplication : Started LoggingConsumerApplication in 2.531 seconds (JVM running for 2.897)Go to the RabbitMQ management console or any other RabbitMQ client and send a message to input.anonymous.CbMIwdkJSBO1ZoPDOtHtCg.

The anonymous.CbMIwdkJSBO1ZoPDOtHtCg part represents the group name and is generated, so it is bound to be different in your environment.

For something more predictable, you can use an explicit group name by setting spring.cloud.stream.bindings.input.group=hello (or whatever name you like).

The contents of the message should be a JSON representation of the Person class, as follows:

{"name":"Sam Spade"}

Then, in your console, you should see:

Received: Sam Spade

You can also build and package your application into a boot jar (by using ./mvnw clean install) and run the built JAR by using the java -jar command.

Now you have a working (albeit very basic) Spring Cloud Stream application.

Notable Deprecations

-

Annotation-based programming model. Basically the @EnableBInding, @StreamListener and all related annotations are now deprecated in favor of the functional programming model. See Spring Cloud Function support for more details.

-

Reactive module (

spring-cloud-stream-reactive) is discontinued and no longer distributed in favor of native support via spring-cloud-function. For backward compatibility you can still bringspring-cloud-stream-reactivefrom previous versions. -

Test support binder

spring-cloud-stream-test-supportwith MessageCollector in favor of a new test binder. See Testing for more details. -

@StreamMessageConverter - deprecated as it is no longer required.

-

The

original-content-typeheader references have been removed after it’s been deprecated in v2.0. -

The

BinderAwareChannelResolveris deprecated in favor if providingspring.cloud.stream.sendto.destinationproperty. This is primarily for function-based programming model. For StreamListener it would still be required and thus will stay until we deprecate and eventually discontinue StreamListener and annotation-based programming model.

Spring Expression Language (SpEL) in the context of Streaming data

Throughout this reference manual you will encounter many features and examples where you can utilize Spring Expression Language (SpEL). It is important to understand certain limitations when it comes to using it.

SpEL gives you access to the current Message as well as the Application Context you are running in.

However it is important to understand what type of data SpEL can see especially in the context of the incoming Message.

From the broker, the message arrives in a form of a byte[]. It is then transformed to a Message<byte[]> by the binders where as you can see the payload of the message maintains its raw form. The headers of the message are <String, Object>, where values are typically another primitive or a collection/array of primitives, hence Object.

That is because binder does not know the required input type as it has no access to the user code (function). So effectively binder delivered an envelope with the payload and some readable meta-data in the form of message headers, just like the letter delivered by mail.

This means that while accessing payload of the message is possible you will only have access to it as raw data (i.e., byte[]). And while it may be very common for developers to ask for ability to have SpEL access to fields of a payload object as concrete type (e.g., Foo, Bar etc), you can see how difficult or even impossible would it be to achieve.

Here is one example to demonstrate the problem; Imagine you have a routing expression to route to different functions based on payload type. This requirement would imply payload conversion from byte[] to a specific type and then applying the SpEL. However, in order to perform such conversion we would need to know the actual type to pass to converter and that comes from function’s signature which we don’t know which one. A better approach to solve this requirement would be to pass the type information as message headers (e.g., application/json;type=foo.bar.Baz ). You’ll get a clear readable String value that could be accessed and evaluated in a year and easy to read SpEL expression.

Additionally it is considered very bad practice to use payload for routing decisions, since the payload is considered to be privileged data - data only to be read by its final recipient. Again, using the mail delivery analogy you would not want the mailman to open your envelope and read the contents of the letter to make some delivery decisions. The same concept applies here, especially when it is relatively easy to include such information when generating a Message. It enforces certain level of discipline related to the design of data to be transmitted over the network and which pieces of such data can be considered as public and which are privileged.

Introducing Spring Cloud Stream

Spring Cloud Stream is a framework for building message-driven microservice applications. Spring Cloud Stream builds upon Spring Boot to create standalone, production-grade Spring applications and uses Spring Integration to provide connectivity to message brokers. It provides opinionated configuration of middleware from several vendors, introducing the concepts of persistent publish-subscribe semantics, consumer groups, and partitions.

By adding spring-cloud-stream dependencies to the classpath of your application, you get immediate connectivity

to a message broker exposed by the provided spring-cloud-stream binder (more on that later), and you can implement your functional

requirement, which is run (based on the incoming message) by a java.util.function.Function.

The following listing shows a quick example:

@SpringBootApplication

public class SampleApplication {

public static void main(String[] args) {

SpringApplication.run(SampleApplication.class, args);

}

@Bean

public Function<String, String> uppercase() {

return value -> value.toUpperCase();

}

}

The following listing shows the corresponding test:

@SpringBootTest(classes = SampleApplication.class)

@Import({TestChannelBinderConfiguration.class})

class BootTestStreamApplicationTests {

@Autowired

private InputDestination input;

@Autowired

private OutputDestination output;

@Test

void contextLoads() {

input.send(new GenericMessage<byte[]>("hello".getBytes()));

assertThat(output.receive().getPayload()).isEqualTo("HELLO".getBytes());

}

}

Main Concepts

Spring Cloud Stream provides a number of abstractions and primitives that simplify the writing of message-driven microservice applications. This section gives an overview of the following:

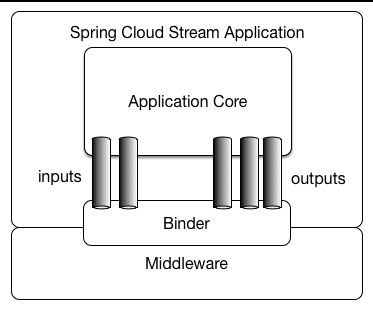

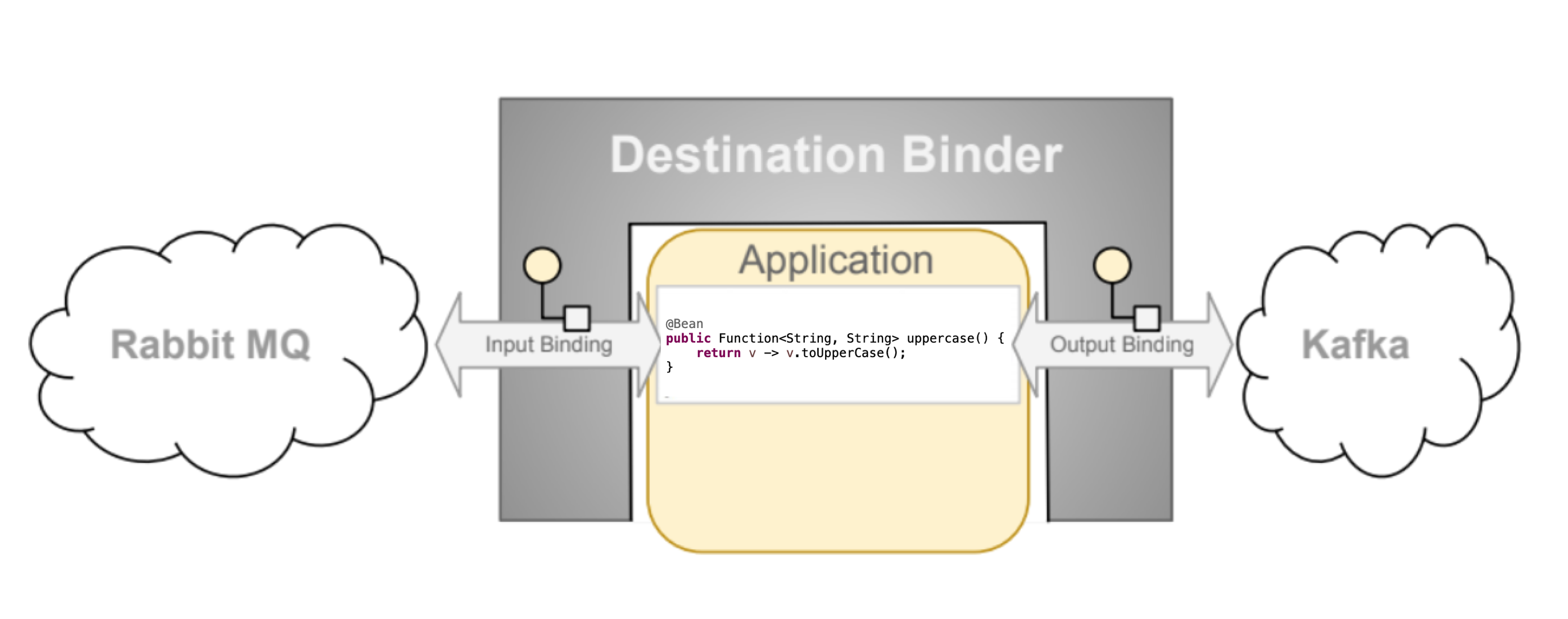

Application Model

A Spring Cloud Stream application consists of a middleware-neutral core. The application communicates with the outside world by establishing bindings between destinations exposed by the external brokers and input/output arguments in your code. Broker specific details necessary to establish bindings are handled by middleware-specific Binder implementations.

Fat JAR

Spring Cloud Stream applications can be run in stand-alone mode from your IDE for testing. To run a Spring Cloud Stream application in production, you can create an executable (or “fat”) JAR by using the standard Spring Boot tooling provided for Maven or Gradle. See the Spring Boot Reference Guide for more details.

The Binder Abstraction

Spring Cloud Stream provides Binder implementations for Kafka and Rabbit MQ. The framework also includes a test binder for integration testing of your applications as spring-cloud-stream application. See Testing section for more details.

Binder abstraction is also one of the extension points of the framework, which means you can implement your own binder on top of Spring Cloud Stream.

In the How to create a Spring Cloud Stream Binder from scratch post a community member documents

in details, with an example, a set of steps necessary to implement a custom binder.

The steps are also highlighted in the Implementing Custom Binders section.

Spring Cloud Stream uses Spring Boot for configuration, and the Binder abstraction makes it possible for a Spring Cloud Stream application to be flexible in how it connects to middleware.

For example, deployers can dynamically choose, at runtime, the mapping between the external destinations (such as the Kafka topics or RabbitMQ exchanges) and inputs

and outputs of the message handler (such as input parameter of the function and its return argument).

Such configuration can be provided through external configuration properties and in any form supported by Spring Boot (including application arguments, environment variables, and application.yml or application.properties files).

In the sink example from the Introducing Spring Cloud Stream section, setting the spring.cloud.stream.bindings.input.destination application property to raw-sensor-data causes it to read from the raw-sensor-data Kafka topic or from a queue bound to the raw-sensor-data RabbitMQ exchange.

Spring Cloud Stream automatically detects and uses a binder found on the classpath. You can use different types of middleware with the same code. To do so, include a different binder at build time. For more complex use cases, you can also package multiple binders with your application and have it choose the binder( and even whether to use different binders for different bindings) at runtime.

Persistent Publish-Subscribe Support

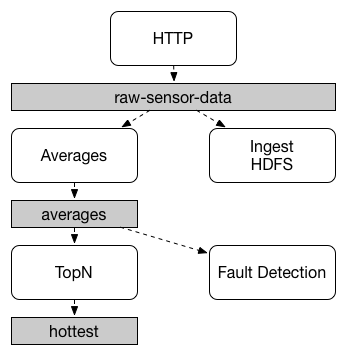

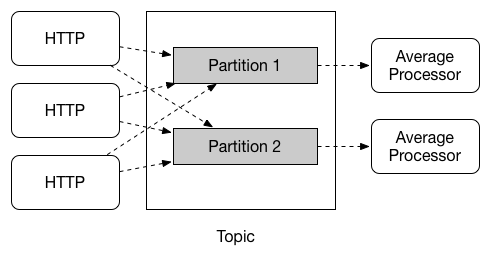

Communication between applications follows a publish-subscribe model, where data is broadcast through shared topics. This can be seen in the following figure, which shows a typical deployment for a set of interacting Spring Cloud Stream applications.

Data reported by sensors to an HTTP endpoint is sent to a common destination named raw-sensor-data.

From the destination, it is independently processed by a microservice application that computes time-windowed averages and by another microservice application that ingests the raw data into HDFS (Hadoop Distributed File System).

In order to process the data, both applications declare the topic as their input at runtime.

The publish-subscribe communication model reduces the complexity of both the producer and the consumer and lets new applications be added to the topology without disruption of the existing flow. For example, downstream from the average-calculating application, you can add an application that calculates the highest temperature values for display and monitoring. You can then add another application that interprets the same flow of averages for fault detection. Doing all communication through shared topics rather than point-to-point queues reduces coupling between microservices.

While the concept of publish-subscribe messaging is not new, Spring Cloud Stream takes the extra step of making it an opinionated choice for its application model. By using native middleware support, Spring Cloud Stream also simplifies use of the publish-subscribe model across different platforms.

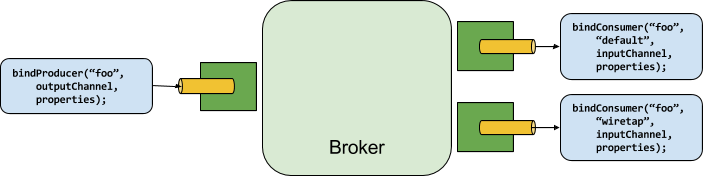

Consumer Groups

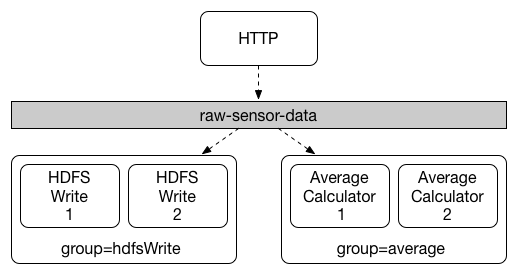

While the publish-subscribe model makes it easy to connect applications through shared topics, the ability to scale up by creating multiple instances of a given application is equally important. When doing so, different instances of an application are placed in a competing consumer relationship, where only one of the instances is expected to handle a given message.

Spring Cloud Stream models this behavior through the concept of a consumer group.

(Spring Cloud Stream consumer groups are similar to and inspired by Kafka consumer groups.)

Each consumer binding can use the spring.cloud.stream.bindings.<bindingName>.group property to specify a group name.

For the consumers shown in the following figure, this property would be set as spring.cloud.stream.bindings.<bindingName>.group=hdfsWrite or spring.cloud.stream.bindings.<bindingName>.group=average.

All groups that subscribe to a given destination receive a copy of published data, but only one member of each group receives a given message from that destination. By default, when a group is not specified, Spring Cloud Stream assigns the application to an anonymous and independent single-member consumer group that is in a publish-subscribe relationship with all other consumer groups.

Consumer Types

Two types of consumer are supported:

-

Message-driven (sometimes referred to as Asynchronous)

-

Polled (sometimes referred to as Synchronous)

Prior to version 2.0, only asynchronous consumers were supported. A message is delivered as soon as it is available and a thread is available to process it.

When you wish to control the rate at which messages are processed, you might want to use a synchronous consumer.

Durability

Consistent with the opinionated application model of Spring Cloud Stream, consumer group subscriptions are durable. That is, a binder implementation ensures that group subscriptions are persistent and that, once at least one subscription for a group has been created, the group receives messages, even if they are sent while all applications in the group are stopped.

|

Anonymous subscriptions are non-durable by nature. For some binder implementations (such as RabbitMQ), it is possible to have non-durable group subscriptions. |

In general, it is preferable to always specify a consumer group when binding an application to a given destination. When scaling up a Spring Cloud Stream application, you must specify a consumer group for each of its input bindings. Doing so prevents the application’s instances from receiving duplicate messages (unless that behavior is desired, which is unusual).

Partitioning Support

Spring Cloud Stream provides support for partitioning data between multiple instances of a given application. In a partitioned scenario, the physical communication medium (such as the broker topic) is viewed as being structured into multiple partitions. One or more producer application instances send data to multiple consumer application instances and ensure that data identified by common characteristics are processed by the same consumer instance.

Spring Cloud Stream provides a common abstraction for implementing partitioned processing use cases in a uniform fashion. Partitioning can thus be used whether the broker itself is naturally partitioned (for example, Kafka) or not (for example, RabbitMQ).

Partitioning is a critical concept in stateful processing, where it is critical (for either performance or consistency reasons) to ensure that all related data is processed together. For example, in the time-windowed average calculation example, it is important that all measurements from any given sensor are processed by the same application instance.

| To set up a partitioned processing scenario, you must configure both the data-producing and the data-consuming ends. |

Programming Model

To understand the programming model, you should be familiar with the following core concepts:

-

Destination Binders: Components responsible to provide integration with the external messaging systems.

-

Bindings: Bridge between the external messaging systems and application provided Producers and Consumers of messages (created by the Destination Binders).

-

Message: The canonical data structure used by producers and consumers to communicate with Destination Binders (and thus other applications via external messaging systems).

Destination Binders

Destination Binders are extension components of Spring Cloud Stream responsible for providing the necessary configuration and implementation to facilitate integration with external messaging systems. This integration is responsible for connectivity, delegation, and routing of messages to and from producers and consumers, data type conversion, invocation of the user code, and more.

Binders handle a lot of the boiler plate responsibilities that would otherwise fall on your shoulders. However, to accomplish that, the binder still needs some help in the form of minimalistic yet required set of instructions from the user, which typically come in the form of some type of binding configuration.

While it is out of scope of this section to discuss all of the available binder and binding configuration options (the rest of the manual covers them extensively), Binding as a concept, does require special attention. The next section discusses it in detail.

Bindings

As stated earlier, Bindings provide a bridge between the external messaging system (e.g., queue, topic etc.) and application-provided Producers and Consumers.

The following example shows a fully configured and functioning Spring Cloud Stream application that receives the payload of the message

as a String type (see Content Type Negotiation section), logs it to the console and sends it down stream after converting it to upper case.

@SpringBootApplication

public class SampleApplication {

public static void main(String[] args) {

SpringApplication.run(SampleApplication.class, args);

}

@Bean

public Function<String, String> uppercase() {

return value -> {

System.out.println("Received: " + value);

return value.toUpperCase();

};

}

}

The above example looks no different then any vanilla spring-boot application. It defines a single bean of type Function

and that it is. So, how does it became spring-cloud-stream application?

It becomes spring-cloud-stream application simply based on the presence of spring-cloud-stream and binder dependencies

and auto-configuration classes on the classpath effectively setting the context for your boot application as spring-cloud-stream application.

And in this context beans of type Supplier, Function or Consumer are treated as defacto message handlers triggering

binding of to destinations exposed by the provided binder following certain naming conventions and

rules to avoid extra configuration.

Binding and Binding names

Binding is an abstraction that represents a bridge between sources and targets exposed by the binder and user code, This abstraction has a name and while we try to do our best to limit configuration required to run spring-cloud-stream applications, being aware of such name(s) is necessary for cases where additional per-binding configuration is required.

Throughout this manual you will see examples of configuration properties such as spring.cloud.stream.bindings.input.destination=myQueue.

The input segment in this property name is what we refer to as binding name and it could derive via several mechanisms.

The following sub-sections will describe the naming conventions and configuration elements used by spring-cloud-stream to control binding names.

If your binding name has special characters, such as the . character, you need to surround the binding key with brackets ([]) and then wrap it in qoutes.

For example spring.cloud.stream.bindings."[my.output.binding.key]".destination.

|

Functional binding names

Unlike the explicit naming required by annotation-based support (legacy) used in the previous versions of spring-cloud-stream, the functional programming model defaults to a simple convention when it comes to binding names, thus greatly simplifying application configuration. Let’s look at the first example:

@SpringBootApplication

public class SampleApplication {

@Bean

public Function<String, String> uppercase() {

return value -> value.toUpperCase();

}

}

In the preceding example we have an application with a single function which acts as message handler. As a Function it has an

input and output.

The naming convention used to name input and output bindings is as follows:

-

input -

<functionName> + -in- + <index> -

output -

<functionName> + -out- + <index>

The in and out corresponds to the type of binding (such as input or output).

The index is the index of the input or output binding. It is always 0 for typical single input/output function,

so it’s only relevant for Functions with multiple input and output arguments.

So if for example you would want to map the input of this function to a remote destination (e.g., topic, queue etc) called "my-topic" you would do so with the following property:

--spring.cloud.stream.bindings.uppercase-in-0.destination=my-topic

Note how uppercase-in-0 is used as a segment in property name. The same goes for uppercase-out-0.

Descriptive Binding Names

Some times to improve readability you may want to give your binding a more descriptive name (such as 'account', 'orders` etc).

Another way of looking at it is you can map an implicit binding name to an explicit binding name. And you can do it with

spring.cloud.stream.function.bindings.<binding-name> property.

This property also provides a migration path for existing applications that rely on custom interface-based

bindings that require explicit names.

For example,

--spring.cloud.stream.function.bindings.uppercase-in-0=input

In the preceding example you mapped and effectively renamed uppercase-in-0 binding name to input. Now all configuration

properties can refer to input binding name instead (e.g., --spring.cloud.stream.bindings.input.destination=my-topic).

While descriptive binding names may enhance the readability aspect of the configuration, they also create

another level of misdirection by mapping an implicit binding name to an explicit binding name. And since all subsequent

configuration properties will use the explicit binding name you must always refer to this 'bindings' property to

correlate which function it actually corresponds to. We believe that for most cases (with the exception of Functional Composition)

it may be an overkill, so, it is our recommendation to avoid using it altogether, especially

since not using it provides a clear path between binder destination and binding name, such as spring.cloud.stream.bindings.uppercase-in-0.destination=sample-topic,

where you are clearly correlating the input of uppercase function to sample-topic destination.

|

For more on properties and other configuration options please see Configuration Options section.

Explicit binding creation

In the previous section we explained how bindings are created implicitly driven by Function, Supplier or Consumer provided by your application.

However, there are times when you may need to create binding explicitly where bindings are not tied to any function. This is typically done to

support integrations with other frameworks (e.g., Spring Integration framework) where you may need direct access to the underlying MessageChannel.

Spring Cloud Stream allows you to define input and output bindings explicitly via spring.cloud.stream.input-bindings and spring.cloud.stream.output-bindings

properties. Noticed the plural in the property names allowing you to define multiple bindings by simply using ; as a delimiter.

Just look at the following test case as an example:

@Test

public void testExplicitBindings() {

try (ConfigurableApplicationContext context = new SpringApplicationBuilder(

TestChannelBinderConfiguration.getCompleteConfiguration(EmptyConfiguration.class))

.web(WebApplicationType.NONE)

.run("--spring.jmx.enabled=false",

"--spring.cloud.stream.input-bindings=fooin;barin",

"--spring.cloud.stream.output-bindings=fooout;barout")) {

assertThat(context.getBean("fooin-in-0", MessageChannel.class)).isNotNull();

assertThat(context.getBean("barin-in-0", MessageChannel.class)).isNotNull();

assertThat(context.getBean("fooout-out-0", MessageChannel.class)).isNotNull();

assertThat(context.getBean("barout-out-0", MessageChannel.class)).isNotNull();

}

}

@EnableAutoConfiguration

@Configuration

public static class EmptyConfiguration {

}

As you can see we have declared two input bindings and two output bindings while our configuration had no functions defined, yet we were able to successfully create these bindings and access their corresponding channels.

The rest of the binding rules that apply to implicit bindings apply here as well (for example, you can see that fooin turned into fooin-in-0 binding/channel etc).

Producing and Consuming Messages

You can write a Spring Cloud Stream application by simply writing functions and exposing them as `@Bean`s. You can also use Spring Integration annotations based configuration or Spring Cloud Stream annotation based configuration, although starting with spring-cloud-stream 3.x we recommend using functional implementations.

Spring Cloud Function support

Overview

Since Spring Cloud Stream v2.1, another alternative for defining stream handlers and sources is to use build-in

support for Spring Cloud Function where they can be expressed as beans of

type java.util.function.[Supplier/Function/Consumer].

To specify which functional bean to bind to the external destination(s) exposed by the bindings,

you must provide spring.cloud.function.definition property.

In the event you only have single bean of type java.util.function.[Supplier/Function/Consumer], you can

skip the spring.cloud.function.definition property, since such functional bean will be auto-discovered. However,

it is considered best practice to use such property to avoid any confusion.

Some time this auto-discovery can get in the way, since single bean of type java.util.function.[Supplier/Function/Consumer]

could be there for purposes other then handling messages, yet being single it is auto-discovered and auto-bound.

For these rare scenarios you can disable auto-discovery by providing spring.cloud.stream.function.autodetect property with value set to false.

|

Here is the example of the application exposing message handler as java.util.function.Function effectively supporting

pass-thru semantics by acting as consumer and producer of data.

@SpringBootApplication

public class MyFunctionBootApp {

public static void main(String[] args) {

SpringApplication.run(MyFunctionBootApp.class);

}

@Bean

public Function<String, String> toUpperCase() {

return s -> s.toUpperCase();

}

}

In the preceding example, we define a bean of type java.util.function.Function called toUpperCase to be acting as message handler

whose 'input' and 'output' must be bound to the external destinations exposed by the provided destination binder.

By default the 'input' and 'output' binding names will be toUpperCase-in-0 and toUpperCase-out-0.

Please see Functional binding names section for details on naming convention used to establish binding names.

Below are the examples of simple functional applications to support other semantics:

Here is the example of a source semantics exposed as java.util.function.Supplier

@SpringBootApplication

public static class SourceFromSupplier {

@Bean

public Supplier<Date> date() {

return () -> new Date(12345L);

}

}

Here is the example of a sink semantics exposed as java.util.function.Consumer

@SpringBootApplication

public static class SinkFromConsumer {

@Bean

public Consumer<String> sink() {

return System.out::println;

}

}

Suppliers (Sources)

Function and Consumer are pretty straightforward when it comes to how their invocation is triggered. They are triggered based

on data (events) sent to the destination they are bound to. In other words, they are classic event-driven components.

However, Supplier is in its own category when it comes to triggering. Since it is, by definition, the source (the origin) of the data, it does not

subscribe to any in-bound destination and, therefore, has to be triggered by some other mechanism(s).

There is also a question of Supplier implementation, which could be imperative or reactive and which directly relates to the triggering of such suppliers.

Consider the following sample:

@SpringBootApplication

public static class SupplierConfiguration {

@Bean

public Supplier<String> stringSupplier() {

return () -> "Hello from Supplier";

}

}

The preceding Supplier bean produces a string whenever its get() method is invoked. However, who invokes this method and how often?

The framework provides a default polling mechanism (answering the question of "Who?") that will trigger the invocation of the supplier and by default it will do so

every second (answering the question of "How often?").

In other words, the above configuration produces a single message every second and each message is sent to an output destination that is exposed by the binder.

To learn how to customize the polling mechanism, see Polling Configuration Properties section.

Consider a different example:

@SpringBootApplication

public static class SupplierConfiguration {

@Bean

public Supplier<Flux<String>> stringSupplier() {

return () -> Flux.fromStream(Stream.generate(new Supplier<String>() {

@Override

public String get() {

try {

Thread.sleep(1000);

return "Hello from Supplier";

} catch (Exception e) {

// ignore

}

}

})).subscribeOn(Schedulers.elastic()).share();

}

}

The preceding Supplier bean adopts the reactive programming style. Typically, and unlike the imperative supplier,

it should be triggered only once, given that the invocation of its get() method produces (supplies) the continuous stream of messages and not an

individual message.

The framework recognizes the difference in the programming style and guarantees that such a supplier is triggered only once.

However, imagine the use case where you want to poll some data source and return a finite stream of data representing the result set. The reactive programming style is a perfect mechanism for such a Supplier. However, given the finite nature of the produced stream, such Supplier still needs to be invoked periodically.

Consider the following sample, which emulates such use case by producing a finite stream of data:

@SpringBootApplication

public static class SupplierConfiguration {

@PollableBean

public Supplier<Flux<String>> stringSupplier() {

return () -> Flux.just("hello", "bye");

}

}

The bean itself is annotated with PollableBean annotation (sub-set of @Bean), thus signaling to the framework that although the implementation

of such a supplier is reactive, it still needs to be polled.

There is a splittable attribute defined in PollableBean which signals to the post processors of this annotation

that the result produced by the annotated component has to be split and is set to true by default. It means that

the framework will split the returning sending out each item as an individual message. If this is not

he desired behavior you can set it to false at which point such supplier will simply return

the produced Flux without splitting it.

|

Supplier & threading

As you have learned by now, unlike Function and Consumer, which are triggered by an event (they have input data), Supplier does not have

any input and thus triggered by a different mechanism - poller, which may have an unpredictable threading mechanism. And while the details of the

threading mechanism most of the time are not relevant to the downstream execution of the function it may present an issue in certain cases

especially with integrated frameworks that may have certain expectations to thread affinity. For example, Spring Cloud Sleuth which relies

on tracing data stored in thread local.

For those cases we have another mechanism via StreamBridge, where user has more control over threading mechanism. You can get more details

in Sending arbitrary data to an output (e.g. Foreign event-driven sources) section.

|

Consumer (Reactive)

Reactive Consumer is a little bit special because it has a void return type, leaving framework with no reference to subscribe to.

Most likely you will not need to write Consumer<Flux<?>>, and instead write it as a Function<Flux<?>, Mono<Void>> invoking then

operator as the last operator on your stream.

For example:

public Function<Flux<?>, Mono<Void>>consumer() {

return flux -> flux.map(..).filter(..).then();

}

But if you do need to write an explicit Consumer<Flux<?>>, remember to subscribe to the incoming Flux.

Also, keep in mind that the same rule applies for function composition when mixing reactive and imperative functions.

Spring Cloud Function indeed supports composing reactive functions with imperative, however you must be aware of certain limitations.

For example, assume you have composed reactive function with imperative consumer.

The result of such composition is a reactive Consumer. However, there is no way to subscribe to such consumer as discussed earlier in this section,

so this limitation can only be addressed by either making your consumer reactive and subscribing manually (as discussed earlier), or changing your function to be imperative.

Polling Configuration Properties

The following properties are exposed (although deprecated since version 3.2) by Spring Cloud Stream and are prefixed with the spring.cloud.stream.poller:

- fixedDelay

-

Fixed delay for default poller in milliseconds.

Default: 1000L.

- maxMessagesPerPoll

-

Maximum messages for each polling event of the default poller.

Default: 1L.

- cron

-

Cron expression value for the Cron Trigger.

Default: none.

- initialDelay

-

Initial delay for periodic triggers.

Default: 0.

- timeUnit

-

The TimeUnit to apply to delay values.

Default: MILLISECONDS.

For example --spring.cloud.stream.poller.fixed-delay=2000 sets the poller interval to poll every two seconds.

These poller properties are deprecated starting version 3.2 in favor of similar configuration properties from Spring Boot auto-configuration for Spring Integration.

See org.springframework.boot.autoconfigure.integration.IntegrationProperties.Poller for more information.

|

Per-binding polling configuration

The previous section shows how to configure a single default poller that will be applied to all bindings. While it fits well with the model of microservices spring-cloud-stream designed for where each microservice represents a single component (e.g., Supplier) and thus default poller configuration is enough, there are edge cases where you may have several components that require different polling configurations

For such cases please use per-binding way of configuring poller. For example, assume you have an output binding supply-out-0. In this case you can configure poller for such

binding using spring.cloud.stream.bindings.supply-out-0.producer.poller.. prefix (e.g., spring.cloud.stream.bindings.supply-out-0.producer.poller.fixed-delay=2000).

Sending arbitrary data to an output (e.g. Foreign event-driven sources)

There are cases where the actual source of data may be coming from the external (foreign) system that is not a binder. For example, the source of the data may be a classic REST endpoint. How do we bridge such source with the functional mechanism used by spring-cloud-stream?

Spring Cloud Stream provides two mechanisms, so let’s look at them in more details

Here, for both samples we’ll use a standard MVC endpoint method called delegateToSupplier bound to the root web context,

delegating incoming requests to stream via StreamBridge mechanism.

@SpringBootApplication

@Controller

public class WebSourceApplication {

public static void main(String[] args) {

SpringApplication.run(WebSourceApplication.class, "--spring.cloud.stream.source=toStream");

}

@Autowired

private StreamBridge streamBridge;

@RequestMapping

@ResponseStatus(HttpStatus.ACCEPTED)

public void delegateToSupplier(@RequestBody String body) {

System.out.println("Sending " + body);

streamBridge.send("toStream-out-0", body);

}

}

Here we autowire a StreamBridge bean which allows us to send data to an output binding effectively

bridging non-stream application with spring-cloud-stream. Note that preceding example does not have any

source functions defined (e.g., Supplier bean) leaving the framework with no trigger to create source bindings in advance, which would be typical for cases where configuration contains function beans. And that is fine, since StreamBridge will initiate creation of output bindings (as well as

destination auto-provisioning if necessary) for non existing bindings on the first call to its send(..) operation caching it for

subsequent reuse (see StreamBridge and Dynamic Destinations for more details).

However, if you want to pre-create an output binding at the initialization (startup) time you can benefit from spring.cloud.stream.source property where you can declare the name of your sources.

The provided name will be used as a trigger to create a source binding.

So in the preceding example the name of the output binding will be toStream-out-0 which is consistent with the binding naming

convention used by functions (see Binding and Binding names). You can use ; to signify multiple sources (multiple output bindings)

(e.g., --spring.cloud.stream.source=foo;bar)

Also, note that streamBridge.send(..) method takes an Object for data. This means you can send POJO or Message to it and it

will go through the same routine when sending output as if it was from any Function or Supplier providing the same level

of consistency as with functions. This means the output type conversion, partitioning etc are honored as if it was from the output produced by functions.

StreamBridge and Dynamic Destinations

StreamBridge can also be used for cases when output destination(s) are not known ahead of time similar to the use cases

described in Routing FROM Consumer section.

Let’s look at the example

@SpringBootApplication

@Controller

public class WebSourceApplication {

public static void main(String[] args) {

SpringApplication.run(WebSourceApplication.class, args);

}

@Autowired

private StreamBridge streamBridge;

@RequestMapping

@ResponseStatus(HttpStatus.ACCEPTED)

public void delegateToSupplier(@RequestBody String body) {

System.out.println("Sending " + body);

streamBridge.send("myDestination", body);

}

}

As you can see the preceding example is very similar to the previous one with the exception of explicit binding instruction provided via

spring.cloud.stream.source property (which is not provided).

Here we’re sending data to myDestination name which does not exist as a binding. Therefore such name will be treated as dynamic destination

as described in Routing FROM Consumer section.

In the preceding example, we are using ApplicationRunner as a foreign source to feed the stream.

A more practical example, where the foreign source is REST endpoint.

@SpringBootApplication

@Controller

public class WebSourceApplication {

public static void main(String[] args) {

SpringApplication.run(WebSourceApplication.class);

}

@Autowired

private StreamBridge streamBridge;

@RequestMapping

@ResponseStatus(HttpStatus.ACCEPTED)

public void delegateToSupplier(@RequestBody String body) {

streamBridge.send("myBinding", body);

}

}

As you can see inside of delegateToSupplier method we’re using StreamBridge to send data to myBinding binding. And here you’re also benefiting from

the dynamic features of StreamBridge where if myBinding doesn’t exist it will be created automatically and cached, otherwise existing binding will be used.

Caching dynamic destinations (bindings) could result in memory leaks in the event there are many dynamic destinations. To have some level of control

we provide a self-evicting caching mechanism for output bindings with default cache size of 10. This means that if your dynamic destination size goes above that number, there is a possibility that an existing binding will be evicted and thus would need to be recreated which could cause minor performance degradation. You can increase the cache size via spring.cloud.stream.dynamic-destination-cache-size property setting it to the desired value.

|

curl -H "Content-Type: text/plain" -X POST -d "hello from the other side" http://localhost:8080/

By showing two examples we want to emphasize the approach will work with any type of foreign sources.

If you are using the Solace PubSub+ binder, Spring Cloud Stream has reserved the scst_targetDestination header (retrievable via BinderHeaders.TARGET_DESTINATION), which allows for messages to be redirected from their bindings' configured destination to the target destination specified by this header. This allows for the binder to manage the resources necessary to publish to dynamic destinations, relieving the framework from having to do so, and avoids the caching issues mentioned in the previous Note. More info here.

|

Output Content Type with StreamBridge

You can also provide specific content type if necessary with the following method signature public boolean send(String bindingName, Object data, MimeType outputContentType).

Or if you send data as a Message, its content type will be honored.

Using specific binder type with StreamBridge

Spring Cloud Stream supports multiple binder scenarios. For example you may be receiving data from Kafka and sending it to RabbitMQ.

For more information on multiple binders scenarios, please see Binders section and specifically Multiple Binders on the Classpath

In the event you are planning to use StreamBridge and have more then one binder configured in your application you must also tell StreamBridge

which binder to use. And for that there are two more variations of send method:

public boolean send(String bindingName, @Nullable String binderType, Object data)

public boolean send(String bindingName, @Nullable String binderType, Object data, MimeType outputContentType)

As you can see there is one additional argument that you can provide - binderType, telling BindingService which binder to use when creating dynamic binding.

For cases where spring.cloud.stream.source property is used or the binding was already created under different binder, the binderType

argument will have no effect.

|

Using channel interceptors with StreamBridge

Since StreamBridge uses a MessageChannel to establish the output binding, you can activate channel interceptors when sending data through StreamBridge.

It is up to the application to decide which channel interceptors to apply on StreamBridge.

Spring Cloud Stream does not inject all the channel interceptors detected into StreamBridge unless they are annoatated with @GlobalChannelInterceptor(patterns = "*").

Let us assume that you have the following two different StreamBridge bindings in the application.

streamBridge.send("foo-out-0", message);

and

streamBridge.send("bar-out-0", message);

Now, if you want a channel interceptor applied on both the StreamBridge bindings, then you can declare the following GlobalChannelInterceptor bean.

@Bean

@GlobalChannelInterceptor(patterns = "*")

public ChannelInterceptor customInterceptor() {

return new ChannelInterceptor() {

@Override

public Message<?> preSend(Message<?> message, MessageChannel channel) {

...

}

};

}However, if you don’t like the global approach above and want to have a dedicated interceptor for each binding, then you can do the following.

@Bean

@GlobalChannelInterceptor(patterns = "foo-*")

public ChannelInterceptor fooInterceptor() {

return new ChannelInterceptor() {

@Override

public Message<?> preSend(Message<?> message, MessageChannel channel) {

...

}

};

}and

@Bean

@GlobalChannelInterceptor(patterns = "bar-*")

public ChannelInterceptor barInterceptor() {

return new ChannelInterceptor() {

@Override

public Message<?> preSend(Message<?> message, MessageChannel channel) {

...

}

};

}You have the flexibility to make the patterns more strict or customized to your business needs.

With this approach, the application gets the ability to decide which interceptors to inject in StreamBridge rather than applying all the available interceptors.

Reactive Functions support

Since Spring Cloud Function is build on top of Project Reactor there isn’t much you need to do

to benefit from reactive programming model while implementing Supplier, Function or Consumer.

For example:

@SpringBootApplication

public static class SinkFromConsumer {

@Bean

public Function<Flux<String>, Flux<String>> reactiveUpperCase() {

return flux -> flux.map(val -> val.toUpperCase());

}

}

|

Few important things must be understood when choosing reactive or imperative programming model. Fully reactive or just API? Using reactive API does not necessarily imply that you can benefit from all of the reactive features of such API. In other words things like back-pressure and other advanced features will only work when they are working with compatible system - such as Reactive Kafka binder. In the event you are using regular Kafka or Rabbit or any other non-reactive binder, you can only benefit from the conveniences of the reactive API itself and not its advanced features, since the actual sources or targets of the stream are not reactive. Error handling and retries Throughout this manual you will see several reference on the framework-based error handling, retries and other features as well as configuration properties associated with them. It is important to understand that they only effect the imperative functions and you should NOT have the same expectations when it comes to reactive functions. And here is why. . .

There is a fundamental difference between reactive and imperative functions.

Imperative function is a message handler that is invoked by the framework on each message it receives. So for N messages there will be N invocations of such function and because of that we can wrap such function and add additional functionality such as error handling, retries etc.

Reactive function is initialization function. It is invoked only once to get a reference to a Flux/Mono provided by the user to be connected with the one provided by the framework. After that we (the framework) have absolutely no visibility nor control of the stream.

Therefore, with reactive functions you must rely on the richness of the reactive API when it comes to error handling and retries (i.e., |

Functional Composition

Using functional programming model you can also benefit from functional composition where you can dynamically compose complex handlers from a set of simple functions. As an example let’s add the following function bean to the application defined above

@Bean

public Function<String, String> wrapInQuotes() {

return s -> "\"" + s + "\"";

}

and modify the spring.cloud.function.definition property to reflect your intention to compose a new function from both ‘toUpperCase’ and ‘wrapInQuotes’.

To do so Spring Cloud Function relies on | (pipe) symbol. So, to finish our example our property will now look like this:

--spring.cloud.function.definition=toUpperCase|wrapInQuotes

| One of the great benefits of functional composition support provided by Spring Cloud Function is the fact that you can compose reactive and imperative functions. |

The result of a composition is a single function which, as you may guess, could have a very long and rather cryptic name (e.g., foo|bar|baz|xyz. . .)

presenting a great deal of inconvenience when it comes to other configuration properties. This is where descriptive binding names

feature described in Functional binding names section can help.

For example, if we want to give our toUpperCase|wrapInQuotes a more descriptive name we can do so

with the following property spring.cloud.stream.function.bindings.toUpperCase|wrapInQuotes-in-0=quotedUpperCaseInput allowing

other configuration properties to refer to that binding name (e.g., spring.cloud.stream.bindings.quotedUpperCaseInput.destination=myDestination).

Functional Composition and Cross-cutting Concerns

Function composition effectively allows you to address complexity by breaking it down to a set of simple and individually manageable/testable components that could still be represented as one at runtime. But that is not the only benefit.

You can also use composition to address certain cross-cutting non-functional concerns, such as content enrichment. For example, assume you have an incoming message that may be lacking certain headers, or some headers are not in the exact state your business function would expect. You can now implement a separate function that addresses those concerns and then compose it with the main business function.

Let’s look at the example

@SpringBootApplication

public class DemoStreamApplication {

public static void main(String[] args) {

SpringApplication.run(DemoStreamApplication.class,

"--spring.cloud.function.definition=enrich|echo",

"--spring.cloud.stream.function.bindings.enrich|echo-in-0=input",

"--spring.cloud.stream.bindings.input.destination=myDestination",

"--spring.cloud.stream.bindings.input.group=myGroup");

}

@Bean

public Function<Message<String>, Message<String>> enrich() {

return message -> {

Assert.isTrue(!message.getHeaders().containsKey("foo"), "Should NOT contain 'foo' header");

return MessageBuilder.fromMessage(message).setHeader("foo", "bar").build();

};

}

@Bean

public Function<Message<String>, Message<String>> echo() {

return message -> {

Assert.isTrue(message.getHeaders().containsKey("foo"), "Should contain 'foo' header");

System.out.println("Incoming message " + message);

return message;

};

}

}

While trivial, this example demonstrates how one function enriches the incoming Message with the additional header(s) (non-functional concern),

so the other function - echo - can benefit form it. The echo function stays clean and focused on business logic only.

You can also see the usage of spring.cloud.stream.function.bindings property to simplify composed binding name.

Functions with multiple input and output arguments

Starting with version 3.0 spring-cloud-stream provides support for functions that have multiple inputs and/or multiple outputs (return values). What does this actually mean and what type of use cases it is targeting?

-

Big Data: Imagine the source of data you’re dealing with is highly un-organized and contains various types of data elements (e.g., orders, transactions etc) and you effectively need to sort it out.

-

Data aggregation: Another use case may require you to merge data elements from 2+ incoming _streams.

The above describes just a few use cases where you may need to use a single function to accept and/or produce multiple streams of data. And that is the type of use cases we are targeting here.

Also, note a slightly different emphasis on the concept of streams here. The assumption is that such functions are only valuable

if they are given access to the actual streams of data (not the individual elements). So for that we are relying on

abstractions provided by Project Reactor (i.e., Flux and Mono) which is already available on the

classpath as part of the dependencies brought in by spring-cloud-functions.

Another important aspect is representation of multiple input and outputs. While java provides

variety of different abstractions to represent multiple of something those abstractions

are a) unbounded, b) lack arity and c) lack type information which are all important in this context.

As an example, let’s look at Collection or an array which only allows us to

describe multiple of a single type or up-cast everything to an Object, affecting the transparent type conversion feature of

spring-cloud-stream and so on.

So to accommodate all these requirements the initial support is relying on the signature which utilizes another abstraction provided by Project Reactor - Tuples. However, we are working on allowing a more flexible signatures.

| Please refer to Binding and Binding names section to understand the naming convention used to establish binding names used by such application. |

Let’s look at the few samples:

@SpringBootApplication

public class SampleApplication {

@Bean

public Function<Tuple2<Flux<String>, Flux<Integer>>, Flux<String>> gather() {

return tuple -> {

Flux<String> stringStream = tuple.getT1();

Flux<String> intStream = tuple.getT2().map(i -> String.valueOf(i));

return Flux.merge(stringStream, intStream);

};

}

}

The above example demonstrates function which takes two inputs (first of type String and second of type Integer)

and produces a single output of type String.

So, for the above example the two input bindings will be gather-in-0 and gather-in-1 and for consistency the

output binding also follows the same convention and is named gather-out-0.

Knowing that will allow you to set binding specific properties.

For example, the following will override content-type for gather-in-0 binding:

--spring.cloud.stream.bindings.gather-in-0.content-type=text/plain

@SpringBootApplication

public class SampleApplication {

@Bean

public static Function<Flux<Integer>, Tuple2<Flux<String>, Flux<String>>> scatter() {

return flux -> {

Flux<Integer> connectedFlux = flux.publish().autoConnect(2);

UnicastProcessor even = UnicastProcessor.create();

UnicastProcessor odd = UnicastProcessor.create();

Flux<Integer> evenFlux = connectedFlux.filter(number -> number % 2 == 0).doOnNext(number -> even.onNext("EVEN: " + number));

Flux<Integer> oddFlux = connectedFlux.filter(number -> number % 2 != 0).doOnNext(number -> odd.onNext("ODD: " + number));

return Tuples.of(Flux.from(even).doOnSubscribe(x -> evenFlux.subscribe()), Flux.from(odd).doOnSubscribe(x -> oddFlux.subscribe()));

};

}

}

The above example is somewhat of a the opposite from the previous sample and demonstrates function which

takes single input of type Integer and produces two outputs (both of type String).

So, for the above example the input binding is scatter-in-0 and the

output bindings are scatter-out-0 and scatter-out-1.

And you test it with the following code:

@Test

public void testSingleInputMultiOutput() {

try (ConfigurableApplicationContext context = new SpringApplicationBuilder(

TestChannelBinderConfiguration.getCompleteConfiguration(

SampleApplication.class))

.run("--spring.cloud.function.definition=scatter")) {

InputDestination inputDestination = context.getBean(InputDestination.class);

OutputDestination outputDestination = context.getBean(OutputDestination.class);

for (int i = 0; i < 10; i++) {

inputDestination.send(MessageBuilder.withPayload(String.valueOf(i).getBytes()).build());

}

int counter = 0;

for (int i = 0; i < 5; i++) {

Message<byte[]> even = outputDestination.receive(0, 0);

assertThat(even.getPayload()).isEqualTo(("EVEN: " + String.valueOf(counter++)).getBytes());

Message<byte[]> odd = outputDestination.receive(0, 1);

assertThat(odd.getPayload()).isEqualTo(("ODD: " + String.valueOf(counter++)).getBytes());

}

}

}

Multiple functions in a single application

There may also be a need for grouping several message handlers in a single application. You would do so by defining several functions.

@SpringBootApplication

public class SampleApplication {

@Bean

public Function<String, String> uppercase() {

return value -> value.toUpperCase();

}

@Bean

public Function<String, String> reverse() {

return value -> new StringBuilder(value).reverse().toString();

}

}

In the above example we have configuration which defines two functions uppercase and reverse.

So first, as mentioned before, we need to notice that there is a a conflict (more then one function) and therefore

we need to resolve it by providing spring.cloud.function.definition property pointing to the actual function

we want to bind. Except here we will use ; delimiter to point to both functions (see test case below).

| As with functions with multiple inputs/outputs, please refer to Binding and Binding names section to understand the naming convention used to establish binding names used by such application. |

And you test it with the following code:

@Test

public void testMultipleFunctions() {

try (ConfigurableApplicationContext context = new SpringApplicationBuilder(

TestChannelBinderConfiguration.getCompleteConfiguration(

ReactiveFunctionConfiguration.class))

.run("--spring.cloud.function.definition=uppercase;reverse")) {

InputDestination inputDestination = context.getBean(InputDestination.class);

OutputDestination outputDestination = context.getBean(OutputDestination.class);

Message<byte[]> inputMessage = MessageBuilder.withPayload("Hello".getBytes()).build();

inputDestination.send(inputMessage, "uppercase-in-0");

inputDestination.send(inputMessage, "reverse-in-0");

Message<byte[]> outputMessage = outputDestination.receive(0, "uppercase-out-0");

assertThat(outputMessage.getPayload()).isEqualTo("HELLO".getBytes());

outputMessage = outputDestination.receive(0, "reverse-out-1");

assertThat(outputMessage.getPayload()).isEqualTo("olleH".getBytes());

}

}

Batch Consumers

When using a MessageChannelBinder that supports batch listeners, and the feature is enabled for the consumer binding, you can set spring.cloud.stream.bindings.<binding-name>.consumer.batch-mode to true to enable the

entire batch of messages to be passed to the function in a List.

@Bean

public Function<List<Person>, Person> findFirstPerson() {

return persons -> persons.get(0);

}

Batch Producers

You can also use the concept of batching on the producer side by returning a collection of Messages which effectively provides an inverse effect where each message in the collection will be sent individually by the binder.

Consider the following function:

@Bean

public Function<String, List<Message<String>>> batch() {

return p -> {

List<Message<String>> list = new ArrayList<>();

list.add(MessageBuilder.withPayload(p + ":1").build());

list.add(MessageBuilder.withPayload(p + ":2").build());

list.add(MessageBuilder.withPayload(p + ":3").build());

list.add(MessageBuilder.withPayload(p + ":4").build());

return list;

};

}

Each message in the returned list will be sent individually resulting in four messages sent to output destination.

Spring Integration flow as functions

When you implement a function, you may have complex requirements that fit the category of Enterprise Integration Patterns (EIP). These are best handled by using a framework such as Spring Integration (SI), which is a reference implementation of EIP.

Thankfully SI already provides support for exposing integration flows as functions via Integration flow as gateway Consider the following sample:

@SpringBootApplication

public class FunctionSampleSpringIntegrationApplication {

public static void main(String[] args) {

SpringApplication.run(FunctionSampleSpringIntegrationApplication.class, args);

}

@Bean

public IntegrationFlow uppercaseFlow() {

return IntegrationFlows.from(MessageFunction.class, "uppercase")

.<String, String>transform(String::toUpperCase)

.logAndReply(LoggingHandler.Level.WARN);

}

public interface MessageFunction extends Function<Message<String>, Message<String>> {

}

}

For those who are familiar with SI you can see we define a bean of type IntegrationFlow where we

declare an integration flow that we want to expose as a Function<String, String> (using SI DSL) called uppercase.

The MessageFunction interface lets us explicitly declare the type of the inputs and outputs for proper type conversion.

See Content Type Negotiation section for more on type conversion.

To receive raw input you can use from(Function.class, …).

The resulting function is bound to the input and output destinations exposed by the target binder.

| Please refer to Binding and Binding names section to understand the naming convention used to establish binding names used by such application. |

For more details on interoperability of Spring Integration and Spring Cloud Stream specifically around functional programming model you may find this post very interesting, as it dives a bit deeper into various patterns you can apply by merging the best of Spring Integration and Spring Cloud Stream/Functions.

Using Polled Consumers

Overview

When using polled consumers, you poll the PollableMessageSource on demand.

To define binding for polled consumer you need to provide spring.cloud.stream.pollable-source property.

Consider the following example of a polled consumer binding:

--spring.cloud.stream.pollable-source=myDestinationThe pollable-source name myDestination in the preceding example will result in myDestination-in-0 binding name to stay

consistent with functional programming model.

Given the polled consumer in the preceding example, you might use it as follows:

@Bean

public ApplicationRunner poller(PollableMessageSource destIn, MessageChannel destOut) {

return args -> {

while (someCondition()) {

try {

if (!destIn.poll(m -> {

String newPayload = ((String) m.getPayload()).toUpperCase();

destOut.send(new GenericMessage<>(newPayload));

})) {

Thread.sleep(1000);

}

}

catch (Exception e) {

// handle failure

}

}

};

}

A less manual and more Spring-like alternative would be to configure a scheduled task bean. For example,

@Scheduled(fixedDelay = 5_000)

public void poll() {

System.out.println("Polling...");

this.source.poll(m -> {

System.out.println(m.getPayload());

}, new ParameterizedTypeReference<Foo>() { });

}

The PollableMessageSource.poll() method takes a MessageHandler argument (often a lambda expression, as shown here).

It returns true if the message was received and successfully processed.

As with message-driven consumers, if the MessageHandler throws an exception, messages are published to error channels,

as discussed in Error Handling.

Normally, the poll() method acknowledges the message when the MessageHandler exits.

If the method exits abnormally, the message is rejected (not re-queued), but see Handling Errors.

You can override that behavior by taking responsibility for the acknowledgment, as shown in the following example:

@Bean

public ApplicationRunner poller(PollableMessageSource dest1In, MessageChannel dest2Out) {

return args -> {

while (someCondition()) {

if (!dest1In.poll(m -> {

StaticMessageHeaderAccessor.getAcknowledgmentCallback(m).noAutoAck();

// e.g. hand off to another thread which can perform the ack

// or acknowledge(Status.REQUEUE)

})) {

Thread.sleep(1000);

}

}

};

}

You must ack (or nack) the message at some point, to avoid resource leaks.

|

Some messaging systems (such as Apache Kafka) maintain a simple offset in a log. If a delivery fails and is re-queued with StaticMessageHeaderAccessor.getAcknowledgmentCallback(m).acknowledge(Status.REQUEUE);, any later successfully ack’d messages are redelivered.

|

There is also an overloaded poll method, for which the definition is as follows:

poll(MessageHandler handler, ParameterizedTypeReference<?> type)

The type is a conversion hint that allows the incoming message payload to be converted, as shown in the following example:

boolean result = pollableSource.poll(received -> {

Map<String, Foo> payload = (Map<String, Foo>) received.getPayload();

...

}, new ParameterizedTypeReference<Map<String, Foo>>() {});

Handling Errors

By default, an error channel is configured for the pollable source; if the callback throws an exception, an ErrorMessage is sent to the error channel (<destination>.<group>.errors); this error channel is also bridged to the global Spring Integration errorChannel.

You can subscribe to either error channel with a @ServiceActivator to handle errors; without a subscription, the error will simply be logged and the message will be acknowledged as successful.

If the error channel service activator throws an exception, the message will be rejected (by default) and won’t be redelivered.

If the service activator throws a RequeueCurrentMessageException, the message will be requeued at the broker and will be again retrieved on a subsequent poll.

If the listener throws a RequeueCurrentMessageException directly, the message will be requeued, as discussed above, and will not be sent to the error channels.

Event Routing

Event Routing, in the context of Spring Cloud Stream, is the ability to either a) route events to a particular event subscriber or b) route events produced by an event subscriber to a particular destination. Here we’ll refer to it as route ‘TO’ and route ‘FROM’.

Routing TO Consumer

Routing can be achieved by relying on RoutingFunction available in Spring Cloud Function 3.0. All you need to do is enable it via

--spring.cloud.stream.function.routing.enabled=true application property or provide spring.cloud.function.routing-expression property.

Once enabled RoutingFunction will be bound to input destination

receiving all the messages and route them to other functions based on the provided instruction.

For the purposes of binding the name of the routing destination is functionRouter-in-0

(see RoutingFunction.FUNCTION_NAME and binding naming convention Functional binding names).

|

Instruction could be provided with individual messages as well as application properties.

Here are couple of samples:

Using message headers

@SpringBootApplication

public class SampleApplication {

public static void main(String[] args) {

SpringApplication.run(SampleApplication.class,

"--spring.cloud.stream.function.routing.enabled=true");

}

@Bean

public Consumer<String> even() {

return value -> {

System.out.println("EVEN: " + value);

};

}

@Bean

public Consumer<String> odd() {

return value -> {

System.out.println("ODD: " + value);

};

}

}

By sending a message to the functionRouter-in-0 destination exposed by the binder (i.e., rabbit, kafka),

such message will be routed to the appropriate (‘even’ or ‘odd’) Consumer.

By default RoutingFunction will look for a spring.cloud.function.definition or spring.cloud.function.routing-expression (for more dynamic scenarios with SpEL)

header and if it is found, its value will be treated as the routing instruction.

For example,

setting spring.cloud.function.routing-expression header to value T(java.lang.System).currentTimeMillis() % 2 == 0 ? 'even' : 'odd' will end up semi-randomly routing request to either odd or even functions.

Also, for SpEL, the root object of the evaluation context is Message so you can do evaluation on individual headers (or message) as well ….routing-expression=headers['type']

Using application properties

The spring.cloud.function.routing-expression and/or spring.cloud.function.definition

can be passed as application properties (e.g., spring.cloud.function.routing-expression=headers['type'].

@SpringBootApplication

public class RoutingStreamApplication {

public static void main(String[] args) {

SpringApplication.run(RoutingStreamApplication.class,

"--spring.cloud.function.routing-expression="

+ "T(java.lang.System).nanoTime() % 2 == 0 ? 'even' : 'odd'");

}

@Bean

public Consumer<Integer> even() {

return value -> System.out.println("EVEN: " + value);

}

@Bean

public Consumer<Integer> odd() {

return value -> System.out.println("ODD: " + value);

}

}

| Passing instructions via application properties is especially important for reactive functions given that a reactive function is only invoked once to pass the Publisher, so access to the individual items is limited. |

Routing Function and output binding

RoutingFunction is a Function and as such treated no differently than any other function. Well. . . almost.

When RoutingFunction routes to another Function, its output is sent to the output binding of the RoutingFunction which

is functionRouter-in-0 as expected. But what if RoutingFunction routes to a Consumer? In other words the result of invocation

of the RoutingFunction may not produce anything to be sent to the output binding, thus making it necessary to even have one.

So, we do treat RoutingFunction a little bit differently when we create bindings. And even though it is transparent to you as a user

(there is really nothing for you to do), being aware of some of the mechanics would help you understand its inner workings.

So, the rule is;

We never create output binding for the RoutingFunction, only input. So when you routing to Consumer, the RoutingFunction effectively

becomes as a Consumer by not having any output bindings. However, if RoutingFunction happen to route to another Function which produces

the output, the output binding for the RoutingFunction will be create dynamically at which point RoutingFunction will act as a regular Function

with regards to bindings (having both input and output bindings).

Routing FROM Consumer

Aside from static destinations, Spring Cloud Stream lets applications send messages to dynamically bound destinations. This is useful, for example, when the target destination needs to be determined at runtime. Applications can do so in one of two ways.

BinderAwareChannelResolver

The BinderAwareChannelResolver is a special bean registered automatically by the framework.

You can autowire this bean into your application and use it to resolve output destination at runtime

The 'spring.cloud.stream.dynamicDestinations' property can be used for restricting the dynamic destination names to a known set (that is, intentionally allowed values). If this property is not set, any destination can be bound dynamically.

The following example demonstrates one of the common scenarios where REST controller uses a path variable to determine target destination:

@SpringBootApplication

@Controller