4.0.5

Reference Guide

This guide describes the Apache Kafka implementation of the Spring Cloud Stream Binder. It covers the binder's design, usage, and configuration options, and explains how Spring Cloud Stream concepts map onto Apache Kafka-specific constructs. In addition, this guide explains the Kafka Streams binding capabilities of Spring Cloud Stream.

1. Apache Kafka Binder

1.1. Usage

To use the Apache Kafka binder, add spring-cloud-stream-binder-kafka as a dependency to your Spring Cloud Stream application, as shown in the following example for Maven:

    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-stream-binder-kafka</artifactId>
    </dependency>

Alternatively, you can use the Spring Cloud Stream Kafka Starter, as shown in the following example for Maven:

    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-stream-kafka</artifactId>
    </dependency>

1.2. Overview

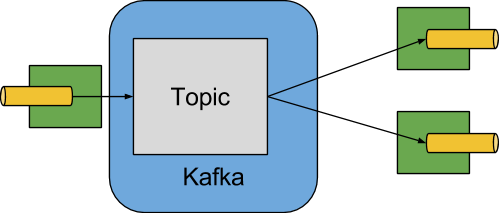

The following image shows a simplified diagram of how the Apache Kafka binder operates:

The Apache Kafka Binder implementation maps each destination to an Apache Kafka topic. The consumer group maps directly to the same Apache Kafka concept. Partitioning also maps directly to Apache Kafka partitions as well.

The binder currently uses the Apache Kafka kafka-clients version 3.1.0.

This client can communicate with older brokers (see the Kafka documentation), but certain features may not be available.

For example, with versions earlier than 0.11.x.x, native headers are not supported.

Also, 0.11.x.x does not support the autoAddPartitions property.

1.3. Configuration Options

This section contains the configuration options used by the Apache Kafka binder.

For common configuration options and properties pertaining to the binder, see the binding properties in the core documentation.

1.3.1. Kafka Binder Properties

- spring.cloud.stream.kafka.binder.brokers

A list of brokers to which the Kafka binder connects.

Default: localhost.

- spring.cloud.stream.kafka.binder.defaultBrokerPort

The brokers property allows hosts specified with or without port information (for example, host1,host2:port2). This property sets the default port when no port is configured in the broker list.

Default: 9092.

- spring.cloud.stream.kafka.binder.configuration

Key/value map of client properties (for both producers and consumers) passed to all clients created by the binder. Because these properties are used by both producers and consumers, usage should be restricted to common properties, for example, security settings. Unknown Kafka producer or consumer properties provided through this configuration are filtered out and not allowed to propagate. Properties here supersede any properties set in boot.

Default: Empty map.

- spring.cloud.stream.kafka.binder.consumerProperties

Key/value map of arbitrary Kafka client consumer properties. In addition to supporting known Kafka consumer properties, unknown consumer properties are allowed here as well. Properties here supersede any properties set in boot and in the configuration property above.

Default: Empty map.

- spring.cloud.stream.kafka.binder.headers

The list of custom headers that are transported by the binder. Only required when communicating with older applications (<= 1.3.x) with a kafka-clients version < 0.11.0.0. Newer versions support headers natively.

Default: empty.

- spring.cloud.stream.kafka.binder.healthTimeout

The time to wait to get partition information, in seconds. Health reports as down if this timer expires.

Default: 10.

- spring.cloud.stream.kafka.binder.requiredAcks

The number of required acks on the broker. See the Kafka documentation for the producer acks property.

Default: 1.

- spring.cloud.stream.kafka.binder.minPartitionCount

Effective only if autoCreateTopics or autoAddPartitions is set. The global minimum number of partitions that the binder configures on topics on which it produces or consumes data. It can be superseded by the partitionCount setting of the producer or by the value of the instanceCount * concurrency settings of the producer (if either is larger).

Default: 1.

- spring.cloud.stream.kafka.binder.producerProperties

Key/value map of arbitrary Kafka client producer properties. In addition to supporting known Kafka producer properties, unknown producer properties are allowed here as well. Properties here supersede any properties set in boot and in the configuration property above.

Default: Empty map.

- spring.cloud.stream.kafka.binder.replicationFactor

The replication factor of auto-created topics if autoCreateTopics is active. Can be overridden on each binding.

If you are using Kafka broker versions prior to 2.4, then this value should be set to at least 1. Starting with version 3.0.8, the binder uses -1 as the default value, which indicates that the broker default.replication.factor property will be used to determine the number of replicas. Check with your Kafka broker admins to see if there is a policy in place that requires a minimum replication factor. If that is the case, default.replication.factor typically matches that value, and -1 should be used unless you need a replication factor greater than the minimum.

Default: -1.

- spring.cloud.stream.kafka.binder.autoCreateTopics

If set to true, the binder creates new topics automatically. If set to false, the binder relies on the topics being already configured. In the latter case, if the topics do not exist, the binder fails to start.

This setting is independent of the auto.create.topics.enable setting of the broker and does not influence it. If the server is set to auto-create topics, they may be created as part of the metadata retrieval request, with default broker settings.

Default: true.

- spring.cloud.stream.kafka.binder.autoAddPartitions

If set to true, the binder creates new partitions if required. If set to false, the binder relies on the partition count of the topic being already configured. If the partition count of the target topic is smaller than the expected value, the binder fails to start.

Default: false.

- spring.cloud.stream.kafka.binder.transaction.transactionIdPrefix

Enables transactions in the binder. See transaction.id in the Kafka documentation and Transactions in the spring-kafka documentation. When transactions are enabled, individual producer properties are ignored and all producers use the spring.cloud.stream.kafka.binder.transaction.producer.* properties.

Default: null (no transactions).

- spring.cloud.stream.kafka.binder.transaction.producer.*

Global producer properties for producers in a transactional binder. See spring.cloud.stream.kafka.binder.transaction.transactionIdPrefix and Kafka Producer Properties and the general producer properties supported by all binders.

Default: See individual producer properties.

- spring.cloud.stream.kafka.binder.headerMapperBeanName

The bean name of a KafkaHeaderMapper used for mapping spring-messaging headers to and from Kafka headers. Use this, for example, if you wish to customize the trusted packages in a BinderHeaderMapper bean that uses JSON deserialization for the headers. If this custom BinderHeaderMapper bean is not made available to the binder using this property, then the binder will look for a header mapper bean with the name kafkaBinderHeaderMapper that is of type BinderHeaderMapper before falling back to a default BinderHeaderMapper created by the binder.

Default: none.

- spring.cloud.stream.kafka.binder.considerDownWhenAnyPartitionHasNoLeader

Flag to set the binder health as down when any partition on the topic, regardless of the consumer that is receiving data from it, is found without a leader.

Default: false.

- spring.cloud.stream.kafka.binder.certificateStoreDirectory

When the truststore or keystore certificate location is given as a non-local file system resource (resources supported by org.springframework.core.io.Resource, e.g. CLASSPATH, HTTP, etc.), the binder copies the resource from the path (which is convertible to org.springframework.core.io.Resource) to a location on the filesystem. This is true for both broker-level certificates (ssl.truststore.location and ssl.keystore.location) and certificates intended for the schema registry (schema.registry.ssl.truststore.location and schema.registry.ssl.keystore.location). Keep in mind that the truststore and keystore location paths must be provided under spring.cloud.stream.kafka.binder.configuration…. For example, spring.cloud.stream.kafka.binder.configuration.ssl.truststore.location, spring.cloud.stream.kafka.binder.configuration.schema.registry.ssl.truststore.location, etc.

The file is copied to the location specified as the value for this property, which must be an existing directory on the filesystem that is writable by the process running the application. If this value is not set and the certificate file is a non-local file system resource, then it is copied to the system's temp directory as returned by System.getProperty("java.io.tmpdir"). The same fallback applies if this value is present but the directory cannot be found on the filesystem or is not writable.

Default: none.

- spring.cloud.stream.kafka.binder.metrics.defaultOffsetLagMetricsEnabled

When set to true, the offset lag metric of each consumer topic is computed whenever the metric is accessed. When set to false, only the periodically calculated offset lag is used.

Default: true.

- spring.cloud.stream.kafka.binder.metrics.offsetLagMetricsInterval

The interval at which the offset lag for each consumer topic is computed. This value is used whenever metrics.defaultOffsetLagMetricsEnabled is disabled or its computation is taking too long.

Default: 60 seconds.

- spring.cloud.stream.kafka.binder.enableObservation

Enable Micrometer observation registry on all the bindings in this binder.

Default: false
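To illustrate how the brokers and defaultBrokerPort properties described above combine, here is a minimal sketch in plain Java. It is not the binder's own code: the class name BrokerListSketch and the method resolve are hypothetical, and the sketch assumes entries of the form host or host:port only (no IPv6 literals).

```java
import java.util.ArrayList;
import java.util.List;

public class BrokerListSketch {

    // Hypothetical helper: appends defaultPort to every entry that has no explicit port.
    public static List<String> resolve(String brokers, int defaultPort) {
        List<String> resolved = new ArrayList<>();
        for (String entry : brokers.split(",")) {
            String host = entry.trim();
            if (host.isEmpty()) {
                continue; // ignore empty entries such as a trailing comma
            }
            // Assumption: a colon means the entry already carries a port.
            resolved.add(host.contains(":") ? host : host + ":" + defaultPort);
        }
        return resolved;
    }

    public static void main(String[] args) {
        // prints [host1:9092, host2:9093]
        System.out.println(resolve("host1,host2:9093", 9092));
    }
}
```

With brokers set to host1,host2:9093 and the default port left at 9092, only host1 picks up the default.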

1.3.2. Kafka Consumer Properties

To avoid repetition, Spring Cloud Stream supports setting values for all channels, in the format of spring.cloud.stream.kafka.default.consumer.<property>=<value>.

The following properties are available for Kafka consumers only and must be prefixed with spring.cloud.stream.kafka.bindings.<channelName>.consumer..

- admin.configuration

Since version 2.1.1, this property is deprecated in favor of topic.properties, and support for it will be removed in a future version.

- admin.replicas-assignment

Since version 2.1.1, this property is deprecated in favor of topic.replicas-assignment, and support for it will be removed in a future version.

- admin.replication-factor

Since version 2.1.1, this property is deprecated in favor of topic.replication-factor, and support for it will be removed in a future version.

- autoRebalanceEnabled

When true, topic partitions are automatically rebalanced between the members of a consumer group. When false, each consumer is assigned a fixed set of partitions based on spring.cloud.stream.instanceCount and spring.cloud.stream.instanceIndex. This requires both the spring.cloud.stream.instanceCount and spring.cloud.stream.instanceIndex properties to be set appropriately on each launched instance. The value of the spring.cloud.stream.instanceCount property must typically be greater than 1 in this case.

Default: true.

- ackEachRecord

When autoCommitOffset is true, this setting dictates whether to commit the offset after each record is processed. By default, offsets are committed after all records in the batch of records returned by consumer.poll() have been processed. The number of records returned by a poll can be controlled with the max.poll.records Kafka property, which is set through the consumer configuration property. Setting this to true may cause a degradation in performance, but doing so reduces the likelihood of redelivered records when a failure occurs. Also, see the binder requiredAcks property, which also affects the performance of committing offsets. This property is deprecated as of 3.1 in favor of using ackMode. If the ackMode is not set and batch mode is not enabled, RECORD ackMode will be used.

Default: false.

- autoCommitOffset

Starting with version 3.1, this property is deprecated. See ackMode for more details on alternatives. Whether to autocommit offsets when a message has been processed. If set to false, a header with the key kafka_acknowledgment of the type org.springframework.kafka.support.Acknowledgment is present in the inbound message. Applications may use this header for acknowledging messages. See the examples section for details. When this property is set to false, the Kafka binder sets the ack mode to org.springframework.kafka.listener.AbstractMessageListenerContainer.AckMode.MANUAL and the application is responsible for acknowledging records. Also see ackEachRecord.

Default: true.

- ackMode

Specify the container ack mode. This is based on the AckMode enumeration defined in Spring Kafka. If the ackEachRecord property is set to true and the consumer is not in batch mode, the ack mode RECORD is used; otherwise, the ack mode provided through this property is used.

- autoCommitOnError

In pollable consumers, if set to true, it always auto commits on error. If not set (the default) or false, it will not auto commit in pollable consumers. Note that this property is only applicable for pollable consumers.

Default: not set.

- resetOffsets

Whether to reset offsets on the consumer to the value provided by startOffset. Must be false if a KafkaBindingRebalanceListener is provided; see Using a KafkaBindingRebalanceListener. See Resetting Offsets for more information about this property.

Default: false.

- startOffset

The starting offset for new groups. Allowed values: earliest and latest. If the consumer group is set explicitly for the consumer 'binding' (through spring.cloud.stream.bindings.<channelName>.group), 'startOffset' is set to earliest. Otherwise, it is set to latest for the anonymous consumer group. See Resetting Offsets for more information about this property.

Default: null (equivalent to earliest).

- enableDlq

When set to true, it enables DLQ behavior for the consumer. By default, messages that result in errors are forwarded to a topic named error.<destination>.<group>. The DLQ topic name can be configured by setting the dlqName property or by defining a @Bean of type DlqDestinationResolver. This provides an alternative option to the more common Kafka replay scenario for the case when the number of errors is relatively small and replaying the entire original topic may be too cumbersome. See Dead-Letter Topic Processing for more information. Starting with version 2.0, messages sent to the DLQ topic are enhanced with the following headers: x-original-topic, x-exception-message, and x-exception-stacktrace as byte[]. By default, a failed record is sent to the same partition number in the DLQ topic as the original record. See Dead-Letter Topic Partition Selection for how to change that behavior. Not allowed when destinationIsPattern is true.

Default: false.

- dlqPartitions

When enableDlq is true, and this property is not set, a dead letter topic with the same number of partitions as the primary topic(s) is created. Usually, dead-letter records are sent to the same partition in the dead-letter topic as the original record. This behavior can be changed; see Dead-Letter Topic Partition Selection. If this property is set to 1 and there is no DlqPartitionFunction bean, all dead-letter records will be written to partition 0. If this property is greater than 1, you MUST provide a DlqPartitionFunction bean. Note that the actual partition count is affected by the binder's minPartitionCount property.

Default: none.

- configuration

Map with a key/value pair containing generic Kafka consumer properties. In addition to Kafka consumer properties, other configuration properties can be passed here, for example, properties needed by the application such as spring.cloud.stream.kafka.bindings.input.consumer.configuration.foo=bar. The bootstrap.servers property cannot be set here; use multi-binder support if you need to connect to multiple clusters.

Default: Empty map.

- dlqName

The name of the DLQ topic to receive the error messages.

Default: null (If not specified, messages that result in errors are forwarded to a topic named error.<destination>.<group>).

- dlqProducerProperties

Using this, DLQ-specific producer properties can be set. All the properties available through Kafka producer properties can be set through this property. When native decoding is enabled on the consumer (i.e., useNativeDecoding: true), the application must provide corresponding key/value serializers for DLQ. This must be provided in the form of dlqProducerProperties.configuration.key.serializer and dlqProducerProperties.configuration.value.serializer.

Default: Default Kafka producer properties.

- standardHeaders

Indicates which standard headers are populated by the inbound channel adapter. Allowed values: none, id, timestamp, or both. Useful if using native deserialization and the first component to receive a message needs an id (such as an aggregator that is configured to use a JDBC message store).

Default: none.

- converterBeanName

The name of a bean that implements RecordMessageConverter. Used in the inbound channel adapter to replace the default MessagingMessageConverter.

Default: null.

- idleEventInterval

The interval, in milliseconds, between events indicating that no messages have recently been received. Use an ApplicationListener<ListenerContainerIdleEvent> to receive these events. See Example: Pausing and Resuming the Consumer for a usage example.

Default: 30000.

- destinationIsPattern

When true, the destination is treated as a regular expression Pattern used to match topic names by the broker. When true, topics are not provisioned, and enableDlq is not allowed, because the binder does not know the topic names during the provisioning phase. Note, the time taken to detect new topics that match the pattern is controlled by the consumer property metadata.max.age.ms, which (at the time of writing) defaults to 300,000ms (5 minutes). This can be configured using the configuration property above.

Default: false.

- topic.properties

A Map of Kafka topic properties used when provisioning new topics, for example, spring.cloud.stream.kafka.bindings.input.consumer.topic.properties.message.format.version=0.9.0.0.

Default: none.

- topic.replicas-assignment

A Map<Integer, List<Integer>> of replica assignments, with the key being the partition and the value being the assignments. Used when provisioning new topics. See the NewTopic Javadocs in the kafka-clients jar.

Default: none.

- topic.replication-factor

The replication factor to use when provisioning topics. Overrides the binder-wide setting. Ignored if replicas-assignment is present.

Default: none (the binder-wide default of -1 is used).

- pollTimeout

Timeout used for polling in pollable consumers.

Default: 5 seconds.

- transactionManager

Bean name of a KafkaAwareTransactionManager used to override the binder's transaction manager for this binding. Usually needed if you want to synchronize another transaction with the Kafka transaction, using the ChainedKafkaTransactionManager. To achieve exactly once consumption and production of records, the consumer and producer bindings must all be configured with the same transaction manager.

Default: none.

- txCommitRecovered

When using a transactional binder, the offset of a recovered record (e.g. when retries are exhausted and the record is sent to a dead letter topic) will be committed via a new transaction, by default. Setting this property to false suppresses committing the offset of the recovered record.

Default: true.

- commonErrorHandlerBeanName

CommonErrorHandler bean name to use per consumer binding. When present, this user-provided CommonErrorHandler takes precedence over any other error handlers defined by the binder. This is a handy way to express error handlers if the application does not want to use a ListenerContainerCustomizer and then check the destination/group combination to set an error handler.

Default: none.

1.3.3. Resetting Offsets

When an application starts, the initial position in each assigned partition depends on two properties: startOffset and resetOffsets.

If resetOffsets is false, normal Kafka consumer auto.offset.reset semantics apply.

That is, if there is no committed offset for a partition for the binding's consumer group, the position is earliest or latest.

By default, bindings with an explicit group use earliest, and anonymous bindings (with no group) use latest.

These defaults can be overridden by setting the startOffset binding property.

There will be no committed offset(s) the first time the binding is started with a particular group.

The other condition where no committed offset exists is if the offset has expired.

With modern brokers (since 2.1), and default broker properties, the offsets are expired 7 days after the last member leaves the group.

See the offsets.retention.minutes broker property for more information.

When resetOffsets is true, the binder applies similar semantics to those that apply when there is no committed offset on the broker, as if this binding has never consumed from the topic; i.e. any current committed offset is ignored.

Following are two use cases where this might be used:

- Consuming from a compacted topic containing key/value pairs. Set resetOffsets to true and startOffset to earliest; the binding will perform a seekToBeginning on all newly assigned partitions.

- Consuming from a topic containing events, where you are only interested in events that occur while this binding is running. Set resetOffsets to true and startOffset to latest; the binding will perform a seekToEnd on all newly assigned partitions.

If a rebalance occurs after the initial assignment, the seeks will only be performed on any newly assigned partitions that were not assigned during the initial assignment.

For more control over topic offsets, see Using a KafkaBindingRebalanceListener. When a listener is provided, resetOffsets should not be set to true; otherwise, an error occurs.
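The rules in this section can be summarized as a small decision function. The following is only a sketch in plain Java that models the behavior as described above; it is not the binder's implementation, and the names InitialPositionSketch, effectiveStartOffset, and initialPosition are hypothetical. It assumes that an unset startOffset falls back to earliest for an explicit group and to latest for an anonymous one.

```java
public class InitialPositionSketch {

    public enum StartOffset { EARLIEST, LATEST }

    // Default from the text: an explicit group starts at earliest, an anonymous one at latest.
    public static StartOffset effectiveStartOffset(StartOffset configured, boolean explicitGroup) {
        if (configured != null) {
            return configured;
        }
        return explicitGroup ? StartOffset.EARLIEST : StartOffset.LATEST;
    }

    // committedOffset is null when the group has no committed offset for the partition.
    public static String initialPosition(boolean resetOffsets, StartOffset configured,
            boolean explicitGroup, Long committedOffset) {
        if (!resetOffsets && committedOffset != null) {
            return "committed:" + committedOffset; // resume where the group left off
        }
        // No committed offset, or resetOffsets=true (any committed offset is ignored).
        return effectiveStartOffset(configured, explicitGroup) == StartOffset.EARLIEST
                ? "beginning" : "end";
    }

    public static void main(String[] args) {
        // prints committed:42
        System.out.println(initialPosition(false, null, true, 42L));
        // prints end
        System.out.println(initialPosition(true, StartOffset.LATEST, true, 42L));
    }
}
```

The second call corresponds to the "only events that occur while this binding is running" use case: the committed offset 42 is ignored and the position moves to the end of the partition.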

1.3.4. Consuming Batches

Starting with version 3.0, when spring.cloud.stream.bindings.<name>.consumer.batch-mode is set to true, all of the records received by polling the Kafka Consumer will be presented as a List<?> to the listener method.

Otherwise, the method will be called with one record at a time.

The size of the batch is controlled by Kafka consumer properties max.poll.records, fetch.min.bytes, fetch.max.wait.ms; refer to the Kafka documentation for more information.

Starting with version 4.0.2, the binder supports DLQ capabilities when consuming in batch mode.

Keep in mind that, when using DLQ on a consumer binding that is in batch mode, all the records received from the previous poll will be delivered to the DLQ topic.

Retry within the binder is not supported when using batch mode, so maxAttempts will be overridden to 1.

You can configure a DefaultErrorHandler (using a ListenerContainerCustomizer) to achieve similar functionality to retry in the binder.

You can also use a manual AckMode and call Acknowledgment.nack(index, sleep) to commit the offsets for a partial batch and have the remaining records redelivered.

Refer to the Spring for Apache Kafka documentation for more information about these techniques.
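As a rough illustration of the partial-batch idea above, the following plain-Java sketch (no Spring or Kafka types) locates the index of the first record in a batch whose processing fails. In a batch listener that uses a manual AckMode, that index is the kind of value you would pass to Acknowledgment.nack. The class BatchFailureIndexSketch, the method firstFailedIndex, and the handler predicate are hypothetical names used only for this example.

```java
import java.util.List;
import java.util.function.Predicate;

public class BatchFailureIndexSketch {

    // Returns the index of the first record the handler rejects, or -1 if all succeed.
    public static <T> int firstFailedIndex(List<T> batch, Predicate<? super T> handler) {
        for (int i = 0; i < batch.size(); i++) {
            boolean ok;
            try {
                ok = handler.test(batch.get(i));
            } catch (RuntimeException e) {
                ok = false; // treat an exception as a failed record
            }
            if (!ok) {
                return i;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        List<String> batch = List.of("a", "b", "", "d");
        // Hypothetical rule for the example: empty payloads fail.
        int failed = firstFailedIndex(batch, s -> !s.isEmpty());
        System.out.println(failed); // prints 2
    }
}
```

Records before the returned index were processed successfully, so their offsets can be committed; the record at the index and those after it are the ones to be redelivered.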


1.3.5. Kafka Producer Properties

To avoid repetition, Spring Cloud Stream supports setting values for all channels, in the format of spring.cloud.stream.kafka.default.producer.<property>=<value>.

The following properties are available for Kafka producers only and must be prefixed with spring.cloud.stream.kafka.bindings.<channelName>.producer..

- admin.configuration

Since version 2.1.1, this property is deprecated in favor of topic.properties, and support for it will be removed in a future version.

- admin.replicas-assignment

Since version 2.1.1, this property is deprecated in favor of topic.replicas-assignment, and support for it will be removed in a future version.

- admin.replication-factor

Since version 2.1.1, this property is deprecated in favor of topic.replication-factor, and support for it will be removed in a future version.

- bufferSize

Upper limit, in bytes, of how much data the Kafka producer attempts to batch before sending.

Default: 16384.

- sync

Whether the producer is synchronous.

Default: false.

- sendTimeoutExpression

A SpEL expression evaluated against the outgoing message used to evaluate the time to wait for ack when synchronous publish is enabled, for example, headers['mySendTimeout']. The value of the timeout is in milliseconds. With versions before 3.0, the payload could not be used unless native encoding was being used because, by the time this expression was evaluated, the payload was already in the form of a byte[]. Now, the expression is evaluated before the payload is converted.

Default: none.

- batchTimeout

How long the producer waits to allow more messages to accumulate in the same batch before sending the messages. (Normally, the producer does not wait at all and simply sends all the messages that accumulated while the previous send was in progress.) A non-zero value may increase throughput at the expense of latency.

Default: 0.

- messageKeyExpression

A SpEL expression evaluated against the outgoing message used to populate the key of the produced Kafka message, for example, headers['myKey']. With versions before 3.0, the payload could not be used unless native encoding was being used because, by the time this expression was evaluated, the payload was already in the form of a byte[]. Now, the expression is evaluated before the payload is converted. In the case of a regular processor (Function<String, String> or Function<Message<?>, Message<?>>), if the produced key needs to be the same as the incoming key from the topic, this property can be set as below.

    spring.cloud.stream.kafka.bindings.<output-binding-name>.producer.messageKeyExpression: headers['kafka_receivedMessageKey']

There is an important caveat to keep in mind for reactive functions. In that case, it is up to the application to manually copy the headers from the incoming messages to outbound messages. You can set the header, e.g. myKey, and use headers['myKey'] as suggested above or, for convenience, simply set the KafkaHeaders.MESSAGE_KEY header, and you do not need to set this property at all.

Default: none.

- headerPatterns

A comma-delimited list of simple patterns to match Spring messaging headers to be mapped to the Kafka Headers in the ProducerRecord. Patterns can begin or end with the wildcard character (asterisk). Patterns can be negated by prefixing with !. Matching stops after the first match (positive or negative). For example, !ask,as* will pass ash but not ask. id and timestamp are never mapped.

Default: * (all headers except id and timestamp).

- configuration

Map with a key/value pair containing generic Kafka producer properties. The bootstrap.servers property cannot be set here; use multi-binder support if you need to connect to multiple clusters.

Default: Empty map.

- topic.properties

A Map of Kafka topic properties used when provisioning new topics, for example, spring.cloud.stream.kafka.bindings.output.producer.topic.properties.message.format.version=0.9.0.0.

- topic.replicas-assignment

A Map<Integer, List<Integer>> of replica assignments, with the key being the partition and the value being the assignments. Used when provisioning new topics. See the NewTopic Javadocs in the kafka-clients jar.

Default: none.

- topic.replication-factor

The replication factor to use when provisioning topics. Overrides the binder-wide setting. Ignored if replicas-assignment is present.

Default: none (the binder-wide default of -1 is used).

- useTopicHeader

Set to true to override the default binding destination (topic name) with the value of the KafkaHeaders.TOPIC message header in the outbound message. If the header is not present, the default binding destination is used.

Default: false.

- recordMetadataChannel

The bean name of a MessageChannel to which successful send results should be sent; the bean must exist in the application context. The message sent to the channel is the sent message (after conversion, if any) with an additional header KafkaHeaders.RECORD_METADATA. The header contains a RecordMetadata object provided by the Kafka client; it includes the partition and offset where the record was written in the topic.

    RecordMetadata meta = sendResultMsg.getHeaders().get(KafkaHeaders.RECORD_METADATA, RecordMetadata.class);

Failed sends go to the producer error channel (if configured); see Error Channels.

Default: null.

The Kafka binder uses the partitionCount setting of the producer as a hint to create a topic with the given partition count (in conjunction with the minPartitionCount, the maximum of the two being the value being used).

Exercise caution when configuring both minPartitionCount for a binder and partitionCount for an application, as the larger value is used.

If a topic already exists with a smaller partition count and autoAddPartitions is disabled (the default), the binder fails to start.

If a topic already exists with a smaller partition count and autoAddPartitions is enabled, new partitions are added.

If a topic already exists with a larger number of partitions than the maximum of (minPartitionCount or partitionCount), the existing partition count is used.
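The partition-count rules above can be sketched as a single function. This is a model of the described behavior in plain Java, not the binder's provisioning code; the class PartitionCountSketch and the method effectivePartitionCount are hypothetical, and the sketch assumes that a missing topic is created (that is, autoCreateTopics is enabled).

```java
public class PartitionCountSketch {

    // existingPartitions is null when the topic does not exist yet.
    // Returns the partition count the topic ends up with, or throws where
    // the text says the binder fails to start.
    public static int effectivePartitionCount(int minPartitionCount, int partitionCount,
            Integer existingPartitions, boolean autoAddPartitions) {
        int required = Math.max(minPartitionCount, partitionCount); // the larger value is used
        if (existingPartitions == null) {
            return required; // assumption: the topic is created with the required count
        }
        if (existingPartitions >= required) {
            return existingPartitions; // an existing, larger count is kept
        }
        if (autoAddPartitions) {
            return required; // new partitions are added
        }
        throw new IllegalStateException("topic has " + existingPartitions
                + " partitions but " + required + " are required");
    }

    public static void main(String[] args) {
        System.out.println(effectivePartitionCount(1, 4, null, false)); // prints 4
        System.out.println(effectivePartitionCount(3, 2, 8, false));    // prints 8
        System.out.println(effectivePartitionCount(3, 6, 2, true));     // prints 6
    }
}
```

Calling effectivePartitionCount(3, 6, 2, false) throws, which mirrors the case where the topic is too small and autoAddPartitions is disabled.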


- compression

Set the compression.type producer property. Supported values are none, gzip, snappy, lz4 and zstd. If you override the kafka-clients jar to 2.1.0 (or later), as discussed in the Spring for Apache Kafka documentation, and wish to use zstd compression, use spring.cloud.stream.kafka.bindings.<binding-name>.producer.configuration.compression.type=zstd.

Default: none.

- transactionManager

Bean name of a KafkaAwareTransactionManager used to override the binder's transaction manager for this binding. Usually needed if you want to synchronize another transaction with the Kafka transaction, using the ChainedKafkaTransactionManager. To achieve exactly once consumption and production of records, the consumer and producer bindings must all be configured with the same transaction manager.

Default: none.

- closeTimeout

The number of seconds to wait when closing the producer.

Default: 30.

- allowNonTransactional

Normally, all output bindings associated with a transactional binder will publish in a new transaction, if one is not already in process. This property allows you to override that behavior. If set to true, records published to this output binding will not be run in a transaction, unless one is already in process.

Default: false.
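The first-match-wins rule described for the headerPatterns property above can be sketched in plain Java as follows. This is an illustrative model only, not the binder's or Spring's matcher: the class HeaderPatternSketch and its methods are hypothetical, and the sketch handles only patterns with a leading and/or trailing asterisk.

```java
public class HeaderPatternSketch {

    // Matches a pattern that may begin and/or end with '*'.
    static boolean simpleMatch(String pattern, String name) {
        if (pattern.equals("*")) {
            return true;
        }
        boolean leading = pattern.startsWith("*");
        boolean trailing = pattern.endsWith("*");
        String core = pattern.substring(leading ? 1 : 0, pattern.length() - (trailing ? 1 : 0));
        if (leading && trailing) {
            return name.contains(core);
        }
        if (leading) {
            return name.endsWith(core);
        }
        if (trailing) {
            return name.startsWith(core);
        }
        return name.equals(core);
    }

    // Returns true if the header would be mapped under the given comma-delimited patterns.
    public static boolean isMapped(String patterns, String header) {
        if (header.equals("id") || header.equals("timestamp")) {
            return false; // never mapped
        }
        for (String raw : patterns.split(",")) {
            String pattern = raw.trim();
            boolean negated = pattern.startsWith("!");
            if (negated) {
                pattern = pattern.substring(1);
            }
            if (simpleMatch(pattern, header)) {
                return !negated; // matching stops at the first match, positive or negative
            }
        }
        return false; // no pattern matched
    }

    public static void main(String[] args) {
        System.out.println(isMapped("!ask,as*", "ash")); // prints true
        System.out.println(isMapped("!ask,as*", "ask")); // prints false
    }
}
```

Order matters: with as*,!ask the header ask would be mapped, because as* matches first.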

1.3.6. Usage examples

In this section, we show the use of the preceding properties for specific scenarios.

Example: Setting ackMode to MANUAL and Relying on Manual Acknowledgement

This example illustrates how one may manually acknowledge offsets in a consumer application.

This example requires that spring.cloud.stream.kafka.bindings.input.consumer.ackMode be set to MANUAL.

Use the corresponding input channel name for your example.

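    import java.util.function.Consumer;

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.context.annotation.Bean;
    import org.springframework.kafka.support.Acknowledgment;
    import org.springframework.kafka.support.KafkaHeaders;
    import org.springframework.messaging.Message;

    @SpringBootApplication
    public class ManuallyAcknowledgingConsumer {

        public static void main(String[] args) {
            SpringApplication.run(ManuallyAcknowledgingConsumer.class, args);
        }

        @Bean
        public Consumer<Message<?>> process() {
            return message -> {
                // The header is present when the binding uses a manual ack mode.
                Acknowledgment acknowledgment = message.getHeaders().get(KafkaHeaders.ACKNOWLEDGMENT, Acknowledgment.class);
                if (acknowledgment != null) {
                    System.out.println("Acknowledgment provided");
                    acknowledgment.acknowledge();
                }
            };
        }
    }

Example: Security Configuration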

Apache Kafka supports secure connections between clients and brokers as of version 0.9.

To take advantage of this feature, follow the guidelines in the Apache Kafka Documentation as well as the Kafka 0.9 security guidelines from the Confluent documentation.

Use the spring.cloud.stream.kafka.binder.configuration option to set security properties for all clients created by the binder.

For example, to set security.protocol to SASL_SSL, set the following property:

spring.cloud.stream.kafka.binder.configuration.security.protocol=SASL_SSL

All the other security properties can be set in a similar manner.

When using Kerberos, follow the instructions in the reference documentation for creating and referencing the JAAS configuration.

Spring Cloud Stream supports passing JAAS configuration information to the application by using a JAAS configuration file and using Spring Boot properties.

Using JAAS Configuration Files

The JAAS and (optionally) krb5 file locations can be set for Spring Cloud Stream applications by using system properties. The following example shows how to launch a Spring Cloud Stream application with SASL and Kerberos by using a JAAS configuration file:

java -Djava.security.auth.login.config=/path.to/kafka_client_jaas.conf -jar log.jar \

--spring.cloud.stream.kafka.binder.brokers=secure.server:9092 \

--spring.cloud.stream.bindings.input.destination=stream.ticktock \

--spring.cloud.stream.kafka.binder.configuration.security.protocol=SASL_PLAINTEXT

Using Spring Boot Properties

As an alternative to having a JAAS configuration file, Spring Cloud Stream provides a mechanism for setting up the JAAS configuration for Spring Cloud Stream applications by using Spring Boot properties.

The following properties can be used to configure the login context of the Kafka client:

- spring.cloud.stream.kafka.binder.jaas.loginModule

-

The login module name. Not necessary to be set in normal cases.

Default:

com.sun.security.auth.module.Krb5LoginModule.

- spring.cloud.stream.kafka.binder.jaas.controlFlag

-

The control flag of the login module.

Default:

required.

- spring.cloud.stream.kafka.binder.jaas.options

-

Map with a key/value pair containing the login module options.

Default: Empty map.

The following example shows how to launch a Spring Cloud Stream application with SASL and Kerberos by using Spring Boot configuration properties:

java --spring.cloud.stream.kafka.binder.brokers=secure.server:9092 \

--spring.cloud.stream.bindings.input.destination=stream.ticktock \

--spring.cloud.stream.kafka.binder.autoCreateTopics=false \

--spring.cloud.stream.kafka.binder.configuration.security.protocol=SASL_PLAINTEXT \

--spring.cloud.stream.kafka.binder.jaas.options.useKeyTab=true \

--spring.cloud.stream.kafka.binder.jaas.options.storeKey=true \

--spring.cloud.stream.kafka.binder.jaas.options.keyTab=/etc/security/keytabs/kafka_client.keytab \

--spring.cloud.stream.kafka.binder.jaas.options.principal=kafka-client-1@EXAMPLE.COM

The preceding example represents the equivalent of the following JAAS file:

KafkaClient {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

storeKey=true

keyTab="/etc/security/keytabs/kafka_client.keytab"

principal="kafka-client-1@EXAMPLE.COM";

};

If the topics required already exist on the broker or will be created by an administrator, autocreation can be turned off and only client JAAS properties need to be sent.

Do not mix JAAS configuration files and Spring Boot properties in the same application.

If the -Djava.security.auth.login.config system property is already present, Spring Cloud Stream ignores the Spring Boot properties.


Be careful when using the autoCreateTopics and autoAddPartitions with Kerberos.

Usually, applications may use principals that do not have administrative rights in Kafka and Zookeeper.

Consequently, relying on Spring Cloud Stream to create/modify topics may fail.

In secure environments, we strongly recommend creating topics and managing ACLs administratively by using Kafka tooling.


Multi-binder configuration and JAAS

When connecting to multiple clusters, each of which requires a separate JAAS configuration, set the JAAS configuration by using the sasl.jaas.config property.

When this property is present in the application, it takes precedence over the other strategies mentioned above.

See KIP-85 for more details.

For example, if you have two clusters in your application with separate JAAS configuration, then the following is a template that you can use:

spring.cloud.stream:

  binders:

    kafka1:

      type: kafka

      environment:

        spring:

          cloud:

            stream:

              kafka:

                binder:

                  brokers: localhost:9092

                  configuration.sasl.jaas.config: "org.apache.kafka.common.security.plain.PlainLoginModule required username=\"admin\" password=\"admin-secret\";"

    kafka2:

      type: kafka

      environment:

        spring:

          cloud:

            stream:

              kafka:

                binder:

                  brokers: localhost:9093

                  configuration.sasl.jaas.config: "org.apache.kafka.common.security.plain.PlainLoginModule required username=\"user1\" password=\"user1-secret\";"

  kafka.binder:

    configuration:

      security.protocol: SASL_PLAINTEXT

      sasl.mechanism: PLAIN

Note that both the Kafka clusters and the sasl.jaas.config values for each of them are different in the above configuration.

See this sample application for more details on how to set up and run such an application.
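The sasl.jaas.config values in the template above follow the pattern: login module class, control flag, then quoted key/value options, terminated by a semicolon. As a minimal sketch of how such a value could be assembled programmatically, the following plain-Java helper may be useful. JaasConfigStrings and its build method are hypothetical names introduced for illustration; they are not part of Spring Cloud Stream or Apache Kafka, and the sketch does not escape quotes inside option values.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical helper for illustration only; not part of Spring Cloud Stream or Kafka.
final class JaasConfigStrings {

    private JaasConfigStrings() {
    }

    // Builds: <loginModule> <controlFlag> key1="value1" key2="value2";
    // Assumption: option values contain no double quotes (no escaping is done).
    static String build(String loginModule, String controlFlag, Map<String, String> options) {
        StringJoiner joiner = new StringJoiner(" ", "", ";");
        joiner.add(loginModule);
        joiner.add(controlFlag);
        options.forEach((key, value) -> joiner.add(key + "=\"" + value + "\""));
        return joiner.toString();
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps the options in insertion order.
        Map<String, String> options = new LinkedHashMap<>();
        options.put("username", "admin");
        options.put("password", "admin-secret");
        System.out.println(build(
                "org.apache.kafka.common.security.plain.PlainLoginModule", "required", options));
    }
}
```

The resulting string can then be supplied as the value of configuration.sasl.jaas.config for the corresponding binder.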

Example: Pausing and Resuming the Consumer

If you wish to suspend consumption but not cause a partition rebalance, you can pause and resume the consumer.

This is facilitated by managing the binding lifecycle as shown in Binding visualization and control in the Spring Cloud Stream documentation, using State.PAUSED and State.RESUMED.

To resume, you can use an ApplicationListener (or @EventListener method) to receive ListenerContainerIdleEvent instances.

The frequency at which events are published is controlled by the idleEventInterval property.

1.4. Transactional Binder

Enable transactions by setting spring.cloud.stream.kafka.binder.transaction.transactionIdPrefix to a non-empty value, e.g. tx-.

When used in a processor application, the consumer starts the transaction; any records sent on the consumer thread participate in the same transaction.

When the listener exits normally, the listener container will send the offset to the transaction and commit it.

A common producer factory is used for all producer bindings configured using spring.cloud.stream.kafka.binder.transaction.producer.* properties; individual binding Kafka producer properties are ignored.

Normal binder retries (and dead lettering) are not supported with transactions because the retries will run in the original transaction, which may be rolled back and any published records will be rolled back too.

When retries are enabled (the common property maxAttempts is greater than zero) the retry properties are used to configure a DefaultAfterRollbackProcessor to enable retries at the container level.

Similarly, instead of publishing dead-letter records within the transaction, this functionality is moved to the listener container, again via the DefaultAfterRollbackProcessor which runs after the main transaction has rolled back.


If you wish to use transactions in a source application, or from some arbitrary thread for producer-only transaction (e.g. @Scheduled method), you must get a reference to the transactional producer factory and define a KafkaTransactionManager bean using it.

@Bean

public PlatformTransactionManager transactionManager(BinderFactory binders,

@Value("${unique.tx.id.per.instance}") String txId) {

ProducerFactory<byte[], byte[]> pf = ((KafkaMessageChannelBinder) binders.getBinder(null,

MessageChannel.class)).getTransactionalProducerFactory();

KafkaTransactionManager tm = new KafkaTransactionManager<>(pf);

tm.setTransactionId(txId);

return tm;

}

Notice that we get a reference to the binder using the BinderFactory; use null in the first argument when there is only one binder configured.

If more than one binder is configured, use the binder name to get the reference.

Once we have a reference to the binder, we can obtain a reference to the ProducerFactory and create a transaction manager.

Then you would use normal Spring transaction support, e.g. TransactionTemplate or @Transactional, for example:

public static class Sender {

@Transactional

public void doInTransaction(MessageChannel output, List<String> stuffToSend) {

stuffToSend.forEach(stuff -> output.send(new GenericMessage<>(stuff)));

}

}

If you wish to synchronize producer-only transactions with those from some other transaction manager, use a ChainedTransactionManager.

If you deploy multiple instances of your application, each instance needs a unique transactionIdPrefix.


1.5. Error Channels

Starting with version 1.3, the binder unconditionally sends exceptions to an error channel for each consumer destination and can also be configured to send async producer send failures to an error channel. See this section on error handling for more information.

The payload of the ErrorMessage for a send failure is a KafkaSendFailureException with properties:

-

failedMessage: The Spring Messaging Message<?> that failed to be sent.

-

record: The raw ProducerRecord that was created from the failedMessage.

There is no automatic handling of producer exceptions (such as sending to a Dead-Letter queue). You can consume these exceptions with your own Spring Integration flow.

1.6. Kafka Metrics

Kafka binder module exposes the following metrics:

spring.cloud.stream.binder.kafka.offset: This metric indicates how many messages have not yet been consumed from a given binder’s topic by a given consumer group.

The metrics provided are based on the Micrometer library.

The binder creates the KafkaBinderMetrics bean if Micrometer is on the classpath and no other such bean is provided by the application.

The metric contains the consumer group information, topic and the actual lag in committed offset from the latest offset on the topic.

This metric is particularly useful for providing auto-scaling feedback to a PaaS platform.
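The lag described above is the difference between the latest offset on the topic and the committed offset of the consumer group, taken per partition. The following is a minimal sketch of that arithmetic in plain Java. ConsumerLagSketch is a hypothetical class used only for illustration (the binder's KafkaBinderMetrics has its own implementation), and treating a partition without a committed offset as starting from offset 0 is an assumption of this sketch.

```java
import java.util.Map;

// Illustrative sketch only; the binder's KafkaBinderMetrics has its own implementation.
final class ConsumerLagSketch {

    private ConsumerLagSketch() {
    }

    // Sums (latest offset - committed offset) over all partitions of a topic.
    // Assumption: a partition without a committed offset counts from offset 0.
    static long totalLag(Map<Integer, Long> latestOffsets, Map<Integer, Long> committedOffsets) {
        long lag = 0;
        for (Map.Entry<Integer, Long> entry : latestOffsets.entrySet()) {
            long committed = committedOffsets.getOrDefault(entry.getKey(), 0L);
            // Never report a negative lag for a partition.
            lag += Math.max(0L, entry.getValue() - committed);
        }
        return lag;
    }
}
```

For example, with latest offsets of 100 and 50 on two partitions and committed offsets of 90 and 50, the total lag is 10.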

The metric collection behaviour can be configured by setting properties in the spring.cloud.stream.kafka.binder.metrics namespace. Refer to the Kafka binder properties section for more information.

You can prevent KafkaBinderMetrics from creating the necessary infrastructure (such as consumers) and reporting the metrics by providing the following component in the application.

@Component

class NoOpBindingMeters {

NoOpBindingMeters(MeterRegistry registry) {

registry.config().meterFilter(

MeterFilter.denyNameStartsWith(KafkaBinderMetrics.OFFSET_LAG_METRIC_NAME));

}

}

More details on how to suppress meters selectively can be found here.

1.7. Tombstone Records (null record values)

When using compacted topics, a record with a null value (also called a tombstone record) represents the deletion of a key.

To receive such messages in a Spring Cloud Stream function, you can use the following strategy.

@Bean

public Function<Message<Person>, String> myFunction() {

return value -> {

Object v = value.getPayload();

String className = v.getClass().getName();

if (className.equals("org.springframework.kafka.support.KafkaNull")) {

// this is a tombstone record

}

else {

// continue with processing

}

};

}

1.8. Using a KafkaBindingRebalanceListener

Applications may wish to seek topics/partitions to arbitrary offsets when the partitions are initially assigned, or perform other operations on the consumer.

Starting with version 2.1, if you provide a single KafkaBindingRebalanceListener bean in the application context, it will be wired into all Kafka consumer bindings.

public interface KafkaBindingRebalanceListener {

/**

* Invoked by the container before any pending offsets are committed.

* @param bindingName the name of the binding.

* @param consumer the consumer.

* @param partitions the partitions.

*/

default void onPartitionsRevokedBeforeCommit(String bindingName, Consumer<?, ?> consumer,

Collection<TopicPartition> partitions) {

}

/**

* Invoked by the container after any pending offsets are committed.

* @param bindingName the name of the binding.

* @param consumer the consumer.

* @param partitions the partitions.

*/

default void onPartitionsRevokedAfterCommit(String bindingName, Consumer<?, ?> consumer, Collection<TopicPartition> partitions) {

}

/**

* Invoked when partitions are initially assigned or after a rebalance.

* Applications might only want to perform seek operations on an initial assignment.

* @param bindingName the name of the binding.

* @param consumer the consumer.

* @param partitions the partitions.

* @param initial true if this is the initial assignment.

*/

default void onPartitionsAssigned(String bindingName, Consumer<?, ?> consumer, Collection<TopicPartition> partitions,

boolean initial) {

}

}

You cannot set the resetOffsets consumer property to true when you provide a rebalance listener.

1.9. Retry and Dead Letter Processing

By default, when you configure retry (e.g. maxAttempts) and enableDlq in a consumer binding, these functions are performed within the binder, with no participation by the listener container or Kafka consumer.

There are situations where it is preferable to move this functionality to the listener container, such as:

-

The aggregate of retries and delays will exceed the consumer’s max.poll.interval.ms property, potentially causing a partition rebalance.

-

You wish to publish the dead letter to a different Kafka cluster.

-

You wish to add retry listeners to the error handler.

-

…
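To judge whether the first situation in the list above applies, it can help to estimate the aggregate back-off of a binding. The following is a minimal arithmetic sketch in plain Java. RetryBudgetSketch is a hypothetical class; its parameters mirror the common consumer retry properties (maxAttempts, backOffInitialInterval, backOffMultiplier, backOffMaxInterval), and it assumes that maxAttempts counts the first delivery. It ignores the time spent in the listener itself, so the real total is higher.

```java
// Illustrative arithmetic only; not part of the binder.
final class RetryBudgetSketch {

    private RetryBudgetSketch() {
    }

    // Total time spent waiting between attempts. Assumption: maxAttempts deliveries
    // imply (maxAttempts - 1) back-off delays, each capped at maxIntervalMs.
    static long aggregateBackOffMillis(int maxAttempts, long initialIntervalMs,
            double multiplier, long maxIntervalMs) {
        long total = 0;
        double delay = initialIntervalMs;
        for (int retry = 1; retry < maxAttempts; retry++) {
            total += Math.min((long) delay, maxIntervalMs);
            delay = Math.min(delay * multiplier, (double) maxIntervalMs);
        }
        return total;
    }

    // True when the waiting time alone already exceeds max.poll.interval.ms.
    static boolean exceedsPollInterval(long aggregateMs, long maxPollIntervalMs) {
        return aggregateMs > maxPollIntervalMs;
    }
}
```

With 3 attempts, a 1000 ms initial interval, a multiplier of 2.0, and a 10000 ms cap, the aggregate is 3000 ms, far below Kafka's default max.poll.interval.ms of 300000 ms. With 40 attempts and the same intervals, the aggregate is 365000 ms, which exceeds that default.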

To configure moving this functionality from the binder to the container, define a @Bean of type ListenerContainerWithDlqAndRetryCustomizer.

This interface has the following methods:

/**

* Configure the container.

* @param container the container.

* @param destinationName the destination name.

* @param group the group.

* @param dlqDestinationResolver a destination resolver for the dead letter topic (if

* enableDlq).

* @param backOff the backOff using retry properties (if configured).

* @see #retryAndDlqInBinding(String, String)

*/

void configure(AbstractMessageListenerContainer<?, ?> container, String destinationName, String group,

@Nullable BiFunction<ConsumerRecord<?, ?>, Exception, TopicPartition> dlqDestinationResolver,

@Nullable BackOff backOff);

/**

* Return false to move retries and DLQ from the binding to a customized error handler

* using the retry metadata and/or a {@code DeadLetterPublishingRecoverer} when

* configured via

* {@link #configure(AbstractMessageListenerContainer, String, String, BiFunction, BackOff)}.

* @param destinationName the destination name.

* @param group the group.

* @return false to disable retries and DLQ in the binding

*/

default boolean retryAndDlqInBinding(String destinationName, String group) {

return true;

}

The destination resolver and BackOff are created from the binding properties (if configured). The KafkaTemplate uses configuration from spring.kafka…. properties. You can then use these to create a custom error handler and dead letter publisher; for example:

@Bean

ListenerContainerWithDlqAndRetryCustomizer cust(KafkaTemplate<?, ?> template) {

return new ListenerContainerWithDlqAndRetryCustomizer() {

@Override

public void configure(AbstractMessageListenerContainer<?, ?> container, String destinationName,

String group,

@Nullable BiFunction<ConsumerRecord<?, ?>, Exception, TopicPartition> dlqDestinationResolver,

@Nullable BackOff backOff) {

if (destinationName.equals("topicWithLongTotalRetryConfig")) {

ConsumerRecordRecoverer dlpr = new DeadLetterPublishingRecoverer(template,

dlqDestinationResolver);

container.setCommonErrorHandler(new DefaultErrorHandler(dlpr, backOff));

}

}

@Override

public boolean retryAndDlqInBinding(String destinationName, String group) {

return !destinationName.contains("topicWithLongTotalRetryConfig");

}

};

}

Now, only a single retry delay needs to be less than the consumer’s max.poll.interval.ms property, rather than the aggregate of all retries and delays.

When working with several binders, the 'ListenerContainerWithDlqAndRetryCustomizer' bean gets overridden by the 'DefaultBinderFactory'. For the bean to apply, you need to use a 'BinderCustomizer' to set the container customizer (See [binder-customizer]):

@Bean

public BinderCustomizer binderCustomizer(ListenerContainerWithDlqAndRetryCustomizer containerCustomizer) {

return (binder, binderName) -> {

if (binder instanceof KafkaMessageChannelBinder kafkaMessageChannelBinder) {

kafkaMessageChannelBinder.setContainerCustomizer(containerCustomizer);

}

else if (binder instanceof KStreamBinder) {

...

}

else if (binder instanceof RabbitMessageChannelBinder) {

...

}

};

}

1.10. Customizing Consumer and Producer configuration

If you want advanced customization of consumer and producer configuration that is used for creating ConsumerFactory and ProducerFactory in Kafka, you can implement the following customizers.

-

ConsumerConfigCustomizer

-

ProducerConfigCustomizer

Both of these interfaces provide a way to configure the config map used for consumer and producer properties.

For example, if you want to gain access to a bean that is defined at the application level, you can inject that in the implementation of the configure method.

When the binder discovers that these customizers are available as beans, it will invoke the configure method right before creating the consumer and producer factories.

Both of these interfaces also provide access to both the binding and destination names so that they can be accessed while customizing producer and consumer properties.

1.11. Customizing AdminClient Configuration

As with consumer and producer config customization above, applications can also customize the configuration for admin clients by providing an AdminClientConfigCustomizer.

The configure method of AdminClientConfigCustomizer provides access to the admin client properties, which you can use to define further customization.

The binder’s Kafka topic provisioner gives the highest precedence to the properties given through this customizer.

Here is an example of providing this customizer bean.

@Bean

public AdminClientConfigCustomizer adminClientConfigCustomizer() {

return props -> {

props.put(CommonClientConfigs.SECURITY_PROTOCOL_CONFIG, "SASL_SSL");

};

}

1.12. Custom Kafka Binder Health Indicator

Kafka binder activates a default health indicator when Spring Boot actuator is on the classpath.

This health indicator checks the health of the binder and any communication issues with the Kafka broker.

If an application wants to disable this default health check implementation and include a custom implementation, then it can provide an implementation of the KafkaBinderHealth interface.

KafkaBinderHealth is a marker interface that extends from HealthIndicator.

In the custom implementation, it must provide an implementation for the health() method.

The custom implementation must be present in the application configuration as a bean.

When the binder discovers the custom implementation, it will use that instead of the default implementation.

Here is an example of such a custom implementation bean in the application.

@Bean

public KafkaBinderHealth kafkaBinderHealthIndicator() {

return new KafkaBinderHealth() {

@Override

public Health health() {

// custom implementation details.

}

};

}

1.13. Dead-Letter Topic Processing

1.13.1. Dead-Letter Topic Partition Selection

By default, records are published to the Dead-Letter topic using the same partition as the original record. This means the Dead-Letter topic must have at least as many partitions as the original topic.

To change this behavior, add a DlqPartitionFunction implementation as a @Bean to the application context.

Only one such bean can be present.

The function is provided with the consumer group, the failed ConsumerRecord and the exception.

For example, if you always want to route to partition 0, you might use:

@Bean

public DlqPartitionFunction partitionFunction() {

return (group, record, ex) -> 0;

}

If you set a consumer binding’s dlqPartitions property to 1 (and the binder’s minPartitionCount is equal to 1), there is no need to supply a DlqPartitionFunction; the framework will always use partition 0.

If you set a consumer binding’s dlqPartitions property to a value greater than 1 (or the binder’s minPartitionCount is greater than 1), you must provide a DlqPartitionFunction bean, even if the partition count is the same as the original topic’s.
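The two cases above can be restated as a small decision rule. The following plain-Java sketch is illustrative only: DlqPartitionRuleSketch is a hypothetical class, it assumes that dlqPartitions has been set explicitly on the binding, and it merely mirrors the wording above rather than the binder's internal logic.

```java
// Illustrative decision rule only, restating the two cases described in the text.
final class DlqPartitionRuleSketch {

    private DlqPartitionRuleSketch() {
    }

    // Returns true when a DlqPartitionFunction bean must be supplied.
    // Assumption: dlqPartitions was set explicitly on the consumer binding.
    static boolean requiresDlqPartitionFunction(int dlqPartitions, int minPartitionCount) {
        return dlqPartitions > 1 || minPartitionCount > 1;
    }
}
```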


It is also possible to define a custom name for the DLQ topic.

In order to do so, add an implementation of DlqDestinationResolver as a @Bean to the application context.

When the binder detects such a bean, it takes precedence; otherwise, the binder uses the dlqName property.

If neither of these is found, it defaults to error.<destination>.<group>.
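The lookup order just described (resolver bean first, then the dlqName property, then the default name) can be sketched in plain Java as follows. DlqNameSketch and its dlqTopic method are hypothetical names used for illustration; this is not the binder's actual code.

```java
import java.util.Optional;

// Illustrative sketch of the lookup order; not the binder's actual code.
final class DlqNameSketch {

    private DlqNameSketch() {
    }

    // resolverResult: name returned by a DlqDestinationResolver bean, if one exists.
    // dlqName: value of the dlqName consumer property, or null when unset.
    static String dlqTopic(Optional<String> resolverResult, String dlqName,
            String destination, String group) {
        if (resolverResult.isPresent()) {
            return resolverResult.get();
        }
        if (dlqName != null && !dlqName.isEmpty()) {
            return dlqName;
        }
        // Default naming convention: error.<destination>.<group>
        return "error." + destination + "." + group;
    }
}
```

For a destination of so8400out and a group of so8400, with neither a resolver bean nor dlqName configured, the sketch yields error.so8400out.so8400.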

Here is an example of DlqDestinationResolver as a @Bean.

@Bean

public DlqDestinationResolver dlqDestinationResolver() {

return (rec, ex) -> {

if (rec.topic().equals("word1")) {

return "topic1-dlq";

}

else {

return "topic2-dlq";

}

};

}

One important thing to keep in mind when providing an implementation for DlqDestinationResolver is that the provisioner in the binder will not auto create topics for the application.

This is because there is no way for the binder to infer the names of all the DLQ topics the implementation might send to.

Therefore, if you provide DLQ names using this strategy, it is the application’s responsibility to ensure that those topics are created beforehand.

1.13.2. Handling Records in a Dead-Letter Topic

Because the framework cannot anticipate how users would want to dispose of dead-lettered messages, it does not provide any standard mechanism to handle them. If the reason for the dead-lettering is transient, you may wish to route the messages back to the original topic. However, if the problem is a permanent issue, that could cause an infinite loop. The sample Spring Boot application within this topic is an example of how to route those messages back to the original topic, but it moves them to a “parking lot” topic after three attempts. The application is another spring-cloud-stream application that reads from the dead-letter topic. It exits when no messages are received for 5 seconds.

The examples assume the original destination is so8400out and the consumer group is so8400.

There are a couple of strategies to consider:

-

Consider running the rerouting only when the main application is not running. Otherwise, the retries for transient errors are used up very quickly.

-

Alternatively, use a two-stage approach: Use this application to route to a third topic and another to route from there back to the main topic.

The following code listings show the sample application:

spring.cloud.stream.bindings.input.group=so8400replay

spring.cloud.stream.bindings.input.destination=error.so8400out.so8400

spring.cloud.stream.bindings.output.destination=so8400out

spring.cloud.stream.bindings.parkingLot.destination=so8400in.parkingLot

spring.cloud.stream.kafka.binder.configuration.auto.offset.reset=earliest

spring.cloud.stream.kafka.binder.headers=x-retries

@SpringBootApplication

public class ReRouteDlqKApplication implements CommandLineRunner {

private static final String X_RETRIES_HEADER = "x-retries";

public static void main(String[] args) {

SpringApplication.run(ReRouteDlqKApplication.class, args).close();

}

private final AtomicInteger processed = new AtomicInteger();

@Autowired

private StreamBridge streamBridge;

@Bean

public Function<Message<?>, Message<?>> reRoute() {

return failed -> {

processed.incrementAndGet();

Integer retries = failed.getHeaders().get(X_RETRIES_HEADER, Integer.class);

if (retries == null) {

System.out.println("First retry for " + failed);

return MessageBuilder.fromMessage(failed)

.setHeader(X_RETRIES_HEADER, 1)

.setHeader(BinderHeaders.PARTITION_OVERRIDE,

failed.getHeaders().get(KafkaHeaders.RECEIVED_PARTITION))

.build();

}

else if (retries < 3) {

System.out.println("Another retry for " + failed);

return MessageBuilder.fromMessage(failed)

.setHeader(X_RETRIES_HEADER, retries + 1)

.setHeader(BinderHeaders.PARTITION_OVERRIDE,

failed.getHeaders().get(KafkaHeaders.RECEIVED_PARTITION))

.build();

}

else {

System.out.println("Retries exhausted for " + failed);

streamBridge.send("parkingLot", MessageBuilder.fromMessage(failed)

.setHeader(BinderHeaders.PARTITION_OVERRIDE,

failed.getHeaders().get(KafkaHeaders.RECEIVED_PARTITION))

.build());

}

return null;

};

}

@Override

public void run(String... args) throws Exception {

while (true) {

int count = this.processed.get();

Thread.sleep(5000);

if (count == this.processed.get()) {

System.out.println("Idle, exiting");

return;

}

}

}

}

1.14. Partitioning with the Kafka Binder

Apache Kafka supports topic partitioning natively.

Sometimes it is advantageous to send data to specific partitions — for example, when you want to strictly order message processing (all messages for a particular customer should go to the same partition).

The following example shows how to configure the producer and consumer side:

@SpringBootApplication

public class KafkaPartitionProducerApplication {

private static final Random RANDOM = new Random(System.currentTimeMillis());

private static final String[] data = new String[] {

"foo1", "bar1", "qux1",

"foo2", "bar2", "qux2",

"foo3", "bar3", "qux3",

"foo4", "bar4", "qux4",

};

public static void main(String[] args) {

new SpringApplicationBuilder(KafkaPartitionProducerApplication.class)

.web(WebApplicationType.NONE)

.run(args);

}

@Bean

public Supplier<Message<?>> generate() {

return () -> {

String value = data[RANDOM.nextInt(data.length)];

System.out.println("Sending: " + value);

return MessageBuilder.withPayload(value)

.setHeader("partitionKey", value)

.build();

};

}

}

spring:

  cloud:

    stream:

      bindings:

        generate-out-0:

          destination: partitioned.topic

          producer:

            partition-key-expression: headers['partitionKey']

            partition-count: 12

It is important to keep in mind that, since Apache Kafka supports partitioning natively, there is no need to rely on binder partitioning as described above unless you are using custom partition keys as in the example or an expression that involves the payload itself.

The binder-provided partitioning selection is otherwise intended for middleware technologies that do not support native partitioning.

Note that the above example uses a custom key called partitionKey, which is the determining factor for the partition; in this case, it is therefore appropriate to use binder partitioning.

When using native Kafka partitioning, that is, when you do not provide the partition-key-expression, Apache Kafka selects a partition, which by default is the hash value of the record key over the available number of partitions.

To add a key to an outbound record, set the KafkaHeaders.KEY header to the desired key value in a spring-messaging Message<?>.

By default, when no record key is provided, Apache Kafka will choose a partition based on the logic described in the Apache Kafka Documentation.


The topic must be provisioned to have enough partitions to achieve the desired concurrency for all consumer groups.

The above configuration supports up to 12 consumer instances (6 if their concurrency is 2, 4 if their concurrency is 3, and so on).

It is generally best to “over-provision” the partitions to allow for future increases in consumers or concurrency.


The preceding configuration uses the default partitioning (key.hashCode() % partitionCount).

This may or may not provide a suitably balanced algorithm, depending on the key values. In particular, note that this partitioning strategy differs from the default used by a standalone Kafka producer - such as the one used by Kafka Streams, meaning that the same key value may balance differently across partitions when produced by those clients.

You can override this default by using the partitionSelectorExpression or partitionSelectorClass properties.
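To make the default formula above concrete, the following plain-Java sketch computes a partition from a key's hash code and a partition count. KeyHashPartitionSketch is a hypothetical class for illustration; the use of Math.floorMod to keep the result non-negative is an assumption of this sketch, and the binder's own selector may treat negative hash codes differently.

```java
// Illustrative sketch of "hash of the key modulo partition count";
// not the binder's actual partition selector.
final class KeyHashPartitionSketch {

    private KeyHashPartitionSketch() {
    }

    // Math.floorMod keeps the result in [0, partitionCount) even for negative hash codes.
    static int partitionFor(Object key, int partitionCount) {
        return Math.floorMod(key.hashCode(), partitionCount);
    }

    public static void main(String[] args) {
        // Keys taken from the producer example; 12 matches its partition-count.
        for (String key : new String[] {"foo1", "bar1", "qux1"}) {
            System.out.println(key + " -> partition " + partitionFor(key, 12));
        }
    }
}
```

Because the result depends only on the key and the partition count, the same key always maps to the same partition as long as the partition count does not change.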


Since partitions are natively handled by Kafka, no special configuration is needed on the consumer side. Kafka allocates partitions across the instances.

The partition count of a Kafka topic may change at runtime (for example, due to an administration task). The calculated partitions will then differ (for example, new partitions will be used). Since version 4.0.3 of Spring Cloud Stream, runtime changes of the partition count are supported. See also the spring.kafka.producer.properties.metadata.max.age.ms property to configure the update interval. Due to some limitations, this mechanism cannot be used with a partition-key-expression that references the payload of a message; it is disabled in that case. The overall behavior is disabled by default and can be enabled with the producer.dynamicPartitionUpdatesEnabled=true configuration property.

The following Spring Boot application listens to a Kafka stream and prints (to the console) the partition ID to which each message goes:

@SpringBootApplication

public class KafkaPartitionConsumerApplication {

public static void main(String[] args) {

new SpringApplicationBuilder(KafkaPartitionConsumerApplication.class)

.web(WebApplicationType.NONE)

.run(args);

}

@Bean

public Consumer<Message<String>> listen() {

return message -> {

int partition = (int) message.getHeaders().get(KafkaHeaders.RECEIVED_PARTITION);

System.out.println(message + " received from partition " + partition);

};

}

}

spring:

  cloud:

    stream:

      bindings:

        listen-in-0:

          destination: partitioned.topic

          group: myGroup

You can add instances as needed.

Kafka rebalances the partition allocations.

If the instance count (or instance count * concurrency) exceeds the number of partitions, some consumers are idle.
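The relationship between partition count, instance count, and concurrency can be checked with simple arithmetic. The following plain-Java sketch is illustrative only; ConsumerCapacitySketch and its methods are hypothetical names, and the sketch assumes that every instance uses the same concurrency.

```java
// Illustrative arithmetic only; assumes all instances share the same concurrency.
final class ConsumerCapacitySketch {

    private ConsumerCapacitySketch() {
    }

    // Largest number of instances for which every consumer thread can own a partition.
    static int maxBusyInstances(int partitions, int concurrency) {
        return partitions / concurrency;
    }

    // Number of consumer threads left without a partition.
    static int idleConsumers(int partitions, int instances, int concurrency) {
        return Math.max(0, instances * concurrency - partitions);
    }
}
```

With the 12 partitions configured earlier, a concurrency of 2 allows 6 fully used instances and a concurrency of 3 allows 4. Running 5 instances with a concurrency of 3 would leave 3 consumer threads idle.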

2. Reactive Kafka Binder

Kafka binder in Spring Cloud Stream provides a dedicated reactive binder based on the Reactor Kafka project.

This reactive Kafka binder enables full end-to-end reactive capabilities such as backpressure, reactive streams etc. in applications based on Apache Kafka.

When your Spring Cloud Stream Kafka application is written using reactive types (Flux, Mono etc.), it is recommended to use this reactive Kafka binder instead of the regular message channel based Kafka binder.

2.1. Maven Coordinates

The following are the Maven coordinates for the reactive Kafka binder.

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-stream-binder-kafka-reactive</artifactId>

</dependency>

2.2. Basic Example using the Reactive Kafka Binder

In this section, we show some basic code snippets for writing a reactive Kafka application using the reactive binder and details around them.

@Bean

public Function<Flux<String>, Flux<String>> uppercase() {

return s -> s.map(String::toUpperCase);

}

You can use the above uppercase function with both the message channel based Kafka binder (spring-cloud-stream-binder-kafka) and the reactive Kafka binder (spring-cloud-stream-binder-kafka-reactive), the topic of discussion in this section.

When using this function with the regular Kafka binder, although you are using reactive types in the application (i.e., in the uppercase function), you only get the reactive streams within the execution of your function.

Outside the function’s execution context, there are no reactive benefits since the underlying binder is not based on the reactive stack.

Therefore, although this might look like it is bringing a full end-to-end reactive stack, this application is only partially reactive.

Now assume that you are using the proper reactive binder for Kafka - spring-cloud-stream-binder-kafka-reactive with the above function’s application.

This binder implementation will give the full reactive benefits all the way from consumption on the top end to publishing at the bottom end of the chain.

This is because the underlying binder is built on top of Reactor Kafka's core APIs.

On the consumer side, it makes use of the KafkaReceiver which is a reactive implementation of a Kafka consumer.

Similarly, on the producer side, it uses KafkaSender API which is the reactive implementation of a Kafka producer.

Since the foundations of the reactive Kafka binder are built upon a proper reactive Kafka API, applications get the full benefits of using reactive technologies.

Things like automatic back pressure, among other reactive capabilities, are built-in for the application when using this reactive Kafka binder.

Starting with version 4.0.2, you can customize the ReceiverOptions and SenderOptions by providing one or more ReceiverOptionsCustomizer or SenderOptionsCustomizer beans respectively.

They are BiFunctions that receive the binding name and the initial options and return the customized options.

The interfaces extend Ordered so the customizers will be applied in the order required, when more than one are present.
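The ordering contract described above can be pictured with a small framework-free model. The sketch below uses only the JDK: the options are modeled as a plain Map, and OrderedCustomizer, setting, and applyAll are hypothetical stand-ins invented for illustration, not the binder's actual types. It assumes that customizers with lower order values are applied first, so that a later customizer can override what an earlier one set.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.BiFunction;

// Framework-free model of ordered customizers. The options are modeled as a plain
// Map; OrderedCustomizer is a hypothetical stand-in, not the binder's interface.
class CustomizerOrderingSketch {

    // Receives (bindingName, options) and returns the customized options.
    interface OrderedCustomizer extends BiFunction<String, Map<String, Object>, Map<String, Object>> {
        int getOrder();
    }

    // Builds a customizer that sets one property and leaves its input map untouched.
    static OrderedCustomizer setting(int order, String key, Object value) {
        return new OrderedCustomizer() {
            @Override
            public int getOrder() {
                return order;
            }

            @Override
            public Map<String, Object> apply(String bindingName, Map<String, Object> options) {
                Map<String, Object> copy = new TreeMap<>(options);
                copy.put(key, value);
                return copy;
            }
        };
    }

    // Sorts by order value (lowest first) and threads the options through each customizer.
    static Map<String, Object> applyAll(String bindingName, Map<String, Object> initial,
            List<OrderedCustomizer> customizers) {
        List<OrderedCustomizer> sorted = new ArrayList<>(customizers);
        sorted.sort(Comparator.comparingInt(OrderedCustomizer::getOrder));
        Map<String, Object> current = initial;
        for (OrderedCustomizer customizer : sorted) {
            current = customizer.apply(bindingName, current);
        }
        return current;
    }

    public static void main(String[] args) {
        List<OrderedCustomizer> customizers = List.of(
                setting(2, "max.poll.records", 50),
                setting(1, "max.poll.records", 100),
                setting(1, "client.id", "demo"));
        System.out.println(applyAll("uppercase-in-0", Map.of(), customizers));
    }
}
```

In this model, the customizer with order 2 runs last, so its value for max.poll.records is the one that survives even though it was registered first.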

The binder does not commit offsets by default.

Starting with version 4.0.2, the KafkaHeaders.ACKNOWLEDGMENT header contains a ReceiverOffset object which allows you to cause the offset to be committed by calling its acknowledge() or commit() methods.


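@Bean
public Consumer<Flux<Message<String>>> consume() {
    return flux -> flux
            .doOnNext(msg -> {
                process(msg.getPayload());
                // Acknowledge the offset once the record has been processed.
                msg.getHeaders().get(KafkaHeaders.ACKNOWLEDGMENT, ReceiverOffset.class).acknowledge();
            })
            .subscribe();
}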

Refer to the reactor-kafka documentation and javadocs for more information.

In addition, starting with version 4.0.3, the Kafka consumer property reactiveAtMostOnce can be set to true and the binder will automatically commit the offsets before records returned by each poll are processed.

Also, starting with version 4.0.3, you can set the consumer property reactiveAutoCommit to true and the binder will automatically commit the offsets after the records returned by each poll are processed.

In these cases, the acknowledgment header is not present.

Version 4.0.2 also provided reactiveAutoCommit, but the implementation was incorrect; it behaved similarly to reactiveAtMostOnce.
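The practical difference between the two properties is what happens when processing fails partway through a poll. The following JDK-only simulation is a simplified sketch that follows the wording above (commit before processing versus commit after processing); it does not use the binder or Reactor Kafka, and CommitTimingSketch, Outcome, and pollOnce are hypothetical names introduced for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Framework-free simulation of when the offset is committed relative to processing.
// One poll returns the records at offsets 0..batchSize-1; the handler fails at failAtOffset.
class CommitTimingSketch {

    // What one simulated poll processed and which offset a restart would resume from.
    static final class Outcome {
        final List<Integer> processed = new ArrayList<>();
        int committedOffset = 0;
    }

    // commitBeforeProcessing = true models reactiveAtMostOnce; false models reactiveAutoCommit.
    static Outcome pollOnce(int batchSize, int failAtOffset, boolean commitBeforeProcessing) {
        Outcome outcome = new Outcome();
        if (commitBeforeProcessing) {
            outcome.committedOffset = batchSize; // committed before any record is handled
        }
        for (int offset = 0; offset < batchSize; offset++) {
            if (offset == failAtOffset) {
                return outcome; // simulated crash: the remaining records are not processed
            }
            outcome.processed.add(offset);
        }
        if (!commitBeforeProcessing) {
            outcome.committedOffset = batchSize; // committed only after the whole batch succeeded
        }
        return outcome;
    }

    public static void main(String[] args) {
        Outcome atMostOnce = pollOnce(5, 3, true);
        Outcome autoCommit = pollOnce(5, 3, false);
        System.out.println("atMostOnce: processed=" + atMostOnce.processed
                + ", restart at " + atMostOnce.committedOffset);
        System.out.println("autoCommit: processed=" + autoCommit.processed
                + ", restart at " + autoCommit.committedOffset);
    }
}
```

In the at-most-once case, a restart resumes after the batch, so the records at offsets 3 and 4 are never processed. In the auto-commit case, a restart resumes from offset 0, so the records at offsets 0 to 2 are processed a second time.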


The following is an example of how to use reactiveAutoCommit.

@Bean
Consumer<Flux<Flux<ConsumerRecord<?, String>>>> input() {
    return flux -> flux
            .doOnNext(inner -> inner
                    .doOnNext(val -> {
                        log.info(val.value());
                    })
                    .subscribe())
            .subscribe();
}

Note that reactor-kafka returns a Flux<Flux<ConsumerRecord<?, ?>>> when using auto commit.

Given that Spring has no access to the contents of the inner flux, the application must deal with the native ConsumerRecord; there is no message conversion or conversion service applied to the contents.

This requires the use of native decoding (by specifying a Deserializer of the appropriate type in the configuration) to return record keys/values of the desired types.
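As a rough sketch, the configuration for the input function above might look like the following. The binding name input-in-0 is derived from the function name, the choice of StringDeserializer is only an example, and the placement of reactiveAutoCommit under the Kafka-specific consumer properties is an assumption based on the description above.

```properties
# Commit offsets automatically after each poll's records are processed.
spring.cloud.stream.kafka.bindings.input-in-0.consumer.reactiveAutoCommit=true
# Let the Kafka client deserialize the records (native decoding).
spring.cloud.stream.bindings.input-in-0.consumer.use-native-decoding=true
spring.cloud.stream.kafka.bindings.input-in-0.consumer.configuration.value.deserializer=org.apache.kafka.common.serialization.StringDeserializer
```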

2.3. Consuming Records in the Raw Format

In the above uppercase function, we consume the record as Flux<String> and then produce it as Flux<String>.

There might be occasions in which you need to receive the record in the original received format - the ReceiverRecord.

Here is such a function.

@Bean
public Function<Flux<ReceiverRecord<byte[], byte[]>>, Flux<String>> lowercase() {
    return s -> s.map(rec -> new String(rec.value()).toLowerCase());
}

In this function, note that we are consuming the record as Flux<ReceiverRecord<byte[], byte[]>> and then producing it as Flux<String>.

ReceiverRecord is the basic received record which is a specialized Kafka ConsumerRecord in Reactor Kafka.

When using the reactive Kafka binder, the above function will give you access to the ReceiverRecord type for each incoming record.

However, in this case, you need to provide a custom implementation for a RecordMessageConverter.

By default, the reactive Kafka binder uses a MessagingMessageConverter that converts the payload and headers from the ConsumerRecord.

Therefore, by the time your handler method receives it, the payload is already extracted from the received record and passed on to the method, as in the case of the first function we looked at above.

By providing a custom RecordMessageConverter implementation in the application, you can override the default behavior.

For example, if you want to consume the record as raw Flux<ReceiverRecord<byte[], byte[]>>, then you can provide the following bean definition in the application.

@Bean
RecordMessageConverter fullRawReceivedRecord() {
    return new RecordMessageConverter() {

        private final RecordMessageConverter converter = new MessagingMessageConverter();

        @Override
        public Message<?> toMessage(ConsumerRecord<?, ?> record, Acknowledgment acknowledgment,
                Consumer<?, ?> consumer, Type payloadType) {
            return MessageBuilder.withPayload(record).build();
        }

        @Override
        public ProducerRecord<?, ?> fromMessage(Message<?> message, String defaultTopic) {
            return this.converter.fromMessage(message, defaultTopic);
        }

    };
}

Then, you need to instruct the framework to use this converter for the required binding.

Here is an example based on our lowercase function.

spring.cloud.stream.kafka.bindings.lowercase-in-0.consumer.converterBeanName=fullRawReceivedRecord

lowercase-in-0 is the input binding name for our lowercase function.

For the outbound (lowercase-out-0), we still use the regular MessagingMessageConverter.

In the toMessage implementation above, we receive the raw ConsumerRecord (ReceiverRecord since we are in a reactive binder context) and then wrap it inside a Message.

The message payload, which is the ReceiverRecord, is then provided to the user method.

If reactiveAutoCommit is false (default), call rec.receiverOffset().acknowledge() (or commit()) to cause the offset to be committed; if reactiveAutoCommit is true, the flux supplies ConsumerRecords instead.

Refer to the reactor-kafka documentation and javadocs for more information.

2.4. Concurrency

When using reactive functions with the reactive Kafka binder, if you set concurrency on the consumer binding, then the binder creates as many dedicated KafkaReceiver objects as provided by the concurrency value.

In other words, this creates multiple reactive streams with separate Flux implementations.

This could be useful when you are consuming records from a partitioned topic.

For example, assume that the incoming topic has at least three partitions. Then you can set the following property.

spring.cloud.stream.bindings.lowercase-in-0.consumer.concurrency=3

That will create three dedicated KafkaReceiver objects that generate three separate Flux implementations and then stream them to the handler method.

2.5. Multiplex

Starting with version 4.0.3, the common consumer property multiplex is now supported by the reactive binder, where a single binding can consume from multiple topics.

When false (default), a separate binding is created for each topic specified in a comma-delimited list in the common destination property.
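As an illustration, the following sketch enables multiplexing for the lowercase function's input binding, so that one binding consumes from both listed topics. The topic names topic-a and topic-b are placeholders.

```properties
# Two topics in the common destination property, consumed by a single binding.
spring.cloud.stream.bindings.lowercase-in-0.destination=topic-a,topic-b
spring.cloud.stream.bindings.lowercase-in-0.consumer.multiplex=true
```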

2.6. Destination is Pattern

Starting with version 4.0.3, the destination-is-pattern Kafka binding consumer property is now supported.

The receiver options are configured with a regex Pattern, allowing the binding to consume from any topic that matches the pattern.
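To get a feel for which topics a given pattern would select, you can try the regex with plain java.util.regex before putting it in the destination property. The sketch below is JDK-only and assumes that a topic is selected when its full name matches the pattern; TopicPatternSketch, matchingTopics, and the topic names are hypothetical.

```java
import java.util.List;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

// Checks which topic names a destination pattern would select, assuming full-name matching.
class TopicPatternSketch {

    // Returns the topics whose entire name matches the given regex.
    static List<String> matchingTopics(String regex, List<String> topics) {
        Pattern pattern = Pattern.compile(regex);
        return topics.stream()
                .filter(topic -> pattern.matcher(topic).matches())
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> topics = List.of("orders.eu", "orders.us", "payments.eu", "orders");
        // "orders\\..*" requires a literal dot after "orders", so the bare "orders" topic is excluded.
        System.out.println(matchingTopics("orders\\..*", topics));
    }
}
```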

2.7. Sender Result Channel

Starting with version 4.0.3, you can configure the resultMetadataChannel to receive SenderResult<?> instances to determine the success or failure of sends.

The SenderResult contains correlationMetadata to allow you to correlate results with sends; it also contains RecordMetadata, which indicates the TopicPartition and offset of the sent record.

The resultMetadataChannel must be a FluxMessageChannel instance.

Here is an example of how to use this feature, with correlation metadata of type Integer:

@Bean
FluxMessageChannel sendResults() {
    return new FluxMessageChannel();
}

@ServiceActivator(inputChannel = "sendResults")
void handleResults(SenderResult<Integer> result) {
    if (result.exception() != null) {
        failureFor(result);
    }
    else {
        successFor(result);
    }
}
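The channel bean above still has to be associated with an output binding. A possible configuration is sketched below; it assumes that resultMetadataChannel is a Kafka-specific producer binding property that takes the channel bean name, and that words1 is the name of the output binding.

```properties
# Route send results for the words1 output binding to the sendResults channel bean.
spring.cloud.stream.kafka.bindings.words1.producer.resultMetadataChannel=sendResults
```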

To set the correlation metadata on an output record, set the CORRELATION_ID header:

streamBridge.send("words1", MessageBuilder.withPayload("foobar")
        .setCorrelationId(42)
        .build());

When using the feature with a Function, the function output type must be a Message<?> with the correlation id header set to the desired value.

Metadata should be unique, at least for the duration of the send.
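One simple way to keep correlation metadata unique for the duration of a send is to draw it from a counter and remember what is still in flight. The following JDK-only sketch illustrates that idea; SendCorrelationSketch and its methods are hypothetical helpers, not part of the binder.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hands out unique Integer correlation ids and tracks the sends that are still in flight.
class SendCorrelationSketch {

    private final AtomicInteger nextId = new AtomicInteger();
    private final Map<Integer, String> inFlight = new ConcurrentHashMap<>();

    // Registers a payload before it is sent and returns the id to use as correlation metadata.
    int register(String payload) {
        int id = nextId.incrementAndGet();
        inFlight.put(id, payload);
        return id;
    }

    // Called when a result arrives; returns the payload it belongs to, or null if the id is unknown.
    String complete(int correlationId) {
        return inFlight.remove(correlationId);
    }

    // Number of sends that have not been resolved yet.
    int pending() {
        return inFlight.size();
    }

    public static void main(String[] args) {
        SendCorrelationSketch tracker = new SendCorrelationSketch();
        int first = tracker.register("foo");
        int second = tracker.register("bar");
        System.out.println(tracker.complete(second) + " resolved, pending=" + tracker.pending()
                + ", first id=" + first);
    }
}
```

In an application, the value returned by register would be passed to setCorrelationId(...) when building the message, and the handler on the result channel would call complete with the value from result.correlationMetadata().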

3. Kafka Streams Binder

3.1. Usage

To use the Kafka Streams binder, add it to your Spring Cloud Stream application using the following Maven coordinates:

<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-stream-binder-kafka-streams</artifactId>
</dependency>

A quick way to bootstrap a new project for the Kafka Streams binder is to use Spring Initializr and then select "Cloud Streams" and "Spring for Kafka Streams".

3.2. Overview

Spring Cloud Stream includes a binder implementation designed explicitly for Apache Kafka Streams binding. With this native integration, a Spring Cloud Stream "processor" application can directly use the Apache Kafka Streams APIs in the core business logic.

Kafka Streams binder implementation builds on the foundations provided by the Spring for Apache Kafka project.

Kafka Streams binder provides binding capabilities for the three major types in Kafka Streams - KStream, KTable and GlobalKTable.

Kafka Streams applications typically follow a model in which records are read from an inbound topic, business logic is applied, and the transformed records are written to an outbound topic. Alternatively, a Processor application with no outbound destination can be defined as well.

In the following sections, we are going to look at the details of Spring Cloud Stream’s integration with Kafka Streams.

3.3. Programming Model

When using the programming model provided by the Kafka Streams binder, you can use either the high-level Streams DSL alone or a mix of the DSL and the lower-level Processor API.

When mixing the higher-level and lower-level APIs, this is usually achieved by invoking the transform or process API methods on KStream.

3.3.1. Functional Style

Starting with Spring Cloud Stream 3.0.0, Kafka Streams binder allows the applications to be designed and developed using the functional programming style that is available in Java 8.

This means that the applications can be concisely represented as a lambda expression of types java.util.function.Function or java.util.function.Consumer.

Let’s take a very basic example.

@SpringBootApplication
public class SimpleConsumerApplication {

    @Bean
    public java.util.function.Consumer<KStream<Object, String>> process() {
        return input ->
                input.foreach((key, value) -> {
                    System.out.println("Key: " + key + " Value: " + value);
                });
    }
}

Albeit simple, this is a complete standalone Spring Boot application that is leveraging Kafka Streams for stream processing.

This is a consumer application with no outbound binding and only a single inbound binding.

The application consumes data and it simply logs the information from the KStream key and value on the standard output.