3.2.8.RELEASE

Copyright © 2004-2013

Table of Contents

- I. Overview of Spring Framework

- II. What's New in Spring 3

- 2. New Features and Enhancements in Spring Framework 3.0

- 2.1. Java 5

- 2.2. Improved documentation

- 2.3. New articles and tutorials

- 2.4. New module organization and build system

- 2.5. Overview of new features

- 2.5.1. Core APIs updated for Java 5

- 2.5.2. Spring Expression Language

- 2.5.3. The Inversion of Control (IoC) container

- 2.5.4. General purpose type conversion system and field formatting system

- 2.5.5. The Data Tier

- 2.5.6. The Web Tier

- 2.5.7. Declarative model validation

- 2.5.8. Early support for Java EE 6

- 2.5.9. Support for embedded databases

- 3. New Features and Enhancements in Spring Framework 3.1

- 3.1. Cache Abstraction

- 3.2. Bean Definition Profiles

- 3.3. Environment Abstraction

- 3.4. PropertySource Abstraction

- 3.5. Code equivalents for Spring's XML namespaces

- 3.6. Support for Hibernate 4.x

- 3.7. TestContext framework support for @Configuration classes and bean definition profiles

- 3.8. c: namespace for more concise constructor injection

- 3.9. Support for injection against non-standard JavaBeans setters

- 3.10. Support for Servlet 3 code-based configuration of Servlet Container

- 3.11. Support for Servlet 3 MultipartResolver

- 3.12. JPA EntityManagerFactory bootstrapping without persistence.xml

- 3.13. New HandlerMethod-based Support Classes For Annotated Controller Processing

- 3.14. "consumes" and "produces" conditions in @RequestMapping

- 3.15. Flash Attributes and RedirectAttributes

- 3.16. URI Template Variable Enhancements

- 3.17. @Valid On @RequestBody Controller Method Arguments

- 3.18. @RequestPart Annotation On Controller Method Arguments

- 3.19. UriComponentsBuilder and UriComponents

- 4. New Features and Enhancements in Spring Framework 3.2

- 4.1. Support for Servlet 3 based asynchronous request processing

- 4.2. Spring MVC Test framework

- 4.3. Content negotiation improvements

- 4.4. @ControllerAdvice annotation

- 4.5. Matrix variables

- 4.6. Abstract base class for code-based Servlet 3+ container initialization

- 4.7. ResponseEntityExceptionHandler class

- 4.8. Support for generic types in the RestTemplate and in @RequestBody arguments

- 4.9. Jackson JSON 2 and related improvements

- 4.10. Tiles 3

- 4.11. @RequestBody improvements

- 4.12. HTTP PATCH method

- 4.13. Excluded patterns in mapped interceptors

- 4.14. Using meta-annotations for injection points and for bean definition methods

- 4.15. Initial support for JCache 0.5

- 4.16. Support for @DateTimeFormat without Joda Time

- 4.17. Global date & time formatting

- 4.18. New Testing Features

- 4.19. Concurrency refinements across the framework

- 4.20. New Gradle-based build and move to GitHub

- 4.21. Refined Java SE 7 / OpenJDK 7 support

- III. Core Technologies

- 5. The IoC container

- 5.1. Introduction to the Spring IoC container and beans

- 5.2. Container overview

- 5.3. Bean overview

- 5.4. Dependencies

- 5.5. Bean scopes

- 5.6. Customizing the nature of a bean

- 5.7. Bean definition inheritance

- 5.8. Container Extension Points

- 5.9. Annotation-based container configuration

- 5.10. Classpath scanning and managed components

- 5.10.1. @Component and further stereotype annotations

- 5.10.2. Automatically detecting classes and registering bean definitions

- 5.10.3. Using filters to customize scanning

- 5.10.4. Defining bean metadata within components

- 5.10.5. Naming autodetected components

- 5.10.6. Providing a scope for autodetected components

- 5.10.7. Providing qualifier metadata with annotations

- 5.11. Using JSR 330 Standard Annotations

- 5.12. Java-based container configuration

- 5.13. Registering a LoadTimeWeaver

- 5.14. Additional Capabilities of the ApplicationContext

- 5.15. The BeanFactory

- 6. Resources

- 7. Validation, Data Binding, and Type Conversion

- 7.1. Introduction

- 7.2. Validation using Spring's Validator interface

- 7.3. Resolving codes to error messages

- 7.4. Bean manipulation and the BeanWrapper

- 7.5. Spring 3 Type Conversion

- 7.6. Spring 3 Field Formatting

- 7.7. Configuring a global date & time format

- 7.8. Spring 3 Validation

- 8. Spring Expression Language (SpEL)

- 8.1. Introduction

- 8.2. Feature Overview

- 8.3. Expression Evaluation using Spring's Expression Interface

- 8.4. Expression support for defining bean definitions

- 8.5. Language Reference

- 8.5.1. Literal expressions

- 8.5.2. Properties, Arrays, Lists, Maps, Indexers

- 8.5.3. Inline lists

- 8.5.4. Array construction

- 8.5.5. Methods

- 8.5.6. Operators

- 8.5.7. Assignment

- 8.5.8. Types

- 8.5.9. Constructors

- 8.5.10. Variables

- 8.5.11. Functions

- 8.5.12. Bean references

- 8.5.13. Ternary Operator (If-Then-Else)

- 8.5.14. The Elvis Operator

- 8.5.15. Safe Navigation operator

- 8.5.16. Collection Selection

- 8.5.17. Collection Projection

- 8.5.18. Expression templating

- 8.6. Classes used in the examples

- 9. Aspect Oriented Programming with Spring

- 9.1. Introduction

- 9.2. @AspectJ support

- 9.3. Schema-based AOP support

- 9.4. Choosing which AOP declaration style to use

- 9.5. Mixing aspect types

- 9.6. Proxying mechanisms

- 9.7. Programmatic creation of @AspectJ Proxies

- 9.8. Using AspectJ with Spring applications

- 9.9. Further Resources

- 10. Spring AOP APIs

- 10.1. Introduction

- 10.2. Pointcut API in Spring

- 10.3. Advice API in Spring

- 10.4. Advisor API in Spring

- 10.5. Using the ProxyFactoryBean to create AOP proxies

- 10.6. Concise proxy definitions

- 10.7. Creating AOP proxies programmatically with the ProxyFactory

- 10.8. Manipulating advised objects

- 10.9. Using the "auto-proxy" facility

- 10.10. Using TargetSources

- 10.11. Defining new Advice types

- 10.12. Further resources

- 11. Testing

- IV. Data Access

- 12. Transaction Management

- 12.1. Introduction to Spring Framework transaction management

- 12.2. Advantages of the Spring Framework's transaction support model

- 12.3. Understanding the Spring Framework transaction abstraction

- 12.4. Synchronizing resources with transactions

- 12.5. Declarative transaction management

- 12.5.1. Understanding the Spring Framework's declarative transaction implementation

- 12.5.2. Example of declarative transaction implementation

- 12.5.3. Rolling back a declarative transaction

- 12.5.4. Configuring different transactional semantics for different beans

- 12.5.5. <tx:advice/> settings

- 12.5.6. Using @Transactional

- 12.5.7. Transaction propagation

- 12.5.8. Advising transactional operations

- 12.5.9. Using @Transactional with AspectJ

- 12.6. Programmatic transaction management

- 12.7. Choosing between programmatic and declarative transaction management

- 12.8. Application server-specific integration

- 12.9. Solutions to common problems

- 12.10. Further Resources

- 13. DAO support

- 14. Data access with JDBC

- 14.1. Introduction to Spring Framework JDBC

- 14.2. Using the JDBC core classes to control basic JDBC processing and error handling

- 14.3. Controlling database connections

- 14.4. JDBC batch operations

- 14.5. Simplifying JDBC operations with the SimpleJdbc classes

- 14.5.1. Inserting data using SimpleJdbcInsert

- 14.5.2. Retrieving auto-generated keys using SimpleJdbcInsert

- 14.5.3. Specifying columns for a SimpleJdbcInsert

- 14.5.4. Using SqlParameterSource to provide parameter values

- 14.5.5. Calling a stored procedure with SimpleJdbcCall

- 14.5.6. Explicitly declaring parameters to use for a SimpleJdbcCall

- 14.5.7. How to define SqlParameters

- 14.5.8. Calling a stored function using SimpleJdbcCall

- 14.5.9. Returning ResultSet/REF Cursor from a SimpleJdbcCall

- 14.6. Modeling JDBC operations as Java objects

- 14.7. Common problems with parameter and data value handling

- 14.8. Embedded database support

- 14.8.1. Why use an embedded database?

- 14.8.2. Creating an embedded database instance using Spring XML

- 14.8.3. Creating an embedded database instance programmatically

- 14.8.4. Extending the embedded database support

- 14.8.5. Using HSQL

- 14.8.6. Using H2

- 14.8.7. Using Derby

- 14.8.8. Testing data access logic with an embedded database

- 14.9. Initializing a DataSource

- 15. Object Relational Mapping (ORM) Data Access

- 15.1. Introduction to ORM with Spring

- 15.2. General ORM integration considerations

- 15.3. Hibernate

- 15.3.1. SessionFactory setup in a Spring container

- 15.3.2. Implementing DAOs based on plain Hibernate 3 API

- 15.3.3. Declarative transaction demarcation

- 15.3.4. Programmatic transaction demarcation

- 15.3.5. Transaction management strategies

- 15.3.6. Comparing container-managed and locally defined resources

- 15.3.7. Spurious application server warnings with Hibernate

- 15.4. JDO

- 15.5. JPA

- 15.6. iBATIS SQL Maps

- 16. Marshalling XML using O/X Mappers

- V. The Web

- 17. Web MVC framework

- 17.1. Introduction to Spring Web MVC framework

- 17.2. The DispatcherServlet

- 17.3. Implementing Controllers

- 17.3.1. Defining a controller with @Controller

- 17.3.2. Mapping Requests With @RequestMapping

- 17.3.3. Defining @RequestMapping handler methods

- Supported method argument types

- Supported method return types

- Binding request parameters to method parameters with @RequestParam

- Mapping the request body with the @RequestBody annotation

- Mapping the response body with the @ResponseBody annotation

- Using HttpEntity<?>

- Using @ModelAttribute on a method

- Using @ModelAttribute on a method argument

- Using @SessionAttributes to store model attributes in the HTTP session between requests

- Specifying redirect and flash attributes

- Working with "application/x-www-form-urlencoded" data

- Mapping cookie values with the @CookieValue annotation

- Mapping request header attributes with the @RequestHeader annotation

- Method Parameters And Type Conversion

- Customizing WebDataBinder initialization

- Support for the 'Last-Modified' Response Header To Facilitate Content Caching

- 17.3.4. Asynchronous Request Processing

- 17.3.5. Testing Controllers

- 17.4. Handler mappings

- 17.5. Resolving views

- 17.6. Using flash attributes

- 17.7. Building URIs

- 17.8. Using locales

- 17.9. Using themes

- 17.10. Spring's multipart (file upload) support

- 17.11. Handling exceptions

- 17.12. Convention over configuration support

- 17.13. ETag support

- 17.14. Code-based Servlet container initialization

- 17.15. Configuring Spring MVC

- 17.15.1. Enabling the MVC Java Config or the MVC XML Namespace

- 17.15.2. Customizing the Provided Configuration

- 17.15.3. Configuring Interceptors

- 17.15.4. Configuring Content Negotiation

- 17.15.5. Configuring View Controllers

- 17.15.6. Configuring Serving of Resources

- 17.15.7. mvc:default-servlet-handler

- 17.15.8. More Spring Web MVC Resources

- 17.15.9. Advanced Customizations with MVC Java Config

- 17.15.10. Advanced Customizations with the MVC Namespace

- 18. View technologies

- 18.1. Introduction

- 18.2. JSP & JSTL

- 18.3. Tiles

- 18.4. Velocity & FreeMarker

- 18.5. XSLT

- 18.6. Document views (PDF/Excel)

- 18.7. JasperReports

- 18.8. Feed Views

- 18.9. XML Marshalling View

- 18.10. JSON Mapping View

- 19. Integrating with other web frameworks

- 20. Portlet MVC Framework

- 20.1. Introduction

- 20.2. The DispatcherPortlet

- 20.3. The ViewRendererServlet

- 20.4. Controllers

- 20.5. Handler mappings

- 20.6. Views and resolving them

- 20.7. Multipart (file upload) support

- 20.8. Handling exceptions

- 20.9. Annotation-based controller configuration

- 20.9.1. Setting up the dispatcher for annotation support

- 20.9.2. Defining a controller with @Controller

- 20.9.3. Mapping requests with @RequestMapping

- 20.9.4. Supported handler method arguments

- 20.9.5. Binding request parameters to method parameters with @RequestParam

- 20.9.6. Providing a link to data from the model with @ModelAttribute

- 20.9.7. Specifying attributes to store in a Session with @SessionAttributes

- 20.9.8. Customizing WebDataBinder initialization

- 20.10. Portlet application deployment

- VI. Integration

- 21. Remoting and web services using Spring

- 21.1. Introduction

- 21.2. Exposing services using RMI

- 21.3. Using Hessian or Burlap to remotely call services via HTTP

- 21.4. Exposing services using HTTP invokers

- 21.5. Web services

- 21.5.1. Exposing servlet-based web services using JAX-RPC

- 21.5.2. Accessing web services using JAX-RPC

- 21.5.3. Registering JAX-RPC Bean Mappings

- 21.5.4. Registering your own JAX-RPC Handler

- 21.5.5. Exposing servlet-based web services using JAX-WS

- 21.5.6. Exporting standalone web services using JAX-WS

- 21.5.7. Exporting web services using the JAX-WS RI's Spring support

- 21.5.8. Accessing web services using JAX-WS

- 21.6. JMS

- 21.7. Auto-detection is not implemented for remote interfaces

- 21.8. Considerations when choosing a technology

- 21.9. Accessing RESTful services on the Client

- 22. Enterprise JavaBeans (EJB) integration

- 23. JMS (Java Message Service)

- 24. JMX

- 25. JCA CCI

- 26. Email

- 27. Task Execution and Scheduling

- 28. Dynamic language support

- 29. Cache Abstraction

- VII. Appendices

- A. Classic Spring Usage

- B. Classic Spring AOP Usage

- B.1. Pointcut API in Spring

- B.2. Advice API in Spring

- B.3. Advisor API in Spring

- B.4. Using the ProxyFactoryBean to create AOP proxies

- B.5. Concise proxy definitions

- B.6. Creating AOP proxies programmatically with the ProxyFactory

- B.7. Manipulating advised objects

- B.8. Using the "autoproxy" facility

- B.9. Using TargetSources

- B.10. Defining new Advice types

- B.11. Further resources

- C. Migrating to Spring Framework 3.1

- D. Migrating to Spring Framework 3.2

- D.1. Newly optional dependencies

- D.2. EHCache support moved to spring-context-support

- D.3. Inlining of spring-asm jar

- D.4. Explicit CGLIB dependency no longer required

- D.5. For OSGi users

- D.6. MVC Java Config and MVC Namespace

- D.7. Decoding of URI Variable Values

- D.8. HTTP PATCH method

- D.9. Tiles 3

- D.10. Spring MVC Test standalone project

- D.11. Spring Test Dependencies

- D.12. Public API changes

- E. XML Schema-based configuration

- E.1. Introduction

- E.2. XML Schema-based configuration

- F. Extensible XML authoring

- G. spring.tld

- H. spring-form.tld

- H.1. Introduction

- H.2. The checkbox tag

- H.3. The checkboxes tag

- H.4. The errors tag

- H.5. The form tag

- H.6. The hidden tag

- H.7. The input tag

- H.8. The label tag

- H.9. The option tag

- H.10. The options tag

- H.11. The password tag

- H.12. The radiobutton tag

- H.13. The radiobuttons tag

- H.14. The select tag

- H.15. The textarea tag

The Spring Framework is a lightweight solution and a potential one-stop-shop for building your enterprise-ready applications. However, Spring is modular, allowing you to use only those parts that you need, without having to bring in the rest. You can use the IoC container, with Struts on top, but you can also use only the Hibernate integration code or the JDBC abstraction layer. The Spring Framework supports declarative transaction management, remote access to your logic through RMI or web services, and various options for persisting your data. It offers a full-featured MVC framework, and enables you to integrate AOP transparently into your software.

Spring is designed to be non-intrusive, meaning that your domain logic code generally has no dependencies on the framework itself. In your integration layer (such as the data access layer), some dependencies on the data access technology and the Spring libraries will exist. However, it should be easy to isolate these dependencies from the rest of your code base.

This document is a reference guide to Spring Framework features. If you have any requests, comments, or questions on this document, please post them on the user mailing list or on the support forums at http://forum.springsource.org/.

Spring Framework is a Java platform that provides comprehensive infrastructure support for developing Java applications. Spring handles the infrastructure so you can focus on your application.

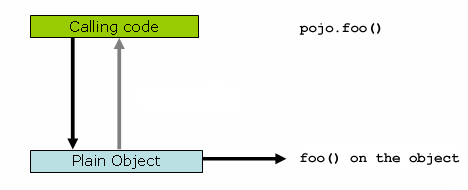

Spring enables you to build applications from “plain old Java objects” (POJOs) and to apply enterprise services non-invasively to POJOs. This capability applies to the Java SE programming model and to full and partial Java EE.

Examples of how you, as an application developer, can take advantage of the Spring platform:

- Make a Java method execute in a database transaction without having to deal with transaction APIs.

- Make a local Java method a remote procedure without having to deal with remote APIs.

- Make a local Java method a management operation without having to deal with JMX APIs.

- Make a local Java method a message handler without having to deal with JMS APIs.

Java applications -- a loose term that runs the gamut from constrained applets to n-tier server-side enterprise applications -- typically consist of objects that collaborate to form the application proper. Thus the objects in an application have dependencies on each other.

Although the Java platform provides a wealth of application development functionality, it lacks the means to organize the basic building blocks into a coherent whole, leaving that task to architects and developers. True, you can use design patterns such as Factory, Abstract Factory, Builder, Decorator, and Service Locator to compose the various classes and object instances that make up an application. However, these patterns are simply that: best practices given a name, with a description of what the pattern does, where to apply it, the problems it addresses, and so forth. Patterns are formalized best practices that you must implement yourself in your application.

The Spring Framework Inversion of Control (IoC) component addresses this concern by providing a formalized means of composing disparate components into a fully working application ready for use. The Spring Framework codifies formalized design patterns as first-class objects that you can integrate into your own application(s). Numerous organizations and institutions use the Spring Framework in this manner to engineer robust, maintainable applications.
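To make the idea of composing components concrete, the following is a minimal plain-Java sketch of constructor-based dependency injection. It does not use any Spring API: the class and interface names are hypothetical, and the wiring done by hand in `main` stands in for what an IoC container would do from configuration metadata.

```java
import java.util.ArrayList;
import java.util.List;

public class ManualWiringSketch {

    /** A collaborator abstraction (hypothetical name, for illustration only). */
    public interface MessageSender {
        void send(String message);
    }

    /** One possible implementation; it simply records what it is asked to send. */
    public static class RecordingSender implements MessageSender {
        public final List<String> sent = new ArrayList<String>();

        public void send(String message) {
            sent.add(message);
        }
    }

    /** Depends only on the interface and never constructs its collaborator itself. */
    public static class GreetingService {
        private final MessageSender sender;

        public GreetingService(MessageSender sender) {
            this.sender = sender;
        }

        public void greet(String name) {
            sender.send("Hello, " + name);
        }
    }

    public static void main(String[] args) {
        // The assembler role: a container would perform this wiring for you.
        RecordingSender sender = new RecordingSender();
        GreetingService service = new GreetingService(sender);
        service.greet("Spring");
        System.out.println(sender.sent);
    }
}
```

Because `GreetingService` receives its collaborator from outside, a different `MessageSender` can be supplied without changing the service, which is the kind of decoupling the paragraph above describes.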

The Spring Framework consists of features organized into about 20 modules. These modules are grouped into Core Container, Data Access/Integration, Web, AOP (Aspect Oriented Programming), Instrumentation, and Test, as shown in the following diagram.

Overview of the Spring Framework

The Core Container consists of the Core, Beans, Context, and Expression Language modules.

The Core and Beans modules provide the fundamental parts of the framework, including the IoC and Dependency Injection features. The BeanFactory is a sophisticated implementation of the factory pattern. It removes the need for programmatic singletons and allows you to decouple the configuration and specification of dependencies from your actual program logic.

The Context module builds on the solid base provided by the Core and Beans modules: it is a means to access objects in a framework-style manner that is similar to a JNDI registry. The Context module inherits its features from the Beans module and adds support for internationalization (using, for example, resource bundles), event propagation, resource loading, and the transparent creation of contexts by, for example, a servlet container. The Context module also supports Java EE features such as EJB, JMX, and basic remoting. The ApplicationContext interface is the focal point of the Context module.

The Expression Language module provides a powerful expression language for querying and manipulating an object graph at runtime. It is an extension of the unified expression language (unified EL) as specified in the JSP 2.1 specification. The language supports setting and getting property values, property assignment, method invocation, accessing the content of arrays, collections and indexers, logical and arithmetic operators, named variables, and retrieval of objects by name from Spring's IoC container. It also supports list projection and selection as well as common list aggregations.

The Data Access/Integration layer consists of the JDBC, ORM, OXM, JMS and Transaction modules.

The JDBC module provides a JDBC-abstraction layer that removes the need to do tedious JDBC coding and parsing of database-vendor specific error codes.

The ORM module provides integration layers for popular object-relational mapping APIs, including JPA, JDO, Hibernate, and iBatis. Using the ORM package you can use all of these O/R-mapping frameworks in combination with all of the other features Spring offers, such as the simple declarative transaction management feature mentioned previously.

The OXM module provides an abstraction layer that supports Object/XML mapping implementations for JAXB, Castor, XMLBeans, JiBX and XStream.

The Java Messaging Service (JMS) module contains features for producing and consuming messages.

The Transaction module supports programmatic and declarative transaction management for classes that implement special interfaces and for all your POJOs (plain old Java objects).

The Web layer consists of the Web, Web-Servlet, Web-Struts, and Web-Portlet modules.

Spring's Web module provides basic web-oriented integration features such as multipart file-upload functionality and the initialization of the IoC container using servlet listeners and a web-oriented application context. It also contains the web-related parts of Spring's remoting support.

The Web-Servlet module contains Spring's model-view-controller (MVC) implementation for web applications. Spring's MVC framework provides a clean separation between domain model code and web forms, and integrates with all the other features of the Spring Framework.

The Web-Struts module contains the support classes for integrating a classic Struts web tier within a Spring application. Note that this support is now deprecated as of Spring 3.0. Consider migrating your application to Struts 2.0 and its Spring integration or to a Spring MVC solution.

The Web-Portlet module provides the MVC implementation to be used in a portlet environment and mirrors the functionality of the Web-Servlet module.

Spring's AOP module provides an AOP Alliance-compliant aspect-oriented programming implementation allowing you to define, for example, method-interceptors and pointcuts to cleanly decouple code that implements functionality that should be separated. Using source-level metadata functionality, you can also incorporate behavioral information into your code, in a manner similar to that of .NET attributes.
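The interception idea can be sketched with the JDK's own dynamic proxies, one of the proxying mechanisms Spring AOP can build on. This is an illustration under stated assumptions, not Spring's AOP API: `AccountService`, `SimpleAccountService`, and `withTransactionLog` are hypothetical names, and the "begin", "commit", and "rollback" entries are plain log strings rather than real transaction calls.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

public class InterceptorSketch {

    /** Business interface (hypothetical). */
    public interface AccountService {
        void transfer(String from, String to, long amount);
    }

    /** Business logic with no knowledge of the surrounding behavior. */
    public static class SimpleAccountService implements AccountService {
        private final List<String> log;

        public SimpleAccountService(List<String> log) {
            this.log = log;
        }

        public void transfer(String from, String to, long amount) {
            log.add("transfer " + amount + " from " + from + " to " + to);
        }
    }

    /** Wraps the target so that every call is bracketed by begin/commit log entries. */
    public static AccountService withTransactionLog(final AccountService target,
                                                    final List<String> log) {
        InvocationHandler handler = new InvocationHandler() {
            public Object invoke(Object proxy, Method method, Object[] args) throws Throwable {
                log.add("begin");
                try {
                    Object result = method.invoke(target, args);
                    log.add("commit");
                    return result;
                } catch (InvocationTargetException e) {
                    // The target threw: record a rollback and rethrow the original cause.
                    log.add("rollback");
                    throw e.getCause();
                }
            }
        };
        return (AccountService) Proxy.newProxyInstance(
                AccountService.class.getClassLoader(),
                new Class<?>[] { AccountService.class },
                handler);
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<String>();
        AccountService service = withTransactionLog(new SimpleAccountService(log), log);
        service.transfer("A", "B", 10);
        System.out.println(log); // [begin, transfer 10 from A to B, commit]
    }
}
```

The cross-cutting behavior lives entirely in the handler, so `SimpleAccountService` stays free of it. That separation is what method interceptors and pointcuts give you in a more declarative form.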

The separate Aspects module provides integration with AspectJ.

The Instrumentation module provides class instrumentation support and classloader implementations to be used in certain application servers.

The building blocks described previously make Spring a logical choice in many scenarios, from applets to full-fledged enterprise applications that use Spring's transaction management functionality and web framework integration.

Typical full-fledged Spring web application

Spring's declarative transaction management features make the web application fully transactional, just as it would be if you used EJB container-managed transactions. All your custom business logic can be implemented with simple POJOs and managed by Spring's IoC container. Additional services include support for sending email and validation that is independent of the web layer, which lets you choose where to execute validation rules. Spring's ORM support is integrated with JPA, Hibernate, JDO and iBatis; for example, when using Hibernate, you can continue to use your existing mapping files and standard Hibernate SessionFactory configuration. Form controllers seamlessly integrate the web-layer with the domain model, removing the need for ActionForms or other classes that transform HTTP parameters to values for your domain model.

Spring middle-tier using a third-party web framework

Sometimes circumstances do not allow you to completely switch to a different framework. The Spring Framework does not force you to use everything within it; it is not an all-or-nothing solution. Existing front-ends built with WebWork, Struts, Tapestry, or other UI frameworks can be integrated with a Spring-based middle-tier, which allows you to use Spring transaction features. You simply need to wire up your business logic using an ApplicationContext and use a WebApplicationContext to integrate your web layer.

Remoting usage scenario

When you need to access existing code through web services, you can use Spring's Hessian-, Burlap-, Rmi- or JaxRpcProxyFactory classes. Enabling remote access to existing applications is not difficult.

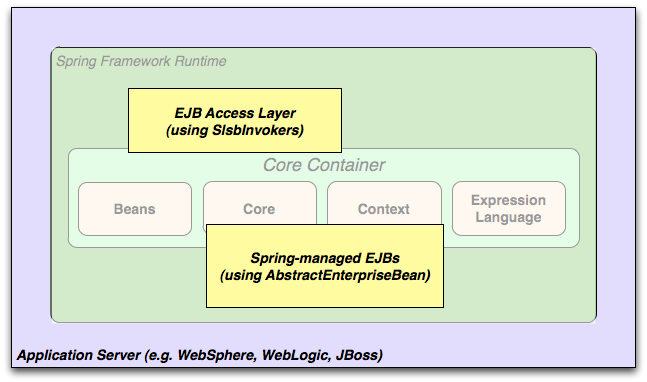

EJBs - Wrapping existing POJOs

The Spring Framework also provides an access and abstraction layer for Enterprise JavaBeans, enabling you to reuse your existing POJOs and wrap them in stateless session beans for use in scalable, fail-safe web applications that might need declarative security.

Dependency management and dependency injection are different things. To get those nice features of Spring into your application (like dependency injection) you need to assemble all the libraries needed (jar files) and get them onto your classpath at runtime, and possibly at compile time. These dependencies are not virtual components that are injected, but physical resources in a file system (typically). The process of dependency management involves locating those resources, storing them and adding them to classpaths. Dependencies can be direct (e.g. my application depends on Spring at runtime), or indirect (e.g. my application depends on commons-dbcp which depends on commons-pool). The indirect dependencies are also known as "transitive" and it is those dependencies that are hardest to identify and manage.
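The difference between direct and transitive dependencies can be sketched as a walk over a dependency graph. The example below is an illustration only: the graph is hand-written (the `my-app` artifact is hypothetical and each library's further dependencies are omitted), whereas a tool such as Maven or Ivy reads this information from published metadata.

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Collections;
import java.util.Deque;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

public class TransitiveDependencySketch {

    /**
     * Returns every artifact reachable from the root, direct or transitive,
     * excluding the root itself. Artifacts absent from the map have no known dependencies.
     */
    public static Set<String> resolve(String root, Map<String, List<String>> direct) {
        Set<String> seen = new LinkedHashSet<String>();
        Deque<String> pending = new ArrayDeque<String>();
        pending.push(root);
        while (!pending.isEmpty()) {
            String current = pending.pop();
            List<String> deps = direct.get(current);
            if (deps == null) {
                continue;
            }
            for (String dep : deps) {
                // seen.add returns false for artifacts already visited, which also guards against cycles.
                if (!dep.equals(root) && seen.add(dep)) {
                    pending.push(dep);
                }
            }
        }
        return seen;
    }

    public static void main(String[] args) {
        Map<String, List<String>> direct = new HashMap<String, List<String>>();
        // Direct dependencies of the hypothetical application.
        direct.put("my-app", Arrays.asList("spring-context", "commons-dbcp"));
        // commons-pool is only a transitive dependency of my-app.
        direct.put("commons-dbcp", Collections.singletonList("commons-pool"));
        System.out.println(resolve("my-app", direct));
    }
}
```

Here `commons-pool` never appears in the application's own dependency list, yet it must still end up on the classpath, which is why transitive dependencies are the ones most easily overlooked.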

If you are going to use Spring you need to get a copy of the jar libraries that comprise the pieces of Spring that you need. To make this easier Spring is packaged as a set of modules that separate the dependencies as much as possible, so for example if you don't want to write a web application you don't need the spring-web modules. To refer to Spring library modules in this guide we use a shorthand naming convention spring-* or spring-*.jar, where "*" represents the short name for the module (e.g. spring-core, spring-webmvc, spring-jms, etc.). The actual jar file name that you use may be in this form (see below) or it may not, and normally it also has a version number in the file name (e.g. spring-core-3.0.0.RELEASE.jar).

In general, Spring publishes its artifacts to four different places:

- On the community download site http://www.springsource.org/download/community. Here you find all the Spring jars bundled together into a zip file for easy download. The names of the jars here since version 3.0 are in the form org.springframework.*-<version>.jar.

- Maven Central, which is the default repository that Maven queries, and does not require any special configuration to use. Many of the common libraries that Spring depends on also are available from Maven Central and a large section of the Spring community uses Maven for dependency management, so this is convenient for them. The names of the jars here are in the form spring-*-<version>.jar and the Maven groupId is org.springframework.

- The Enterprise Bundle Repository (EBR), which is run by SpringSource and also hosts all the libraries that integrate with Spring. Both Maven and Ivy repositories are available here for all Spring jars and their dependencies, plus a large number of other common libraries that people use in applications with Spring. Both full releases and also milestones and development snapshots are deployed here. The names of the jar files are in the same form as the community download (org.springframework.*-<version>.jar), and the dependencies are also in this "long" form, with external libraries (not from SpringSource) having the prefix com.springsource. See the FAQ for more information.

- In a public Maven repository hosted on Amazon S3 for development snapshots and milestone releases (a copy of the final releases is also held here). The jar file names are in the same form as Maven Central, so this is a useful place to get development versions of Spring to use with other libraries deployed in Maven Central.

So the first thing you need to decide is how to manage your dependencies: most people use an automated system like Maven or Ivy, but you can also do it manually by downloading all the jars yourself. When obtaining Spring with Maven or Ivy you then have to decide which repository to get it from. In general, if you care about OSGi, use the EBR, since it houses OSGi compatible artifacts for all of Spring's dependencies, such as Hibernate and FreeMarker. If OSGi does not matter to you, either place works, though there are some pros and cons between them. In general, pick one place or the other for your project; do not mix them. This is particularly important since EBR artifacts necessarily use a different naming convention than Maven Central artifacts.

Table 1.1. Comparison of Maven Central and SpringSource EBR Repositories

| Feature | Maven Central | EBR |

|---|---|---|

| OSGi Compatible | Not explicit | Yes |

| Number of Artifacts | Tens of thousands; all kinds | Hundreds; those that Spring integrates with |

| Consistent Naming Conventions | No | Yes |

| Naming Convention: GroupId | Varies. Newer artifacts often use domain name, e.g. org.slf4j. Older ones often just use the artifact name, e.g. log4j. | Domain name of origin or main package root, e.g. org.springframework |

| Naming Convention: ArtifactId | Varies. Generally the project or module name, using a hyphen "-" separator, e.g. spring-core, log4j. | Bundle Symbolic Name, derived from the main package root, e.g. org.springframework.beans. If the jar had to be patched to ensure OSGi compliance then com.springsource is appended, e.g. com.springsource.org.apache.log4j |

| Naming Convention: Version | Varies. Many new artifacts use m.m.m or m.m.m.X (with m=digit, X=text). Older ones use m.m. Some neither. Ordering is defined but not often relied on, so not strictly reliable. | OSGi version number m.m.m.X, e.g. 3.0.0.RC3. The text qualifier imposes alphabetic ordering on versions with the same numeric values. |

| Publishing | Usually automatic via rsync or source control updates. Project authors can upload individual jars to JIRA. | Manual (JIRA processed by SpringSource) |

| Quality Assurance | By policy. Accuracy is responsibility of authors. | Extensive for OSGi manifest, Maven POM and Ivy metadata. QA performed by Spring team. |

| Hosting | Contegix. Funded by Sonatype with several mirrors. | S3 funded by SpringSource. |

| Search Utilities | Various | http://www.springsource.com/repository |

| Integration with SpringSource Tools | Integration through STS with Maven dependency management | Extensive integration through STS with Maven, Roo, CloudFoundry |
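The version ordering described in the table for EBR artifacts (numeric segments first, then alphabetic ordering of the text qualifier) can be illustrated with a small comparison. This is a minimal sketch, assuming every version has exactly three numeric segments and an optional qualifier; the class and method names are hypothetical, and it is not the OSGi framework's own version class.

```java
public class OsgiVersionOrderSketch {

    /**
     * Compares two versions of the form m.m.m or m.m.m.X.
     * Returns a negative number, zero, or a positive number, like Comparable.compareTo.
     */
    public static int compare(String left, String right) {
        // Limit of 4 keeps any further dots inside the qualifier segment.
        String[] a = left.split("\\.", 4);
        String[] b = right.split("\\.", 4);
        for (int i = 0; i < 3; i++) {
            int x = Integer.parseInt(a[i]);
            int y = Integer.parseInt(b[i]);
            if (x != y) {
                return x < y ? -1 : 1;
            }
        }
        // Same numeric values: the qualifier decides, compared as plain text.
        String qualifierA = a.length > 3 ? a[3] : "";
        String qualifierB = b.length > 3 ? b[3] : "";
        return qualifierA.compareTo(qualifierB);
    }

    public static void main(String[] args) {
        System.out.println(compare("3.0.0.RC3", "3.0.0.RELEASE") < 0); // true
        System.out.println(compare("3.0.1.M1", "3.0.0.RELEASE") > 0);  // true
    }
}
```

With this rule a milestone such as 3.0.0.M4 sorts before 3.0.0.RC3, which in turn sorts before 3.0.0.RELEASE, purely because of the text comparison of the qualifiers.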

Although Spring provides integration and support for a huge range of enterprise and other external tools, it intentionally keeps its mandatory dependencies to an absolute minimum: you shouldn't have to locate and download (even automatically) a large number of jar libraries in order to use Spring for simple use cases. For basic dependency injection there is only one mandatory external dependency, and that is for logging (see below for a more detailed description of logging options).

Next we outline the basic steps needed to configure an application that depends on Spring, first with Maven and then with Ivy. In all cases, if anything is unclear, refer to the documentation of your dependency management system, or look at some sample code - Spring itself uses Ivy to manage dependencies when it is building, and our samples mostly use Maven.

If you are using Maven for dependency management you don't even need to supply the logging dependency explicitly. For example, to create an application context and use dependency injection to configure an application, your Maven dependencies will look like this:

<dependencies> <dependency> <groupId>org.springframework</groupId> <artifactId>spring-context</artifactId> <version>3.0.0.RELEASE</version> <scope>runtime</scope> </dependency> </dependencies>

That's it. Note the scope can be declared as runtime if you don't need to compile against Spring APIs, which is typically the case for basic dependency injection use cases.

We used the Maven Central naming conventions in the example above, so that works with Maven Central or the SpringSource S3 Maven repository. To use the S3 Maven repository (e.g. for milestones or developer snapshots), you need to specify the repository location in your Maven configuration. For full releases:

<repositories> <repository> <id>com.springsource.repository.maven.release</id> <url>http://repo.springsource.org/release/</url> <snapshots><enabled>false</enabled></snapshots> </repository> </repositories>

For milestones:

<repositories> <repository> <id>com.springsource.repository.maven.milestone</id> <url>http://repo.springsource.org/milestone/</url> <snapshots><enabled>false</enabled></snapshots> </repository> </repositories>

And for snapshots:

<repositories> <repository> <id>com.springsource.repository.maven.snapshot</id> <url>http://repo.springsource.org/snapshot/</url> <snapshots><enabled>true</enabled></snapshots> </repository> </repositories>

To use the SpringSource EBR you would need to use a different naming convention for the dependencies. The names are usually easy to guess, e.g. in this case it is:

<dependencies> <dependency> <groupId>org.springframework</groupId> <artifactId>org.springframework.context</artifactId> <version>3.0.0.RELEASE</version> <scope>runtime</scope> </dependency> </dependencies>

You also need to declare the location of the repository explicitly (only the URL is important):

<repositories> <repository> <id>com.springsource.repository.bundles.release</id> <url>http://repository.springsource.com/maven/bundles/release/</url> </repository> </repositories>

If you are managing your dependencies by hand, the URL in the repository declaration above is not browsable, but there is a user interface at http://www.springsource.com/repository that can be used to search for and download dependencies. It also has handy snippets of Maven and Ivy configuration that you can copy and paste if you are using those tools.

If you prefer to use Ivy to manage dependencies then there are similar names and configuration options.

To configure Ivy to point to the SpringSource EBR, add the following resolvers to your ivysettings.xml:

<resolvers> <url name="com.springsource.repository.bundles.release"> <ivy pattern="http://repository.springsource.com/ivy/bundles/release/ [organisation]/[module]/[revision]/[artifact]-[revision].[ext]" /> <artifact pattern="http://repository.springsource.com/ivy/bundles/release/ [organisation]/[module]/[revision]/[artifact]-[revision].[ext]" /> </url> <url name="com.springsource.repository.bundles.external"> <ivy pattern="http://repository.springsource.com/ivy/bundles/external/ [organisation]/[module]/[revision]/[artifact]-[revision].[ext]" /> <artifact pattern="http://repository.springsource.com/ivy/bundles/external/ [organisation]/[module]/[revision]/[artifact]-[revision].[ext]" /> </url> </resolvers>

The XML above is not valid because the lines are too long - if you copy-paste then remove the extra line endings in the middle of the url patterns.

Once Ivy is configured to look in the EBR, adding a dependency is easy. Simply pull up the details page for the bundle in question in the repository browser and you'll find an Ivy snippet ready for you to include in your dependencies section. For example (in ivy.xml):

<dependency org="org.springframework" name="org.springframework.core" rev="3.0.0.RELEASE" conf="compile->runtime"/>

Logging is a very important dependency for Spring because a) it is the only mandatory external dependency, b) everyone likes to see some output from the tools they are using, and c) Spring integrates with lots of other tools all of which have also made a choice of logging dependency. One of the goals of an application developer is often to have unified logging configured in a central place for the whole application, including all external components. This is more difficult than it might have been since there are so many choices of logging framework.

The mandatory logging dependency in Spring is the Jakarta Commons Logging API (JCL). We compile against JCL and we also make JCL Log objects visible for classes that extend the Spring Framework. It's important to users that all versions of Spring use the same logging library: migration is easy because backwards compatibility is preserved even with applications that extend Spring. The way we do this is to make one of the modules in Spring depend explicitly on commons-logging (the canonical implementation of JCL), and then make all the other modules depend on that at compile time. If you are using Maven for example, and wondering where you picked up the dependency on commons-logging, then it is from Spring and specifically from the central module called spring-core.

The nice thing about commons-logging is that you don't need anything else to make your application work. It has a runtime discovery algorithm that looks for other logging frameworks in well known places on the classpath and uses one that it thinks is appropriate (or you can tell it which one if you need to). If nothing else is available you get pretty nice looking logs just from the JDK (java.util.logging or JUL for short). You should find that your Spring application works and logs happily to the console out of the box in most situations, and that's important.
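The idea of runtime discovery with a JDK fallback can be sketched in a few lines. This is not the actual commons-logging algorithm, only an illustration of the principle: `LoggingDiscoverySketch` and `discover` are hypothetical names, and the candidate class names are whatever the caller chooses to probe for.

```java
import java.util.Arrays;
import java.util.List;
import java.util.logging.Logger;

/** Toy illustration of classpath-based discovery; not the actual commons-logging algorithm. */
class LoggingDiscoverySketch {

    static final String JUL_FALLBACK = "java.util.logging";

    /** Returns the first candidate class name that can be loaded, or the JUL fallback. */
    static String discover(List<String> candidateClassNames) {
        for (String className : candidateClassNames) {
            try {
                Class.forName(className);
                return className;
            } catch (ClassNotFoundException | LinkageError notAvailable) {
                // Not on the classpath (or not loadable): try the next candidate.
            }
        }
        return JUL_FALLBACK;
    }

    public static void main(String[] args) {
        // org.apache.log4j.Logger is only found if Log4j 1.2 happens to be on the classpath.
        String chosen = discover(Arrays.asList("org.apache.log4j.Logger"));
        Logger.getLogger(LoggingDiscoverySketch.class.getName()).info("Logging backend: " + chosen);
    }
}
```

Because the decision is taken at runtime from whatever the class loader can see, the outcome depends on the deployment environment, which is exactly what makes this style of discovery convenient and, in some containers, fragile.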

Unfortunately, the runtime discovery algorithm in commons-logging, while convenient for the end-user, is problematic. If we could turn back the clock and start Spring now as a new project it would use a different logging dependency. The first choice would probably be the Simple Logging Facade for Java (SLF4J), which is also used by a lot of other tools that people use with Spring inside their applications.

Switching off commons-logging is easy: just make sure it isn't on the classpath at runtime. In Maven terms you exclude the dependency, and because of the way that the Spring dependencies are declared, you only have to do that once.

<dependencies> <dependency> <groupId>org.springframework</groupId> <artifactId>spring-context</artifactId> <version>3.0.0.RELEASE</version> <scope>runtime</scope> <exclusions> <exclusion> <groupId>commons-logging</groupId> <artifactId>commons-logging</artifactId> </exclusion> </exclusions> </dependency> </dependencies>

Now this application is probably broken because there is no implementation of the JCL API on the classpath, so to fix it a new one has to be provided. In the next section we show you how to provide an alternative implementation of JCL using SLF4J as an example.

SLF4J is a cleaner dependency and more efficient at runtime than commons-logging because it uses compile-time bindings instead of runtime discovery of the other logging frameworks it integrates. This also means that you have to be more explicit about what you want to happen at runtime, and declare it or configure it accordingly. SLF4J provides bindings to many common logging frameworks, so you can usually choose one that you already use, and bind to that for configuration and management.

SLF4J provides bindings to many common logging frameworks, including JCL, and it also does the reverse: bridges between other logging frameworks and itself. So to use SLF4J with Spring you need to replace the commons-logging dependency with the SLF4J-JCL bridge. Once you have done that then logging calls from within Spring will be translated into logging calls to the SLF4J API, so if other libraries in your application use that API, then you have a single place to configure and manage logging.

A common choice might be to bridge Spring to SLF4J, and then provide explicit binding from SLF4J to Log4J. You need to supply four dependencies (and exclude the existing commons-logging): the bridge, the SLF4J API, the binding to Log4J, and the Log4J implementation itself. In Maven you would do that like this:

<dependencies> <dependency> <groupId>org.springframework</groupId> <artifactId>spring-context</artifactId> <version>3.0.0.RELEASE</version> <scope>runtime</scope> <exclusions> <exclusion> <groupId>commons-logging</groupId> <artifactId>commons-logging</artifactId> </exclusion> </exclusions> </dependency> <dependency> <groupId>org.slf4j</groupId> <artifactId>jcl-over-slf4j</artifactId> <version>1.5.8</version> <scope>runtime</scope> </dependency> <dependency> <groupId>org.slf4j</groupId> <artifactId>slf4j-api</artifactId> <version>1.5.8</version> <scope>runtime</scope> </dependency> <dependency> <groupId>org.slf4j</groupId> <artifactId>slf4j-log4j12</artifactId> <version>1.5.8</version> <scope>runtime</scope> </dependency> <dependency> <groupId>log4j</groupId> <artifactId>log4j</artifactId> <version>1.2.14</version> <scope>runtime</scope> </dependency> </dependencies>

That might seem like a lot of dependencies just to get some logging. Well, it is, but it is optional, and it should behave better than the vanilla commons-logging with respect to classloader issues, notably if you are in a strict container like an OSGi platform. Allegedly there is also a performance benefit because the bindings are at compile-time not runtime.

A more common choice amongst SLF4J users, which uses fewer steps and generates fewer dependencies, is to bind directly to Logback. This removes the extra binding step because Logback implements SLF4J directly, so you only need to depend on two libraries not four (jcl-over-slf4j and logback). If you do that you might also need to exclude the slf4j-api dependency from other external dependencies (not Spring), because you only want one version of that API on the classpath.

Many people use Log4j as a logging framework for configuration and management purposes. It's efficient and well-established, and in fact it's what we use at runtime when we build and test Spring. Spring also provides some utilities for configuring and initializing Log4j, so it has an optional compile-time dependency on Log4j in some modules.

To make Log4j work with the default JCL dependency (commons-logging) all you need to do is put Log4j on the classpath, and provide it with a configuration file (log4j.properties or log4j.xml in the root of the classpath). So for Maven users this is your dependency declaration:

<dependencies> <dependency> <groupId>org.springframework</groupId> <artifactId>spring-context</artifactId> <version>3.0.0.RELEASE</version> <scope>runtime</scope> </dependency> <dependency> <groupId>log4j</groupId> <artifactId>log4j</artifactId> <version>1.2.14</version> <scope>runtime</scope> </dependency> </dependencies>

And here's a sample log4j.properties for logging to the console:

log4j.rootCategory=INFO, stdout

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=%d{ABSOLUTE} %5p %t %c{2}:%L - %m%n

log4j.category.org.springframework.beans.factory=DEBUG

Many people run their Spring applications in a container that itself provides an implementation of JCL. IBM WebSphere Application Server (WAS) is the archetype. This often causes problems, and unfortunately there is no silver bullet solution; simply excluding commons-logging from your application is not enough in most situations.

To be clear about this: the problems reported are usually not with JCL per se, or even with commons-logging: rather they are to do with binding commons-logging to another framework (often Log4J). This can fail because commons-logging changed the way they do the runtime discovery in between the older versions (1.0) found in some containers and the modern versions that most people use now (1.1). Spring does not use any unusual parts of the JCL API, so nothing breaks there, but as soon as Spring or your application tries to do any logging you can find that the bindings to Log4J are not working.

In such cases with WAS the easiest thing to do is to invert the class loader hierarchy (IBM calls it "parent last") so that the application controls the JCL dependency, not the container. That option isn't always open, but there are plenty of other suggestions in the public domain for alternative approaches, and your mileage may vary depending on the exact version and feature set of the container.

If you have been using the Spring Framework for some time, you will be aware that Spring has undergone two major revisions: Spring 2.0, released in October 2006, and Spring 2.5, released in November 2007. It is now time for a third overhaul resulting in Spring Framework 3.0.

The entire framework code has been revised to take advantage of Java 5 features like generics, varargs and other language improvements. We have done our best to still keep the code backwards compatible. We now have consistent use of generic Collections and Maps, consistent use of generic FactoryBeans, and also consistent resolution of bridge methods in the Spring AOP API. Generic ApplicationListeners automatically receive specific event types only. All callback interfaces such as TransactionCallback and HibernateCallback declare a generic result value now. Overall, the Spring core codebase is now freshly revised and optimized for Java 5.

Spring's TaskExecutor abstraction has been updated for close integration with Java 5's java.util.concurrent facilities. We provide first-class support for Callables and Futures now, as well as ExecutorService adapters, ThreadFactory integration, etc. This has been aligned with JSR-236 (Concurrency Utilities for Java EE 6) as far as possible. Furthermore, we provide support for asynchronous method invocations through the use of the new @Async annotation (or EJB 3.1's @Asynchronous annotation).
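The java.util.concurrent types mentioned here, Callable and Future, work as in the following plain JDK sketch. It deliberately does not use Spring's TaskExecutor or AsyncTaskExecutor; `CallableFutureSketch` and `sumInBackground` are hypothetical names chosen for illustration.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

/** Plain java.util.concurrent sketch; Spring's executor abstractions are not used here. */
class CallableFutureSketch {

    /** Submits a Callable to a single-thread executor and blocks until its result is available. */
    static int sumInBackground(int upTo) {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            // A Callable, unlike a Runnable, returns a value to the caller.
            Callable<Integer> task = () -> {
                int sum = 0;
                for (int i = 1; i <= upTo; i++) {
                    sum += i;
                }
                return sum;
            };
            Future<Integer> future = executor.submit(task);
            return future.get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting for the result", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("Task failed", e.getCause());
        } finally {
            executor.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(sumInBackground(100));
    }
}
```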

The Spring reference documentation has also been substantially updated to reflect all of the changes and new features for Spring Framework 3.0. While every effort has been made to ensure that there are no errors in this documentation, some errors may nevertheless have crept in. If you do spot any typos or even more serious errors, and you can spare a few cycles during lunch, please do bring the error to the attention of the Spring team by raising an issue.

There are many excellent articles and tutorials that show how to get started with Spring Framework 3 features. Read them at the Spring Documentation page.

The samples have been improved and updated to take advantage of the new features in Spring Framework 3. Additionally, the samples have been moved out of the source tree into a dedicated SVN repository available at:

https://anonsvn.springframework.org/svn/spring-samples/

As such, the samples are no longer distributed alongside Spring Framework 3 and need to be downloaded separately from the repository mentioned above. However, this documentation will continue to refer to some samples (in particular Petclinic) to illustrate various features.

Note: For more information on Subversion (or in short SVN), see the project homepage.

The framework modules have been revised and are now managed separately with one source-tree per module jar:

- org.springframework.aop

- org.springframework.beans

- org.springframework.context

- org.springframework.context.support

- org.springframework.expression

- org.springframework.instrument

- org.springframework.jdbc

- org.springframework.jms

- org.springframework.orm

- org.springframework.oxm

- org.springframework.test

- org.springframework.transaction

- org.springframework.web

- org.springframework.web.portlet

- org.springframework.web.servlet

- org.springframework.web.struts

We are now using a new Spring build system as known from Spring Web Flow 2.0. This gives us:

- Ivy-based "Spring Build" system

- consistent deployment procedure

- consistent dependency management

- consistent generation of OSGi manifests

This is a list of new features for Spring Framework 3.0. We will cover these features in more detail later in this section.

- Spring Expression Language

- IoC enhancements/Java based bean metadata

- General-purpose type conversion system and field formatting system

- Object to XML mapping functionality (OXM) moved from Spring Web Services project

- Comprehensive REST support

- @MVC additions

- Declarative model validation

- Early support for Java EE 6

- Embedded database support

BeanFactory interface returns typed bean instances as far as possible:

- T getBean(Class<T> requiredType)

- T getBean(String name, Class<T> requiredType)

- Map<String, T> getBeansOfType(Class<T> type)
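To show why typed signatures of this shape remove the need for casts in calling code, here is a toy registry that mirrors the three method signatures. It is a sketch only: `TypedLookupSketch` is a hypothetical class, not Spring's BeanFactory, and its lookup rules are simplified.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Toy registry mirroring the shape of the typed lookup methods; not Spring's BeanFactory. */
class TypedLookupSketch {

    private final Map<String, Object> beans = new LinkedHashMap<>();

    void register(String name, Object bean) {
        beans.put(name, bean);
    }

    /** Looks up by name and checks the type, so callers need no cast. */
    <T> T getBean(String name, Class<T> requiredType) {
        Object bean = beans.get(name);
        if (bean == null) {
            throw new IllegalArgumentException("No bean named '" + name + "'");
        }
        return requiredType.cast(bean);
    }

    /** Returns the single bean of the given type; fails when none or several match. */
    <T> T getBean(Class<T> requiredType) {
        Map<String, T> matches = getBeansOfType(requiredType);
        if (matches.size() != 1) {
            throw new IllegalStateException(
                    "Expected one " + requiredType.getName() + " but found " + matches.size());
        }
        return matches.values().iterator().next();
    }

    /** Collects every registered bean that is an instance of the given type, keyed by name. */
    <T> Map<String, T> getBeansOfType(Class<T> type) {
        Map<String, T> matches = new LinkedHashMap<>();
        for (Map.Entry<String, Object> entry : beans.entrySet()) {
            if (type.isInstance(entry.getValue())) {
                matches.put(entry.getKey(), type.cast(entry.getValue()));
            }
        }
        return matches;
    }

    public static void main(String[] args) {
        TypedLookupSketch registry = new TypedLookupSketch();
        registry.register("greeting", "hello");
        registry.register("answer", Integer.valueOf(42));
        String greeting = registry.getBean(String.class); // no cast needed
        Integer answer = registry.getBean("answer", Integer.class);
        System.out.println(greeting + " " + answer);
    }
}
```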

Spring's TaskExecutor interface now extends java.util.concurrent.Executor:

- extended AsyncTaskExecutor supports standard Callables with Futures

New Java 5 based converter API and SPI:

- stateless ConversionService and Converters

- superseding standard JDK PropertyEditors
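The idea of stateless converters looked up by source and target type can be sketched as follows. The names `ConversionSketch` and `SimpleConverter` are hypothetical and the registry is deliberately naive (exact type match only); it is not the Spring converter API.

```java
import java.util.HashMap;
import java.util.Map;

/** Toy conversion registry; the interface and class names are illustrative, not Spring's API. */
class ConversionSketch {

    /** A stateless conversion from S to T. */
    interface SimpleConverter<S, T> {
        T convert(S source);
    }

    private final Map<String, SimpleConverter<?, ?>> converters = new HashMap<>();

    /** Registers a converter for one exact source/target type pair. */
    <S, T> void addConverter(Class<S> sourceType, Class<T> targetType, SimpleConverter<S, T> converter) {
        converters.put(key(sourceType, targetType), converter);
    }

    /** Converts the source using the converter registered for exactly these two types. */
    <S, T> T convert(S source, Class<S> sourceType, Class<T> targetType) {
        @SuppressWarnings("unchecked") // the key ties the converter to exactly these two types
        SimpleConverter<S, T> converter =
                (SimpleConverter<S, T>) converters.get(key(sourceType, targetType));
        if (converter == null) {
            throw new IllegalArgumentException(
                    "No converter from " + sourceType.getName() + " to " + targetType.getName());
        }
        return converter.convert(source);
    }

    private static String key(Class<?> sourceType, Class<?> targetType) {
        return sourceType.getName() + "->" + targetType.getName();
    }

    public static void main(String[] args) {
        ConversionSketch service = new ConversionSketch();
        service.addConverter(String.class, Integer.class, text -> Integer.valueOf(text.trim()));
        System.out.println(service.convert(" 42 ", String.class, Integer.class));
    }
}
```

Because a converter of this kind holds no state between calls, a single instance can be shared freely, in contrast to a PropertyEditor, which stores the value being edited.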

Typed ApplicationListener<E>
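How a listener's generic parameter can be used to deliver only matching events is sketched below with plain reflection. This is a toy dispatcher under simplifying assumptions (it only inspects directly implemented interfaces); `TypedListenerSketch`, `TypedListener` and `OrderPlaced` are hypothetical names, not Spring's event API.

```java
import java.lang.reflect.ParameterizedType;
import java.lang.reflect.Type;
import java.util.ArrayList;
import java.util.List;

/** Toy event dispatcher; the type names are illustrative, not Spring's event API. */
class TypedListenerSketch {

    interface TypedListener<E> {
        void onEvent(E event);
    }

    static class OrderPlaced {
        final String orderId;

        OrderPlaced(String orderId) {
            this.orderId = orderId;
        }
    }

    /** Declares its event type through the generic parameter. */
    static class OrderListener implements TypedListener<OrderPlaced> {
        final List<String> seen = new ArrayList<>();

        @Override
        public void onEvent(OrderPlaced event) {
            seen.add(event.orderId);
        }
    }

    private final List<TypedListener<?>> listeners = new ArrayList<>();

    void addListener(TypedListener<?> listener) {
        listeners.add(listener);
    }

    /** Delivers the event only to listeners whose declared event type accepts it. */
    @SuppressWarnings("unchecked")
    void publish(Object event) {
        for (TypedListener<?> listener : listeners) {
            if (declaredEventType(listener).isInstance(event)) {
                ((TypedListener<Object>) listener).onEvent(event);
            }
        }
    }

    /** Reads E from "implements TypedListener<E>"; falls back to Object when it cannot be determined. */
    static Class<?> declaredEventType(TypedListener<?> listener) {
        for (Type type : listener.getClass().getGenericInterfaces()) {
            if (type instanceof ParameterizedType) {
                ParameterizedType parameterized = (ParameterizedType) type;
                Type argument = parameterized.getActualTypeArguments()[0];
                if (parameterized.getRawType() == TypedListener.class && argument instanceof Class) {
                    return (Class<?>) argument;
                }
            }
        }
        return Object.class;
    }

    public static void main(String[] args) {
        TypedListenerSketch dispatcher = new TypedListenerSketch();
        OrderListener listener = new OrderListener();
        dispatcher.addListener(listener);
        dispatcher.publish("ignored: not an OrderPlaced event");
        dispatcher.publish(new OrderPlaced("A-1"));
        System.out.println(listener.seen);
    }
}
```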

Spring introduces an expression language which is similar to Unified EL in its syntax but offers significantly more features. The expression language can be used when defining XML and Annotation based bean definitions and also serves as the foundation for expression language support across the Spring portfolio. Details of this new functionality can be found in the chapter Spring Expression Language (SpEL).

The Spring Expression Language was created to provide the Spring community a single, well supported expression language that can be used across all the products in the Spring portfolio. Its language features are driven by the requirements of the projects in the Spring portfolio, including tooling requirements for code completion support within the Eclipse based SpringSource Tool Suite.

The following is an example of how the Expression Language can be used to configure some properties of a database setup

<bean class="mycompany.RewardsTestDatabase"> <property name="databaseName" value="#{systemProperties.databaseName}"/> <property name="keyGenerator" value="#{strategyBean.databaseKeyGenerator}"/> </bean>

This functionality is also available if you prefer to configure your components using annotations:

@Repository public class RewardsTestDatabase { @Value("#{systemProperties.databaseName}") public void setDatabaseName(String dbName) { … } @Value("#{strategyBean.databaseKeyGenerator}") public void setKeyGenerator(KeyGenerator kg) { … } }

Some core features from the JavaConfig project have been added to the Spring Framework now. This means that the following annotations are now directly supported:

- @Configuration

- @Bean

- @DependsOn

- @Primary

- @Lazy

- @Import

- @ImportResource

- @Value

Here is an example of a Java class providing basic configuration using the new JavaConfig features:

    package org.example.config;

    @Configuration
    public class AppConfig {

        private @Value("#{jdbcProperties.url}") String jdbcUrl;
        private @Value("#{jdbcProperties.username}") String username;
        private @Value("#{jdbcProperties.password}") String password;

        @Bean
        public FooService fooService() {
            return new FooServiceImpl(fooRepository());
        }

        @Bean
        public FooRepository fooRepository() {
            return new HibernateFooRepository(sessionFactory());
        }

        @Bean
        public SessionFactory sessionFactory() {
            // wire up a session factory
            AnnotationSessionFactoryBean asFactoryBean = new AnnotationSessionFactoryBean();
            asFactoryBean.setDataSource(dataSource());
            // additional config
            return asFactoryBean.getObject();
        }

        @Bean
        public DataSource dataSource() {
            return new DriverManagerDataSource(jdbcUrl, username, password);
        }
    }

To get this to work you need to add the following component scanning entry in your minimal application context XML file.

<context:component-scan base-package="org.example.config"/> <util:properties id="jdbcProperties" location="classpath:org/example/config/jdbc.properties"/>

Or you can bootstrap a @Configuration class directly using AnnotationConfigApplicationContext:

public static void main(String[] args) { ApplicationContext ctx = new AnnotationConfigApplicationContext(AppConfig.class); FooService fooService = ctx.getBean(FooService.class); fooService.doStuff(); }

See Section 5.12.2, “Instantiating the Spring container using AnnotationConfigApplicationContext” for full information on AnnotationConfigApplicationContext.

@Bean annotated methods are also supported inside Spring components. They contribute a factory bean definition to the container. See Defining bean metadata within components for more information.

A general purpose type conversion system has been introduced. The system is currently used by SpEL for type conversion, and may also be used by a Spring Container and DataBinder when binding bean property values.

In addition, a formatter SPI has been introduced for formatting field values. This SPI provides a simpler and more robust alternative to JavaBean PropertyEditors for use in client environments such as Spring MVC.

Object to XML mapping functionality (OXM) from the Spring Web Services project has been moved to the core Spring Framework now. The functionality is found in the org.springframework.oxm package. More information on the use of the OXM module can be found in the Marshalling XML using O/X Mappers chapter.

The most exciting new feature for the Web Tier is the support for building RESTful web services and web applications. There are also some new annotations that can be used in any web application.

Server-side support for building RESTful applications has been provided as an extension of the existing annotation driven MVC web framework. Client-side support is provided by the RestTemplate class in the spirit of other template classes such as JdbcTemplate and JmsTemplate. Both server and client side REST functionality make use of HttpMessageConverters to facilitate the conversion between objects and their representation in HTTP requests and responses.

The MarshallingHttpMessageConverter uses the Object to XML mapping functionality mentioned earlier.

Refer to the sections on MVC and the RestTemplate for more information.

An mvc namespace has been introduced that greatly simplifies Spring MVC configuration.

Additional annotations such as @CookieValue and @RequestHeader have been added. See Mapping cookie values with the @CookieValue annotation and Mapping request header attributes with the @RequestHeader annotation for more information.

Several validation enhancements have been added, including JSR 303 support that uses Hibernate Validator as the default provider.

We provide support for asynchronous method invocations through the use of the new @Async annotation (or EJB 3.1's @Asynchronous annotation).

Early support is also provided for JSR 303, JSF 2.0, JPA 2.0, etc.

Convenient support for embedded Java database engines, including HSQL, H2, and Derby, is now provided.

This is a list of new features for Spring Framework 3.1. A number of features do not have dedicated reference documentation but do have complete Javadoc. In such cases, fully-qualified class names are given. See also Appendix C, Migrating to Spring Framework 3.1

- Cache Abstraction (SpringSource team blog)

- XML profiles (SpringSource Team Blog)

- Introducing @Profile (SpringSource Team Blog)

- See org.springframework.context.annotation.Configuration Javadoc

- See org.springframework.context.annotation.Profile Javadoc

- Environment Abstraction (SpringSource Team Blog)

- See org.springframework.core.env.Environment Javadoc

- Unified Property Management (SpringSource Team Blog)

- See org.springframework.core.env.Environment Javadoc

- See org.springframework.core.env.PropertySource Javadoc

- See org.springframework.context.annotation.PropertySource Javadoc

Code-based equivalents to popular Spring XML namespace elements <context:component-scan/>, <tx:annotation-driven/> and <mvc:annotation-driven> have been developed, most in the form of @Enable annotations. These are designed for use in conjunction with Spring's @Configuration classes, which were introduced in Spring Framework 3.0.

- See org.springframework.context.annotation.Configuration Javadoc

- See org.springframework.context.annotation.ComponentScan Javadoc

- See org.springframework.transaction.annotation.EnableTransactionManagement Javadoc

- See org.springframework.cache.annotation.EnableCaching Javadoc

- See org.springframework.web.servlet.config.annotation.EnableWebMvc Javadoc

- See org.springframework.scheduling.annotation.EnableScheduling Javadoc

- See org.springframework.scheduling.annotation.EnableAsync Javadoc

- See org.springframework.context.annotation.EnableAspectJAutoProxy Javadoc

- See org.springframework.context.annotation.EnableLoadTimeWeaving Javadoc

- See org.springframework.beans.factory.aspectj.EnableSpringConfigured Javadoc

- See Javadoc for classes within the new org.springframework.orm.hibernate4 package

The @ContextConfiguration annotation now supports supplying @Configuration classes for configuring the Spring TestContext. In addition, a new @ActiveProfiles annotation has been introduced to support declarative configuration of active bean definition profiles in ApplicationContext integration tests.

- Spring 3.1 M2: Testing with @Configuration Classes and Profiles (SpringSource Team Blog)

- See the section called “Context configuration with annotated classes” and org.springframework.test.context.ContextConfiguration Javadoc

- See org.springframework.test.context.ActiveProfiles Javadoc

- See org.springframework.test.context.SmartContextLoader Javadoc

- See org.springframework.test.context.support.DelegatingSmartContextLoader Javadoc

- See org.springframework.test.context.support.AnnotationConfigContextLoader Javadoc

Prior to Spring Framework 3.1, in order to inject against a property method it had to conform strictly to JavaBeans property signature rules, namely that any 'setter' method must be void-returning. It is now possible in Spring XML to specify setter methods that return any object type. This is useful when considering designing APIs for method-chaining, where setter methods return a reference to 'this'.
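A chaining setter of the kind described above, and a reflective call that ignores its return type, might look like the following. This is only an illustration of the idea: `FluentSetterSketch`, `MailSettings` and `inject` are hypothetical names, and the code is not how Spring itself performs injection.

```java
import java.lang.reflect.Method;

/** Toy example of a chaining setter invoked reflectively; not Spring's injection code. */
class FluentSetterSketch {

    /** A builder-style class whose setters return 'this' instead of void. */
    public static class MailSettings {
        private String host;
        private int port;

        public MailSettings setHost(String host) {
            this.host = host;
            return this;
        }

        public MailSettings setPort(int port) {
            this.port = port;
            return this;
        }

        public String getHost() {
            return host;
        }

        public int getPort() {
            return port;
        }
    }

    /** Finds a one-argument method named set<Property> and invokes it, whatever its return type. */
    static void inject(Object target, String property, Object value) {
        String setterName = "set" + Character.toUpperCase(property.charAt(0)) + property.substring(1);
        for (Method method : target.getClass().getMethods()) {
            if (method.getName().equals(setterName) && method.getParameterTypes().length == 1) {
                try {
                    method.invoke(target, value);
                    return;
                } catch (ReflectiveOperationException e) {
                    throw new IllegalStateException("Could not invoke " + setterName, e);
                }
            }
        }
        throw new IllegalArgumentException("No setter for property '" + property + "'");
    }

    public static void main(String[] args) {
        // Method chaining in ordinary code ...
        MailSettings chained = new MailSettings().setHost("smtp.example.org").setPort(25);
        // ... and the same setters driven by property name.
        MailSettings injected = new MailSettings();
        inject(injected, "host", chained.getHost());
        inject(injected, "port", chained.getPort());
        System.out.println(injected.getHost() + ":" + injected.getPort());
    }
}
```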

The new WebApplicationInitializer builds atop Servlet 3.0's ServletContainerInitializer support to provide a programmatic alternative to the traditional web.xml.

- See org.springframework.web.WebApplicationInitializer Javadoc

- Diff from Spring's Greenhouse reference application demonstrating migration from web.xml to WebApplicationInitializer

- See org.springframework.web.multipart.support.StandardServletMultipartResolver Javadoc

In standard JPA, persistence units get defined through META-INF/persistence.xml files in specific jar files which will in turn get searched for @Entity classes. In many cases, persistence.xml does not contain more than a unit name and relies on defaults and/or external setup for all other concerns (such as the DataSource to use, etc). For that reason, Spring Framework 3.1 provides an alternative: LocalContainerEntityManagerFactoryBean accepts a 'packagesToScan' property, specifying base packages to scan for @Entity classes. This is analogous to AnnotationSessionFactoryBean's property of the same name for native Hibernate setup, and also to Spring's component-scan feature for regular Spring beans. Effectively, this allows for XML-free JPA setup at the mere expense of specifying a base package for entity scanning: a particularly fine match for Spring applications which rely on component scanning for Spring beans as well, possibly even bootstrapped using a code-based Servlet 3.0 initializer.

Spring Framework 3.1 introduces a new set of support classes for processing requests with annotated controllers:

- RequestMappingHandlerMapping

- RequestMappingHandlerAdapter

- ExceptionHandlerExceptionResolver

These classes are a replacement for the existing:

- DefaultAnnotationHandlerMapping

- AnnotationMethodHandlerAdapter

- AnnotationMethodHandlerExceptionResolver

The new classes were developed in response to many requests to make annotation controller support classes more customizable and open for extension. Whereas previously you could configure a custom annotated controller method argument resolver, with the new support classes you can customize the processing for any supported method argument or return value type.

- See org.springframework.web.method.support.HandlerMethodArgumentResolver Javadoc

- See org.springframework.web.method.support.HandlerMethodReturnValueHandler Javadoc

A second notable difference is the introduction of a HandlerMethod abstraction to represent an @RequestMapping method. This abstraction is used throughout by the new support classes as the handler instance. For example a HandlerInterceptor can cast the handler from Object to HandlerMethod and get access to the target controller method, its annotations, etc.

The new classes are enabled by default by the MVC namespace and by Java-based configuration via @EnableWebMvc. The existing classes will continue to be available but use of the new classes is recommended going forward.

See the section called “New Support Classes for @RequestMapping methods in Spring MVC 3.1” for additional details and a list of features not available with the new support classes.

Improved support for specifying media types consumed by a method through the 'Content-Type' header as well as for producible types specified through the 'Accept' header. See the section called “Consumable Media Types” and the section called “Producible Media Types”.

Flash attributes can now be stored in a FlashMap and saved in the HTTP session to survive a redirect. For an overview of the general support for flash attributes in Spring MVC see Section 17.6, “Using flash attributes”.

In annotated controllers, an @RequestMapping method can add flash attributes by declaring a method argument of type RedirectAttributes. This method argument can now also be used to get precise control over the attributes used in a redirect scenario. See the section called “Specifying redirect and flash attributes” for more details.

URI template variables from the current request are used in more places:

- URI template variables are used in addition to request parameters when binding a request to @ModelAttribute method arguments.

- @PathVariable method argument values are merged into the model before rendering, except in views that generate content in an automated fashion such as JSON serialization or XML marshalling.

- A redirect string can contain placeholders for URI variables (e.g. "redirect:/blog/{year}/{month}"). When expanding the placeholders, URI template variables from the current request are automatically considered.

- An @ModelAttribute method argument can be instantiated from a URI template variable provided there is a registered Converter or PropertyEditor to convert from a String to the target object type.
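Placeholder expansion of a redirect string such as "redirect:/blog/{year}/{month}" can be pictured with the following JDK-only sketch. It is not Spring's implementation: `RedirectTemplateSketch` and `expand` are hypothetical names, and the sketch performs no URI encoding.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Toy placeholder expansion; Spring's own URI template handling is not used here. */
class RedirectTemplateSketch {

    private static final Pattern PLACEHOLDER = Pattern.compile("\\{([^/{}]+)\\}");

    /** Replaces each {name} with its value; unknown placeholders are left untouched. */
    static String expand(String template, Map<String, String> uriVariables) {
        Matcher matcher = PLACEHOLDER.matcher(template);
        StringBuffer result = new StringBuffer();
        while (matcher.find()) {
            String value = uriVariables.get(matcher.group(1));
            String replacement = value != null ? value : matcher.group();
            // quoteReplacement keeps '$' and '\' in values from being treated as group references.
            matcher.appendReplacement(result, Matcher.quoteReplacement(replacement));
        }
        matcher.appendTail(result);
        return result.toString();
    }

    public static void main(String[] args) {
        Map<String, String> fromCurrentRequest = new LinkedHashMap<>();
        fromCurrentRequest.put("year", "2013");
        fromCurrentRequest.put("month", "12");
        System.out.println(expand("redirect:/blog/{year}/{month}", fromCurrentRequest));
    }
}
```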

An @RequestBody method argument can be annotated with @Valid to invoke automatic validation similar to the support for @ModelAttribute method arguments. A resulting MethodArgumentNotValidException is handled in the DefaultHandlerExceptionResolver and results in a 400 response code.

This new annotation provides access to the content of a "multipart/form-data" request part. See Section 17.10.5, “Handling a file upload request from programmatic clients” and Section 17.10, “Spring's multipart (file upload) support”.

A new UriComponents class has been added,

which is an immutable container of URI components providing

access to all contained URI components.

A new UriComponentsBuilder class is also

provided to help create UriComponents instances.

Together the two classes give fine-grained control over all

aspects of preparing a URI including construction, expansion

from URI template variables, and encoding.

In most cases the new classes can be used as a more flexible

alternative to the existing UriTemplate

especially since UriTemplate relies on those

same classes internally.

A ServletUriComponentsBuilder sub-class

provides static factory methods to copy information from

a Servlet request. See Section 17.7, “Building URIs”.

This section covers what's new in Spring Framework 3.2. See also Appendix D, Migrating to Spring Framework 3.2.

The Spring MVC programming model now provides explicit Servlet 3

async support. @RequestMapping methods can

return one of:

- java.util.concurrent.Callable to complete processing in a separate thread managed by a task executor within Spring MVC.

- org.springframework.web.context.request.async.DeferredResult to complete processing at a later time from a thread not known to Spring MVC, for example in response to some external event (JMS, AMQP, etc.).

- org.springframework.web.context.request.async.WebAsyncTask to wrap a Callable and customize the timeout value or the task executor to use.
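The Callable option can be pictured with a JDK-only sketch. Here handleRequest stands in for a controller method that returns a Callable, and process stands in for the framework running it on an executor thread. Both names are hypothetical. Blocking on future.get() only keeps the sketch short; Spring MVC releases the request thread instead of blocking it.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// JDK-only sketch; the class and method names are assumptions for illustration.
class CallableSketch {

    // Stands in for a handler method that returns a Callable instead of a value.
    static Callable<String> handleRequest(final String name) {
        return new Callable<String>() {
            public String call() {
                // Runs later, on a thread owned by the executor.
                return "hello-" + name;
            }
        };
    }

    // Stands in for the framework: submits the Callable to an executor
    // and waits for the produced value.
    static String process(Callable<String> callable) {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            Future<String> future = executor.submit(callable);
            return future.get();
        } catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(ex);
        } catch (ExecutionException ex) {
            throw new IllegalStateException(ex.getCause());
        } finally {
            executor.shutdown();
        }
    }
}
```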

First-class support for testing Spring MVC applications with a

fluent API and without a Servlet container. Server-side tests involve use

of the DispatcherServlet while client-side REST

tests rely on the RestTemplate. See Section 11.3.6, “Spring MVC Test Framework”.

A ContentNegotiationStrategy is now

available for resolving the requested media types from an incoming

request. The available implementations are based on the file extension,

query parameter, the 'Accept' header, or a fixed content type.

Equivalent options were previously available only in the

ContentNegotiatingViewResolver but are now available throughout.

ContentNegotiationManager is the central

class to use when configuring content negotiation options.

For more details see Section 17.15.4, “Configuring Content Negotiation”.
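The idea of consulting several strategies in turn can be sketched without Spring. The class below is hypothetical: it checks the file extension, then a format query parameter, then the first entry of the 'Accept' header, and finally falls back to a fixed content type. The lookup order and the two known extensions are assumptions made for the example, and real negotiation also weighs quality values in the 'Accept' header.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

// Hypothetical sketch of chained media type resolution; not Spring's classes.
class ContentNegotiationSketch {

    private static final Map<String, String> KNOWN_TYPES = new HashMap<String, String>();
    static {
        KNOWN_TYPES.put("json", "application/json");
        KNOWN_TYPES.put("xml", "application/xml");
    }

    static String resolve(String path, String formatParam, String acceptHeader, String defaultType) {
        // 1. File extension of the last path segment.
        int dot = path.lastIndexOf('.');
        if (dot > path.lastIndexOf('/')) {
            String type = KNOWN_TYPES.get(path.substring(dot + 1).toLowerCase(Locale.ROOT));
            if (type != null) {
                return type;
            }
        }
        // 2. Query parameter such as ?format=xml.
        if (formatParam != null) {
            String type = KNOWN_TYPES.get(formatParam.toLowerCase(Locale.ROOT));
            if (type != null) {
                return type;
            }
        }
        // 3. First media type listed in the Accept header (parameters stripped).
        if (acceptHeader != null && acceptHeader.trim().length() > 0) {
            String first = acceptHeader.split(",")[0].trim();
            int semicolon = first.indexOf(';');
            if (semicolon >= 0) {
                first = first.substring(0, semicolon).trim();
            }
            if (first.length() > 0 && !"*/*".equals(first)) {
                return first;
            }
        }
        // 4. Fixed content type as the fallback.
        return defaultType;
    }
}
```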

The introduction of ContentNegotiationManager

also enables selective suffix pattern matching for incoming requests.

For more details, see the Javadoc of

RequestMappingHandlerMapping.setUseRegisteredSuffixPatternMatch.

Classes annotated with

@ControllerAdvice can contain

@ExceptionHandler,

@InitBinder, and

@ModelAttribute methods and those will

apply to @RequestMapping methods across

controller hierarchies as opposed to the controller hierarchy within which

they are declared. @ControllerAdvice is a

component annotation allowing implementation classes to be auto-detected

through classpath scanning.

A new @MatrixVariable annotation adds

support for extracting matrix variables from the request URI. For more

details see the section called “Matrix Variables”.
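For readers unfamiliar with matrix variables, they are name-value pairs embedded in a path segment and separated by semicolons, for example "cars;color=red,green;year=2012". The following JDK-only sketch parses one such segment. The class MatrixVariableSketch is hypothetical and only illustrates the syntax; it is not how Spring MVC extracts the values.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical parser for the matrix variables of a single path segment.
class MatrixVariableSketch {

    static Map<String, List<String>> parse(String pathSegment) {
        Map<String, List<String>> result = new LinkedHashMap<String, List<String>>();
        String[] parts = pathSegment.split(";");
        // parts[0] is the plain segment value (e.g. "cars"); the rest are name=value pairs.
        for (int i = 1; i < parts.length; i++) {
            int equals = parts[i].indexOf('=');
            if (equals <= 0) {
                continue; // skip entries without a name or without '='
            }
            String name = parts[i].substring(0, equals);
            List<String> values = result.get(name);
            if (values == null) {
                values = new ArrayList<String>();
                result.put(name, values);
            }
            // A single variable may carry several comma-separated values.
            for (String value : parts[i].substring(equals + 1).split(",")) {
                values.add(value);
            }
        }
        return result;
    }
}
```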

An abstract base class implementation of the

WebApplicationInitializer interface is

provided to simplify code-based registration of a DispatcherServlet and

filters mapped to it. The new class is named

AbstractDispatcherServletInitializer and its

sub-class

AbstractAnnotationConfigDispatcherServletInitializer

can be used with Java-based Spring configuration. For more details see

Section 17.14, “Code-based Servlet container initialization”.

ResponseEntityExceptionHandler is a convenient base class with an

@ExceptionHandler method that handles

standard Spring MVC exceptions and returns a

ResponseEntity, which allows customizing and

writing the response with HTTP message converters. This serves as an

alternative to the DefaultHandlerExceptionResolver,

which does the same but returns a ModelAndView

instead.

See the revised Section 17.11, “Handling exceptions” including information on customizing the default Servlet container error page.

The RestTemplate can now read an HTTP

response to a generic type (e.g. List<Account>). There

are three new exchange() methods that accept

ParameterizedTypeReference, a new class that

enables capturing and passing generic type info.
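Capturing generic type information at runtime usually relies on an anonymous subclass whose generic superclass records the type argument. The JDK-only sketch below shows that general idea with a hypothetical TypeRefSketch class. It is an assumption about the technique, not a copy of ParameterizedTypeReference itself.

```java
import java.lang.reflect.ParameterizedType;
import java.lang.reflect.Type;
import java.util.List;

// Hypothetical stand-in that illustrates how a type argument can be captured.
abstract class TypeRefSketch<T> {

    private final Type type;

    protected TypeRefSketch() {
        // For an anonymous subclass, the generic superclass is
        // TypeRefSketch<...> with the actual type argument preserved.
        Type superclass = getClass().getGenericSuperclass();
        if (!(superclass instanceof ParameterizedType)) {
            throw new IllegalStateException("Create an anonymous subclass with a type argument");
        }
        this.type = ((ParameterizedType) superclass).getActualTypeArguments()[0];
    }

    Type getType() {
        return this.type;
    }

    // The trailing {} creates the anonymous subclass that carries List<String>.
    static Type listOfStrings() {
        return new TypeRefSketch<List<String>>() {}.getType();
    }
}
```

In Spring the same pattern appears as new ParameterizedTypeReference<List<Account>>() {} passed to one of the exchange() methods.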

In support of this feature, the

HttpMessageConverter is extended by

GenericHttpMessageConverter adding a method

for reading content given a specified parameterized type. The new

interface is implemented by the

MappingJacksonHttpMessageConverter and also by a

new Jaxb2CollectionHttpMessageConverter that can

read a generic Collection where the

generic type is a JAXB type annotated with

@XmlRootElement or

@XmlType.

The Jackson JSON 2 library is now supported. Due to packaging

changes in the Jackson library, there are separate classes in Spring MVC

as well. Those are

MappingJackson2HttpMessageConverter and

MappingJackson2JsonView. Other related

configuration improvements include support for pretty printing as well as

a JacksonObjectMapperFactoryBean for convenient

customization of an ObjectMapper in XML

configuration.

Tiles 3 is now supported in addition to Tiles 2.x. Configuring

it should be very similar to the Tiles 2 configuration, i.e. the

combination of TilesConfigurer,

TilesViewResolver and TilesView

except using the tiles3 instead of the tiles2

package.

Also note that besides the version number change, the tiles

dependencies have also changed. You will need to have a subset or all of

tiles-request-api, tiles-api,

tiles-core, tiles-servlet,

tiles-jsp, tiles-el.

An @RequestBody or an

@RequestPart argument can now be followed

by an Errors argument making it possible to

handle validation errors (as a result of an

@Valid annotation) locally within the

@RequestMapping method.

@RequestBody now also supports a required

flag.

The HTTP request method PATCH may now be used in

@RequestMapping methods as well as in the

RestTemplate in conjunction with Apache

HttpComponents HttpClient version 4.2 or later. The JDK

HttpURLConnection does not support the

PATCH method.
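The JDK limitation can be observed directly: HttpURLConnection.setRequestMethod rejects PATCH with a ProtocolException. The small check below demonstrates this. The class name PatchSupportSketch and the localhost URL are placeholders, and no network connection is opened.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.ProtocolException;
import java.net.URI;

// Hypothetical check of which HTTP methods the JDK connection class accepts.
class PatchSupportSketch {

    static boolean jdkConnectionAccepts(String method) {
        try {
            // openConnection() only creates the connection object; nothing is sent.
            HttpURLConnection connection =
                    (HttpURLConnection) URI.create("http://localhost/").toURL().openConnection();
            connection.setRequestMethod(method);
            return true;
        } catch (ProtocolException ex) {
            // Thrown for methods the JDK does not recognize, such as PATCH.
            return false;
        } catch (IOException ex) {
            throw new IllegalStateException(ex);
        }
    }
}
```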

Mapped interceptors now support URL patterns to be excluded. The MVC namespace and the MVC JavaConfig both expose these options.

As of 3.2, Spring allows for @Autowired and

@Value to be used as meta-annotations,

e.g. to build custom injection annotations in combination with specific qualifiers.

Analogously, you may build custom @Bean definition

annotations for @Configuration classes,

e.g. in combination with specific qualifiers, @Lazy, @Primary, etc.
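The mechanics of a meta-annotation can be shown with plain reflection. In this sketch @InjectMarker is a hypothetical stand-in for an injection annotation such as @Autowired, and @MainDataSource is a custom annotation that carries it. The lookup in isInjectionPoint is an assumption about how such detection can work, not Spring's actual resolution code.

```java
import java.lang.annotation.Annotation;
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Field;

// Hypothetical stand-in for an injection annotation such as @Autowired.
@Retention(RetentionPolicy.RUNTIME)
@Target({ElementType.FIELD, ElementType.ANNOTATION_TYPE})
@interface InjectMarker {}

// Custom annotation that is itself annotated with the marker (a meta-annotation).
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.FIELD)
@InjectMarker
@interface MainDataSource {}

class MetaAnnotationSketch {

    @MainDataSource
    Object dataSource;

    Object plain;

    // True if the field carries the marker directly or through one level of meta-annotation.
    static boolean isInjectionPoint(Field field) {
        if (field.isAnnotationPresent(InjectMarker.class)) {
            return true;
        }
        for (Annotation annotation : field.getAnnotations()) {
            if (annotation.annotationType().isAnnotationPresent(InjectMarker.class)) {
                return true;
            }
        }
        return false;
    }

    static boolean isInjectionPoint(String fieldName) {
        try {
            return isInjectionPoint(MetaAnnotationSketch.class.getDeclaredField(fieldName));
        } catch (NoSuchFieldException ex) {
            throw new IllegalArgumentException(ex);
        }
    }
}
```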

Spring provides a CacheManager adapter for JCache, building against the JCache 0.5 preview release. Full JCache support is coming next year, along with Java EE 7 final.

The @DateTimeFormat annotation can

now be used without needing a dependency on the Joda Time library. If Joda

Time is not present the JDK SimpleDateFormat will

be used to parse and print date patterns. When Joda Time is present it

will continue to be used in preference to

SimpleDateFormat.
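As a reminder of what the JDK fallback offers, the sketch below parses a date with one pattern and prints it with another using SimpleDateFormat. The class name, the fixed UTC time zone, and the non-lenient setting are choices made for this example only and say nothing about how Spring configures its formatter.

```java
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;
import java.util.TimeZone;

// Hypothetical helper showing parse and print with the JDK SimpleDateFormat.
class SimpleDateFormatSketch {

    static String reformat(String text, String fromPattern, String toPattern) {
        SimpleDateFormat parser = newFormat(fromPattern);
        SimpleDateFormat printer = newFormat(toPattern);
        try {
            Date parsed = parser.parse(text);
            return printer.format(parsed);
        } catch (ParseException ex) {
            throw new IllegalArgumentException("Cannot parse '" + text + "' as " + fromPattern, ex);
        }
    }

    private static SimpleDateFormat newFormat(String pattern) {
        // SimpleDateFormat is not thread-safe, so a new instance is created per call.
        SimpleDateFormat format = new SimpleDateFormat(pattern, Locale.ROOT);
        format.setLenient(false); // reject dates such as February 30
        format.setTimeZone(TimeZone.getTimeZone("UTC"));
        return format;
    }
}
```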

It is now possible to define global formats that will be used when parsing and printing date and time types. See Section 7.7, “Configuring a global date & time format” for details.

In addition to the aforementioned inclusion of the Spring MVC Test Framework in

the spring-test module, the Spring

TestContext Framework has been revised with support for

integration testing web applications as well as configuring application

contexts with context initializers. For further details, consult the

following.

-

Configuring and loading a WebApplicationContext in integration tests

-

Configuring context hierarchies in integration tests

-

Testing request and session scoped beans

-

Improvements to Servlet API mocks

-

Configuring test application contexts with ApplicationContextInitializers

Spring Framework 3.2 includes fine-tuning of concurrent data structures in many parts of the framework, minimizing locks and generally improving the arrangements for highly concurrent creation of scoped/prototype beans.

Building and contributing to the framework has never been simpler with our move to a Gradle-based build system and source control at GitHub. See the building from source section of the README and the contributor guidelines for complete details.

Last but not least, Spring Framework 3.2 comes with refined Java 7 support within the framework as well as through upgraded third-party dependencies: specifically, CGLIB 3.0, ASM 4.0 (both of which come as inlined dependencies with Spring now) and AspectJ 1.7 support (next to the existing AspectJ 1.6 support).

This part of the reference documentation covers all of those technologies that are absolutely integral to the Spring Framework.

Foremost amongst these is the Spring Framework's Inversion of Control (IoC) container. A thorough treatment of the Spring Framework's IoC container is closely followed by comprehensive coverage of Spring's Aspect-Oriented Programming (AOP) technologies. The Spring Framework has its own AOP framework, which is conceptually easy to understand, and which successfully addresses the 80% sweet spot of AOP requirements in Java enterprise programming.

Coverage of Spring's integration with AspectJ (currently the richest - in terms of features - and certainly most mature AOP implementation in the Java enterprise space) is also provided.

Finally, the Spring team advocates the test-driven-development (TDD) approach to software development, and so Spring's support for integration testing is covered (alongside best practices for unit testing). The Spring team has found that the correct use of IoC makes both unit and integration testing easier (in that the presence of setter methods and appropriate constructors on classes makes them easier to wire together in a test without having to set up service locator registries and suchlike). The chapter dedicated solely to testing will hopefully convince you of this as well.