3.2.12-SNAPSHOT

The AWS adapter takes a Spring Cloud Function app and converts it to a form that can run in AWS Lambda.

Introduction

AWS Lambda

The details of how to get started with AWS Lambda are out of scope for this document, so the expectation is that the user has some familiarity with AWS and AWS Lambda and wants to learn what additional value Spring provides.

Getting Started

One of the goals of the Spring Cloud Function framework is to provide the necessary infrastructure elements to enable a simple function application to interact in a certain way in a particular environment. A simple function application (in the context of Spring) is an application that contains beans of type Supplier, Function or Consumer. So, with AWS it means that a simple function bean should somehow be recognised and executed in the AWS Lambda environment.

Let’s look at the example:

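    import java.util.function.Function;

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.context.annotation.Bean;

    @SpringBootApplication
    public class FunctionConfiguration {

        public static void main(String[] args) {
            SpringApplication.run(FunctionConfiguration.class, args);
        }

        // The function bean that the AWS adapter exposes as the Lambda.
        @Bean
        public Function<String, String> uppercase() {
            return value -> value.toUpperCase();
        }
    }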

It shows a complete Spring Boot application with a function bean defined in it. What’s interesting is that on the surface this is just another Boot app, but in the context of the AWS adapter it is also a perfectly valid AWS Lambda application. No other code or configuration is required. All you need to do is package it and deploy it, so let’s look at how we can do that.

To make things simpler we’ve provided a sample project ready to be built and deployed and you can access it here.

You simply execute ./mvnw clean package to generate the JAR file. All the necessary Maven plugins have already been set up to generate an appropriate AWS-deployable JAR file. (You can read more about the JAR layout in Notes on JAR Layout.)

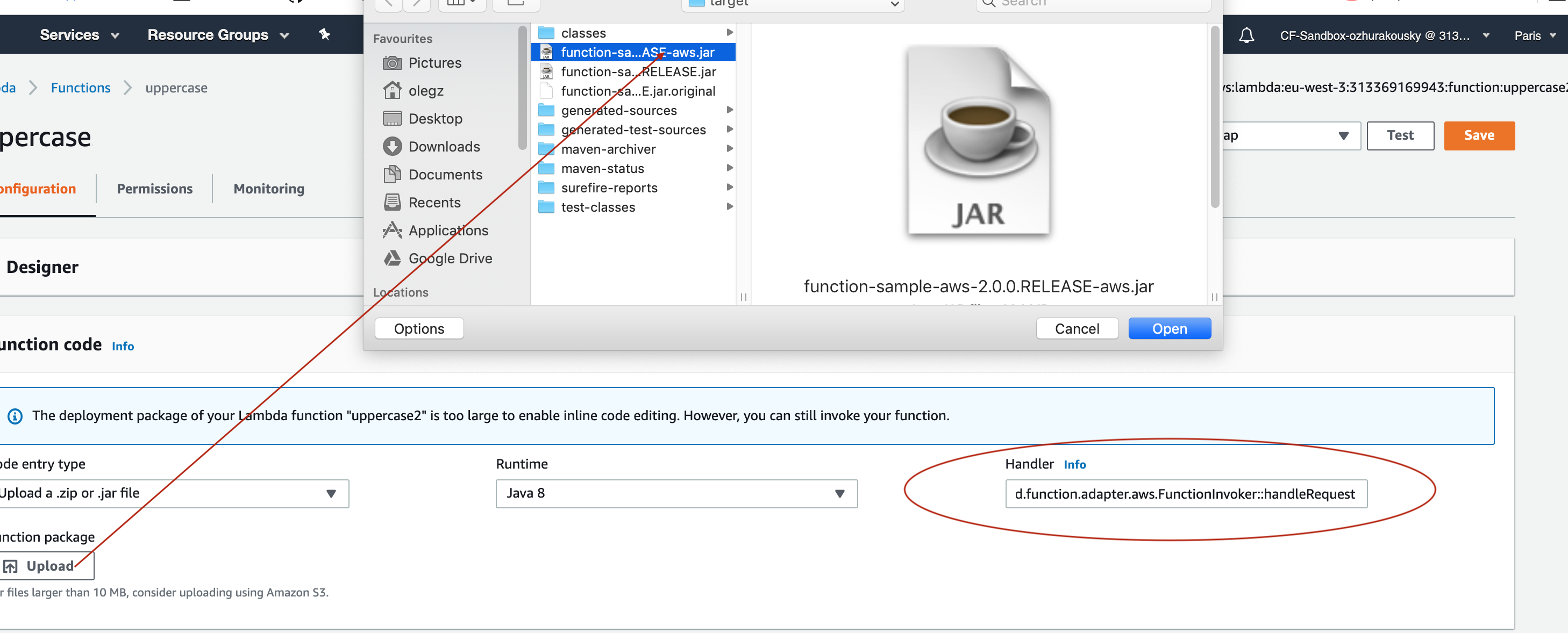

Then you have to upload the JAR file (via AWS dashboard or AWS CLI) to AWS.

When asked for the handler, specify org.springframework.cloud.function.adapter.aws.FunctionInvoker::handleRequest, which is a generic request handler.

That is all. Save and execute the function with some sample data. For this function the input is expected to be a String, which the function uppercases and returns.
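Because the bean is an ordinary java.util.function.Function, the result to expect from a sample input can be checked locally before deploying. The following is a minimal sketch, assuming a stand-alone class (UppercaseSketch is a hypothetical name, not part of the sample project) that rebuilds the same lambda and calls it directly, without Spring or AWS:

```java
import java.util.function.Function;

// Hypothetical stand-alone class, not part of the sample project.
// It rebuilds the lambda from the uppercase() bean and calls it directly.
final class UppercaseSketch {

    // Same body as the uppercase() bean method in the example application.
    static Function<String, String> uppercase() {
        return value -> value.toUpperCase();
    }

    public static void main(String[] args) {
        System.out.println(uppercase().apply("hello")); // prints HELLO
    }
}
```

This only exercises the function logic. It says nothing about how the adapter reads the Lambda input stream or writes the response.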

While org.springframework.cloud.function.adapter.aws.FunctionInvoker is a general-purpose AWS request handler implementation aimed at completely isolating you from the specifics of the AWS Lambda API, in some cases you may want to specify which AWS RequestHandler you want to use. The next section explains how you can accomplish just that.

AWS Request Handlers

The adapter has a couple of generic request handlers that you can use. The most generic (and the one we used in the Getting Started section) is org.springframework.cloud.function.adapter.aws.FunctionInvoker, which is an implementation of AWS’s RequestStreamHandler.

You don’t need to do anything other than specify it as the 'handler' on the AWS dashboard when deploying the function.

It handles most cases, including Kinesis, streaming, and so on.

If your app has more than one @Bean of type Function etc., you can choose the one to use by configuring the spring.cloud.function.definition property or environment variable. The functions are extracted from the Spring Cloud FunctionCatalog. If you don’t specify spring.cloud.function.definition, the framework attempts to find a default by searching first for a Function, then a Consumer, and finally a Supplier.
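The search order can be pictured with a small model. This is a simplified sketch and not the FunctionCatalog implementation: the class DefaultFunctionLookupSketch, the method findDefault, and the map of named beans are all assumptions made for illustration.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Supplier;

// Illustrative only: a simplified model of the default search order.
// It is not how FunctionCatalog is implemented.
final class DefaultFunctionLookupSketch {

    // Types are tried in this order: Function, then Consumer, then Supplier.
    private static final List<Class<?>> SEARCH_ORDER =
            Arrays.asList(Function.class, Consumer.class, Supplier.class);

    // Returns the name of the first bean of the highest-priority type, if any.
    static Optional<String> findDefault(Map<String, Object> beans) {
        for (Class<?> type : SEARCH_ORDER) {
            for (Map.Entry<String, Object> bean : beans.entrySet()) {
                if (type.isInstance(bean.getValue())) {
                    return Optional.of(bean.getKey());
                }
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        Map<String, Object> beans = new LinkedHashMap<>();
        beans.put("clock", (Supplier<Long>) System::currentTimeMillis);
        beans.put("logger", (Consumer<String>) System.out::println);
        beans.put("uppercase", (Function<String, String>) String::toUpperCase);
        // The Function wins even though it was registered last.
        System.out.println(findDefault(beans).orElse("none")); // prints uppercase
    }
}
```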

AWS Function Routing

One of the core features of Spring Cloud Function is routing - an ability to have one special function to delegate to other functions based on the user provided routing instructions.

In the AWS Lambda environment this feature provides one additional benefit: it allows you to bind a single function (the Routing Function) as an AWS Lambda and thus as a single HTTP endpoint for API Gateway. So in the end you manage only one function and one endpoint, while benefiting from the many functions that can be part of your application.

More details are available in the provided sample, but a few general points are worth mentioning.

Routing capabilities are enabled by default whenever there is more than one function in your application, because org.springframework.cloud.function.adapter.aws.FunctionInvoker cannot determine which function to bind as the AWS Lambda, so it defaults to RoutingFunction.

This means that all you need to do is provide routing instructions, which you can do using several mechanisms (see the sample for more details).
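The idea of one entry point delegating to named functions can be sketched in plain Java. This is a conceptual illustration only: RoutingSketch, register and route are hypothetical names, and the real RoutingFunction resolves its target from routing instructions such as spring.cloud.function.definition or spring.cloud.function.routing-expression rather than from a method argument.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

// Conceptual sketch only: a single entry point that delegates to a named function.
// This is not the RoutingFunction implementation.
final class RoutingSketch {

    private final Map<String, Function<String, String>> functions = new LinkedHashMap<>();

    RoutingSketch register(String name, Function<String, String> function) {
        functions.put(name, function);
        return this;
    }

    // 'definition' stands in for the routing instruction supplied by the caller.
    String route(String definition, String input) {
        Function<String, String> target = functions.get(definition);
        if (target == null) {
            throw new IllegalArgumentException("No function named " + definition);
        }
        return target.apply(input);
    }

    public static void main(String[] args) {
        RoutingSketch router = new RoutingSketch()
                .register("uppercase", String::toUpperCase)
                .register("reverse", s -> new StringBuilder(s).reverse().toString());
        System.out.println(router.route("uppercase", "hello")); // prints HELLO
        System.out.println(router.route("reverse", "hello"));   // prints olleh
    }
}
```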

Also, note that since AWS does not allow dots (.) or hyphens (-) in the name of an environment variable, you can benefit from Boot support and simply substitute dots with underscores and hyphens with camel case. So, for example, spring.cloud.function.definition becomes spring_cloud_function_definition and spring.cloud.function.routing-expression becomes spring_cloud_function_routingExpression.
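The substitution rule is mechanical, so it can be written as a small helper. This is a sketch under the assumption that only dots and hyphens need handling. EnvNameSketch and toEnvName are hypothetical names and are not part of Spring Boot or the adapter.

```java
// Hypothetical helper illustrating the naming rule; not a Spring API.
final class EnvNameSketch {

    // Replaces '.' with '_' and turns "-x" into "X" (camel case).
    static String toEnvName(String property) {
        StringBuilder out = new StringBuilder();
        boolean upperNext = false;
        for (char c : property.toCharArray()) {
            if (c == '.') {
                out.append('_');
                upperNext = false;
            } else if (c == '-') {
                upperNext = true; // drop the hyphen, capitalise what follows
            } else if (upperNext) {
                out.append(Character.toUpperCase(c));
                upperNext = false;
            } else {
                out.append(c);
            }
        }
        return out.toString();
    }

    public static void main(String[] args) {
        // prints spring_cloud_function_routingExpression
        System.out.println(toEnvName("spring.cloud.function.routing-expression"));
    }
}
```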

AWS Function Routing with Custom Runtime

When using a custom runtime, function routing works the same way. All you need to do is specify functionRouter as the AWS handler, the same way you would use the name of a function as the handler.

Notes on JAR Layout

You don’t need the Spring Cloud Function Web or Stream adapter at runtime in Lambda, so you might need to exclude those before you create the JAR you send to AWS. A Lambda application has to be shaded, but a Spring Boot standalone application does not, so you can run the same app using 2 separate jars (as per the sample). The sample app creates 2 jar files, one with an aws classifier for deploying in Lambda, and one executable (thin) jar that includes spring-cloud-function-web at runtime.

Spring Cloud Function will try to locate a "main class" for you from the JAR file manifest, using the Start-Class attribute (which will be added for you by the Spring Boot tooling if you use the starter parent). If there is no Start-Class in your manifest, you can use an environment variable or system property MAIN_CLASS when you deploy the function to AWS.

If you are not using the functional bean definitions but relying on Spring Boot’s auto-configuration, then additional transformers must be configured as part of the maven-shade-plugin execution.

    <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-shade-plugin</artifactId>
        <dependencies>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
                <version>2.7.1</version>
            </dependency>
        </dependencies>
        <executions>
            <execution>
                <goals>
                    <goal>shade</goal>
                </goals>
                <configuration>
                    <createDependencyReducedPom>false</createDependencyReducedPom>
                    <shadedArtifactAttached>true</shadedArtifactAttached>
                    <shadedClassifierName>aws</shadedClassifierName>
                    <transformers>
                        <transformer implementation="org.apache.maven.plugins.shade.resource.AppendingTransformer">
                            <resource>META-INF/spring.handlers</resource>
                        </transformer>
                        <transformer implementation="org.springframework.boot.maven.PropertiesMergingResourceTransformer">
                            <resource>META-INF/spring.factories</resource>
                        </transformer>
                        <transformer implementation="org.apache.maven.plugins.shade.resource.AppendingTransformer">
                            <resource>META-INF/spring.schemas</resource>
                        </transformer>
                        <transformer implementation="org.apache.maven.plugins.shade.resource.AppendingTransformer">
                            <resource>META-INF/spring.components</resource>
                        </transformer>
                    </transformers>
                </configuration>
            </execution>
        </executions>
    </plugin>

Build file setup

In order to run Spring Cloud Function applications on AWS Lambda, you can leverage Maven or Gradle plugins offered by the cloud platform provider.

Maven

In order to use the adapter plugin for Maven, add the plugin dependency to your pom.xml file:

    <dependencies>
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-function-adapter-aws</artifactId>
        </dependency>
    </dependencies>

As pointed out in Notes on JAR Layout, you will need a shaded jar in order to upload it to AWS Lambda. You can use the Maven Shade Plugin for that. An example of the setup can be found above.

You can use the Spring Boot Maven Plugin to generate the thin jar.

    <plugin>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-maven-plugin</artifactId>
        <dependencies>
            <dependency>
                <groupId>org.springframework.boot.experimental</groupId>
                <artifactId>spring-boot-thin-layout</artifactId>
                <version>${wrapper.version}</version>
            </dependency>
        </dependencies>
    </plugin>

You can find the entire sample pom.xml file for deploying Spring Cloud Function applications to AWS Lambda with Maven here.

Gradle

In order to use the adapter plugin for Gradle, add the dependency to your build.gradle file:

    dependencies {
        compile("org.springframework.cloud:spring-cloud-function-adapter-aws:${version}")
    }

As pointed out in Notes on JAR Layout, you will need a shaded jar in order to upload it to AWS Lambda. You can use the Gradle Shadow Plugin for that:

    buildscript {
        dependencies {
            classpath "com.github.jengelman.gradle.plugins:shadow:${shadowPluginVersion}"
        }
    }

    apply plugin: 'com.github.johnrengelman.shadow'

    assemble.dependsOn = [shadowJar]

    shadowJar {
        classifier = 'aws'
        dependencies {
            exclude(
                dependency("org.springframework.cloud:spring-cloud-function-web:${springCloudFunctionVersion}"))
        }
        // Required for Spring
        mergeServiceFiles()
        append 'META-INF/spring.handlers'
        append 'META-INF/spring.schemas'
        append 'META-INF/spring.tooling'
        transform(PropertiesFileTransformer) {
            paths = ['META-INF/spring.factories']
            mergeStrategy = "append"
        }
    }

You can use the Spring Boot Gradle Plugin and Spring Boot Thin Gradle Plugin to generate the thin jar.

    buildscript {
        dependencies {
            classpath("org.springframework.boot.experimental:spring-boot-thin-gradle-plugin:${wrapperVersion}")
            classpath("org.springframework.boot:spring-boot-gradle-plugin:${springBootVersion}")
        }
    }

    apply plugin: 'org.springframework.boot'
    apply plugin: 'org.springframework.boot.experimental.thin-launcher'

    assemble.dependsOn = [thinJar]

You can find the entire sample build.gradle file for deploying Spring Cloud Function applications to AWS Lambda with Gradle here.

Upload

Build the sample under spring-cloud-function-samples/function-sample-aws and upload the -aws jar file to Lambda. The handler can be example.Handler or org.springframework.cloud.function.adapter.aws.SpringBootStreamHandler (FQN of the class, not a method reference, although Lambda does accept method references).

./mvnw -U clean package

Using the AWS command line tools it looks like this:

aws lambda create-function --function-name Uppercase --role arn:aws:iam::[USERID]:role/service-role/[ROLE] --zip-file fileb://function-sample-aws/target/function-sample-aws-2.0.0.BUILD-SNAPSHOT-aws.jar --handler org.springframework.cloud.function.adapter.aws.SpringBootStreamHandler --description "Spring Cloud Function Adapter Example" --runtime java8 --region us-east-1 --timeout 30 --memory-size 1024 --publish

The input type for the function in the AWS sample is a Foo with a single property called "value". So you would need this to test it:

    {
        "value": "test"
    }

The AWS sample app is written in the "functional" style (as an ApplicationContextInitializer). This is much faster on startup in Lambda than the traditional @Bean style, so if you don’t need @Beans (or @EnableAutoConfiguration) it’s a good choice. Warm starts are not affected.

Type Conversion

Spring Cloud Function will attempt to transparently handle type conversion between the raw input stream and types declared by your function.

For example, if your function signature is Function<Foo, Bar>, we will attempt to convert the incoming stream event to an instance of Foo.

If the event type is not known or cannot be determined (e.g., Function<?, ?>), we will attempt to convert the incoming stream event to a generic Map.

Raw Input

There are times when you may want to have access to the raw input. In this case all you need to do is declare your function signature to accept InputStream, for example Function<InputStream, ?>. We will then not attempt any conversion and will pass the raw input directly to the function.
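A function that takes the raw stream is then responsible for reading it. The following is a minimal sketch, assuming the payload is UTF-8 text. RawInputSketch and rawToText are hypothetical names, and in an application the function would be exposed as a bean in the usual way.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.function.Function;

// Hypothetical example of a function that consumes the raw input stream.
final class RawInputSketch {

    // Reads the whole stream and returns it as UTF-8 text (assumed encoding).
    static Function<InputStream, String> rawToText() {
        return input -> {
            try {
                ByteArrayOutputStream buffer = new ByteArrayOutputStream();
                byte[] chunk = new byte[1024];
                int read;
                while ((read = input.read(chunk)) != -1) {
                    buffer.write(chunk, 0, read);
                }
                return new String(buffer.toByteArray(), StandardCharsets.UTF_8);
            } catch (IOException e) {
                // Function.apply cannot throw checked exceptions.
                throw new UncheckedIOException(e);
            }
        };
    }

    public static void main(String[] args) {
        byte[] payload = "{\"value\":\"test\"}".getBytes(StandardCharsets.UTF_8);
        // Prints the payload unchanged: {"value":"test"}
        System.out.println(rawToText().apply(new ByteArrayInputStream(payload)));
    }
}
```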

Functional Bean Definitions

Your functions will start much quicker if you can use functional bean definitions instead of @Bean. To do this, make your main class an ApplicationContextInitializer<GenericApplicationContext> and use the registerBean() methods in GenericApplicationContext to create all the beans you need. Your function needs to be registered as a bean of type FunctionRegistration so that the input and output types can be accessed by the framework. There is an example in GitHub (the AWS sample is written in this style). It would look something like this:

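    import java.util.function.Function;

    import org.springframework.boot.SpringBootConfiguration;
    import org.springframework.cloud.function.context.FunctionRegistration;
    import org.springframework.cloud.function.context.FunctionalSpringApplication;
    import org.springframework.cloud.function.context.catalog.FunctionTypeUtils;
    import org.springframework.context.ApplicationContextInitializer;
    import org.springframework.context.support.GenericApplicationContext;

    // Foo and Bar are the application's own POJO types.
    @SpringBootConfiguration
    public class FuncApplication implements ApplicationContextInitializer<GenericApplicationContext> {

        public static void main(String[] args) throws Exception {
            FunctionalSpringApplication.run(FuncApplication.class, args);
        }

        public Function<Foo, Bar> function() {
            return value -> new Bar(value.uppercase());
        }

        @Override
        public void initialize(GenericApplicationContext context) {
            // Register the function together with its input and output types.
            context.registerBean("function", FunctionRegistration.class,
                () -> new FunctionRegistration<Function<Foo, Bar>>(function())
                    .type(FunctionTypeUtils.functionType(Foo.class, Bar.class)));
        }
    }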

AWS Context

In a typical implementation of an AWS handler, the user has access to the AWS context object. With the function approach you can have the same experience if you need it.

Upon each invocation the framework adds an aws-context message header containing the AWS context instance for that particular invocation. So if you need to access it, you can simply have Message<YourPojo> as the input parameter of your function and then access aws-context from the message headers.

For convenience we provide the AWSLambdaUtils.AWS_CONTEXT constant.

Platform Specific Features

HTTP and API Gateway

AWS has some platform-specific data types, including batching of messages, which is much more efficient than processing each one individually. To make use of these types you can write a function that depends on those types. Or you can rely on Spring to extract the data from the AWS types and convert it to a Spring Message. To do this you tell AWS that the function is of a specific generic handler type (depending on the AWS service) and provide a bean of type Function<Message<S>,Message<T>>, where S and T are your business data types. If there is more than one bean of type Function you may also need to configure the Spring Boot property function.name to be the name of the target bean (e.g. use FUNCTION_NAME as an environment variable).

The supported AWS services and generic handler types are listed below:

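| Service | AWS Types | Generic Handler |
|---|---|---|
| API Gateway | APIGatewayProxyRequestEvent, APIGatewayProxyResponseEvent | org.springframework.cloud.function.adapter.aws.SpringBootApiGatewayRequestHandler |
| Kinesis | KinesisEvent | org.springframework.cloud.function.adapter.aws.SpringBootKinesisEventHandler |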

For example, to deploy behind an API Gateway, use --handler org.springframework.cloud.function.adapter.aws.SpringBootApiGatewayRequestHandler in your AWS command line (or via the UI) and define a @Bean of type Function<Message<Foo>,Message<Bar>> where Foo and Bar are POJO types (the data will be marshalled and unmarshalled by AWS using Jackson).

Custom Runtime

You can also benefit from the custom runtime feature of AWS Lambda, and Spring Cloud Function provides all the necessary components to make it easy.

From the code perspective the application should look no different than any other Spring Cloud Function application.

The only thing you need to do is provide a bootstrap script in the root of your zip/jar that runs the Spring Boot application, and select "Custom Runtime" when creating a function in AWS.

Here is an example 'bootstrap' file:

    #!/bin/sh

    cd ${LAMBDA_TASK_ROOT:-.}

    java -Dspring.main.web-application-type=none -Dspring.jmx.enabled=false \
      -noverify -XX:TieredStopAtLevel=1 -Xss256K -XX:MaxMetaspaceSize=128M \
      -Djava.security.egd=file:/dev/./urandom \
      -cp .:`echo lib/*.jar | tr ' ' :` com.example.LambdaApplication

The com.example.LambdaApplication represents your application which contains function beans.

Set the handler name in AWS to the name of your function. You can use function composition here as well (e.g., uppercase|reverse).
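A composed definition such as uppercase|reverse applies the functions from left to right, which in plain Java corresponds to Function.andThen. The sketch below only illustrates that ordering. CompositionSketch and the reverse function are hypothetical, and the framework performs the composition itself from the handler name.

```java
import java.util.function.Function;

// Illustrates the left-to-right order of a composed definition; names are hypothetical.
final class CompositionSketch {

    static final Function<String, String> UPPERCASE = String::toUpperCase;

    static final Function<String, String> REVERSE =
            value -> new StringBuilder(value).reverse().toString();

    // Equivalent ordering to the definition "uppercase|reverse".
    static Function<String, String> uppercaseThenReverse() {
        return UPPERCASE.andThen(REVERSE);
    }

    public static void main(String[] args) {
        System.out.println(uppercaseThenReverse().apply("hello")); // prints OLLEH
    }
}
```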

That is pretty much all. Once you upload your zip/jar to AWS, your function will run in a custom runtime.

We provide a sample project where you can also see how to configure your POM to properly generate the zip file.

The functional bean definition style works for custom runtimes as well, and is faster than the @Bean style. A custom runtime can start up much quicker even than a functional bean implementation of a Java lambda - it depends mostly on the number of classes you need to load at runtime. Spring doesn’t do very much here, so you can reduce the cold start time by only using primitive types in your function, for instance, and not doing any work in custom @PostConstruct initializers.