Spring Boot for Apache Geode provides the convenience of Spring Boot’s convention over configuration approach by using auto-configuration with Spring Framework’s powerful abstractions and highly consistent programming model to simplify the development of Apache Geode applications in a Spring context.

Secondarily, Spring Boot for Apache Geode provides developers with a consistent experience whether building and running Spring Boot, Apache Geode applications locally or in a managed environment, such as with VMware Tanzu Application Service (TAS).

This project is a continuation and a logical extension to Spring Data for Apache Geode’s Annotation-based configuration model, and the goals set forth in that model: To enable application developers to get up and running as quickly, reliably, and as easily as possible. In fact, Spring Boot for Apache Geode builds on this very foundation cemented in Spring Data for Apache Geode since the Spring Data Kay (2.0) Release Train.

1. Introduction

Spring Boot for Apache Geode automatically applies auto-configuration to several key application concerns (use cases) including, but not limited to:

- Look-Aside, [Async] Inline, Near and Multi-Site Caching, by using Apache Geode as a caching provider in Spring’s Cache Abstraction. For more information, see Caching with Apache Geode.

- System of Record (SOR), persisting application state in Apache Geode by using Spring Data Repositories. For more information, see Spring Data Repositories.

- Transactions, managing application state consistently with Spring Transaction Management with support for both Local Cache and Global JTA Transactions.

- Distributed Computations, run with Apache Geode’s Function Execution framework and conveniently implemented and executed with POJO-based, annotation support for Functions. For more information, see Function Implementations & Executions.

- Continuous Queries, expressing interests in a stream of events and letting applications react to and process changes to data in near real-time with Apache Geode’s Continuous Query (CQ). Listeners/Handlers are defined as simple Message-Driven POJOs (MDP) with Spring’s Message Listener Container, which has been extended with its configurable CQ support. For more information, see Continuous Query.

- Data Serialization using Apache Geode PDX with first-class configuration and support. For more information, see Data Serialization with PDX.

- Data Initialization to quickly load (import) data to hydrate the cache during application startup or write (export) data on application shutdown to move data between environments (for example, TEST to DEV). For more information, see Using Data.

- Actuator, to gain insight into the runtime behavior and operation of your cache, whether a client or a peer. For more information, see Spring Boot Actuator.

- Logging, to quickly and conveniently enable or adjust Apache Geode log levels in your Spring Boot application to gain insight into the runtime operations of the application as they occur. For more information, see Logging.

- Security, including Authentication & Authorization, and Transport Layer Security (TLS) with Apache Geode Secure Socket Layer (SSL). Once more, Spring Data for Apache Geode includes first-class support for configuring Auth and SSL. For more information, see Security.

- HTTP Session state management, by including Spring Session for Apache Geode on your application’s classpath. For more information, see Spring Session.

- Testing. Whether you write Unit or Integration Tests for Apache Geode in a Spring context, SBDG covers all your testing needs with the help of STDG.

While Spring Data for Apache Geode offers a simple, consistent, convenient and declarative approach to configure all these powerful Apache Geode features, Spring Boot for Apache Geode makes it even easier to do, as we will explore throughout this reference documentation.

1.1. Goals

While the SBDG project has many goals and objectives, the primary goals of this project center around three key principles:

- From Open Source (Apache Geode) to Commercial (VMware Tanzu GemFire).

- From Non-Managed (self-managed/self-hosted or on-premise installations) to Managed (VMware Tanzu GemFire for VMs, VMware Tanzu GemFire for K8S) environments.

- With little to no code or configuration changes necessary.

It is also possible to go in the reverse direction, from Managed back to a Non-Managed environment and even from Commercial back to the Open Source offering, again, with little to no code or configuration changes.

| SBDG’s promise is to deliver on these principles as much as is technically possible and as is technically allowed by Apache Geode. |

2. Getting Started

To be immediately productive and as effective as possible when you use Spring Boot for Apache Geode, it helps to understand the foundation on which this project is built.

The story begins with the Spring Framework and the core technologies and concepts built into the Spring container.

Then our journey continues with the extensions built into Spring Data for Apache Geode to simplify the development of Apache Geode applications in a Spring context, using Spring’s powerful abstractions and highly consistent programming model. This part of the story was greatly enhanced in Spring Data Kay, with the Annotation-based configuration model. Though this new configuration approach uses annotations and provides sensible defaults, its use is also very explicit and assumes nothing. If any part of the configuration is ambiguous, SDG will fail fast. SDG gives you choice, so you still must tell SDG what you want.

Next, we venture into Spring Boot and all of its wonderfully expressive and highly opinionated “convention over configuration” approach for getting the most out of your Spring Apache Geode applications in the easiest, quickest, and most reliable way possible. We accomplish this by combining Spring Data for Apache Geode’s annotation-based configuration with Spring Boot’s auto-configuration to get you up and running even faster and more reliably so that you are productive from the start.

As a result, it would be pertinent to begin your Spring Boot education with Spring Boot’s documentation.

Finally, we arrive at Spring Boot for Apache Geode (SBDG).

3. Using Spring Boot for Apache Geode

To use Spring Boot for Apache Geode, declare the spring-geode-starter on your Spring Boot application classpath:

<dependencies>

<dependency>

<groupId>org.springframework.geode</groupId>

<artifactId>spring-geode-starter</artifactId>

<version>1.7.5</version>

</dependency>

</dependencies>

Or, with Gradle:

dependencies {

implementation 'org.springframework.geode:spring-geode-starter:1.7.5'

}

3.1. Maven BOM

If you anticipate using more than one Spring Boot for Apache Geode (SBDG) module in your Spring Boot application, you can also declare the new org.springframework.geode:spring-geode-bom Maven BOM in your application Maven POM.

Your application use case may require more than one module if, for example, you need (HTTP) Session state management and replication (spring-geode-starter-session), if you need to enable Spring Boot Actuator endpoints for Apache Geode (spring-geode-starter-actuator), or if you need assistance writing complex Unit and (Distributed) Integration Tests with Spring Test for Apache Geode (STDG) (spring-geode-starter-test).

You can declare and use any one of the SBDG modules:

- spring-geode-starter

- spring-geode-starter-actuator

- spring-geode-starter-logging

- spring-geode-starter-session

- spring-geode-starter-test

When more than one SBDG module is in use, it makes sense to declare the spring-geode-bom to manage all the dependencies so that the versions and transitive dependencies align properly.

A Spring Boot application Maven POM that declares the spring-geode-bom along with two or more module dependencies might appear as follows:

<project xmlns="http://maven.apache.org/POM/4.0.0">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.7.5</version>

</parent>

<artifactId>my-spring-boot-application</artifactId>

<properties>

<spring-geode.version>1.7.5</spring-geode.version>

</properties>

<dependencyManagement>

<dependencies>

<dependency>

<groupId>org.springframework.geode</groupId>

<artifactId>spring-geode-bom</artifactId>

<version>${spring-geode.version}</version>

<scope>import</scope>

<type>pom</type>

</dependency>

</dependencies>

</dependencyManagement>

<dependencies>

<dependency>

<groupId>org.springframework.geode</groupId>

<artifactId>spring-geode-starter</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.geode</groupId>

<artifactId>spring-geode-starter-session</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.geode</groupId>

<artifactId>spring-geode-starter-test</artifactId>

<scope>test</scope>

</dependency>

</dependencies>

</project>

Notice that:

- The Spring Boot application Maven POM (pom.xml) contains a <dependencyManagement> section that declares the org.springframework.geode:spring-geode-bom.

- None of the spring-geode-starter[-xyz] dependencies explicitly specify a <version>. The version is managed by the spring-geode.version property, making it easy to switch between versions of SBDG as needed and use it in all the SBDG modules declared and used in your application Maven POM.

If you change the version of SBDG, be sure to change the org.springframework.boot:spring-boot-starter-parent POM version to match. SBDG is always one major version behind but matches on minor version and patch version (and version qualifier, such as SNAPSHOT, M#, RC#, or RELEASE, if applicable).

For example, SBDG 1.4.0 is based on Spring Boot 2.4.0, SBDG 1.3.5.RELEASE is based on Spring Boot 2.3.5.RELEASE, and so on. It is important that the versions align.
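The alignment rule can be written down as a small check. The following is a minimal sketch in plain Java; the class and method names (SbdgVersionAlignment, springBootVersionFor, isAligned) are hypothetical helpers invented for illustration and are not part of SBDG or Spring Boot.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper (not part of SBDG): derives the Spring Boot version
// that a given SBDG version is expected to align with.
final class SbdgVersionAlignment {

    // major.minor.patch followed by an optional qualifier, for example ".RELEASE" or "-SNAPSHOT"
    private static final Pattern VERSION = Pattern.compile("(\\d+)\\.(\\d+)\\.(\\d+)(.*)");

    static String springBootVersionFor(String sbdgVersion) {
        Matcher matcher = VERSION.matcher(sbdgVersion.trim());
        if (!matcher.matches()) {
            throw new IllegalArgumentException("Unrecognized version: " + sbdgVersion);
        }
        // SBDG is one major version behind Spring Boot; everything else matches.
        int springBootMajor = Integer.parseInt(matcher.group(1)) + 1;
        return springBootMajor + "." + matcher.group(2) + "." + matcher.group(3) + matcher.group(4);
    }

    static boolean isAligned(String sbdgVersion, String springBootVersion) {
        return springBootVersionFor(sbdgVersion).equals(springBootVersion);
    }

    public static void main(String[] args) {
        System.out.println(springBootVersionFor("1.7.5"));         // prints 2.7.5
        System.out.println(springBootVersionFor("1.3.5.RELEASE")); // prints 2.3.5.RELEASE
        System.out.println(isAligned("1.4.0", "2.3.5.RELEASE"));   // prints false
    }
}
```

A check like this could run in a build script or a startup test to catch a mismatched pair of versions early.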

| All of these concerns are handled for you by going to start.spring.io and adding the “_Spring for Apache Geode_” dependency to a project. For convenience, you can click this link to get started. |

3.2. Gradle Dependency Management

Using Gradle is similar to using Maven.

Again, if you declare and use more than one SBDG module in your Spring Boot application (for example, the spring-geode-starter along with the spring-geode-starter-session dependency), declaring the spring-geode-bom inside your application Gradle build file helps.

Your application Gradle build file configuration (roughly) appears as follows:

plugins {

id 'org.springframework.boot' version '2.7.5'

id 'io.spring.dependency-management' version '1.0.10.RELEASE'

id 'java'

}

// ...

ext {

set('springGeodeVersion', "1.7.5")

}

dependencies {

implementation 'org.springframework.geode:spring-geode-starter'

implementation 'org.springframework.geode:spring-geode-starter-actuator'

testImplementation 'org.springframework.boot:spring-boot-starter-test'

}

dependencyManagement {

imports {

mavenBom "org.springframework.geode:spring-geode-bom:${springGeodeVersion}"

}

}

A combination of the Spring Boot Gradle Plugin and the Spring Dependency Management Gradle Plugin manages the application dependencies for you.

In a nutshell, the Spring Dependency Management Gradle Plugin provides dependency management capabilities for Gradle, much like Maven. The Spring Boot Gradle Plugin defines a curated and tested set of versions for many third party Java libraries. Together, they make adding dependencies and managing (compatible) versions easier.

Again, you do not need to explicitly declare the version when adding a dependency, including a new SBDG module dependency (for example, spring-geode-starter-session), since this has already been determined for you. You can declare the dependency as follows:

implementation 'org.springframework.geode:spring-geode-starter-session'

The version of SBDG is controlled by the extension property (springGeodeVersion) in the application Gradle build file.

To use a different version of SBDG, set the springGeodeVersion property to the desired version (for example, 1.3.5.RELEASE). Make sure that the version of Spring Boot matches.

SBDG is always one major version behind but matches on minor version and patch version (and version qualifier, such as SNAPSHOT, M#, RC#, or RELEASE, if applicable). For example, SBDG 1.4.0 is based on Spring Boot 2.4.0, SBDG 1.3.5.RELEASE is based on Spring Boot 2.3.5.RELEASE, and so on. It is important that the versions align.

| All of these concerns are handled for you by going to start.spring.io and adding the “_Spring for Apache Geode_” dependency to a project. For convenience, you can click this link to get started. |

4. Primary Dependency Versions

Spring Boot for Apache Geode 1.7.5 builds and depends on the following versions of the base projects listed below:

| Name | Version |
|---|---|
| Java (JRE) | 1.8 |
| Apache Geode | 1.14.4 |
| Spring Framework | 5.3.23 |
| Spring Boot | 2.7.5 |
| Spring Data for Apache Geode | 2.7.5 |
| Spring Session for Apache Geode | 2.7.1 |
| Spring Test for Apache Geode | 0.3.4-RAJ |

It is essential that the versions of all the dependencies listed in the table above align accordingly. If the dependency versions are misaligned, functionality could be missing, or certain functions could deviate unpredictably from their specified contracts.

Please follow the dependency versions listed in the table above and use them as a guide when setting up your Spring Boot projects using Apache Geode.

Again, the best way to set up your Spring Boot projects is to first declare the spring-boot-starter-parent Maven POM as the parent POM in your project POM:

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.7.5</version>

</parent>

Or, when using Gradle:

plugins {

id 'org.springframework.boot' version '2.7.5'

id 'io.spring.dependency-management' version '1.0.10.RELEASE'

id 'java'

}

And then, use the Spring Boot for Apache Geode spring-geode-bom. For example, with Maven:

spring-geode-bom BOM in Maven

<properties>

<spring-geode.version>1.7.5</spring-geode.version>

</properties>

<dependencyManagement>

<dependencies>

<dependency>

<groupId>org.springframework.geode</groupId>

<artifactId>spring-geode-bom</artifactId>

<version>${spring-geode.version}</version>

<scope>import</scope>

<type>pom</type>

</dependency>

</dependencies>

</dependencyManagement>

<dependencies>

<dependency>

<groupId>org.springframework.geode</groupId>

<artifactId>spring-geode-starter</artifactId>

</dependency>

</dependencies>

Or, with Gradle:

spring-geode-bom BOM in Gradle

ext {

set('springGeodeVersion', "1.7.5")

}

dependencies {

implementation 'org.springframework.geode:spring-geode-starter'

}

dependencyManagement {

imports {

mavenBom "org.springframework.geode:spring-geode-bom:${springGeodeVersion}"

}

}

All of this is made simple by going to start.spring.io and creating a Spring Boot 2.7.5 project using Apache Geode.

4.1. Overriding Dependency Versions

While Spring Boot for Apache Geode requires baseline versions of the primary dependencies listed above, it is possible, using Spring Boot’s dependency management capabilities, to override the versions of 3rd-party Java libraries and dependencies managed by Spring Boot itself.

When your Spring Boot application Maven POM inherits from the org.springframework.boot:spring-boot-starter-parent, or alternatively, applies the Spring Dependency Management Gradle Plugin (io.spring.dependency-management) along with the Spring Boot Gradle Plugin (org.springframework.boot) in your Spring Boot application Gradle build file, then you automatically enable the dependency management capabilities provided by Spring Boot for all 3rd-party Java libraries and dependencies curated and managed by Spring Boot.

Spring Boot’s dependency management harmonizes all 3rd-party Java libraries and dependencies that you are likely to use in your Spring Boot applications. All these dependencies have been tested and proven to work with the version of Spring Boot and other Spring dependencies (e.g. Spring Data, Spring Security) you may be using in your Spring Boot applications.

Still, there may be times when you want, or even need, to override the version of some 3rd-party Java libraries used by your Spring Boot applications that are specifically managed by Spring Boot. When you know that using a different version of a managed dependency is safe, you have a few options for overriding the dependency version:

| Use caution when overriding dependencies, since they may not be compatible with other dependencies managed by Spring Boot that you may have declared on your application classpath, for example, by adding a starter. It is common for multiple Java libraries to share the same transitive dependency but depend on different versions of it (for example, logging). This often leads to Exceptions thrown at runtime due to API differences. Keep in mind that Java resolves classes on the classpath from the first class definition that is found in the order that JARs or paths have been defined on the classpath. Finally, Spring does not support dependency versions that have been overridden and do not match the versions declared and managed by Spring Boot. See documentation. |

| You should refer to Spring Boot’s documentation on Dependency Management for more details. |
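Because the first matching class definition on the classpath wins, it can help to check where a class is actually found after overriding a version. The following is a minimal sketch in plain Java, not a Spring Boot or Apache Geode facility; the class name ClasspathLocator and its method are hypothetical. It lists every location that contains a given class, in the order the class loader reports them, which is normally the order used for resolution.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.URL;
import java.util.Collections;
import java.util.List;

// Hypothetical diagnostic helper: shows where a class can be found on the classpath.
final class ClasspathLocator {

    // Returns all locations containing the class file, in the order reported by the class loader.
    static List<URL> locationsOf(String className) {
        String resource = className.replace('.', '/') + ".class";
        ClassLoader loader = Thread.currentThread().getContextClassLoader();
        if (loader == null) {
            loader = ClassLoader.getSystemClassLoader();
        }
        try {
            return Collections.list(loader.getResources(resource));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // The default class name is only an example; pass any fully qualified class name.
        String className = args.length > 0 ? args[0] : "org.apache.logging.log4j.Logger";
        List<URL> locations = locationsOf(className);
        if (locations.isEmpty()) {
            System.out.println(className + " was not found on the classpath");
        }
        for (URL location : locations) {
            System.out.println(location);
        }
        if (locations.size() > 1) {
            System.out.println("More than one definition found; the earlier entries normally take precedence");
        }
    }
}
```

If a class appears in more than one JAR, two versions of the same library are probably on the classpath, which is the situation the warning above describes.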

4.1.1. Version Property Override

Perhaps the easiest option to change the version of a Spring Boot managed dependency is to set the version property used by Spring Boot to control the dependency’s version to the desired Java library version.

For example, if you want to use a different version of Log4j than what is currently set and determined by Spring Boot, then you would do:

<properties>

<log4j2.version>2.17.2</log4j2.version>

</properties>

Or, with Gradle:

ext['log4j2.version'] = '2.17.2'

| The Log4j version number used in the Maven and Gradle examples shown above is arbitrary. You must set the log4j2.version property to a valid Log4j version that would be resolvable by Maven or Gradle when given the fully qualified artifact: org.apache.logging.log4j:log4j:2.17.2. |

The version property name must precisely match the version property declared in the spring-boot-dependencies Maven POM.

See Spring Boot’s documentation on version properties.

Additional details can be found in the Spring Boot Maven Plugin documentation as well as the Spring Boot Gradle Plugin documentation.

4.1.2. Override with Dependency Management

This option is not specific to Spring in general, or Spring Boot in particular, but applies to Maven and Gradle, which both have intrinsic dependency management features and capabilities.

This approach is useful to not only control the versions of the dependencies managed by Spring Boot directly, but also control the versions of dependencies that may be transitively pulled in by the dependencies that are managed by Spring Boot. Additionally, this approach is more universal since it is handled by Maven or Gradle itself.

For example, when you declare the org.springframework.boot:spring-boot-starter-test dependency in your Spring Boot application Maven POM or Gradle build file for testing purposes, you will see a dependency tree similar to:

$gradlew dependencies OR $mvn dependency:tree

...

[INFO] +- org.springframework.boot:spring-boot-starter-test:jar:2.6.4:test

[INFO] | +- org.springframework.boot:spring-boot-test:jar:2.6.4:test

[INFO] | +- org.springframework.boot:spring-boot-test-autoconfigure:jar:2.6.4:test

[INFO] | +- com.jayway.jsonpath:json-path:jar:2.6.0:test

[INFO] | | +- net.minidev:json-smart:jar:2.4.8:test

[INFO] | | | \- net.minidev:accessors-smart:jar:2.4.8:test

[INFO] | | | \- org.ow2.asm:asm:jar:9.1:test

[INFO] | | \- org.slf4j:slf4j-api:jar:1.7.36:compile

[INFO] | +- jakarta.xml.bind:jakarta.xml.bind-api:jar:2.3.3:test

[INFO] | | \- jakarta.activation:jakarta.activation-api:jar:1.2.2:test

[INFO] | +- org.assertj:assertj-core:jar:3.21.0:compile

[INFO] | +- org.hamcrest:hamcrest:jar:2.2:compile

[INFO] | +- org.junit.jupiter:junit-jupiter:jar:5.8.2:test

[INFO] | | +- org.junit.jupiter:junit-jupiter-api:jar:5.8.2:test

[INFO] | | | +- org.opentest4j:opentest4j:jar:1.2.0:test

[INFO] | | | +- org.junit.platform:junit-platform-commons:jar:1.8.2:test

[INFO] | | | \- org.apiguardian:apiguardian-api:jar:1.1.2:test

[INFO] | | +- org.junit.jupiter:junit-jupiter-params:jar:5.8.2:test

[INFO] | | \- org.junit.jupiter:junit-jupiter-engine:jar:5.8.2:test

[INFO] | | \- org.junit.platform:junit-platform-engine:jar:1.8.2:test

...

If you wanted to override and control the version of the opentest4j transitive dependency, for whatever reason, perhaps because you are using the opentest4j API directly in your application tests, then you could add dependency management in either Maven or Gradle to control the opentest4j dependency version.

| The opentest4j dependency is pulled in by JUnit and is not a dependency that Spring Boot specifically manages. Of course, Maven or Gradle’s dependency management capabilities can be used to override dependencies that are managed by Spring Boot as well. |

Using the opentest4j dependency as an example, you can override the dependency version by doing the following:

<project>

<dependencyManagement>

<dependencies>

<dependency>

<groupId>org.opentest4j</groupId>

<artifactId>opentest4j</artifactId>

<version>1.0.0</version>

</dependency>

</dependencies>

</dependencyManagement>

</project>

Or, with Gradle:

plugins {

id 'org.springframework.boot' version '2.7.5'

}

apply plugin: 'io.spring.dependency-management'

dependencyManagement {

dependencies {

dependency 'org.opentest4j:opentest4j:1.0.0'

}

}

After applying Maven or Gradle dependency management configuration, you will then see:

$gradlew dependencies OR $mvn dependency:tree

...

[INFO] +- org.springframework.boot:spring-boot-starter-test:jar:2.6.4:test

...

[INFO] | | | +- org.opentest4j:opentest4j:jar:1.0.0:test

...

For more details on Maven dependency management, refer to the documentation.

For more details on Gradle dependency management, refer to the documentation.

5. Building ClientCache Applications

The first opinionated option provided to you by Spring Boot for Apache Geode (SBDG) is a ClientCache instance that you get by declaring Spring Boot for Apache Geode on your application classpath.

It is assumed that most application developers who use Spring Boot to build applications backed by Apache Geode are building cache client applications deployed in an Apache Geode Client/Server Topology. The client/server topology is the most common and traditional architecture employed by enterprise applications that use Apache Geode.

For example, you can begin building a Spring Boot Apache Geode ClientCache application by declaring the spring-geode-starter on your application’s classpath:

<dependency>

<groupId>org.springframework.geode</groupId>

<artifactId>spring-geode-starter</artifactId>

</dependency>

Then you configure and bootstrap your Spring Boot, Apache Geode ClientCache application with the following main application class:

ClientCache Application

@SpringBootApplication

public class SpringBootApacheGeodeClientCacheApplication {

public static void main(String[] args) {

SpringApplication.run(SpringBootApacheGeodeClientCacheApplication.class, args);

}

}

Your application now has a ClientCache instance that can connect to an Apache Geode server running on localhost and listening on the default CacheServer port, 40404.

By default, an Apache Geode server (that is, CacheServer) must be running for the application to use the ClientCache instance. However, it is perfectly valid to create a ClientCache instance and perform data access operations by using LOCAL Regions. This is useful during development.
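During development it can be handy to know whether anything is listening on the default CacheServer port before starting the client. The following is a minimal sketch in plain Java; the class name CacheServerProbe is hypothetical, and the check only confirms that a TCP listener accepts connections on the port, not that the listener is an Apache Geode CacheServer.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: checks whether some process accepts TCP connections on a host and port.
final class CacheServerProbe {

    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host unreachable
            return false;
        }
    }

    public static void main(String[] args) {
        // 40404 is the default CacheServer port mentioned above.
        if (isListening("localhost", 40404, 500)) {
            System.out.println("A listener is accepting connections on localhost:40404");
        } else {
            System.out.println("Nothing is listening on localhost:40404; consider LOCAL Regions for development");
        }
    }
}
```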

| To develop with LOCAL Regions, configure your cache Regions with the ClientRegionShortcut.LOCAL data management policy. |

When you are ready to switch from your local development environment (IDE) to a client/server architecture in a managed environment, change the data management policy of the client Region from LOCAL back to the default (PROXY) or even a CACHING_PROXY, which causes the data to be sent to and received from one or more servers.
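One way to apply the LOCAL policy is with SDG's ClientRegionFactoryBean. The following is a minimal sketch rather than the only approach: the configuration class name and the Region name "Example" are hypothetical, and the sketch assumes that spring-geode-starter is on the classpath so that SBDG supplies the GemFireCache bean.

```java
import org.apache.geode.cache.GemFireCache;
import org.apache.geode.cache.client.ClientRegionShortcut;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.data.gemfire.client.ClientRegionFactoryBean;

// Hypothetical configuration class used only for illustration.
@Configuration
class LocalRegionConfiguration {

    // "Example" is an assumed Region name; use the name your application expects.
    @Bean("Example")
    ClientRegionFactoryBean<Object, Object> exampleRegion(GemFireCache gemfireCache) {
        ClientRegionFactoryBean<Object, Object> exampleRegion = new ClientRegionFactoryBean<>();
        exampleRegion.setCache(gemfireCache);
        // LOCAL keeps the data in the client only, so no server is contacted for this Region.
        // Change to PROXY or CACHING_PROXY when moving to a client/server topology.
        exampleRegion.setShortcut(ClientRegionShortcut.LOCAL);
        return exampleRegion;
    }
}
```

Keeping the shortcut in one bean definition means that switching between development and client/server deployment is a one-line change.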

| Compare and contrast the preceding configuration with the Spring Data for Apache Geode approach. |

It is uncommon to ever need a direct reference to the ClientCache instance provided by SBDG injected into your application components (for example, @Service or @Repository beans defined in a Spring ApplicationContext), whether you are configuring additional Apache Geode objects (Regions, Indexes, and so on) or are using those objects indirectly in your applications. However, it is possible to do so if and when needed.

For example, perhaps you want to perform some additional ClientCache initialization in a Spring Boot ApplicationRunner on startup:

GemFireCache reference

@SpringBootApplication

public class SpringBootApacheGeodeClientCacheApplication {

public static void main(String[] args) {

SpringApplication.run(SpringBootApacheGeodeClientCacheApplication.class, args);

}

@Bean

ApplicationRunner runAdditionalClientCacheInitialization(GemFireCache gemfireCache) {

return args -> {

ClientCache clientCache = (ClientCache) gemfireCache;

// perform additional ClientCache initialization as needed

};

}

}

5.1. Building Embedded (Peer & Server) Cache Applications

What if you want to build an embedded peer Cache application instead?

Perhaps you need an actual peer cache member, configured and bootstrapped with Spring Boot, along with the ability to join this member to an existing cluster (of data servers) as a peer node.

Remember the second goal in Spring Boot’s documentation:

Be opinionated out of the box but get out of the way quickly as requirements start to diverge from the defaults.

Here, we focus on the second part of the goal: "get out of the way quickly as requirements start to diverge from the defaults".

If your application requirements demand you use Spring Boot to configure and bootstrap an embedded peer Cache instance, declare your intention with either SDG’s @PeerCacheApplication annotation, or, if you also need to enable connections from ClientCache applications, use SDG’s @CacheServerApplication annotation:

CacheServer Application

@SpringBootApplication

@CacheServerApplication(name = "SpringBootApacheGeodeCacheServerApplication")

public class SpringBootApacheGeodeCacheServerApplication {

public static void main(String[] args) {

SpringApplication.run(SpringBootApacheGeodeCacheServerApplication.class, args);

}

}

| An Apache Geode server is not necessarily a CacheServer capable of serving cache clients. It is merely a peer member node in an Apache Geode cluster (that is, a distributed system) that stores and manages data. |

By explicitly declaring the @CacheServerApplication annotation, you tell Spring Boot that you do not want the default ClientCache instance but rather want an embedded peer Cache instance with a CacheServer component, which enables connections from ClientCache applications.

You can also enable two other Apache Geode services:

- An embedded Locator, which allows clients or even other peers to locate servers in the cluster.

- An embedded Manager, which allows the Apache Geode application process to be managed and monitored by using Gfsh, Apache Geode’s command-line shell tool.

CacheServer Application with Locator and Manager services enabled

@SpringBootApplication

@CacheServerApplication(name = "SpringBootApacheGeodeCacheServerApplication")

@EnableLocator

@EnableManager

public class SpringBootApacheGeodeCacheServerApplication {

public static void main(String[] args) {

SpringApplication.run(SpringBootApacheGeodeCacheServerApplication.class, args);

}

}

Then you can use Gfsh to connect to and manage this server:

$ echo $GEMFIRE

/Users/jblum/pivdev/apache-geode-1.2.1

$ gfsh

_________________________ __

/ _____/ ______/ ______/ /____/ /

/ / __/ /___ /_____ / _____ /

/ /__/ / ____/ _____/ / / / /

/______/_/ /______/_/ /_/ 1.2.1

Monitor and Manage Apache Geode

gfsh>connect

Connecting to Locator at [host=localhost, port=10334] ..

Connecting to Manager at [host=10.0.0.121, port=1099] ..

Successfully connected to: [host=10.0.0.121, port=1099]

gfsh>list members

Name | Id

------------------------------------------- | --------------------------------------------------------------------------

SpringBootApacheGeodeCacheServerApplication | 10.0.0.121(SpringBootApacheGeodeCacheServerApplication:29798)<ec><v0>:1024

gfsh>describe member --name=SpringBootApacheGeodeCacheServerApplication

Name : SpringBootApacheGeodeCacheServerApplication

Id : 10.0.0.121(SpringBootApacheGeodeCacheServerApplication:29798)<ec><v0>:1024

Host : 10.0.0.121

Regions :

PID : 29798

Groups :

Used Heap : 168M

Max Heap : 3641M

Working Dir : /Users/jblum/pivdev/spring-boot-data-geode/spring-geode-docs/build

Log file : /Users/jblum/pivdev/spring-boot-data-geode/spring-geode-docs/build

Locators : localhost[10334]

Cache Server Information

Server Bind :

Server Port : 40404

Running : true

Client Connections : 0

You can even start additional servers in Gfsh. These additional servers connect to your Spring Boot configured and bootstrapped Apache Geode CacheServer application. These additional servers started in Gfsh know about the Spring Boot, Apache Geode server because of the embedded Locator service, which is running on localhost and listening on the default Locator port, 10334:

gfsh>start server --name=GfshServer --log-level=config --disable-default-server

Starting a Geode Server in /Users/jblum/pivdev/lab/GfshServer...

...

Server in /Users/jblum/pivdev/lab/GfshServer on 10.0.0.121 as GfshServer is currently online.

Process ID: 30031

Uptime: 3 seconds

Geode Version: 1.2.1

Java Version: 1.8.0_152

Log File: /Users/jblum/pivdev/lab/GfshServer/GfshServer.log

JVM Arguments: -Dgemfire.default.locators=10.0.0.121:127.0.0.1[10334] -Dgemfire.use-cluster-configuration=true -Dgemfire.start-dev-rest-api=false -Dgemfire.log-level=config -XX:OnOutOfMemoryError=kill -KILL %p -Dgemfire.launcher.registerSignalHandlers=true -Djava.awt.headless=true -Dsun.rmi.dgc.server.gcInterval=9223372036854775806

Class-Path: /Users/jblum/pivdev/apache-geode-1.2.1/lib/geode-core-1.2.1.jar:/Users/jblum/pivdev/apache-geode-1.2.1/lib/geode-dependencies.jar

gfsh>list members

Name | Id

------------------------------------------- | --------------------------------------------------------------------------

SpringBootApacheGeodeCacheServerApplication | 10.0.0.121(SpringBootApacheGeodeCacheServerApplication:29798)<ec><v0>:1024

GfshServer | 10.0.0.121(GfshServer:30031)<v1>:1025

Perhaps you want to start the other way around. You may need to connect a Spring Boot configured and bootstrapped Apache Geode server application to an existing cluster. You can start the cluster in Gfsh with the following commands (shown with partial typical output):

gfsh>start locator --name=GfshLocator --port=11235 --log-level=config

Starting a Geode Locator in /Users/jblum/pivdev/lab/GfshLocator...

...

Locator in /Users/jblum/pivdev/lab/GfshLocator on 10.0.0.121[11235] as GfshLocator is currently online.

Process ID: 30245

Uptime: 3 seconds

Geode Version: 1.2.1

Java Version: 1.8.0_152

Log File: /Users/jblum/pivdev/lab/GfshLocator/GfshLocator.log

JVM Arguments: -Dgemfire.log-level=config -Dgemfire.enable-cluster-configuration=true -Dgemfire.load-cluster-configuration-from-dir=false -Dgemfire.launcher.registerSignalHandlers=true -Djava.awt.headless=true -Dsun.rmi.dgc.server.gcInterval=9223372036854775806

Class-Path: /Users/jblum/pivdev/apache-geode-1.2.1/lib/geode-core-1.2.1.jar:/Users/jblum/pivdev/apache-geode-1.2.1/lib/geode-dependencies.jar

Successfully connected to: JMX Manager [host=10.0.0.121, port=1099]

Cluster configuration service is up and running.

gfsh>start server --name=GfshServer --log-level=config --disable-default-server

Starting a Geode Server in /Users/jblum/pivdev/lab/GfshServer...

....

Server in /Users/jblum/pivdev/lab/GfshServer on 10.0.0.121 as GfshServer is currently online.

Process ID: 30270

Uptime: 4 seconds

Geode Version: 1.2.1

Java Version: 1.8.0_152

Log File: /Users/jblum/pivdev/lab/GfshServer/GfshServer.log

JVM Arguments: -Dgemfire.default.locators=10.0.0.121[11235] -Dgemfire.use-cluster-configuration=true -Dgemfire.start-dev-rest-api=false -Dgemfire.log-level=config -XX:OnOutOfMemoryError=kill -KILL %p -Dgemfire.launcher.registerSignalHandlers=true -Djava.awt.headless=true -Dsun.rmi.dgc.server.gcInterval=9223372036854775806

Class-Path: /Users/jblum/pivdev/apache-geode-1.2.1/lib/geode-core-1.2.1.jar:/Users/jblum/pivdev/apache-geode-1.2.1/lib/geode-dependencies.jar

gfsh>list members

Name | Id

----------- | --------------------------------------------------

GfshLocator | 10.0.0.121(GfshLocator:30245:locator)<ec><v0>:1024

GfshServer | 10.0.0.121(GfshServer:30270)<v1>:1025

Then modify the SpringBootApacheGeodeCacheServerApplication class to connect to the existing cluster:

CacheServer Application connecting to an external cluster

@SpringBootApplication
@CacheServerApplication(name = "SpringBootApacheGeodeCacheServerApplication", locators = "localhost[11235]")
public class SpringBootApacheGeodeCacheServerApplication {

    public static void main(String[] args) {
        SpringApplication.run(SpringBootApacheGeodeCacheServerApplication.class, args);
    }
}

| Notice that the locators property of the @CacheServerApplication annotation on the SpringBootApacheGeodeCacheServerApplication class is configured with the host and port (localhost[11235]) on which the Locator was started by using Gfsh. |
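The locators value uses the host[port] notation, and the Gfsh output above shows several such entries joined by commas. As a rough illustration of that notation only, the following plain-Java sketch splits such a string into host and port pairs. The LocatorAddress class is a hypothetical helper written for this example; it is not part of SDG, SBDG, or Apache Geode, and it does not claim to mirror how those libraries parse the value.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper for illustration; not an SDG, SBDG, or Apache Geode class.
public final class LocatorAddress {

    // Matches a single "host[port]" entry, tolerating surrounding whitespace.
    private static final Pattern HOST_PORT =
        Pattern.compile("\\s*([^\\[\\],\\s]+)\\[(\\d{1,5})\\]\\s*");

    private final String host;
    private final int port;

    private LocatorAddress(String host, int port) {
        this.host = host;
        this.port = port;
    }

    public String getHost() {
        return host;
    }

    public int getPort() {
        return port;
    }

    // Parses a comma-separated list such as "localhost[11235],10.0.0.121[10334]".
    public static List<LocatorAddress> parse(String locators) {
        List<LocatorAddress> result = new ArrayList<>();
        for (String entry : locators.split(",")) {
            Matcher matcher = HOST_PORT.matcher(entry);
            if (!matcher.matches()) {
                throw new IllegalArgumentException("Expected host[port] but was: " + entry);
            }
            int port = Integer.parseInt(matcher.group(2));
            if (port < 1 || port > 65535) {
                throw new IllegalArgumentException("Port out of range: " + port);
            }
            result.add(new LocatorAddress(matcher.group(1), port));
        }
        return result;
    }

    public static void main(String[] args) {
        for (LocatorAddress locator : parse("10.99.199.24[11235],10.99.199.24[10334]")) {
            System.out.println(locator.getHost() + " listens on port " + locator.getPort());
        }
    }
}
```

A check like this can catch a mistyped locators value (for example, host:port instead of host[port]) before the application is started.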

After running your Spring Boot Apache Geode CacheServer application again and executing the list members command in Gfsh again, you should see output similar to the following:

gfsh>list members

Name | Id

------------------------------------------- | ----------------------------------------------------------------------

GfshLocator | 10.0.0.121(GfshLocator:30245:locator)<ec><v0>:1024

GfshServer | 10.0.0.121(GfshServer:30270)<v1>:1025

SpringBootApacheGeodeCacheServerApplication | 10.0.0.121(SpringBootApacheGeodeCacheServerApplication:30279)<v2>:1026

gfsh>describe member --name=SpringBootApacheGeodeCacheServerApplication

Name : SpringBootApacheGeodeCacheServerApplication

Id : 10.0.0.121(SpringBootApacheGeodeCacheServerApplication:30279)<v2>:1026

Host : 10.0.0.121

Regions :

PID : 30279

Groups :

Used Heap : 165M

Max Heap : 3641M

Working Dir : /Users/jblum/pivdev/spring-boot-data-geode/spring-geode-docs/build

Log file : /Users/jblum/pivdev/spring-boot-data-geode/spring-geode-docs/build

Locators : localhost[11235]

Cache Server Information

Server Bind :

Server Port : 40404

Running : true

Client Connections : 0

In both scenarios, the Spring Boot configured and bootstrapped Apache Geode server, the Gfsh Locator and Gfsh server formed a cluster.

While you can use either approach and Spring does not care, it is far more convenient to use Spring Boot and your IDE to form a small cluster while developing. Spring profiles make it far simpler and much faster to configure and start a small cluster.

Also, this approach enables rapidly prototyping, testing, and debugging your entire end-to-end application and system architecture right from the comfort and familiarity of your IDE. No additional tooling (such as Gfsh) or knowledge is required to get started quickly and easily. Just build and run.

| Be careful to vary your port numbers for the embedded services, like the CacheServer, Locators, and the Manager, especially if you start multiple instances on the same machine. Otherwise, you are likely to run into a java.net.BindException caused by port conflicts. |

| See the Running an Apache Geode cluster with Spring Boot from your IDE appendix for more details. |
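When several members run on one machine, it can help to check a port before assigning it. The following is a minimal sketch that uses only the JDK; the PortCheck class and its method names are hypothetical and exist only for this example. Note the inherent race: another process may take the port between the check and the actual start of the embedded service, so treat the result as a hint rather than a guarantee.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.ServerSocket;

// Hypothetical helper for illustration; uses only the JDK.
public final class PortCheck {

    // Returns true if a server socket can currently be bound to the given port.
    public static boolean isAvailable(int port) {
        try (ServerSocket socket = new ServerSocket(port)) {
            return true;
        } catch (IOException e) {
            // java.net.BindException (an IOException) signals that the port is in use.
            return false;
        }
    }

    // Asks the operating system for any currently free port.
    public static int findFreePort() {
        try (ServerSocket socket = new ServerSocket(0)) {
            return socket.getLocalPort();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("Port 10334 available: " + isAvailable(10334));
        System.out.println("A free port: " + findFreePort());
    }
}
```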

5.2. Building Locator Applications

In addition to ClientCache, CacheServer, and peer Cache applications, SDG, and by extension SBDG, now supports Spring Boot Apache Geode Locator applications.

An Apache Geode Locator is a location-based service or, more typically, a standalone process that lets clients locate a cluster of Apache Geode servers to manage data. Many cache clients can connect to the same cluster to share data. Running multiple clients is common in a Microservices architecture where you need to scale-up the number of application instances to satisfy the demand.

An Apache Geode Locator is also used by joining members of an existing cluster to scale-out and increase capacity of the logically pooled system resources (memory, CPU, network and disk). A Locator maintains metadata that is sent to the clients to enable such capabilities as single-hop data access to route data access operations to the data node in the cluster maintaining the data of interest. A Locator also maintains load information for servers in the cluster, which enables the load to be uniformly distributed across the cluster while also providing fail-over services to a redundant member if the primary fails. A Locator provides many more benefits, and we encourage you to read the documentation for more details.

As shown earlier, you can embed a Locator service within either a Spring Boot peer Cache or a CacheServer application by using the SDG @EnableLocator annotation:

@SpringBootApplication
@CacheServerApplication
@EnableLocator
class SpringBootCacheServerWithEmbeddedLocatorApplication {
    // ...
}

However, it is more common to start standalone Locator JVM processes. This is useful when you want to increase the resiliency of your cluster in the face of network and process failures, which are bound to happen. If a Locator JVM process crashes or gets severed from the cluster due to a network failure or partition, having multiple Locators provides a higher degree of availability (HA) through redundancy.

Even if all Locators in the cluster go down, the cluster still remains intact. You cannot add more peer members (that is, scale-up the number of data nodes in the cluster) or connect any more clients, but the cluster is fine. If all the locators in the cluster go down, it is safe to restart them only after a thorough diagnosis.

| Once a client receives metadata about the cluster of servers, all data-access operations are sent directly to servers in the cluster, not a Locator. Therefore, existing, connected clients remain connected and operable. |

To configure and bootstrap Spring Boot Apache Geode Locator applications as standalone JVM processes, use the following configuration:

@SpringBootApplication
@LocatorApplication
class SpringBootApacheGeodeLocatorApplication {
    // ...
}

Instead of using the @EnableLocator annotation, you now use the @LocatorApplication annotation.

The @LocatorApplication annotation works in the same way as the @PeerCacheApplication and @CacheServerApplication annotations, bootstrapping an Apache Geode process and overriding the default ClientCache instance provided by SBDG.

| If your @SpringBootApplication class is annotated with @LocatorApplication, it must be a Locator and not a ClientCache, CacheServer, or peer Cache application. If you need the application to function as a peer Cache, perhaps with embedded CacheServer components and an embedded Locator, you need to follow the approach shown earlier: using the @EnableLocator annotation with either the @PeerCacheApplication or @CacheServerApplication annotation. |

With our Spring Boot Apache Geode Locator application, we can connect both Spring Boot configured and bootstrapped peer members (peer Cache, CacheServer and Locator applications) as well as Gfsh started Locators and servers.

First, we need to start two Locators by using our Spring Boot Apache Geode Locator application class:

@UseLocators
@SpringBootApplication
@LocatorApplication(name = "SpringBootApacheGeodeLocatorApplication")
public class SpringBootApacheGeodeLocatorApplication {

    public static void main(String[] args) {

        new SpringApplicationBuilder(SpringBootApacheGeodeLocatorApplication.class)
            .web(WebApplicationType.NONE)
            .build()
            .run(args);

        System.err.println("Press <enter> to exit!");

        new Scanner(System.in).nextLine();
    }

    @Configuration
    @EnableManager(start = true)
    @Profile("manager")
    @SuppressWarnings("unused")
    static class ManagerConfiguration { }

}

We also need to vary the configuration for each Locator application instance.

Apache Geode requires each peer member in the cluster to be uniquely named. We can set the name of the Locator by using the spring.data.gemfire.locator.name SDG property set as a JVM System Property in your IDE’s run configuration profile for the main application class: -Dspring.data.gemfire.locator.name=SpringLocatorOne. We name the second Locator application instance SpringLocatorTwo.

Additionally, we must vary the port numbers that the Locators use to listen for connections. By default, an Apache Geode Locator listens on port 10334. We can set the Locator port by using the spring.data.gemfire.locator.port SDG property.

For our first Locator application instance (SpringLocatorOne), we also enable the "manager" profile so that we can connect to the Locator by using Gfsh.

Our IDE run configuration profile for our first Locator application instance appears as:

-server -ea -Dspring.profiles.active=manager -Dspring.data.gemfire.locator.name=SpringLocatorOne -Dlogback.log.level=INFO

And our IDE run configuration profile for our second Locator application instance appears as:

-server -ea -Dspring.profiles.active= -Dspring.data.gemfire.locator.name=SpringLocatorTwo -Dspring.data.gemfire.locator.port=11235 -Dlogback.log.level=INFO
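To sanity-check a run configuration, it can be useful to print what the -D arguments resolve to inside the JVM. The following plain-Java sketch only mimics the lookup with the JDK's System property API; the LocatorSettings class is hypothetical, and the way SDG itself resolves these properties is not shown here. The default port of 10334 is taken from the text above.

```java
// Hypothetical helper for illustration; it reads JVM System properties only.
public final class LocatorSettings {

    static final String NAME_PROPERTY = "spring.data.gemfire.locator.name";
    static final String PORT_PROPERTY = "spring.data.gemfire.locator.port";

    // Default Locator port, as stated in this section.
    static final int DEFAULT_LOCATOR_PORT = 10334;

    // Describes the Locator name and port that the current JVM arguments resolve to.
    public static String describe() {
        String name = System.getProperty(NAME_PROPERTY, "<unnamed>");
        int port = Integer.getInteger(PORT_PROPERTY, DEFAULT_LOCATOR_PORT);
        return name + " -> port " + port;
    }

    public static void main(String[] args) {
        System.out.println(describe());
    }
}
```

Running this class with the same VM options as a Locator instance shows at a glance whether two instances would end up with the same name or port.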

You should see log output similar to the following when you start a Locator application instance:

. ____ _ __ _ _

/\\ / ___'_ __ _ _(_)_ __ __ _ \ \ \ \

( ( )\___ | '_ | '_| | '_ \/ _` | \ \ \ \

\\/ ___)| |_)| | | | | || (_| | ) ) ) )

' |____| .__|_| |_|_| |_\__, | / / / /

=========|_|==============|___/=/_/_/_/

:: Spring Boot :: (v2.2.0.BUILD-SNAPSHOT)

2019-09-01 11:02:48,707 INFO .SpringBootApacheGeodeLocatorApplication: 55 - Starting SpringBootApacheGeodeLocatorApplication on jblum-mbpro-2.local with PID 30077 (/Users/jblum/pivdev/spring-boot-data-geode/spring-geode-docs/out/production/classes started by jblum in /Users/jblum/pivdev/spring-boot-data-geode/spring-geode-docs/build)

2019-09-01 11:02:48,711 INFO .SpringBootApacheGeodeLocatorApplication: 651 - No active profile set, falling back to default profiles: default

2019-09-01 11:02:49,374 INFO xt.annotation.ConfigurationClassEnhancer: 355 - @Bean method LocatorApplicationConfiguration.exclusiveLocatorApplicationBeanFactoryPostProcessor is non-static and returns an object assignable to Spring's BeanFactoryPostProcessor interface. This will result in a failure to process annotations such as @Autowired, @Resource and @PostConstruct within the method's declaring @Configuration class. Add the 'static' modifier to this method to avoid these container lifecycle issues; see @Bean javadoc for complete details.

2019-09-01 11:02:49,919 INFO ode.distributed.internal.InternalLocator: 530 - Starting peer location for Distribution Locator on 10.99.199.24[11235]

2019-09-01 11:02:49,925 INFO ode.distributed.internal.InternalLocator: 498 - Starting Distribution Locator on 10.99.199.24[11235]

2019-09-01 11:02:49,926 INFO distributed.internal.tcpserver.TcpServer: 242 - Locator was created at Sun Sep 01 11:02:49 PDT 2019

2019-09-01 11:02:49,927 INFO distributed.internal.tcpserver.TcpServer: 243 - Listening on port 11235 bound on address 0.0.0.0/0.0.0.0

2019-09-01 11:02:49,928 INFO ternal.membership.gms.locator.GMSLocator: 162 - GemFire peer location service starting. Other locators: localhost[10334] Locators preferred as coordinators: true Network partition detection enabled: true View persistence file: /Users/jblum/pivdev/spring-boot-data-geode/spring-geode-docs/build/locator11235view.dat

2019-09-01 11:02:49,928 INFO ternal.membership.gms.locator.GMSLocator: 416 - Peer locator attempting to recover from localhost/127.0.0.1:10334

2019-09-01 11:02:49,963 INFO ternal.membership.gms.locator.GMSLocator: 422 - Peer locator recovered initial membership of View[10.99.199.24(SpringLocatorOne:30043:locator)<ec><v0>:41000|0] members: [10.99.199.24(SpringLocatorOne:30043:locator)<ec><v0>:41000]

2019-09-01 11:02:49,963 INFO ternal.membership.gms.locator.GMSLocator: 407 - Peer locator recovered state from LocatorAddress [socketInetAddress=localhost/127.0.0.1:10334, hostname=localhost, isIpString=false]

2019-09-01 11:02:49,965 INFO ode.distributed.internal.InternalLocator: 644 - Starting distributed system

2019-09-01 11:02:50,007 INFO he.geode.internal.logging.LoggingSession: 82 -

---------------------------------------------------------------------------

Licensed to the Apache Software Foundation (ASF) under one or more

contributor license agreements. See the NOTICE file distributed with this

work for additional information regarding copyright ownership.

The ASF licenses this file to You under the Apache License, Version 2.0

(the "License"); you may not use this file except in compliance with the

License. You may obtain a copy of the License at

https://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS, WITHOUT

WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the

License for the specific language governing permissions and limitations

under the License.

---------------------------------------------------------------------------

Build-Date: 2019-04-19 11:49:13 -0700

Build-Id: onichols 0

Build-Java-Version: 1.8.0_192

Build-Platform: Mac OS X 10.14.4 x86_64

Product-Name: Apache Geode

Product-Version: 1.9.0

Source-Date: 2019-04-19 11:11:31 -0700

Source-Repository: release/1.9.0

Source-Revision: c0a73d1cb84986d432003bd12e70175520e63597

Native version: native code unavailable

Running on: 10.99.199.24/10.99.199.24, 8 cpu(s), x86_64 Mac OS X 10.13.6

Communications version: 100

Process ID: 30077

User: jblum

Current dir: /Users/jblum/pivdev/spring-boot-data-geode/spring-geode-docs/build

Home dir: /Users/jblum

Command Line Parameters:

-ea

-Dspring.profiles.active=

-Dspring.data.gemfire.locator.name=SpringLocatorTwo

-Dspring.data.gemfire.locator.port=11235

-Dlogback.log.level=INFO

-javaagent:/Applications/IntelliJ IDEA 19 CE.app/Contents/lib/idea_rt.jar=51961:/Applications/IntelliJ IDEA 19 CE.app/Contents/bin

-Dfile.encoding=UTF-8

Class Path:

...

..

.

2019-09-01 11:02:54,112 INFO ode.distributed.internal.InternalLocator: 661 - Locator started on 10.99.199.24[11235]

2019-09-01 11:02:54,113 INFO ode.distributed.internal.InternalLocator: 769 - Starting server location for Distribution Locator on 10.99.199.24[11235]

2019-09-01 11:02:54,134 INFO nt.internal.locator.wan.LocatorDiscovery: 138 - Locator discovery task exchanged locator information 10.99.199.24[11235] with localhost[10334]: {-1=[10.99.199.24[10334]]}.

2019-09-01 11:02:54,242 INFO .SpringBootApacheGeodeLocatorApplication: 61 - Started SpringBootApacheGeodeLocatorApplication in 6.137470354 seconds (JVM running for 6.667)

Press <enter> to exit!

Next, start up the second Locator application instance (you should see log output similar to the preceding listing). Then connect to the cluster of Locators by using Gfsh:

$ echo $GEMFIRE

/Users/jblum/pivdev/apache-geode-1.9.0

$ gfsh

_________________________ __

/ _____/ ______/ ______/ /____/ /

/ / __/ /___ /_____ / _____ /

/ /__/ / ____/ _____/ / / / /

/______/_/ /______/_/ /_/ 1.9.0

Monitor and Manage Apache Geode

gfsh>connect

Connecting to Locator at [host=localhost, port=10334] ..

Connecting to Manager at [host=10.99.199.24, port=1099] ..

Successfully connected to: [host=10.99.199.24, port=1099]

gfsh>list members

Name | Id

---------------- | ------------------------------------------------------------------------

SpringLocatorOne | 10.99.199.24(SpringLocatorOne:30043:locator)<ec><v0>:41000 [Coordinator]

SpringLocatorTwo | 10.99.199.24(SpringLocatorTwo:30077:locator)<ec><v1>:41001

By using our SpringBootApacheGeodeCacheServerApplication main class from the previous section, we can configure and bootstrap an Apache Geode CacheServer application with Spring Boot and connect it to our cluster of Locators:

@SpringBootApplication
@CacheServerApplication(name = "SpringBootApacheGeodeCacheServerApplication")
@SuppressWarnings("unused")
public class SpringBootApacheGeodeCacheServerApplication {

    public static void main(String[] args) {

        new SpringApplicationBuilder(SpringBootApacheGeodeCacheServerApplication.class)
            .web(WebApplicationType.NONE)
            .build()
            .run(args);
    }

    @Configuration
    @UseLocators
    @Profile("clustered")
    static class ClusteredConfiguration { }

    @Configuration
    @EnableLocator
    @EnableManager(start = true)
    @Profile("!clustered")
    static class LonerConfiguration { }

}

To do so, enable the "clustered" profile by using an IDE run profile configuration similar to:

-server -ea -Dspring.profiles.active=clustered -Dspring.data.gemfire.name=SpringServer -Dspring.data.gemfire.cache.server.port=41414 -Dlogback.log.level=INFO

After the server starts up, you should see the new peer member in the cluster:

gfsh>list members

Name | Id

---------------- | ------------------------------------------------------------------------

SpringLocatorOne | 10.99.199.24(SpringLocatorOne:30043:locator)<ec><v0>:41000 [Coordinator]

SpringLocatorTwo | 10.99.199.24(SpringLocatorTwo:30077:locator)<ec><v1>:41001

SpringServer | 10.99.199.24(SpringServer:30216)<v2>:41002

Finally, we can even start additional Locators and servers connected to this cluster by using Gfsh:

gfsh>start locator --name=GfshLocator --port=12345 --log-level=config

Starting a Geode Locator in /Users/jblum/pivdev/lab/GfshLocator...

......

Locator in /Users/jblum/pivdev/lab/GfshLocator on 10.99.199.24[12345] as GfshLocator is currently online.

Process ID: 30259

Uptime: 5 seconds

Geode Version: 1.9.0

Java Version: 1.8.0_192

Log File: /Users/jblum/pivdev/lab/GfshLocator/GfshLocator.log

JVM Arguments: -Dgemfire.default.locators=10.99.199.24[11235],10.99.199.24[10334] -Dgemfire.enable-cluster-configuration=true -Dgemfire.load-cluster-configuration-from-dir=false -Dgemfire.log-level=config -Dgemfire.launcher.registerSignalHandlers=true -Djava.awt.headless=true -Dsun.rmi.dgc.server.gcInterval=9223372036854775806

Class-Path: /Users/jblum/pivdev/apache-geode-1.9.0/lib/geode-core-1.9.0.jar:/Users/jblum/pivdev/apache-geode-1.9.0/lib/geode-dependencies.jar

gfsh>start server --name=GfshServer --server-port=45454 --log-level=config

Starting a Geode Server in /Users/jblum/pivdev/lab/GfshServer...

...

Server in /Users/jblum/pivdev/lab/GfshServer on 10.99.199.24[45454] as GfshServer is currently online.

Process ID: 30295

Uptime: 2 seconds

Geode Version: 1.9.0

Java Version: 1.8.0_192

Log File: /Users/jblum/pivdev/lab/GfshServer/GfshServer.log

JVM Arguments: -Dgemfire.default.locators=10.99.199.24[11235],10.99.199.24[12345],10.99.199.24[10334] -Dgemfire.start-dev-rest-api=false -Dgemfire.use-cluster-configuration=true -Dgemfire.log-level=config -XX:OnOutOfMemoryError=kill -KILL %p -Dgemfire.launcher.registerSignalHandlers=true -Djava.awt.headless=true -Dsun.rmi.dgc.server.gcInterval=9223372036854775806

Class-Path: /Users/jblum/pivdev/apache-geode-1.9.0/lib/geode-core-1.9.0.jar:/Users/jblum/pivdev/apache-geode-1.9.0/lib/geode-dependencies.jar

gfsh>list members

Name | Id

---------------- | ------------------------------------------------------------------------

SpringLocatorOne | 10.99.199.24(SpringLocatorOne:30043:locator)<ec><v0>:41000 [Coordinator]

SpringLocatorTwo | 10.99.199.24(SpringLocatorTwo:30077:locator)<ec><v1>:41001

SpringServer | 10.99.199.24(SpringServer:30216)<v2>:41002

GfshLocator | 10.99.199.24(GfshLocator:30259:locator)<ec><v3>:41003

GfshServer | 10.99.199.24(GfshServer:30295)<v4>:41004

You must be careful to vary the ports and name of your peer members appropriately. Spring, and Spring Boot for Apache Geode (SBDG) in particular, make doing so easy.

5.3. Building Manager Applications

As discussed in the previous sections, you can enable a Spring Boot configured and bootstrapped Apache Geode peer member node in the cluster to function as a Manager.

An Apache Geode Manager is a peer member node in the cluster that runs the management service, letting the cluster be managed and monitored with JMX-based tools, such as Gfsh, JConsole, or JVisualVM. Any tool using the JMX API can connect to and manage an Apache Geode cluster for whatever purpose.
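As a rough illustration of what "any tool using the JMX API" means, the following JDK-only sketch builds a JMX service URL and opens a connection with the standard javax.management.remote API. The ManagerJmxUrl class is hypothetical. The URL layout shown is the conventional RMI-based JMX form, and port 1099 is the Manager port that appears in the Gfsh output in this chapter; whether your Manager accepts exactly this URL (and whether it requires credentials or SSL) is an assumption you should verify for your cluster.

```java
import java.io.IOException;
import java.net.MalformedURLException;
import javax.management.MBeanServerConnection;
import javax.management.remote.JMXConnector;
import javax.management.remote.JMXConnectorFactory;
import javax.management.remote.JMXServiceURL;

// Hypothetical helper for illustration; uses only the standard JMX remote API.
public final class ManagerJmxUrl {

    // Builds a conventional RMI-based JMX service URL for the given host and port.
    public static JMXServiceURL forManager(String host, int port) {
        try {
            return new JMXServiceURL("service:jmx:rmi:///jndi/rmi://" + host + ":" + port + "/jmxrmi");
        } catch (MalformedURLException cause) {
            throw new IllegalArgumentException("Invalid JMX host or port", cause);
        }
    }

    // Connects to the JMX endpoint and returns the number of registered MBeans.
    // This call fails with an IOException if no Manager is listening at the URL.
    public static int countMBeans(JMXServiceURL url) throws IOException {
        try (JMXConnector connector = JMXConnectorFactory.connect(url)) {
            MBeanServerConnection connection = connector.getMBeanServerConnection();
            return connection.getMBeanCount();
        }
    }

    public static void main(String[] args) {
        // Only prints the URL; call countMBeans(..) once a Manager is running.
        System.out.println(forManager("localhost", 1099));
    }
}
```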

Like Locators, the cluster may have more than one Manager for redundancy. Only server-side, peer member nodes in the cluster may function as Managers. Therefore, a ClientCache application cannot be a Manager.

To create a Manager, use the SDG @EnableManager annotation.

The three primary uses of the @EnableManager annotation to create a Manager are:

1 - CacheServer Manager Application

@SpringBootApplication
@CacheServerApplication(name = "CacheServerManagerApplication")
@EnableManager(start = true)
class CacheServerManagerApplication {
    // ...
}

2 - Peer Cache Manager Application

@SpringBootApplication
@PeerCacheApplication(name = "PeerCacheManagerApplication")
@EnableManager(start = true)
class PeerCacheManagerApplication {
    // ...
}

3 - Locator Manager Application

@SpringBootApplication
@LocatorApplication(name = "LocatorManagerApplication")
@EnableManager(start = true)
class LocatorManagerApplication {
    // ...
}

#1 creates a peer Cache instance with a CacheServer component that accepts client connections along with an embedded Manager that lets JMX clients connect.

#2 creates only a peer Cache instance along with an embedded Manager. Because it is a peer Cache with no CacheServer component, clients cannot connect to this node. It is merely a server managing data.

#3 creates a Locator instance with an embedded Manager.

In all configuration arrangements, the Manager is configured to start immediately.

| See the Javadoc for the @EnableManager annotation for additional configuration options. |

As of Apache Geode 1.11.0, you must include additional Apache Geode dependencies on your Spring Boot application classpath to make your application a proper Apache Geode Manager in the cluster, particularly if you also enable the embedded HTTP service in the Manager.

The required dependencies are:

runtime "org.apache.geode:geode-http-service"
runtime "org.apache.geode:geode-web"
runtime "org.springframework.boot:spring-boot-starter-jetty"

The embedded HTTP service (implemented with the Eclipse Jetty Servlet Container) runs the Management (Admin) REST API, which is used by Apache Geode tooling, such as Gfsh, to connect to an Apache Geode cluster over HTTP. It also enables the Apache Geode Pulse Monitoring Tool (and Web application) to run.

Even if you do not start the embedded HTTP service, a Manager still requires the geode-http-service, geode-web and spring-boot-starter-jetty dependencies.

Optionally, you may also include the geode-pulse dependency, as follows:

runtime "org.apache.geode:geode-pulse"

The geode-pulse dependency is only required if you want the Manager to automatically start the Apache Geode Pulse Monitoring Tool. Pulse enables you to view the nodes of your Apache Geode cluster and monitor them in real time.

6. Auto-configuration

The following Spring Framework, Spring Data for Apache Geode (SDG) and Spring Session for Apache Geode (SSDG) annotations are implicitly declared by Spring Boot for Apache Geode’s (SBDG) auto-configuration.

-

@ClientCacheApplication

-

@EnableGemfireCaching (alternatively, Spring Framework’s @EnableCaching)

-

@EnableContinuousQueries

-

@EnableGemfireFunctions

-

@EnableGemfireFunctionExecutions

-

@EnableGemfireRepositories

-

@EnableLogging

-

@EnablePdx

-

@EnableSecurity

-

@EnableSsl

-

@EnableGemFireHttpSession

| This means that you need not explicitly declare any of these annotations on your @SpringBootApplication class, since they are provided by SBDG already. The only reason you would explicitly declare any of these annotations is to override Spring Boot’s, and in particular, SBDG’s auto-configuration. Otherwise, doing so is unnecessary. |

| You should read the chapter in Spring Boot’s reference documentation on auto-configuration. |

| You should review the chapter in Spring Data for Apache Geode’s (SDG) reference documentation on annotation-based configuration. For a quick reference and overview of annotation-based configuration, see the annotations quickstart. |

| See the corresponding sample guide and code to see Spring Boot auto-configuration for Apache Geode in action. |

6.1. Customizing Auto-configuration

You might ask, “How do I customize the auto-configuration provided by SBDG if I do not explicitly declare the annotation?”

For example, you may want to customize the member’s name. You know that the @ClientCacheApplication annotation provides the name attribute so that you can set the client member’s name. However, SBDG has already implicitly declared the @ClientCacheApplication annotation through auto-configuration on your behalf. What do you do?

In this case, SBDG supplies a few additional annotations.

For example, to set the (client or peer) member’s name, you can use the @UseMemberName annotation:

@SpringBootApplication
@UseMemberName("MyMemberName")
class SpringBootApacheGeodeClientCacheApplication {
    //...
}

Alternatively, you could set the spring.application.name or the spring.data.gemfire.name property in Spring Boot application.properties:

spring.application.name property

# Spring Boot application.properties
spring.application.name = MyMemberName

spring.data.gemfire.cache.name property

# Spring Boot application.properties
spring.data.gemfire.cache.name = MyMemberName

| The spring.data.gemfire.cache.name property is an alias for the spring.data.gemfire.name property. Both properties do the same thing (set the name of the client or peer member node). |

In general, there are three ways to customize configuration, even in the context of SBDG’s auto-configuration:

-

Using annotations provided by SBDG for common and popular concerns (such as naming client or peer members with the @UseMemberName annotation or enabling durable clients with the @EnableDurableClient annotation).

-

Using well-known and documented properties (such as spring.application.name, or spring.data.gemfire.name, or spring.data.gemfire.cache.name).

-

Using configurers (such as ClientCacheConfigurer).

| For the complete list of documented properties, see Configuration Metadata Reference. |

6.2. Disabling Auto-configuration

Spring Boot’s reference documentation explains how to disable Spring Boot auto-configuration.

Disabling Auto-configuration also explains how to disable SBDG auto-configuration.

In a nutshell, if you want to disable any auto-configuration provided by either Spring Boot or SBDG, declare your intent in the @SpringBootApplication annotation:

@SpringBootApplication(
    exclude = { DataSourceAutoConfiguration.class, PdxAutoConfiguration.class }
)
class SpringBootApacheGeodeClientCacheApplication {
    // ...
}

| Make sure you understand what you are doing when you disable auto-configuration. |

6.3. Overriding Auto-configuration

Overriding explains how to override SBDG auto-configuration.

In a nutshell, if you want to override the default auto-configuration provided by SBDG, you must annotate your @SpringBootApplication class with your intent.

For example, suppose you want to configure and bootstrap an Apache Geode CacheServer application (a peer, not a client):

Overriding ClientCache Auto-Configuration by configuring & bootstrapping a CacheServer application

@SpringBootApplication
@CacheServerApplication
class SpringBootApacheGeodeCacheServerApplication {
    // ...
}

You can also explicitly declare the @ClientCacheApplication annotation on your @SpringBootApplication class:

@SpringBootApplication
@ClientCacheApplication
class SpringBootApacheGeodeClientCacheApplication {
    // ...
}

You are overriding SBDG’s auto-configuration of the ClientCache instance. As a result, you have now also implicitly consented to being responsible for other aspects of the configuration (such as security).

Why does that happen?

It happens because, in certain cases, such as security, certain aspects of the configuration (such as SSL) must be configured before the cache instance is created. Also, Spring Boot always applies user configuration before auto-configuration, partially to determine what needs to be auto-configured in the first place.

| Make sure you understand what you are doing when you override auto-configuration. |

6.4. Replacing Auto-configuration

See the Spring Boot reference documentation on replacing auto-configuration.

6.5. Understanding Auto-configuration

This section covers the SBDG provided auto-configuration classes that correspond to the SDG annotations in more detail.

To review the complete list of SBDG auto-configuration classes, see Complete Set of Auto-configuration Classes.

6.5.1. @ClientCacheApplication

| The SBDG ClientCacheAutoConfiguration class corresponds to the SDG @ClientCacheApplication annotation. |

As explained in Getting Started, SBDG starts with the opinion that application developers primarily build Apache Geode client applications by using Spring Boot.

Technically, this means building Spring Boot applications with an Apache Geode ClientCache instance connected to a dedicated cluster of Apache Geode servers that manage the data as part of a client/server topology.

By way of example, this means that you need not explicitly declare and annotate your @SpringBootApplication class with SDG’s @ClientCacheApplication annotation, as the following example shows:

@SpringBootApplication
@ClientCacheApplication
class SpringBootApacheGeodeClientCacheApplication {
    // ...
}

SBDG’s provided auto-configuration class is already meta-annotated with SDG’s @ClientCacheApplication annotation.

Therefore, you need only do:

@SpringBootApplication
class SpringBootApacheGeodeClientCacheApplication {
    // ...
}

| See SDG’s reference documentation for more details on Apache Geode cache applications and client/server applications in particular. |

6.5.2. @EnableGemfireCaching

| The SBDG CachingProviderAutoConfiguration class corresponds to the SDG @EnableGemfireCaching annotation. |

If you used the core Spring Framework to configure Apache Geode as a caching provider in Spring’s Cache Abstraction, you would need to do the following:

@SpringBootApplication
@EnableCaching
class CachingUsingApacheGeodeConfiguration {

    @Bean
    GemfireCacheManager cacheManager(GemFireCache cache) {

        GemfireCacheManager cacheManager = new GemfireCacheManager();

        cacheManager.setCache(cache);

        return cacheManager;
    }
}

If you use Spring Data for Apache Geode’s @EnableGemfireCaching annotation, you can simplify the preceding configuration:

@SpringBootApplication
@EnableGemfireCaching
class CachingUsingApacheGeodeConfiguration {

}

Also, if you use SBDG, you need only do:

@SpringBootApplication
class CachingUsingApacheGeodeConfiguration {

}

This lets you focus on the areas in your application that would benefit from caching without having to enable the plumbing. You can then demarcate the service methods in your application that are good candidates for caching:

@Service
class CustomerService {

    @Cacheable("CustomersByName")
    Customer findBy(String name) {
        // ...
    }
}

| See documentation on caching for more details. |
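Conceptually, a cached service method follows the look-aside pattern: consult the cache first and invoke the expensive operation only on a miss. The following plain-Java sketch illustrates that idea with a ConcurrentHashMap standing in for an Apache Geode Region. The LookAsideCache class is hypothetical and is not how Spring’s Cache Abstraction or SBDG is implemented; it only shows the behavior you can expect from a method marked for caching.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

// Hypothetical illustration of look-aside caching; not a Spring or Apache Geode class.
public final class LookAsideCache<K, V> {

    // Stands in for the cache (for example, a Region named "CustomersByName").
    private final Map<K, V> cache = new ConcurrentHashMap<>();

    // The expensive operation, such as a database query or remote call.
    private final Function<K, V> loader;

    // Counts how often the expensive operation was actually invoked.
    private final AtomicInteger loads = new AtomicInteger();

    public LookAsideCache(Function<K, V> loader) {
        this.loader = loader;
    }

    // Returns the cached value, invoking the loader only on a cache miss.
    public V get(K key) {
        return cache.computeIfAbsent(key, k -> {
            loads.incrementAndGet();
            return loader.apply(k);
        });
    }

    public int getLoadCount() {
        return loads.get();
    }

    public static void main(String[] args) {
        LookAsideCache<String, String> customers = new LookAsideCache<>(name -> "Customer:" + name);
        customers.get("Jon Doe"); // miss: the loader runs
        customers.get("Jon Doe"); // hit: served from the cache
        System.out.println("Loader invocations: " + customers.getLoadCount());
    }
}
```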

6.5.3. @EnableContinuousQueries

| The SBDG ContinuousQueryAutoConfiguration class corresponds to the SDG @EnableContinuousQueries annotation. |

Without having to enable anything, you can annotate your application (POJO) component method(s) with the SDG @ContinuousQuery annotation to register a CQ and start receiving events. The method acts as a CqEvent handler or, in Apache Geode’s terminology, the method is an implementation of the CqListener interface.

@Component
class MyCustomerApplicationContinuousQueries {

    @ContinuousQuery(query = "SELECT customer.* "
        + " FROM /Customers customer"
        + " WHERE customer.getSentiment().name().equalsIgnoreCase('UNHAPPY')")
    public void handleUnhappyCustomers(CqEvent event) {
        // ...
    }
}

As the preceding example shows, you can define the events you are interested in receiving by using an OQL query with a finely tuned query predicate that describes the events of interest, and then implement the handler method to process the events (such as applying a credit to the customer’s account and following up by email).

| See Continuous Query for more details. |
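The idea behind a continuous query can be sketched in plain Java: a standing predicate is evaluated against each change, and matching changes are pushed to a handler instead of being polled for. The ObservableRegion class below is a hypothetical stand-in, not Apache Geode API, and it simplifies heavily (it only reacts to puts on a local map, whereas a real CQ is evaluated on the servers and delivered to the client).

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;
import java.util.function.Predicate;

// Conceptual stand-in for a Region with continuous queries. Hypothetical; not Apache Geode API.
final class ObservableRegion<K, V> {

    // One registered "continuous query": a standing predicate and the handler it feeds.
    private static final class Registration<T> {

        final Predicate<T> predicate;
        final Consumer<T> handler;

        Registration(Predicate<T> predicate, Consumer<T> handler) {
            this.predicate = predicate;
            this.handler = handler;
        }
    }

    private final Map<K, V> data = new ConcurrentHashMap<>();
    private final List<Registration<V>> registrations = new CopyOnWriteArrayList<>();

    // Registers a predicate (the query) together with a handler (the listener).
    void register(Predicate<V> predicate, Consumer<V> handler) {
        registrations.add(new Registration<>(predicate, handler));
    }

    // Stores the value, then pushes it to every handler whose predicate matches.
    void put(K key, V value) {
        data.put(key, value);
        for (Registration<V> registration : registrations) {
            if (registration.predicate.test(value)) {
                registration.handler.accept(value);
            }
        }
    }
}
```

With SBDG, the predicate corresponds to the OQL query in the @ContinuousQuery annotation and the handler corresponds to the annotated method.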

6.5.4. @EnableGemfireFunctionExecutions & @EnableGemfireFunctions

| The SBDG FunctionExecutionAutoConfiguration class corresponds to both the SDG @EnableGemfireFunctionExecutions and SDG @EnableGemfireFunctions annotations. |

Whether you need to execute or implement a Function, SBDG detects the Function definition and auto-configures it appropriately for use in your Spring Boot application. You need only define the Function execution or implementation in a package below the main @SpringBootApplication class:

package example.app.functions;

@OnRegion(region = "Accounts")
interface MyCustomerApplicationFunctions {

    void applyCredit(Customer customer);
}

Then you can inject the Function execution into any application component and use it:

package example.app.service;

@Service
class CustomerService {

    @Autowired
    private MyCustomerApplicationFunctions customerFunctions;

    void analyzeCustomerSentiment(Customer customer) {
        // ...
        this.customerFunctions.applyCredit(customer);
        // ...
    }
}

The same pattern applies to Function implementations, except that in the implementation case, SBDG registers the Function implementation for use (that is, to be called by a Function execution).

Doing so lets you focus on defining the logic required by your application and not worry about how Functions are registered, called, and so on. SBDG handles this concern for you.

| Function implementations are typically defined and registered on the server-side. |

| See Function Implementations & Executions for more details. |
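One way to picture how an interface with no implementation can still be injected and called is a dynamic proxy that routes each method call to a registered function of the same name. The following plain-Java sketch uses the JDK’s java.lang.reflect.Proxy for that purpose. Everything in it (FunctionExecutionSketch, CreditFunctions, the registry map) is hypothetical and is not SDG’s or SBDG’s actual code; in a real application the call travels to the Apache Geode servers rather than to a local map.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;
import java.util.Map;
import java.util.function.Function;

// Conceptual sketch only. Hypothetical names; not SDG or Apache Geode API.
final class FunctionExecutionSketch {

    // Hypothetical execution interface; each method name doubles as a function id.
    interface CreditFunctions {
        Object applyCredit(Object customer);
    }

    // Builds a proxy that forwards every interface method call to the function registered
    // under the method's name. Methods inherited from Object (such as toString) are not
    // special-cased in this sketch and would fail the registry lookup.
    static <T> T createExecution(Class<T> type, Map<String, Function<Object[], Object>> registry) {

        InvocationHandler handler = (proxy, method, args) -> {
            Function<Object[], Object> function = registry.get(method.getName());
            if (function == null) {
                throw new IllegalStateException("No function registered with id " + method.getName());
            }
            return function.apply(args == null ? new Object[0] : args);
        };

        return type.cast(Proxy.newProxyInstance(type.getClassLoader(), new Class<?>[] { type }, handler));
    }
}
```

The registry plays the part of the server-side Function implementations, and the proxy plays the part of the Function execution that your application components inject.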

6.5.5. @EnableGemfireRepositories

| The SBDG GemFireRepositoriesAutoConfigurationRegistrar class corresponds to the SDG @EnableGemfireRepositories annotation. |

As with Functions, you need concern yourself only with the data access operations (such as basic CRUD and simple queries) required by your application to carry out its operation, not with how to create and perform them (for example, Region.get(key) and Region.put(key, obj)) or execute them (for example, Query.execute(arguments)).

Start by defining your Spring Data Repository:

package example.app.repo;

interface CustomerRepository extends CrudRepository<Customer, Long> {

    List<Customer> findBySentimentEqualTo(Sentiment sentiment);
}

Then you can inject the Repository into an application component and use it:

package example.app.service;

@Service
class CustomerService {

    @Autowired
    private CustomerRepository repository;

    public void processCustomersWithSentiment(Sentiment sentiment) {

        this.repository.findBySentimentEqualTo(sentiment)
            .forEach(customer -> { /* ... */ });

        // ...
    }
}

Your application-specific Repository simply needs to be declared in a package below the main @SpringBootApplication class. Again, you are focusing only on the data access operations and queries required to carry out the operations of your application, nothing more.

| See Spring Data Repositories for more details. |

6.5.6. @EnableLogging

| The SBDG LoggingAutoConfiguration class corresponds to the SDG @EnableLogging annotation. |

Logging is an essential application concern for understanding what is happening in the system, along with when and where events occurred. By default, SBDG auto-configures logging for Apache Geode with the default log-level, “config”.

You can change any aspect of logging, such as the log-level, in Spring Boot application.properties:

# Spring Boot application.properties
spring.data.gemfire.cache.log-level=debug

| The spring.data.gemfire.logging.level property is an alias for spring.data.gemfire.cache.log-level. |

You can also configure other aspects, such as the log file size and disk space limits for the filesystem location used to store the Apache Geode log files at runtime.
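As a sketch of those other aspects, the following properties name a log file and set size limits. The property names are assumed to mirror the attributes of SDG’s @EnableLogging annotation (logFile, logFileSizeLimit, and logDiskSpaceLimit) and should be verified against the SDG version you use. The file name and the limits (expressed in megabytes) are illustrative values only.

```properties
# Spring Boot application.properties
# Assumed property names; verify against your SDG version. Values are examples only.
spring.data.gemfire.logging.log-file=geode-client.log
spring.data.gemfire.logging.log-file-size-limit=10
spring.data.gemfire.logging.log-disk-space-limit=100
```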

Under the hood, Apache Geode’s logging is based on Log4j. Therefore, you can configure Apache Geode logging to use any logging provider (such as Logback) and configuration metadata appropriate for that logging provider so long as you supply the necessary adapter between Log4j and whatever logging system you use. For instance, if you include org.springframework.boot:spring-boot-starter-logging, you are using Logback and you will need the org.apache.logging.log4j:log4j-to-slf4j adapter.

6.5.7. @EnablePdx

| The SBDG PdxSerializationAutoConfiguration class corresponds to the SDG @EnablePdx annotation. |

Any time you need to send an object over the network or overflow or persist an object to disk, your application domain model object must be serializable. It would be painful to have to implement java.io.Serializable in every one of your application domain model objects (such as Customer) that would potentially need to be serialized.

Furthermore, using Java Serialization may not be ideal (it may not be the most portable or efficient solution) in all cases or even possible in other cases (such as when you use a third party library over which you have no control).
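To make the Java Serialization requirement concrete, the following plain-Java sketch tries to write two objects with the JDK’s ObjectOutputStream: one whose class does not implement java.io.Serializable and one whose class does. The class names (JavaSerializationCheck, PlainCustomer, SerializableCustomer) are hypothetical and exist only for this illustration; no Apache Geode API is involved.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.NotSerializableException;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;

// Illustration of the java.io.Serializable requirement. Hypothetical types; JDK only.
final class JavaSerializationCheck {

    // A domain type that does NOT implement java.io.Serializable.
    static final class PlainCustomer {
        final String name;
        PlainCustomer(String name) { this.name = name; }
    }

    // The same shape, but explicitly opted in to Java Serialization.
    static final class SerializableCustomer implements Serializable {
        private static final long serialVersionUID = 1L;
        final String name;
        SerializableCustomer(String name) { this.name = name; }
    }

    // Returns true if the object can be written with standard Java Serialization.
    static boolean canSerialize(Object value) {
        try (ObjectOutputStream out = new ObjectOutputStream(new ByteArrayOutputStream())) {
            out.writeObject(value);
            return true;
        }
        catch (NotSerializableException cause) {
            // Thrown when the object's class does not implement Serializable.
            return false;
        }
        catch (IOException cause) {
            throw new UncheckedIOException(cause);
        }
    }
}
```

Here canSerialize returns false for a PlainCustomer and true for a SerializableCustomer, which is the per-class burden that PDX is meant to remove.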

In these situations, you need to be able to send your object anywhere, anytime without unduly requiring the class type to be serializable and exist on the classpath in every place it is sent. Indeed, the final destination may not even be a Java application. This is where Apache Geode PDX Serialization steps in to help.

However, you need not figure out how to configure PDX to identify the application class types that need to be serialized. Instead, you can define your class type as follows:

@Region("Customers")
class Customer {

    @Id
    private Long id;

    @Indexed
    private String name;

    // ...
}

SBDG’s auto-configuration handles the rest.

| See Data Serialization with PDX for more details. |

6.5.8. @EnableSecurity

| The SBDG ClientSecurityAutoConfiguration class and PeerSecurityAutoConfiguration class correspond to the SDG @EnableSecurity annotation, but they apply security (specifically, authentication and authorization (auth) configuration) for both clients and servers. |

Configuring your Spring Boot, Apache Geode ClientCache application to properly authenticate with a cluster of secure Apache Geode servers is as simple as setting a username and a password in Spring Boot application.properties:

# Spring Boot application.properties

spring.data.gemfire.security.username=Batman

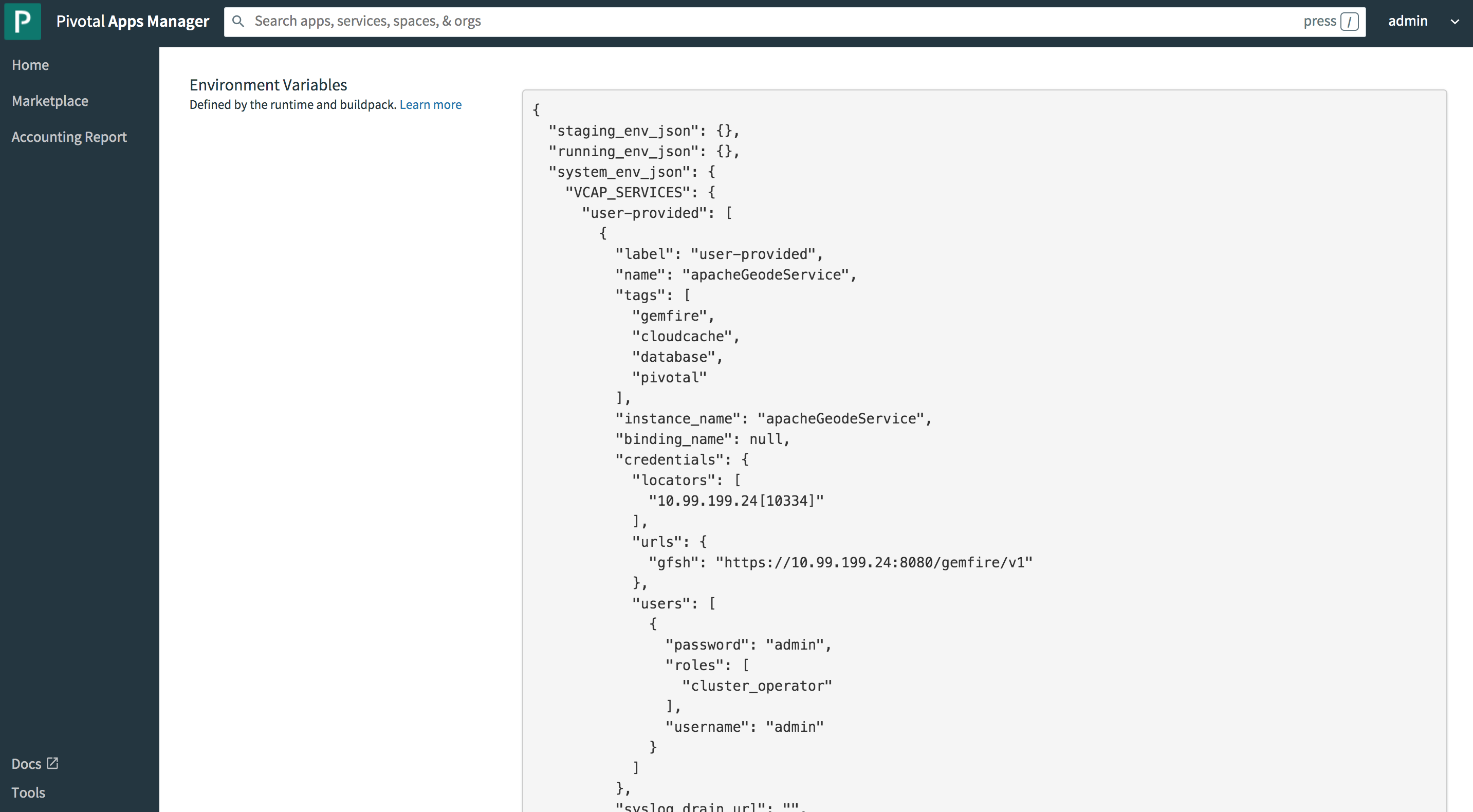

spring.data.gemfire.security.password=r0b!n5ucks| Authentication is even easier to configure in a managed environment, such as PCF when using PCC. You need not do anything. |

Authorization is configured on the server-side and is made simple with SBDG and the help of Apache Shiro. Of course, this assumes you use SBDG to configure and bootstrap your Apache Geode cluster in the first place, which is even easier with SBDG. See Running an Apache Geode cluster with Spring Boot from your IDE.

| See Security for more details. |

6.5.9. @EnableSsl

| The SBDG SslAutoConfiguration class corresponds to the SDG @EnableSsl annotation. |

Configuring SSL for secure transport (TLS) between your Spring Boot, Apache Geode ClientCache application and an Apache Geode cluster can be a real problem, especially to get right from the start. So, it is something that SBDG makes as simple as possible.

You can supply a trusted.keystore file containing the certificates in a well-known location (such as the root of your application classpath), and SBDG’s auto-configuration steps in to handle the rest.

This is useful during development, but we highly recommend using a more secure procedure (such as integrating with a secure credential store like LDAP, CredHub or Vault) when deploying your Spring Boot application to production.

| See Transport Layer Security using SSL for more details. |

6.5.10. @EnableGemFireHttpSession

| The SBDG SpringSessionAutoConfiguration class corresponds to the SSDG @EnableGemFireHttpSession annotation. |

Configuring Apache Geode to serve as the (HTTP) session state caching provider by using Spring Session requires only that you include the correct starter, spring-geode-starter-session:

<dependency>
    <groupId>org.springframework.geode</groupId>
    <artifactId>spring-geode-starter-session</artifactId>
    <version>1.7.5</version>
</dependency>

With Spring Session, and specifically Spring Session for Apache Geode (SSDG), on the classpath of your Spring Boot, Apache Geode ClientCache Web application, you can manage your (HTTP) session state with Apache Geode. No further configuration is needed. SBDG auto-configuration detects Spring Session on the application classpath and does the rest.

| See Spring Session for more details. |

6.5.11. RegionTemplateAutoConfiguration

The SBDG RegionTemplateAutoConfiguration class has no corresponding SDG annotation. However, the auto-configuration of a GemfireTemplate for every Apache Geode Region defined and declared in your Spring Boot application is still supplied by SBDG.

For example, you can define a Region by using:

@Configuration
class GeodeConfiguration {

    @Bean("Customers")
    ClientRegionFactoryBean<Long, Customer> customersRegion(GemFireCache cache) {

        ClientRegionFactoryBean<Long, Customer> customersRegion =
            new ClientRegionFactoryBean<>();

        customersRegion.setCache(cache);
        customersRegion.setShortcut(ClientRegionShortcut.PROXY);

        return customersRegion;
    }
}

Alternatively, you can define the Customers Region by using @EnableEntityDefinedRegions:

@EnableEntityDefinedRegions@Configuration

@EnableEntityDefinedRegion(basePackageClasses = Customer.class)

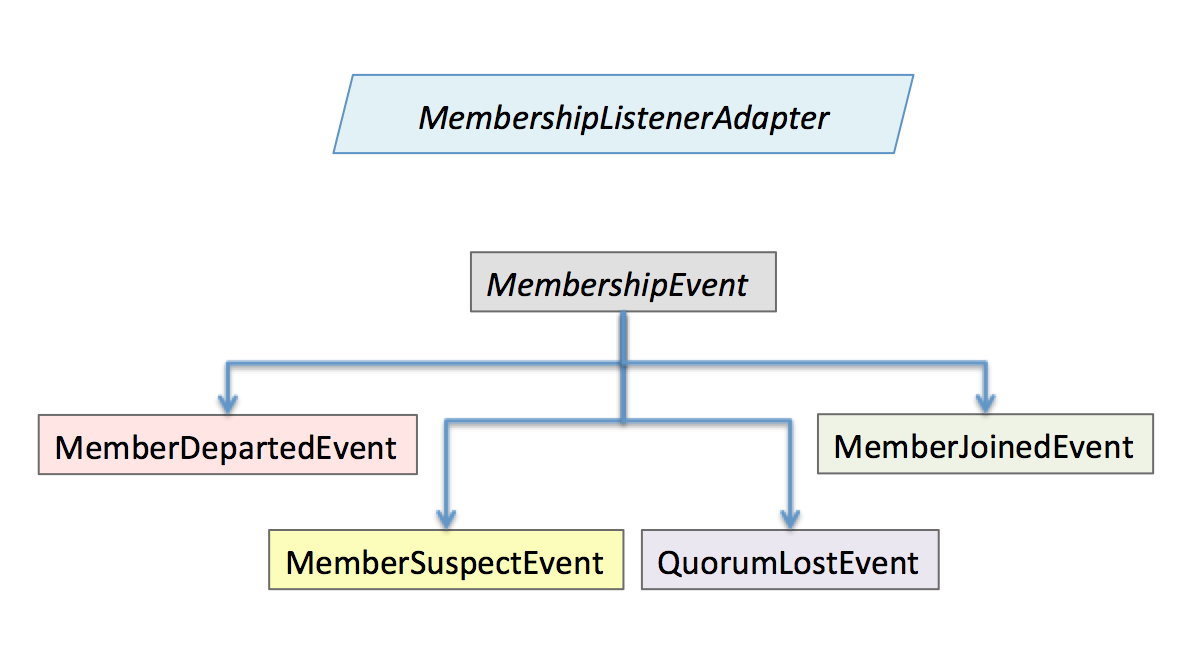

class GeodeConfiguration {