© 2009 - 2023 VMware, Inc. All rights reserved.

Copies of this document may be made for your own use and for distribution to others, provided that you do not charge any fee for such copies and further provided that each copy contains this Copyright Notice, whether distributed in print or electronically.

Preface

This chapter includes:

1. Requirements

This section details the compatible Java and Spring Framework versions.

2. Code Conventions

Spring Framework 2.0 introduced support for namespaces, which simplifies the XML configuration of the application context and lets Spring Integration provide broad namespace support.

In this reference guide, the int namespace prefix is used for Spring Integration’s core namespace support.

Each Spring Integration adapter type (also called a module) provides its own namespace, which is configured by using the following convention: the int- prefix followed by the module name (for example, int-stream).

The following example shows the int, int-webflux, and int-stream namespaces in use:

<?xml version="1.0" encoding="UTF-8"?>
<beans xmlns="http://www.springframework.org/schema/beans"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xmlns:int="http://www.springframework.org/schema/integration"
    xmlns:int-webflux="http://www.springframework.org/schema/integration/webflux"
    xmlns:int-stream="http://www.springframework.org/schema/integration/stream"
    xsi:schemaLocation="
        http://www.springframework.org/schema/beans
        https://www.springframework.org/schema/beans/spring-beans.xsd
        http://www.springframework.org/schema/integration
        https://www.springframework.org/schema/integration/spring-integration.xsd
        http://www.springframework.org/schema/integration/webflux
        https://www.springframework.org/schema/integration/webflux/spring-integration-webflux.xsd
        http://www.springframework.org/schema/integration/stream
        https://www.springframework.org/schema/integration/stream/spring-integration-stream.xsd">
    …
</beans>

For a detailed explanation regarding Spring Integration’s namespace support, see Namespace Support.

| The namespace prefix can be freely chosen. You may even choose not to use any namespace prefixes at all. Therefore, you should apply the convention that best suits your application. Be aware, though, that SpringSource Tool Suite™ (STS) uses the same namespace conventions for Spring Integration as used in this reference guide. |

3. Conventions in This Guide

In some cases, to aid formatting when specifying long fully qualified class names, we shorten org.springframework to o.s and org.springframework.integration to o.s.i, such as with o.s.i.transaction.TransactionSynchronizationFactory.

4. Feedback and Contributions

For how-to questions or diagnosing or debugging issues, we suggest using Stack Overflow. If you’re fairly certain that there is a problem in Spring Integration or would like to suggest a feature, please use GitHub Issues.

If you have a solution in mind or a suggested fix, you can submit a pull request on GitHub. However, please keep in mind that, for all but the most trivial issues, we expect a ticket to be filed in the issue tracker, where discussions take place and leave a record for future reference.

For more details, see the guidelines in the CONTRIBUTING file on the top-level project page.

5. Getting Started

If you are just getting started with Spring Integration, you may want to begin by creating a Spring Boot-based application. Spring Boot provides a quick (and opinionated) way to create a production-ready Spring-based application. It is based on the Spring Framework, favors convention over configuration, and is designed to get you up and running as quickly as possible.

You can use start.spring.io to generate a basic project (add integration as a dependency) or follow one of the "Getting Started" guides, such as Integrating Data.

As well as being easier to digest, these guides are very task focused, and most of them are based on Spring Boot.

What’s New?

For those who are already familiar with Spring Integration, this chapter provides a brief overview of the new features of version 6.1.

If you are interested in the changes and features that were introduced in earlier versions, see the Change History.

6. What’s New in Spring Integration 6.1?

If you are interested in more details, see the Issue Tracker tickets that were resolved as part of the 6.1 development process.

In general the project has been moved to the latest dependency versions.

6.1. New Components

6.1.1. Zip Support

The Zip Spring Integration Extension project has been migrated as the spring-integration-zip module.

See Zip Support for more information.

6.1.2. ContextHolderRequestHandlerAdvice

The ContextHolderRequestHandlerAdvice lets you store a value from a request message in some context around MessageHandler execution.

See Context Holder Advice for more information.

6.1.3. The handleReactive() operator for Java DSL

The IntegrationFlow can now end with a convenient handleReactive(ReactiveMessageHandler) operator.

See ReactiveMessageHandler for more information.

6.1.4. PartitionedChannel

A new PartitionedChannel has been introduced to process messages with the same partition key in the same thread.

See PartitionedChannel for more information.
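The idea of keeping all messages with the same partition key on the same thread can be illustrated with plain JDK executors. The following is a minimal sketch under stated assumptions, not the framework's PartitionedChannel: the class name PartitionSketch and its methods are hypothetical, and the key is mapped to a partition by its hash code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical illustration; not the framework's PartitionedChannel implementation.
final class PartitionSketch {

    private final List<ExecutorService> partitions = new ArrayList<>();

    PartitionSketch(int partitionCount) {
        for (int i = 0; i < partitionCount; i++) {
            // One single-threaded executor per partition keeps per-key ordering.
            partitions.add(Executors.newSingleThreadExecutor());
        }
    }

    // Runs the task on the thread that owns the partition for this key and
    // completes with the name of the thread that executed it.
    CompletableFuture<String> submit(Object partitionKey, Runnable task) {
        int index = Math.floorMod(partitionKey.hashCode(), partitions.size());
        return CompletableFuture.supplyAsync(() -> {
            task.run();
            return Thread.currentThread().getName();
        }, partitions.get(index));
    }

    void shutdown() {
        partitions.forEach(ExecutorService::shutdown);
    }

    public static void main(String[] args) {
        PartitionSketch channel = new PartitionSketch(4);
        String first = channel.submit("order-42", () -> { }).join();
        String second = channel.submit("order-42", () -> { }).join();
        // Both tasks share a key, so they were handled by the same thread.
        System.out.println(first.equals(second));
        channel.shutdown();
    }
}
```

Different keys may still share a thread when their hash codes fall into the same partition; the sketch only guarantees that one key never moves between threads.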

6.2. General Changes

-

Added support for transforming to/from Protocol Buffers. See Protocol Buffers Transformers for more information.

-

The

MessageFilternow emits a warning into logs when message is silently discarded and dropped. See Filter for more information. -

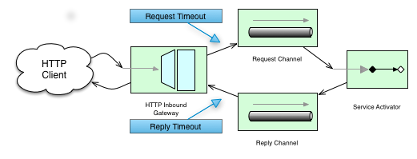

The default timeout for send and receive operations in gateways and replying channel adapters has been changed from infinity to

30seconds. Only one left as a1second is areceiveTimeoutforPollingConsumerto not block a scheduler thread too long and let other queued tasks to be performed with theTaskScheduler. -

The

IntegrationComponentSpec.get()method has been deprecated with removal planned for the next version. SinceIntegrationComponentSpecis aFactoryBean, its bean definition must stay as is without any target object resolutions. The Java DSL and the framework by itself will manage theIntegrationComponentSpeclifecycle. See Java DSL for more information. -

The

AbstractMessageProducingHandleris marked as anasyncby default if its output channel is configured to aReactiveStreamsSubscribableChannel. See Asynchronous Service Activator for more information.

6.3. Web Sockets Changes

A ClientWebSocketContainer can now be configured with a predefined URI instead of a combination of uriTemplate and uriVariables.

See WebSocket Overview for more information.

6.4. JMS Changes

The JmsInboundGateway, via its ChannelPublishingJmsMessageListener, can now be configured with a replyToExpression to resolve a reply destination against the request message at runtime.

See JMS Inbound Gateway for more information.

6.5. Mail Changes

The (previously deprecated) ImapIdleChannelAdapter.sendingTaskExecutor property has been removed in favor of asynchronous message processing downstream in the flow.

See Mail-receiving Channel Adapter for more information.

6.6. Files Changes

The FileReadingMessageSource now exposes watchMaxDepth and watchDirPredicate options for the WatchService.

See WatchServiceDirectoryScanner for more information.

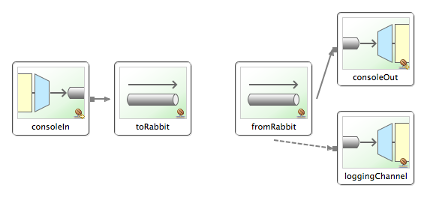

6.7. AMQP Changes

The Java DSL API for Rabbit Streams (the RabbitStream factory) exposes additional properties for simple configurations.

See RabbitMQ Stream Queue Support for more information.

6.8. JDBC Changes

The DefaultLockRepository now exposes setters for insert, update and renew queries.

See JDBC Lock Registry for more information.

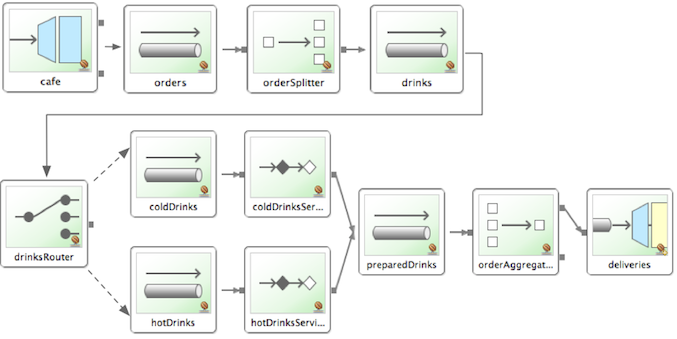

Overview of Spring Integration Framework

Spring Integration provides an extension of the Spring programming model to support the well known Enterprise Integration Patterns. It enables lightweight messaging within Spring-based applications and supports integration with external systems through declarative adapters. Those adapters provide a higher level of abstraction over Spring’s support for remoting, messaging, and scheduling.

Spring Integration’s primary goal is to provide a simple model for building enterprise integration solutions while maintaining the separation of concerns that is essential for producing maintainable, testable code.

7. Spring Integration Overview

This chapter provides a high-level introduction to Spring Integration’s core concepts and components. It includes some programming tips to help you make the most of Spring Integration.

7.1. Background

One of the key themes of the Spring Framework is Inversion of Control (IoC). In its broadest sense, this means that the framework handles responsibilities on behalf of the components that are managed within its context. The components themselves are simplified, because they are relieved of those responsibilities. For example, dependency injection relieves the components of the responsibility of locating or creating their dependencies. Likewise, aspect-oriented programming relieves business components of generic cross-cutting concerns by modularizing them into reusable aspects. In each case, the end result is a system that is easier to test, understand, maintain, and extend.

Furthermore, the Spring framework and portfolio provide a comprehensive programming model for building enterprise applications. Developers benefit from the consistency of this model and especially from the fact that it is based upon well established best practices, such as programming to interfaces and favoring composition over inheritance. Spring’s simplified abstractions and powerful support libraries boost developer productivity while simultaneously increasing the level of testability and portability.

Spring Integration is motivated by these same goals and principles. It extends the Spring programming model into the messaging domain and builds upon Spring’s existing enterprise integration support to provide an even higher level of abstraction. It supports message-driven architectures where inversion of control applies to runtime concerns, such as when certain business logic should run and where the response should be sent. It supports routing and transformation of messages so that different transports and different data formats can be integrated without impacting testability. In other words, the messaging and integration concerns are handled by the framework. Business components are further isolated from the infrastructure, and developers are relieved of complex integration responsibilities.

As an extension of the Spring programming model, Spring Integration provides a wide variety of configuration options, including annotations, XML with namespace support, XML with generic “bean” elements, and direct usage of the underlying API. That API is based upon well-defined strategy interfaces and non-invasive, delegating adapters. Spring Integration’s design is inspired by the recognition of a strong affinity between common patterns within Spring and the well known patterns described in Enterprise Integration Patterns, by Gregor Hohpe and Bobby Woolf (Addison Wesley, 2004). Developers who have read that book should be immediately comfortable with the Spring Integration concepts and terminology.

7.2. Goals and Principles

Spring Integration is motivated by the following goals:

- Provide a simple model for implementing complex enterprise integration solutions.

- Facilitate asynchronous, message-driven behavior within a Spring-based application.

- Promote intuitive, incremental adoption for existing Spring users.

Spring Integration is guided by the following principles:

- Components should be loosely coupled for modularity and testability.

- The framework should enforce separation of concerns between business logic and integration logic.

- Extension points should be abstract in nature (but within well-defined boundaries) to promote reuse and portability.

7.3. Main Components

From a vertical perspective, a layered architecture facilitates separation of concerns, and interface-based contracts between layers promote loose coupling. Spring-based applications are typically designed this way, and the Spring framework and portfolio provide a strong foundation for following this best practice for the full stack of an enterprise application. Message-driven architectures add a horizontal perspective, yet these same goals are still relevant. Just as “layered architecture” is an extremely generic and abstract paradigm, messaging systems typically follow the similarly abstract “pipes-and-filters” model. The “filters” represent any components capable of producing or consuming messages, and the “pipes” transport the messages between filters so that the components themselves remain loosely-coupled. It is important to note that these two high-level paradigms are not mutually exclusive. The underlying messaging infrastructure that supports the “pipes” should still be encapsulated in a layer whose contracts are defined as interfaces. Likewise, the “filters” themselves should be managed within a layer that is logically above the application’s service layer, interacting with those services through interfaces in much the same way that a web tier would.

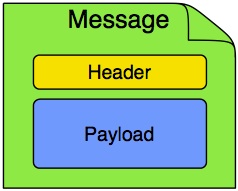

7.3.1. Message

In Spring Integration, a message is a generic wrapper for any Java object combined with metadata used by the framework while handling that object. It consists of a payload and headers. The payload can be of any type, and the headers hold commonly required information such as ID, timestamp, correlation ID, and return address. Headers are also used for passing values to and from connected transports. For example, when creating a message from a received file, the file name may be stored in a header to be accessed by downstream components. Likewise, if a message’s content is ultimately going to be sent by an outbound mail adapter, the various properties (to, from, cc, subject, and others) may be configured as message header values by an upstream component. Developers can also store any arbitrary key-value pairs in the headers.
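The payload-plus-headers structure can be pictured with a small plain-Java model. This is a simplified stand-in for illustration only: SimpleMessage, the of(...) factory, and the header keys used here are hypothetical, and the framework's own message types are richer than this sketch.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

// Simplified stand-in for illustration; not the framework's message types.
final class MessageSketch {

    static final class SimpleMessage<T> {
        private final T payload;
        private final Map<String, Object> headers;

        SimpleMessage(T payload, Map<String, ?> headers) {
            this.payload = payload;
            // Copy the headers so later changes to the caller's map are not visible.
            this.headers = Collections.unmodifiableMap(new HashMap<String, Object>(headers));
        }

        T payload() {
            return payload;
        }

        Map<String, Object> headers() {
            return headers;
        }
    }

    // Wraps any object and adds the commonly required metadata next to custom headers.
    static <T> SimpleMessage<T> of(T payload, Map<String, ?> customHeaders) {
        Map<String, Object> headers = new HashMap<>(customHeaders);
        headers.put("id", UUID.randomUUID());
        headers.put("timestamp", System.currentTimeMillis());
        return new SimpleMessage<>(payload, headers);
    }

    public static void main(String[] args) {
        // A message created from a received file: the content is the payload,
        // the file name travels in a header for downstream components.
        SimpleMessage<byte[]> fileMessage =
                of(new byte[] {1, 2, 3}, Map.of("file_name", "orders.csv"));
        System.out.println(fileMessage.headers().get("file_name"));
    }
}
```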

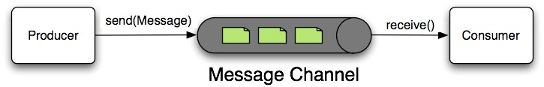

7.3.2. Message Channel

A message channel represents the “pipe” of a pipes-and-filters architecture. Producers send messages to a channel, and consumers receive messages from a channel. The message channel therefore decouples the messaging components and also provides a convenient point for interception and monitoring of messages.

A message channel may follow either point-to-point or publish-subscribe semantics. With a point-to-point channel, no more than one consumer can receive each message sent to the channel. Publish-subscribe channels, on the other hand, attempt to broadcast each message to all subscribers on the channel. Spring Integration supports both of these models.

Whereas “point-to-point” and “publish-subscribe” define the two options for how many consumers ultimately receive each message, there is another important consideration: Should the channel buffer messages? In Spring Integration, pollable channels are capable of buffering messages within a queue. The advantage of buffering is that it allows for throttling the inbound messages and thereby prevents overloading a consumer. However, as the name suggests, this also adds some complexity, since a consumer can only receive the messages from such a channel if a poller is configured. On the other hand, a consumer connected to a subscribable channel is simply message-driven. See Message Channel Implementations for a detailed discussion of the variety of channel implementations available in Spring Integration.
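The difference between the delivery models and between buffered and message-driven consumption can be sketched in a few lines of plain Java. The classes below are hypothetical teaching models, not the framework's channel implementations; the round-robin choice in the point-to-point case is an assumption of this sketch, and none of the classes is thread-safe.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.function.Consumer;

// Hypothetical models for illustration; not the framework's channel classes.
final class ChannelSketch {

    // Point-to-point and subscribable: each message reaches exactly one subscriber.
    static final class PointToPoint<T> {
        private final List<Consumer<T>> subscribers = new ArrayList<>();
        private int next;

        void subscribe(Consumer<T> subscriber) {
            subscribers.add(subscriber);
        }

        void send(T message) {
            if (subscribers.isEmpty()) {
                throw new IllegalStateException("no subscribers");
            }
            Consumer<T> target = subscribers.get(next);
            next = (next + 1) % subscribers.size(); // rotate through subscribers
            target.accept(message);
        }
    }

    // Publish-subscribe: every subscriber receives every message.
    static final class PublishSubscribe<T> {
        private final List<Consumer<T>> subscribers = new ArrayList<>();

        void subscribe(Consumer<T> subscriber) {
            subscribers.add(subscriber);
        }

        void send(T message) {
            subscribers.forEach(subscriber -> subscriber.accept(message));
        }
    }

    // Pollable: messages wait in a queue until a consumer asks for one.
    static final class Pollable<T> {
        private final Queue<T> queue = new ArrayDeque<>();

        void send(T message) {
            queue.add(message);
        }

        T receive() {
            return queue.poll(); // null when nothing is buffered
        }
    }

    public static void main(String[] args) {
        Pollable<String> buffered = new Pollable<>();
        buffered.send("first");
        buffered.send("second");
        // Nothing is delivered until something polls the channel.
        System.out.println(buffered.receive());
    }
}
```

With the subscribable variants, send(...) invokes the consumer directly, which is what makes such a consumer message-driven; with the pollable variant, something else has to call receive() on a schedule.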

7.3.3. Message Endpoint

One of the primary goals of Spring Integration is to simplify the development of enterprise integration solutions through inversion of control. This means that you should not have to implement consumers and producers directly, and you should not even have to build messages and invoke send or receive operations on a message channel. Instead, you should be able to focus on your specific domain model with an implementation based on plain objects. Then, by providing declarative configuration, you can “connect” your domain-specific code to the messaging infrastructure provided by Spring Integration. The components responsible for these connections are message endpoints. This does not mean that you should necessarily connect your existing application code directly. Any real-world enterprise integration solution requires some amount of code focused upon integration concerns such as routing and transformation. The important thing is to achieve separation of concerns between the integration logic and the business logic. In other words, as with the Model-View-Controller (MVC) paradigm for web applications, the goal should be to provide a thin but dedicated layer that translates inbound requests into service layer invocations and then translates service layer return values into outbound replies. The next section provides an overview of the message endpoint types that handle these responsibilities, and, in upcoming chapters, you can see how Spring Integration’s declarative configuration options provide a non-invasive way to use each of these.

7.4. Message Endpoints

A Message Endpoint represents the “filter” of a pipes-and-filters architecture. As mentioned earlier, the endpoint’s primary role is to connect application code to the messaging framework and to do so in a non-invasive manner. In other words, the application code should ideally have no awareness of the message objects or the message channels. This is similar to the role of a controller in the MVC paradigm. Just as a controller handles HTTP requests, the message endpoint handles messages. Just as controllers are mapped to URL patterns, message endpoints are mapped to message channels. The goal is the same in both cases: isolate application code from the infrastructure. These concepts and all the patterns that follow are discussed at length in the Enterprise Integration Patterns book. Here, we provide only a high-level description of the main endpoint types supported by Spring Integration and the roles associated with those types. The chapters that follow elaborate and provide sample code as well as configuration examples.

7.4.1. Message Transformer

A message transformer is responsible for converting a message’s content or structure and returning the modified message.

Probably the most common type of transformer is one that converts the payload of the message from one format to another (such as from XML to java.lang.String).

Similarly, a transformer can add, remove, or modify the message’s header values.

7.4.2. Message Filter

A message filter determines whether a message should be passed to an output channel at all.

This simply requires a boolean test method that may check for a particular payload content type, a property value, the presence of a header, or other conditions.

If the message is accepted, it is sent to the output channel.

If not, it is dropped (or, for a more severe implementation, an Exception could be thrown).

Message filters are often used in conjunction with a publish-subscribe channel, where multiple consumers may receive the same message and use the criteria of the filter to narrow down the set of messages to be processed.

| Be careful not to confuse the generic use of “filter” within the pipes-and-filters architectural pattern with this specific endpoint type that selectively narrows down the messages flowing between two channels. The pipes-and-filters concept of a “filter” matches more closely with Spring Integration’s message endpoint: any component that can be connected to a message channel in order to send or receive messages. |
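The accept-or-drop decision of a message filter can be sketched as follows. This is a plain-Java illustration under stated assumptions, not the framework's filter class: FilterSketch and its throwOnRejection flag are hypothetical names, and the output channel is modeled as a simple Consumer.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Predicate;

// Hypothetical illustration of the filter endpoint; not framework API.
final class FilterSketch<T> {

    private final Predicate<T> selector;      // the boolean test method
    private final Consumer<T> outputChannel;  // where accepted messages go
    private final boolean throwOnRejection;   // the "more severe" variant

    FilterSketch(Predicate<T> selector, Consumer<T> outputChannel, boolean throwOnRejection) {
        this.selector = selector;
        this.outputChannel = outputChannel;
        this.throwOnRejection = throwOnRejection;
    }

    void handle(T message) {
        if (selector.test(message)) {
            outputChannel.accept(message);
        } else if (throwOnRejection) {
            throw new IllegalStateException("Message rejected: " + message);
        }
        // Otherwise the message is silently dropped.
    }

    public static void main(String[] args) {
        List<String> accepted = new ArrayList<>();
        FilterSketch<String> ordersOnly =
                new FilterSketch<>(payload -> payload.startsWith("order"), accepted::add, false);
        ordersOnly.handle("order-1");
        ordersOnly.handle("invoice-7"); // dropped
        System.out.println(accepted);
    }
}
```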

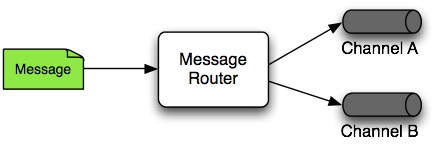

7.4.3. Message Router

A message router is responsible for deciding what channel or channels (if any) should receive the message next. Typically, the decision is based upon the message’s content or the metadata available in the message headers. A message router is often used as a dynamic alternative to a statically configured output channel on a service activator or other endpoint capable of sending reply messages. Likewise, a message router provides a proactive alternative to the reactive message filters used by multiple subscribers, as described earlier.
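A content-based routing decision can be sketched in plain Java as a function that maps a message to a channel key. The names below (RouterSketch, addChannel, route) are hypothetical and the sketch selects at most one channel, whereas the text above also allows several; it is not the framework's router implementation.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;
import java.util.function.Function;

// Hypothetical illustration of a router endpoint; not framework API.
final class RouterSketch<T> {

    private final Function<T, String> channelKey; // decision based on content or headers
    private final Map<String, Consumer<T>> channels = new HashMap<>();

    RouterSketch(Function<T, String> channelKey) {
        this.channelKey = channelKey;
    }

    void addChannel(String key, Consumer<T> channel) {
        channels.put(key, channel);
    }

    // Returns false when no channel was selected for the message.
    boolean route(T message) {
        Consumer<T> target = channels.get(channelKey.apply(message));
        if (target == null) {
            return false;
        }
        target.accept(message);
        return true;
    }

    public static void main(String[] args) {
        List<String> orders = new ArrayList<>();
        List<String> invoices = new ArrayList<>();
        RouterSketch<String> router =
                new RouterSketch<>(payload -> payload.substring(0, payload.indexOf('-')));
        router.addChannel("order", orders::add);
        router.addChannel("invoice", invoices::add);
        router.route("order-1");
        router.route("invoice-7");
        System.out.println(orders + " " + invoices);
    }
}
```

Compared with several filters behind a publish-subscribe channel, the decision here is made once, before delivery, which is the sense in which a router is the proactive alternative.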

7.4.4. Splitter

A splitter is another type of message endpoint whose responsibility is to accept a message from its input channel, split that message into multiple messages, and send each of those to its output channel. This is typically used for dividing a “composite” payload object into a group of messages containing the subdivided payloads.

7.4.5. Aggregator

Basically a mirror-image of the splitter, the aggregator is a type of message endpoint that receives multiple messages and combines them into a single message.

In fact, aggregators are often downstream consumers in a pipeline that includes a splitter.

Technically, the aggregator is more complex than a splitter, because it is required to maintain state (the messages to be aggregated), to decide when the complete group of messages is available, and to time out if necessary.

Furthermore, in case of a timeout, the aggregator needs to know whether to send the partial results, discard them, or send them to a separate channel.

Spring Integration provides a CorrelationStrategy, a ReleaseStrategy, and configurable settings for timeout, whether to send partial results upon timeout, and a discard channel.
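The interplay of splitting, correlation, release, and timeout can be sketched in plain Java. This is a conceptual model under stated assumptions: Part, Aggregator, and expire(...) are hypothetical names, the correlation key is a string carried by each part, and a group is released when it reaches the size recorded by the splitter. It does not use the framework's CorrelationStrategy or ReleaseStrategy interfaces.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Conceptual sketch only; the names below are hypothetical, not framework API.
final class SplitAggregateSketch {

    // One piece of a split payload, tagged with what an aggregator needs.
    static final class Part {
        final String correlationId; // which group this part belongs to
        final int groupSize;        // how many parts the splitter produced
        final String payload;

        Part(String correlationId, int groupSize, String payload) {
            this.correlationId = correlationId;
            this.groupSize = groupSize;
            this.payload = payload;
        }
    }

    // Splitter: one composite payload in, one message per element out.
    static List<Part> split(String correlationId, List<String> items) {
        List<Part> parts = new ArrayList<>();
        for (String item : items) {
            parts.add(new Part(correlationId, items.size(), item));
        }
        return parts;
    }

    // Aggregator: keeps state per correlation key until the group can be released.
    static final class Aggregator {
        private final Map<String, List<String>> groups = new HashMap<>();

        // Returns the combined payload once the group is complete, otherwise empty.
        Optional<List<String>> add(Part part) {
            List<String> group =
                    groups.computeIfAbsent(part.correlationId, key -> new ArrayList<>());
            group.add(part.payload);
            if (group.size() < part.groupSize) {
                return Optional.empty(); // release condition not met yet
            }
            return Optional.of(groups.remove(part.correlationId));
        }

        // Called when a group times out: hands back whatever arrived so far, so the
        // caller can send the partial result, discard it, or route it elsewhere.
        List<String> expire(String correlationId) {
            List<String> partial = groups.remove(correlationId);
            return partial != null ? partial : new ArrayList<>();
        }
    }

    public static void main(String[] args) {
        Aggregator aggregator = new Aggregator();
        for (Part part : split("order-1", List.of("a", "b", "c"))) {
            aggregator.add(part).ifPresent(System.out::println);
        }
    }
}
```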

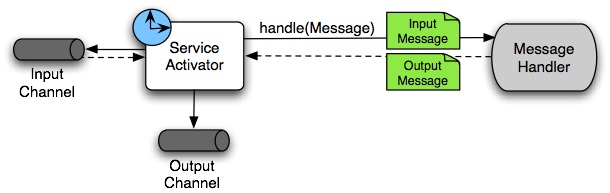

7.4.6. Service Activator

A service activator is a generic endpoint for connecting a service instance to the messaging system. The input message channel must be configured, and, if the service method to be invoked is capable of returning a value, an output message channel may also be provided.

| The output channel is optional, since each message may also provide its own 'Return Address' header. This same rule applies for all consumer endpoints. |

The service activator invokes an operation on some service object to process the request message, extracting the request message’s payload and converting it if necessary (that is, if the method does not expect a message-typed parameter). Whenever the service object’s method returns a value, that return value is likewise converted to a reply message if necessary (if it is not already a message type). That reply message is sent to the output channel. If no output channel has been configured, the reply is sent to the channel specified in the message’s “return address”, if available.
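The request-reply behavior described above can be sketched in plain Java. The model below is hypothetical (Msg and activate(...) are not framework types), channels are represented as Consumer instances, the return address is looked up under a header key chosen for this sketch, and throwing an exception when no destination can be found is an assumption of the sketch rather than something stated in this guide.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;
import java.util.function.Function;

// Hypothetical model of a service activator; not the framework implementation.
final class ServiceActivatorSketch {

    static final class Msg {
        final Object payload;
        final Map<String, Object> headers;

        Msg(Object payload, Map<String, Object> headers) {
            this.payload = payload;
            this.headers = Collections.unmodifiableMap(new HashMap<>(headers));
        }
    }

    // Invokes a plain service method with the payload and routes the reply.
    @SuppressWarnings("unchecked")
    static void activate(Msg request, Function<Object, Object> service, Consumer<Msg> outputChannel) {
        Object result = service.apply(request.payload); // the service never sees the message
        if (result == null) {
            return; // no reply produced
        }
        // Wrap the return value unless the service already returned a message.
        Msg reply = (result instanceof Msg) ? (Msg) result : new Msg(result, request.headers);
        if (outputChannel != null) {
            outputChannel.accept(reply);
            return;
        }
        // No output channel configured: fall back to the request's return address.
        Object returnAddress = request.headers.get("replyChannel");
        if (!(returnAddress instanceof Consumer)) {
            throw new IllegalStateException("no output channel and no return address");
        }
        ((Consumer<Msg>) returnAddress).accept(reply);
    }

    public static void main(String[] args) {
        List<Msg> replies = new ArrayList<>();
        Consumer<Msg> replyChannel = replies::add;
        Map<String, Object> headers = new HashMap<>();
        headers.put("replyChannel", replyChannel);
        activate(new Msg("hello", headers), payload -> ((String) payload).toUpperCase(), null);
        System.out.println(replies.get(0).payload);
    }
}
```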

A request-reply service activator endpoint connects a target object’s method to input and output Message Channels.

| As discussed earlier, in Message Channel, channels can be pollable or subscribable. In the preceding diagram, this is depicted by the “clock” symbol and the solid arrow (poll) and the dotted arrow (subscribe). |

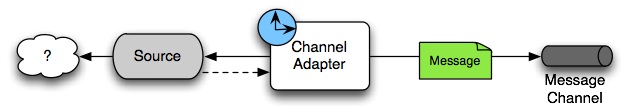

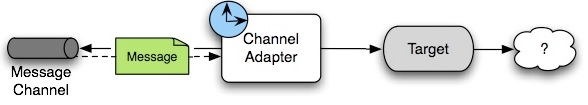

7.4.7. Channel Adapter

A channel adapter is an endpoint that connects a message channel to some other system or transport. Channel adapters may be either inbound or outbound. Typically, the channel adapter does some mapping between the message and whatever object or resource is received from or sent to the other system (file, HTTP Request, JMS message, and others). Depending on the transport, the channel adapter may also populate or extract message header values. Spring Integration provides a number of channel adapters, which are described in upcoming chapters.

An inbound channel adapter endpoint connects a source system to a MessageChannel.

| Message sources can be pollable (for example, POP3) or message-driven (for example, IMAP Idle). In the preceding diagram, this is depicted by the “clock” symbol and the solid arrow (poll) and the dotted arrow (message-driven). |

An outbound channel adapter endpoint connects a MessageChannel to a target system.

| As discussed earlier in Message Channel, channels can be pollable or subscribable. In the preceding diagram, this is depicted by the “clock” symbol and the solid arrow (poll) and the dotted arrow (subscribe). |

7.4.8. Endpoint Bean Names

Consuming endpoints (anything with an inputChannel) consist of two beans, the consumer and the message handler.

The consumer has a reference to the message handler and invokes it as messages arrive.

Consider the following XML example:

<int:service-activator id="someService" ... />

Given the preceding example, the bean names are as follows:

- Consumer: someService (the id)

- Handler: someService.handler

When using Enterprise Integration Pattern (EIP) annotations, the names depend on several factors. Consider the following example of an annotated POJO:

@Component
public class SomeComponent {

    @ServiceActivator(inputChannel = ...)
    public String someMethod(...) {
        ...
    }

}

Given the preceding example, the bean names are as follows:

- Consumer: someComponent.someMethod.serviceActivator

- Handler: someComponent.someMethod.serviceActivator.handler

Starting with version 5.0.4, you can modify these names by using the @EndpointId annotation, as the following example shows:

@Component
public class SomeComponent {

    @EndpointId("someService")
    @ServiceActivator(inputChannel = ...)
    public String someMethod(...) {
        ...
    }

}

Given the preceding example, the bean names are as follows:

- Consumer: someService

- Handler: someService.handler

The @EndpointId creates names as created by the id attribute with XML configuration.

Consider the following example of an annotated bean:

@Configuration
public class SomeConfiguration {

    @Bean
    @ServiceActivator(inputChannel = ...)
    public MessageHandler someHandler() {
        ...
    }

}

Given the preceding example, the bean names are as follows:

- Consumer: someConfiguration.someHandler.serviceActivator

- Handler: someHandler (the @Bean name)

Starting with version 5.0.4, you can modify these names by using the @EndpointId annotation, as the following example shows:

@Configuration
public class SomeConfiguration {

    @Bean("someService.handler")             (1)
    @EndpointId("someService")               (2)
    @ServiceActivator(inputChannel = ...)
    public MessageHandler someHandler() {
        ...
    }

}

| 1 | Handler: someService.handler (the bean name) |

| 2 | Consumer: someService (the endpoint ID) |

The @EndpointId annotation creates names as created by the id attribute with XML configuration, as long as you use the convention of appending .handler to the @Bean name.

There is one special case where a third bean is created: For architectural reasons, if a MessageHandler @Bean does not define an AbstractReplyProducingMessageHandler, the framework wraps the provided bean in a ReplyProducingMessageHandlerWrapper.

This wrapper supports request handler advice handling and emits the normal 'produced no reply' debug log messages.

Its bean name is the handler bean name plus .wrapper (when there is an @EndpointId — otherwise, it is the normal generated handler name).

Similarly, Pollable Message Sources create two beans, a SourcePollingChannelAdapter (SPCA) and a MessageSource.

Consider the following XML configuration:

<int:inbound-channel-adapter id="someAdapter" ... />

Given the preceding XML configuration, the bean names are as follows:

- SPCA: someAdapter (the id)

- MessageSource: someAdapter.source

Consider the following Java configuration of a POJO to define an @EndpointId:

@EndpointId("someAdapter")
@InboundChannelAdapter(channel = "channel3", poller = @Poller(fixedDelay = "5000"))
public String pojoSource() {
    ...
}

Given the preceding Java configuration example, the bean names are as follows:

- SPCA: someAdapter

- MessageSource: someAdapter.source

Consider the following Java configuration of a bean to define an @EndpointId:

@Bean("someAdapter.source")
@EndpointId("someAdapter")
@InboundChannelAdapter(channel = "channel3", poller = @Poller(fixedDelay = "5000"))
public MessageSource<?> source() {
    return () -> {
        ...
    };
}

Given the preceding example, the bean names are as follows:

- SPCA: someAdapter

- MessageSource: someAdapter.source (as long as you use the convention of appending .source to the @Bean name)

7.5. Configuration and @EnableIntegration

Throughout this document, you can see references to XML namespace support for declaring elements in a Spring Integration flow.

This support is provided by a series of namespace parsers that generate appropriate bean definitions to implement a particular component.

For example, many endpoints consist of a MessageHandler bean and a ConsumerEndpointFactoryBean into which the handler and an input channel name are injected.

The first time a Spring Integration namespace element is encountered, the framework automatically declares a number of beans (a task scheduler, an implicit channel creator, and others) that are used to support the runtime environment.

Version 4.0 introduced the @EnableIntegration annotation, to allow the registration of Spring Integration infrastructure beans (see the Javadoc).

This annotation is required when only Java configuration is used — for example with Spring Boot or Spring Integration Messaging Annotation support and Spring Integration Java DSL with no XML integration configuration.

| The @EnableIntegration annotation is also useful when you have a parent context with no Spring Integration components and two or more child contexts that use Spring Integration. It lets these common components be declared once only, in the parent context. |

The @EnableIntegration annotation registers many infrastructure components with the application context.

In particular, it:

- Registers some built-in beans, such as errorChannel and its LoggingHandler, taskScheduler for pollers, the jsonPath SpEL function, and others.

- Adds several BeanFactoryPostProcessor instances to enhance the BeanFactory for the global and default integration environment.

- Adds several BeanPostProcessor instances to enhance or convert and wrap particular beans for integration purposes.

- Adds annotation processors to parse messaging annotations and registers components for them with the application context.
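A Java-only setup that relies on @EnableIntegration might look like the following configuration fragment. It is a minimal sketch, assuming Spring Integration and Spring Framework are on the classpath; the class name IntegrationConfig, the greetings channel, and the greetingPrinter handler are hypothetical names chosen for illustration.

```java
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.integration.annotation.ServiceActivator;
import org.springframework.integration.channel.DirectChannel;
import org.springframework.integration.config.EnableIntegration;
import org.springframework.messaging.MessageChannel;
import org.springframework.messaging.MessageHandler;

// Hypothetical configuration class; no XML integration configuration is involved.
@Configuration
@EnableIntegration // registers the integration infrastructure beans
public class IntegrationConfig {

    // A subscribable, point-to-point channel used as the endpoint's input.
    @Bean
    public MessageChannel greetings() {
        return new DirectChannel();
    }

    // The messaging annotation on this @Bean method is picked up by the
    // annotation processors that @EnableIntegration adds to the context.
    @Bean
    @ServiceActivator(inputChannel = "greetings")
    public MessageHandler greetingPrinter() {
        return message -> System.out.println(message.getPayload());
    }
}
```

Following the naming rules from Endpoint Bean Names, the consumer for this handler would be registered as integrationConfig.greetingPrinter.serviceActivator, and the handler bean keeps its @Bean name.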

The @IntegrationComponentScan annotation also permits classpath scanning.

This annotation plays a role similar to that of the standard Spring Framework @ComponentScan annotation, but it is restricted to components and annotations that are specific to Spring Integration, which the standard Spring Framework component scan mechanism cannot reach.

For an example, see @MessagingGateway Annotation.

The @EnablePublisher annotation registers a PublisherAnnotationBeanPostProcessor bean and configures the default-publisher-channel for those @Publisher annotations that are provided without a channel attribute.

If more than one @EnablePublisher annotation is found, they must all have the same value for the default channel.

See Annotation-driven Configuration with the @Publisher Annotation for more information.

The @GlobalChannelInterceptor annotation has been introduced to mark ChannelInterceptor beans for global channel interception.

This annotation is an analogue of the <int:channel-interceptor> XML element (see Global Channel Interceptor Configuration).

@GlobalChannelInterceptor annotations can be placed at the class level (with a @Component stereotype annotation) or on @Bean methods within @Configuration classes.

In either case, the bean must implement ChannelInterceptor.

Starting with version 5.1, global channel interceptors apply to dynamically registered channels — such as beans that are initialized by using beanFactory.initializeBean() or through the IntegrationFlowContext when using the Java DSL.

Previously, interceptors were not applied when beans were created after the application context was refreshed.

The @IntegrationConverter annotation marks Converter, GenericConverter, or ConverterFactory beans as candidate converters for integrationConversionService.

This annotation is an analogue of the <int:converter> XML element (see Payload Type Conversion).

You can place @IntegrationConverter annotations at the class level (with a @Component stereotype annotation) or on @Bean methods within @Configuration classes.

See Annotation Support for more information about messaging annotations.

7.6. Programming Considerations

You should use plain old Java objects (POJOs) whenever possible and only expose the framework in your code when absolutely necessary. See POJO Method invocation for more information.

If you do expose the framework to your classes, there are some considerations that need to be taken into account, especially during application startup:

- If your component is ApplicationContextAware, you should generally not use the ApplicationContext in the setApplicationContext() method. Instead, store a reference and defer such uses until later in the context lifecycle.

- If your component is an InitializingBean or uses @PostConstruct methods, do not send any messages from these initialization methods. The application context is not yet initialized when these methods are called, and sending such messages is likely to fail. If you need to send messages during startup, implement ApplicationListener and wait for the ContextRefreshedEvent. Alternatively, implement SmartLifecycle, put your bean in a late phase, and send the messages from the start() method.

7.6.1. Considerations When Using Packaged (for example, Shaded) Jars

Spring Integration bootstraps certain features by using Spring Framework’s SpringFactories mechanism to load several IntegrationConfigurationInitializer classes.

This includes the -core jar as well as certain others, including -http and -jmx.

The information for this process is stored in a META-INF/spring.factories file in each jar.

Some developers prefer to repackage their application and all dependencies into a single jar by using well known tools, such as the Apache Maven Shade Plugin.

By default, the shade plugin does not merge the spring.factories files when producing the shaded jar.

In addition to spring.factories, other META-INF files (spring.handlers and spring.schemas) are used for XML configuration.

These files also need to be merged.
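
To see why overwriting is not enough, consider what merging means for a properties-style file such as spring.factories: when two jars declare the same key, both value lists must survive. The following plain-JDK sketch illustrates that idea by joining the values of duplicate keys with a comma. The class name, method name, keys, and class names in it are hypothetical, and it is only an illustration of the merging concept, not the implementation used by the shade plugin or by Spring Boot.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical helper: merges properties-style content taken from several jars.
final class FactoriesMergeSketch {

    // Parses each source and joins values that share a key with a comma,
    // so that no jar's entries are lost.
    static Properties merge(String... sources) {
        Properties merged = new Properties();
        for (String source : sources) {
            Properties current = new Properties();
            try {
                current.load(new StringReader(source));
            }
            catch (IOException ex) {
                throw new UncheckedIOException(ex);
            }
            for (String key : current.stringPropertyNames()) {
                String value = current.getProperty(key);
                merged.merge(key, value, (oldValue, newValue) -> oldValue + "," + newValue);
            }
        }
        return merged;
    }

    public static void main(String[] args) {
        // The key and class names below are made up for illustration.
        String coreJar = "example.Initializer=com.example.core.CoreInitializer";
        String httpJar = "example.Initializer=com.example.http.HttpInitializer";
        System.out.println(merge(coreJar, httpJar).getProperty("example.Initializer"));
    }
}
```

If the second file simply replaced the first, only one of the two initializers would be discovered at runtime.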

Spring Boot’s executable jar mechanism takes a different approach, in that it nests the jars, thus retaining each spring.factories file on the class path.

So, with a Spring Boot application, nothing more is needed if you use its default executable jar format.


Even if you do not use Spring Boot, you can still use the tooling provided by Boot to enhance the shade plugin by adding transformers for the above mentioned files. The following example shows how to configure the plugin:

...

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<configuration>

<keepDependenciesWithProvidedScope>true</keepDependenciesWithProvidedScope>

<createDependencyReducedPom>true</createDependencyReducedPom>

</configuration>

<dependencies>

<dependency> <!-- (1) -->

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<version>${spring.boot.version}</version>

</dependency>

</dependencies>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<transformers> <!-- (2) -->

<transformer

implementation="org.apache.maven.plugins.shade.resource.AppendingTransformer">

<resource>META-INF/spring.handlers</resource>

</transformer>

<transformer

implementation="org.springframework.boot.maven.PropertiesMergingResourceTransformer">

<resource>META-INF/spring.factories</resource>

</transformer>

<transformer

implementation="org.apache.maven.plugins.shade.resource.AppendingTransformer">

<resource>META-INF/spring.schemas</resource>

</transformer>

<transformer

implementation="org.apache.maven.plugins.shade.resource.ServicesResourceTransformer" />

</transformers>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

...

Specifically:

| 1 | Add the spring-boot-maven-plugin as a dependency. |

| 2 | Configure the transformers. |

You can add a property for ${spring.boot.version} or use an explicit version.

7.7. Programming Tips and Tricks

This section documents some ways to get the most from Spring Integration.

7.7.1. XML Schemas

When using XML configuration, to avoid getting false schema validation errors, you should use a “Spring-aware” IDE, such as the Spring Tool Suite (STS), Eclipse with the Spring IDE plugins, or IntelliJ IDEA.

These IDEs know how to resolve the correct XML schema from the classpath (by using the META-INF/spring.schemas file in the jars).

When using STS or Eclipse with the plugin, you must enable Spring Project Nature on the project.

The schemas hosted on the internet for certain legacy modules (those that existed in version 1.0) are the 1.0 versions for compatibility reasons. If your IDE uses these schemas, you are likely to see false errors.

Each of these online schemas has a warning similar to the following:

This schema is for the 1.0 version of Spring Integration Core. We cannot update it to the current schema because that will break any applications using 1.0.3 or lower. For subsequent versions, the "unversioned" schema is resolved from the classpath and obtained from the jar. Please refer to GitHub:

The affected modules are:

- core (spring-integration.xsd)

- file

- http

- jms

- mail

- security

- stream

- ws

- xml

7.7.2. Finding Class Names for Java and DSL Configuration

With XML configuration and Spring Integration Namespace support, the XML parsers hide how target beans are declared and wired together. For Java configuration, it is important to understand the Framework API for target end-user applications.

The first-class citizens for EIP implementation are Message, Channel, and Endpoint (see Main Components, earlier in this chapter).

Their implementations (contracts) are:

- org.springframework.messaging.Message: See Message;

- org.springframework.messaging.MessageChannel: See Message Channels;

- org.springframework.integration.endpoint.AbstractEndpoint: See Poller.

The first two are simple enough to understand how to implement, configure, and use. The last one deserves more attention.

The AbstractEndpoint is widely used throughout the framework for different component implementations.

Its main implementations are:

- EventDrivenConsumer, used when we subscribe to a SubscribableChannel to listen for messages.

- PollingConsumer, used when we poll for messages from a PollableChannel.

When you use messaging annotations or the Java DSL, you don’t need to worry about these components, because the Framework automatically produces them with appropriate annotations and BeanPostProcessor implementations.

When building components manually, you should use the ConsumerEndpointFactoryBean to help determine the target AbstractEndpoint consumer implementation to create, based on the provided inputChannel property.

On the other hand, the ConsumerEndpointFactoryBean delegates to another first-class citizen in the framework: org.springframework.messaging.MessageHandler.

The goal of the implementation of this interface is to handle the message consumed by the endpoint from the channel.

All EIP components in Spring Integration are MessageHandler implementations (for example, AggregatingMessageHandler, MessageTransformingHandler, AbstractMessageSplitter, and others).

The target protocol outbound adapters (FileWritingMessageHandler, HttpRequestExecutingMessageHandler, AbstractMqttMessageHandler, and others) are also MessageHandler implementations.

When you develop Spring Integration applications with Java configuration, you should look into the Spring Integration module to find an appropriate MessageHandler implementation to use for the @ServiceActivator configuration.

For example, to send an XMPP message (see XMPP Support) you should configure something like the following:

@Bean

@ServiceActivator(inputChannel = "input")

public MessageHandler sendChatMessageHandler(XMPPConnection xmppConnection) {

ChatMessageSendingMessageHandler handler = new ChatMessageSendingMessageHandler(xmppConnection);

DefaultXmppHeaderMapper xmppHeaderMapper = new DefaultXmppHeaderMapper();

xmppHeaderMapper.setRequestHeaderNames("*");

handler.setHeaderMapper(xmppHeaderMapper);

return handler;

}

The MessageHandler implementations represent the outbound and processing part of the message flow.

The inbound message flow side has its own components, which are divided into polling and listening behaviors.

The listening (message-driven) components are simple and typically require only one target class implementation to be ready to produce messages.

Listening components can be one-way MessageProducerSupport implementations (such as AbstractMqttMessageDrivenChannelAdapter and ImapIdleChannelAdapter) or request-reply MessagingGatewaySupport implementations (such as AmqpInboundGateway and AbstractWebServiceInboundGateway).

Polling inbound endpoints are for those protocols that do not provide a listener API or are not intended for such behavior, including any file-based protocol (such as FTP), any database (RDBMS or NoSQL), and others.

These inbound endpoints consist of two components: the poller configuration, to initiate the polling task periodically, and a message source class to read data from the target protocol and produce a message for the downstream integration flow.

The first class for the poller configuration is a SourcePollingChannelAdapter.

It is another AbstractEndpoint implementation, but one dedicated to polling in order to initiate an integration flow.

Typically, with the messaging annotations or Java DSL, you should not worry about this class.

The Framework produces a bean for it, based on the @InboundChannelAdapter configuration or a Java DSL builder spec.

Message source components are more important for the target application development, and they all implement the MessageSource interface (for example, MongoDbMessageSource and AbstractTwitterMessageSource).

With that in mind, our config for reading data from an RDBMS table with JDBC could resemble the following:

@Bean

@InboundChannelAdapter(value = "fooChannel", poller = @Poller(fixedDelay="5000"))

public MessageSource<?> storedProc(DataSource dataSource) {

return new JdbcPollingChannelAdapter(dataSource, "SELECT * FROM foo where status = 0");

}

You can find all the required inbound and outbound classes for the target protocols in the particular Spring Integration module (in most cases, in the respective package).

For example, the spring-integration-websocket adapters are:

- o.s.i.websocket.inbound.WebSocketInboundChannelAdapter: Implements MessageProducerSupport to listen for frames on the socket and produce messages to the channel.

- o.s.i.websocket.outbound.WebSocketOutboundMessageHandler: The one-way AbstractMessageHandler implementation to convert incoming messages to the appropriate frame and send it over the WebSocket.

If you are familiar with Spring Integration XML configuration, starting with version 4.3, we provide information in the XSD element definitions about which target classes are used to declare beans for the adapter or gateway, as the following example shows:

<xsd:element name="outbound-async-gateway">

<xsd:annotation>

<xsd:documentation>

Configures a Consumer Endpoint for the 'o.s.i.amqp.outbound.AsyncAmqpOutboundGateway'

that will publish an AMQP Message to the provided Exchange and expect a reply Message.

The sending thread returns immediately; the reply is sent asynchronously; uses 'AsyncRabbitTemplate.sendAndReceive()'.

</xsd:documentation>

</xsd:annotation>

7.8. POJO Method invocation

As discussed in Programming Considerations, we recommend using a POJO programming style, as the following example shows:

@ServiceActivator

public String myService(String payload) { ... }

In this case, the framework extracts a String payload, invokes your method, and wraps the result in a message to send to the next component in the flow (the original headers are copied to the new message).
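
The sequence described above (extract the payload, invoke the method, wrap the result, copy the headers) can be modeled with plain JDK types. The following is only a conceptual sketch: SimpleMessage and PojoInvocationSketch are hypothetical names, and the code does not use or reproduce the framework's own message or handler classes.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Conceptual model of POJO invocation; all names here are hypothetical.
final class PojoInvocationSketch {

    // Simplified stand-in for a message: a payload plus headers.
    static final class SimpleMessage {
        final Object payload;
        final Map<String, Object> headers;

        SimpleMessage(Object payload, Map<String, Object> headers) {
            this.payload = payload;
            this.headers = new HashMap<>(headers);
        }
    }

    // Extracts the String payload, invokes the POJO method, and wraps the
    // result in a new message that carries a copy of the original headers.
    static SimpleMessage invoke(SimpleMessage request, Function<String, String> pojoMethod) {
        String result = pojoMethod.apply((String) request.payload);
        return new SimpleMessage(result, request.headers);
    }

    public static void main(String[] args) {
        Map<String, Object> headers = new HashMap<>();
        headers.put("foo", "bar");
        SimpleMessage reply = invoke(new SimpleMessage("hello", headers), String::toUpperCase);
        System.out.println(reply.payload + " " + reply.headers);
    }
}
```

The POJO method itself (here String::toUpperCase) never sees a message type, which is the point of the POJO style.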

In fact, if you use XML configuration, you do not even need the @ServiceActivator annotation, as the following paired examples show:

<int:service-activator ... ref="myPojo" method="myService" />

public String myService(String payload) { ... }

You can omit the method attribute as long as there is no ambiguity in the public methods on the class.

You can also obtain header information in your POJO methods, as the following example shows:

@ServiceActivator

public String myService(@Payload String payload, @Header("foo") String fooHeader) { ... }

You can also dereference properties on the message, as the following example shows:

@ServiceActivator

public String myService(@Payload("payload.foo") String foo, @Header("bar.baz") String barbaz) { ... }

Because various POJO method invocations are available, versions prior to 5.0 used SpEL (Spring Expression Language) to invoke the POJO methods.

SpEL (even interpreted) is usually “fast enough” for these operations, when compared to the actual work usually done in the methods.

However, starting with version 5.0, the org.springframework.messaging.handler.invocation.InvocableHandlerMethod is used by default whenever possible.

This technique is usually faster to execute than interpreted SpEL and is consistent with other Spring messaging projects.

The InvocableHandlerMethod is similar to the technique used to invoke controller methods in Spring MVC.

Certain methods are still always invoked by using SpEL.

Examples include annotated parameters with dereferenced properties, as discussed earlier.

This is because SpEL has the capability to navigate a property path.

There may be some other corner cases that we have not considered that also do not work with InvocableHandlerMethod instances.

For this reason, we automatically fall back to using SpEL in those cases.

If you wish, you can also set up your POJO method such that it always uses SpEL, with the UseSpelInvoker annotation, as the following example shows:

@UseSpelInvoker(compilerMode = "IMMEDIATE")

public void bar(String bar) { ... }

If the compilerMode property is omitted, the spring.expression.compiler.mode system property determines the compiler mode.

See SpEL compilation for more information about compiled SpEL.

Core Messaging

This section covers all aspects of the core messaging API in Spring Integration. It covers messages, message channels, and message endpoints. It also covers many of the enterprise integration patterns, such as filter, router, transformer, service activator, splitter, and aggregator.

This section also contains material about system management, including the control bus and message history support.

8. Messaging Channels

8.1. Message Channels

While the Message plays the crucial role of encapsulating data, it is the MessageChannel that decouples message producers from message consumers.

8.1.1. The MessageChannel Interface

Spring Integration’s top-level MessageChannel interface is defined as follows:

public interface MessageChannel {

boolean send(Message<?> message);

boolean send(Message<?> message, long timeout);

}

When sending a message, the return value is true if the message is sent successfully.

If the send call times out or is interrupted, it returns false.

PollableChannel

Since message channels may or may not buffer messages (as discussed in the Spring Integration Overview), two sub-interfaces define the buffering (pollable) and non-buffering (subscribable) channel behavior.

The following listing shows the definition of the PollableChannel interface:

public interface PollableChannel extends MessageChannel {

Message<?> receive();

Message<?> receive(long timeout);

}

As with the send methods, when receiving a message, the return value is null in the case of a timeout or interrupt.

SubscribableChannel

The SubscribableChannel base interface is implemented by channels that send messages directly to their subscribed MessageHandler instances.

Therefore, they do not provide receive methods for polling.

Instead, they define methods for managing those subscribers.

The following listing shows the definition of the SubscribableChannel interface:

public interface SubscribableChannel extends MessageChannel {

boolean subscribe(MessageHandler handler);

boolean unsubscribe(MessageHandler handler);

}

8.1.2. Message Channel Implementations

Spring Integration provides different message channel implementations. The following sections briefly describe each one.

PublishSubscribeChannel

The PublishSubscribeChannel implementation broadcasts any Message sent to it to all of its subscribed handlers.

This is most often used for sending event messages, whose primary role is notification (as opposed to document messages, which are generally intended to be processed by a single handler).

Note that the PublishSubscribeChannel is intended for sending only.

Since it broadcasts to its subscribers directly when its send(Message) method is invoked, consumers cannot poll for messages (it does not implement PollableChannel and therefore has no receive() method).

Instead, any subscriber must itself be a MessageHandler, and the subscriber’s handleMessage(Message) method is invoked in turn.

Prior to version 3.0, invoking the send method on a PublishSubscribeChannel that had no subscribers returned false.

When used in conjunction with a MessagingTemplate, a MessageDeliveryException was thrown.

Starting with version 3.0, the behavior has changed such that a send is always considered successful if at least the minimum subscribers are present (and successfully handle the message).

This behavior can be modified by setting the minSubscribers property, which defaults to 0.

If you use a TaskExecutor, only the presence of the correct number of subscribers is used for this determination, because the actual handling of the message is performed asynchronously.
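
The relationship between broadcasting and the minSubscribers setting can be modeled with a few lines of plain Java. The sketch below is a simplified, synchronous model with a hypothetical class name (BroadcastSketch); it is not the framework's PublishSubscribeChannel, and it ignores error handling and executors.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

// Plain-JDK model of broadcast semantics; all names here are hypothetical.
final class BroadcastSketch {

    private final List<Consumer<String>> subscribers = new CopyOnWriteArrayList<>();
    private final int minSubscribers;

    BroadcastSketch(int minSubscribers) {
        this.minSubscribers = minSubscribers;
    }

    boolean subscribe(Consumer<String> handler) {
        return this.subscribers.add(handler);
    }

    // Delivers the payload to every subscriber in the caller's thread and
    // reports success only if at least minSubscribers handlers were present.
    boolean send(String payload) {
        int handled = 0;
        for (Consumer<String> subscriber : this.subscribers) {
            subscriber.accept(payload);
            handled++;
        }
        return handled >= this.minSubscribers;
    }

    public static void main(String[] args) {
        BroadcastSketch lenient = new BroadcastSketch(0);
        System.out.println(lenient.send("event")); // no subscribers, minSubscribers = 0

        BroadcastSketch strict = new BroadcastSketch(1);
        System.out.println(strict.send("event")); // no subscribers, minSubscribers = 1
        strict.subscribe(payload -> System.out.println("first got " + payload));
        strict.subscribe(payload -> System.out.println("second got " + payload));
        System.out.println(strict.send("event")); // both subscribers receive the event
    }
}
```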


QueueChannel

The QueueChannel implementation wraps a queue.

Unlike the PublishSubscribeChannel, the QueueChannel has point-to-point semantics.

In other words, even if the channel has multiple consumers, only one of them should receive any Message sent to that channel.

It provides a default no-argument constructor (providing an essentially unbounded capacity of Integer.MAX_VALUE) as well as a constructor that accepts the queue capacity, as the following listing shows:

public QueueChannel(int capacity)

A channel that has not reached its capacity limit stores messages in its internal queue, and the send(Message<?>) method returns immediately, even if no receiver is ready to handle the message.

If the queue has reached capacity, the sender blocks until room is available in the queue.

Alternatively, if you use the send method that has an additional timeout parameter, the sender blocks until either room is available or the timeout period elapses, whichever occurs first.

Similarly, a receive() call returns immediately if a message is available on the queue, but, if the queue is empty, then a receive call may block until either a message is available or the timeout, if provided, elapses.

In either case, it is possible to force an immediate return regardless of the queue’s state by passing a timeout value of 0.

Note, however, that calls to the versions of send() and receive() with no timeout parameter block indefinitely.
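
The blocking and timeout rules above map closely onto java.util.concurrent.BlockingQueue. The following sketch models them with a bounded LinkedBlockingQueue and a timeout of 0 to force an immediate return. BoundedQueueSketch is a hypothetical class written for illustration; it is a model of the semantics, not the QueueChannel class itself.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Plain-JDK model of a bounded, point-to-point queue channel (hypothetical name).
final class BoundedQueueSketch {

    private final BlockingQueue<String> queue;

    BoundedQueueSketch(int capacity) {
        this.queue = new LinkedBlockingQueue<>(capacity);
    }

    // Waits up to timeoutMillis for room; a timeout of 0 returns immediately.
    boolean send(String payload, long timeoutMillis) {
        try {
            return this.queue.offer(payload, timeoutMillis, TimeUnit.MILLISECONDS);
        }
        catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    // Waits up to timeoutMillis for a message; returns null on timeout or interrupt.
    String receive(long timeoutMillis) {
        try {
            return this.queue.poll(timeoutMillis, TimeUnit.MILLISECONDS);
        }
        catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
            return null;
        }
    }

    public static void main(String[] args) {
        BoundedQueueSketch channel = new BoundedQueueSketch(1);
        System.out.println(channel.send("first", 0));  // room is available
        System.out.println(channel.send("second", 0)); // the queue is full
        System.out.println(channel.receive(0));        // the buffered message
        System.out.println(channel.receive(0));        // the queue is empty
    }
}
```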

PriorityChannel

Whereas the QueueChannel enforces first-in-first-out (FIFO) ordering, the PriorityChannel is an alternative implementation that allows for messages to be ordered within the channel based upon a priority.

By default, the priority is determined by the priority header within each message.

However, for custom priority determination logic, a comparator of type Comparator<Message<?>> can be provided to the PriorityChannel constructor.
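
As an illustration of comparator-driven ordering, the following plain-JDK sketch drains a PriorityBlockingQueue by using a custom Comparator. PrioritizedMessage is a hypothetical stand-in for a message with a numeric priority, and the choice that higher values are received first is an assumption made for this example. The sketch does not use the PriorityChannel class.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.concurrent.PriorityBlockingQueue;

// Plain-JDK model of priority ordering; all names here are hypothetical.
final class PriorityOrderingSketch {

    // Simplified stand-in for a message with a numeric priority header.
    static final class PrioritizedMessage {
        final String payload;
        final int priority;

        PrioritizedMessage(String payload, int priority) {
            this.payload = payload;
            this.priority = priority;
        }
    }

    // Queues all messages and drains them in the order imposed by the comparator.
    static List<String> drainInOrder(List<PrioritizedMessage> messages,
            Comparator<PrioritizedMessage> comparator) {
        PriorityBlockingQueue<PrioritizedMessage> queue =
                new PriorityBlockingQueue<>(11, comparator);
        queue.addAll(messages);
        List<String> payloads = new ArrayList<>();
        PrioritizedMessage next;
        while ((next = queue.poll()) != null) {
            payloads.add(next.payload);
        }
        return payloads;
    }

    public static void main(String[] args) {
        List<PrioritizedMessage> messages = List.of(
                new PrioritizedMessage("low", 1),
                new PrioritizedMessage("high", 9),
                new PrioritizedMessage("medium", 5));
        // Assumption for this sketch: higher priority values are received first.
        Comparator<PrioritizedMessage> highestFirst =
                Comparator.comparingInt((PrioritizedMessage m) -> m.priority).reversed();
        System.out.println(drainInOrder(messages, highestFirst));
    }
}
```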

RendezvousChannel

The RendezvousChannel enables a “direct-handoff” scenario, wherein a sender blocks until another party invokes the channel’s receive() method.

The other party blocks until the sender sends the message.

Internally, this implementation is quite similar to the QueueChannel, except that it uses a SynchronousQueue (a zero-capacity implementation of BlockingQueue).

This works well in situations where the sender and receiver operate in different threads, but asynchronously dropping the message in a queue is not appropriate.

In other words, with a RendezvousChannel, the sender knows that some receiver has accepted the message, whereas with a QueueChannel, the message would have been stored to the internal queue and potentially never received.
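
The direct-handoff behavior comes from the SynchronousQueue itself and can be observed with the JDK alone. In the following sketch (RendezvousSketch is a hypothetical class, not part of the framework), a non-blocking offer fails when no receiver is waiting, while a blocking put completes only once a receiver thread has taken the message.

```java
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.atomic.AtomicReference;

// Plain-JDK model of a direct hand-off between two threads (hypothetical name).
final class RendezvousSketch {

    // Returns whether a non-blocking hand-off succeeds when nobody is receiving.
    static boolean offerWithoutReceiver(String payload) {
        return new SynchronousQueue<String>().offer(payload);
    }

    // Hands the payload to a receiver thread; put() returns only after the
    // receiver has taken the message.
    static String handOff(String payload) {
        SynchronousQueue<String> queue = new SynchronousQueue<>();
        AtomicReference<String> received = new AtomicReference<>();
        Thread receiver = new Thread(() -> {
            try {
                received.set(queue.take());
            }
            catch (InterruptedException ex) {
                Thread.currentThread().interrupt();
            }
        });
        receiver.start();
        try {
            queue.put(payload);
            receiver.join();
        }
        catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted during hand-off", ex);
        }
        return received.get();
    }

    public static void main(String[] args) {
        System.out.println(offerWithoutReceiver("lost"));
        System.out.println(handOff("delivered"));
    }
}
```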

Keep in mind that, by default, all of these queue-based channels store messages in memory only.

When persistence is required, you can either provide a 'message-store' attribute within the 'queue' element to reference a persistent MessageStore implementation or you can replace the local channel with one that is backed by a persistent broker, such as a JMS-backed channel or channel adapter.

The latter option lets you take advantage of any JMS provider’s implementation for message persistence, as discussed in JMS Support.

However, when buffering in a queue is not necessary, the simplest approach is to rely upon the DirectChannel, discussed in the next section.


The RendezvousChannel is also useful for implementing request-reply operations.

The sender can create a temporary, anonymous instance of RendezvousChannel, which it then sets as the 'replyChannel' header when building a Message.

After sending that Message, the sender can immediately call receive (optionally providing a timeout value) in order to block while waiting for a reply Message.

This is very similar to the implementation used internally by many of Spring Integration’s request-reply components.

DirectChannel

The DirectChannel has point-to-point semantics but otherwise is more similar to the PublishSubscribeChannel than any of the queue-based channel implementations described earlier.

It implements the SubscribableChannel interface instead of the PollableChannel interface, so it dispatches messages directly to a subscriber.

As a point-to-point channel, however, it differs from the PublishSubscribeChannel in that it sends each Message to a single subscribed MessageHandler.

In addition to being the simplest point-to-point channel option, one of its most important features is that it enables a single thread to perform the operations on “both sides” of the channel.

For example, if a handler subscribes to a DirectChannel, then sending a Message to that channel triggers invocation of that handler’s handleMessage(Message) method directly in the sender’s thread, before the send() method invocation can return.

The key motivation for providing a channel implementation with this behavior is to support transactions that must span across the channel while still benefiting from the abstraction and loose coupling that the channel provides.

If the send() call is invoked within the scope of a transaction, the outcome of the handler’s invocation (for example, updating a database record) plays a role in determining the ultimate result of that transaction (commit or rollback).
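
The following plain-JDK sketch models the two properties described above: the handler runs in the sender's thread, and a handler failure reaches the sender before send() returns, which is what allows an enclosing transaction to roll back. DirectDispatchSketch is a hypothetical single-subscriber model, not the DirectChannel class, and no real transaction is involved.

```java
import java.util.function.Consumer;

// Plain-JDK model of same-thread dispatch (hypothetical name).
final class DirectDispatchSketch {

    private Consumer<String> handler;

    void subscribe(Consumer<String> handler) {
        this.handler = handler;
    }

    // Invokes the handler in the caller's thread; an exception thrown by the
    // handler propagates to the sender before send() returns.
    boolean send(String payload) {
        if (this.handler == null) {
            throw new IllegalStateException("No subscriber");
        }
        this.handler.accept(payload);
        return true;
    }

    public static void main(String[] args) {
        DirectDispatchSketch channel = new DirectDispatchSketch();
        Thread sender = Thread.currentThread();
        channel.subscribe(payload ->
                System.out.println("same thread: " + (Thread.currentThread() == sender)));
        channel.send("order-1");

        // A failing handler surfaces in the sender.
        channel.subscribe(payload -> {
            throw new IllegalStateException("update failed for " + payload);
        });
        try {
            channel.send("order-2");
        }
        catch (IllegalStateException ex) {
            System.out.println("sender saw: " + ex.getMessage());
        }
    }
}
```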

Since the DirectChannel is the simplest option and does not add any additional overhead that would be required for scheduling and managing the threads of a poller, it is the default channel type within Spring Integration.

The general idea is to define the channels for an application, consider which of those need to provide buffering or to throttle input, and modify those to be queue-based PollableChannels.

Likewise, if a channel needs to broadcast messages, it should not be a DirectChannel but rather a PublishSubscribeChannel.

Later, we show how each of these channels can be configured.


The DirectChannel internally delegates to a message dispatcher to invoke its subscribed message handlers, and that dispatcher can have a load-balancing strategy exposed by load-balancer or load-balancer-ref attributes (mutually exclusive).

The load balancing strategy is used by the message dispatcher to help determine how messages are distributed amongst message handlers when multiple message handlers subscribe to the same channel.

As a convenience, the load-balancer attribute exposes an enumeration of values pointing to pre-existing implementations of LoadBalancingStrategy.

The only available values are round-robin (which load-balances across the handlers in rotation) and none (for cases where you want to explicitly disable load balancing).

Other strategy implementations may be added in future versions.

However, since version 3.0, you can provide your own implementation of the LoadBalancingStrategy and inject it by using the load-balancer-ref attribute, which should point to a bean that implements LoadBalancingStrategy, as the following example shows:

<int:channel id="lbRefChannel">

<int:dispatcher load-balancer-ref="lb"/>

</int:channel>

<bean id="lb" class="foo.bar.SampleLoadBalancingStrategy"/>

Note that the load-balancer and load-balancer-ref attributes are mutually exclusive.

A FixedSubscriberChannel is a SubscribableChannel that supports only a single MessageHandler subscriber, which cannot be unsubscribed.

This is useful for high-throughput use cases in which no other subscribers are involved and no channel interceptors are needed.

The load-balancing also works in conjunction with a boolean failover property.

If the failover value is true (the default), the dispatcher falls back to any subsequent handlers (as necessary) when preceding handlers throw exceptions.

The order is determined by an optional order value defined on the handlers themselves or, if no such value exists, the order in which the handlers subscribed.

If a certain situation requires that the dispatcher always try to invoke the first handler and then fall back in the same fixed order sequence every time an error occurs, no load-balancing strategy should be provided.

In other words, the dispatcher still supports the failover boolean property even when no load-balancing is enabled.

Without load-balancing, however, the invocation of handlers always begins with the first, according to their order.

For example, this approach works well when there is a clear definition of primary, secondary, tertiary, and so on.

When using the namespace support, the order attribute on any endpoint determines the order.

Keep in mind that load-balancing and failover apply only when a channel has more than one subscribed message handler.

When using the namespace support, this means that more than one endpoint shares the same channel reference defined in the input-channel attribute.


Starting with version 5.2, when failover is true, a failure of the current handler is logged together with the failed message at the debug or info level, if that level is enabled.
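
The interplay of round-robin load balancing and failover can be modeled as follows. This is a minimal sketch with a hypothetical class name (RoundRobinFailoverSketch); it illustrates the rotation and fallback described in this section and is not the framework's dispatcher or its LoadBalancingStrategy implementation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Consumer;

// Plain-JDK model of round-robin dispatch with failover (hypothetical name).
final class RoundRobinFailoverSketch {

    private final List<Consumer<String>> handlers = new ArrayList<>();
    private final AtomicInteger nextStart = new AtomicInteger();
    private final boolean failover;

    RoundRobinFailoverSketch(boolean failover) {
        this.failover = failover;
    }

    void subscribe(Consumer<String> handler) {
        this.handlers.add(handler);
    }

    // Returns the index of the handler that accepted the message.
    int dispatch(String payload) {
        int size = this.handlers.size();
        if (size == 0) {
            throw new IllegalStateException("No subscribers");
        }
        // Each dispatch starts one position further along the rotation.
        int start = Math.floorMod(this.nextStart.getAndIncrement(), size);
        RuntimeException lastFailure = null;
        for (int attempt = 0; attempt < size; attempt++) {
            int index = (start + attempt) % size;
            try {
                this.handlers.get(index).accept(payload);
                return index;
            }
            catch (RuntimeException ex) {
                if (!this.failover) {
                    throw ex;
                }
                lastFailure = ex; // fall back to the next handler in order
            }
        }
        throw new IllegalStateException("All handlers failed", lastFailure);
    }

    public static void main(String[] args) {
        RoundRobinFailoverSketch dispatcher = new RoundRobinFailoverSketch(true);
        dispatcher.subscribe(payload -> { });
        dispatcher.subscribe(payload -> {
            throw new IllegalStateException("handler 1 is down");
        });
        dispatcher.subscribe(payload -> { });
        for (int i = 0; i < 4; i++) {
            System.out.println("message " + i + " handled by " + dispatcher.dispatch("m" + i));
        }
    }
}
```

With failover disabled in this model, the second message would fail in the sender instead of being passed on to the third handler.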

ExecutorChannel

The ExecutorChannel is a point-to-point channel that supports the same dispatcher configuration as DirectChannel (load-balancing strategy and the failover boolean property).

The key difference between these two dispatching channel types is that the ExecutorChannel delegates to an instance of TaskExecutor to perform the dispatch.

This means that the send method typically does not block, but it also means that the handler invocation may not occur in the sender’s thread.

It therefore does not support transactions that span the sender and receiving handler.

The sender can sometimes block.

For example, when using a TaskExecutor with a rejection policy that throttles the client (such as the ThreadPoolExecutor.CallerRunsPolicy), the sender’s thread can execute the method any time the thread pool is at its maximum capacity and the executor’s work queue is full.

Since that situation would only occur in a non-predictable way, you should not rely upon it for transactions.
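
The caller-runs situation can be reproduced with the JDK's ThreadPoolExecutor alone. The following sketch (CallerRunsSketch is a hypothetical name) saturates a pool that has one thread and no queue capacity and then shows that the next task runs in the submitting thread. It illustrates the executor behavior only and does not involve an ExecutorChannel.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;

// Demonstrates ThreadPoolExecutor.CallerRunsPolicy on a saturated pool (hypothetical name).
final class CallerRunsSketch {

    // Returns true if the second task ran in the submitting thread.
    static boolean secondTaskRunsInCaller() {
        // One worker thread and no queue capacity: while the first task is
        // running, a second task is rejected and CallerRunsPolicy runs it
        // in the caller's thread.
        ThreadPoolExecutor executor = new ThreadPoolExecutor(1, 1, 0L, TimeUnit.MILLISECONDS,
                new SynchronousQueue<>(), new ThreadPoolExecutor.CallerRunsPolicy());
        CountDownLatch release = new CountDownLatch(1);
        AtomicReference<Thread> secondTaskThread = new AtomicReference<>();
        try {
            executor.execute(() -> {
                try {
                    release.await(); // keep the only worker busy
                }
                catch (InterruptedException ex) {
                    Thread.currentThread().interrupt();
                }
            });
            executor.execute(() -> secondTaskThread.set(Thread.currentThread()));
        }
        finally {
            release.countDown();
            executor.shutdown();
        }
        return secondTaskThread.get() == Thread.currentThread();
    }

    public static void main(String[] args) {
        System.out.println("second task ran in caller: " + secondTaskRunsInCaller());
    }
}
```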


PartitionedChannel

Starting with version 6.1, a PartitionedChannel implementation is provided.

This is an extension of AbstractExecutorChannel and represents point-to-point dispatching logic where the actual consumption is processed on a specific thread, determined by the partition key evaluated from a message sent to this channel.

This channel is similar to the ExecutorChannel mentioned above, but with the difference that messages with the same partition key are always handled in the same thread, preserving ordering.

It does not require an external TaskExecutor, but can be configured with a custom ThreadFactory (e.g. Thread.ofVirtual().name("partition-", 0).factory()).

This factory is used to populate single-thread executors into a MessageDispatcher delegate, per partition.

By default, the IntegrationMessageHeaderAccessor.CORRELATION_ID message header is used as the partition key.

This channel can be configured as a simple bean:

@Bean

PartitionedChannel somePartitionedChannel() {

return new PartitionedChannel(3, (message) -> message.getHeaders().get("partitionKey"));

}

The channel has three partitions (dedicated threads) and uses the partitionKey header to determine the partition in which a message is handled.

See PartitionedChannel class Javadocs for more information.
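
The idea of routing by partition key can be sketched with one single-thread executor per partition. In the following plain-JDK model, PartitionSketch is a hypothetical class, and computing the partition as the key's hash code modulo the partition count is an assumption made for illustration; the framework's actual selection logic may differ.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Plain-JDK model of key-based partitioning (hypothetical name).
final class PartitionSketch {

    private final ExecutorService[] partitions;

    PartitionSketch(int partitionCount) {
        this.partitions = new ExecutorService[partitionCount];
        for (int i = 0; i < partitionCount; i++) {
            this.partitions[i] = Executors.newSingleThreadExecutor();
        }
    }

    // Assumption: the partition is the key's hash code modulo the partition count.
    int partitionFor(Object partitionKey) {
        return Math.floorMod(partitionKey.hashCode(), this.partitions.length);
    }

    // Runs the work on the key's partition thread and returns that thread's name.
    String handle(Object partitionKey) {
        Callable<String> work = () -> Thread.currentThread().getName();
        Future<String> result = this.partitions[partitionFor(partitionKey)].submit(work);
        try {
            return result.get();
        }
        catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(ex);
        }
        catch (ExecutionException ex) {
            throw new IllegalStateException(ex.getCause());
        }
    }

    void shutdown() {
        for (ExecutorService partition : this.partitions) {
            partition.shutdown();
        }
    }

    public static void main(String[] args) {
        PartitionSketch channel = new PartitionSketch(3);
        try {
            System.out.println("order-1 -> " + channel.handle("order-1"));
            System.out.println("order-1 -> " + channel.handle("order-1"));
            System.out.println("order-2 -> " + channel.handle("order-2"));
        }
        finally {
            channel.shutdown();
        }
    }
}
```

Because each partition has exactly one thread, work submitted for the same key runs sequentially in submission order.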

FluxMessageChannel

The FluxMessageChannel is an org.reactivestreams.Publisher implementation for "sinking" sent messages into an internal reactor.core.publisher.Flux for on demand consumption by reactive subscribers downstream.

This channel implementation is neither a SubscribableChannel nor a PollableChannel, so only org.reactivestreams.Subscriber instances can be used to consume from this channel, honoring the back-pressure nature of reactive streams.

On the other hand, the FluxMessageChannel implements a ReactiveStreamsSubscribableChannel with its subscribeTo(Publisher<Message<?>>) contract allowing receiving events from reactive source publishers, bridging a reactive stream into the integration flow.

To achieve fully reactive behavior for the whole integration flow, such a channel must be placed between all the endpoints in the flow.

See Reactive Streams Support for more information about interaction with Reactive Streams.

Scoped Channel

Spring Integration 1.0 provided a ThreadLocalChannel implementation, but that has been removed as of 2.0.

Now the more general way to handle the same requirement is to add a scope attribute to a channel.

The value of the attribute can be the name of a scope that is available within the context.

For example, in a web environment, certain scopes are available, and any custom scope implementations can be registered with the context.

The following example shows a thread-local scope being applied to a channel, including the registration of the scope itself:

<int:channel id="threadScopedChannel" scope="thread">

<int:queue />

</int:channel>

<bean class="org.springframework.beans.factory.config.CustomScopeConfigurer">

<property name="scopes">

<map>

<entry key="thread" value="org.springframework.context.support.SimpleThreadScope" />

</map>

</property>

</bean>

The channel defined in the previous example also delegates to a queue internally, but the channel is bound to the current thread, so the contents of the queue are similarly bound.

That way, the thread that sends to the channel can later receive those same messages, but no other thread would be able to access them.

While thread-scoped channels are rarely needed, they can be useful in situations where DirectChannel instances are being used to enforce a single thread of operation but any reply messages should be sent to a “terminal” channel.

If that terminal channel is thread-scoped, the original sending thread can collect its replies from the terminal channel.

Now, since any channel can be scoped, you can define your own scopes in addition to thread-local.
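
The effect of binding a queue to a thread can be modeled with java.lang.ThreadLocal. The following sketch uses a hypothetical class (ThreadScopedQueueSketch) and shows that a message sent by one thread is invisible to another thread but can be received by the sender. It is a conceptual model only and does not use Spring scopes.

```java
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.concurrent.atomic.AtomicReference;

// Plain-JDK model of a thread-bound queue (hypothetical name).
final class ThreadScopedQueueSketch {

    // Each thread lazily gets its own queue instance.
    private final ThreadLocal<Queue<String>> queue =
            ThreadLocal.withInitial(() -> new ArrayDeque<String>());

    void send(String payload) {
        this.queue.get().add(payload);
    }

    // Returns the next message for the calling thread, or null if it has none.
    String receive() {
        return this.queue.get().poll();
    }

    // Polls from a different thread and returns what that thread saw.
    String receiveFromOtherThread() {
        AtomicReference<String> seen = new AtomicReference<>();
        Thread other = new Thread(() -> seen.set(receive()));
        other.start();
        try {
            other.join();
        }
        catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(ex);
        }
        return seen.get();
    }

    public static void main(String[] args) {
        ThreadScopedQueueSketch channel = new ThreadScopedQueueSketch();
        channel.send("reply");
        System.out.println("other thread sees: " + channel.receiveFromOtherThread());
        System.out.println("sending thread sees: " + channel.receive());
    }
}
```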

8.1.3. Channel Interceptors

One of the advantages of a messaging architecture is the ability to provide common behavior and capture meaningful information about the messages passing through the system in a non-invasive way.

Since the Message instances are sent to and received from MessageChannel instances, those channels provide an opportunity for intercepting the send and receive operations.

The ChannelInterceptor strategy interface, shown in the following listing, provides methods for each of those operations:

public interface ChannelInterceptor {

Message<?> preSend(Message<?> message, MessageChannel channel);

void postSend(Message<?> message, MessageChannel channel, boolean sent);

void afterSendCompletion(Message<?> message, MessageChannel channel, boolean sent, Exception ex);

boolean preReceive(MessageChannel channel);

Message<?> postReceive(Message<?> message, MessageChannel channel);

void afterReceiveCompletion(Message<?> message, MessageChannel channel, Exception ex);

}

After implementing the interface, registering the interceptor with a channel is just a matter of making the following call:

channel.addInterceptor(someChannelInterceptor);

The methods that return a Message instance can be used for transforming the Message or can return 'null' to prevent further processing (of course, any of the methods can throw a RuntimeException).

Also, the preReceive method can return false to prevent the receive operation from proceeding.

Keep in mind that receive() calls are only relevant for PollableChannels.

In fact, the SubscribableChannel interface does not even define a receive() method.

The reason for this is that when a Message is sent to a SubscribableChannel, it is sent directly to zero or more subscribers, depending on the type of channel (for example, a PublishSubscribeChannel sends to all of its subscribers).

Therefore, the preReceive(…), postReceive(…), and afterReceiveCompletion(…) interceptor methods are invoked only when the interceptor is applied to a PollableChannel.


Spring Integration also provides an implementation of the Wire Tap pattern.

It is a simple interceptor that sends the Message to another channel without otherwise altering the existing flow.

It can be very useful for debugging and monitoring.

An example is shown in Wire Tap.

Because it is rarely necessary to implement all of the interceptor methods, the interface provides no-op methods (the void methods have no code, the Message-returning methods return the Message as-is, and the boolean method returns true).

The order of invocation for the interceptor methods depends on the type of channel.

As described earlier, the queue-based channels are the only ones where the receive() method is intercepted in the first place.

Additionally, the relationship between send and receive interception depends on the timing of the separate sender and receiver threads.

For example, if a receiver is already blocked while waiting for a message, the order could be as follows: preSend, preReceive, postReceive, postSend.

However, if a receiver polls after the sender has placed a message on the channel and has already returned, the order would be as follows: preSend, postSend (some-time-elapses), preReceive, postReceive.

The time that elapses in such a case depends on a number of factors and is therefore generally unpredictable (in fact, the receive may never happen).

The type of queue also plays a role (for example, rendezvous versus priority).

In short, you cannot rely on the order beyond the fact that preSend precedes postSend and preReceive precedes postReceive.


Starting with Spring Framework 4.1 and Spring Integration 4.1, the ChannelInterceptor provides new methods: afterSendCompletion() and afterReceiveCompletion().

They are invoked after send() and receive() calls, regardless of any exception that is raised, which allows for resource cleanup.

Note that the channel invokes these methods on the ChannelInterceptor list in the reverse order of the initial preSend() and preReceive() calls.
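The reverse-order rule can be illustrated with a simplified plain-JDK model of an interceptor chain. The InterceptorChainSketch class and its nested Interceptor interface are hypothetical and use String messages instead of the Spring types. The sketch also assumes that, when an interceptor vetoes the send by returning null, completion callbacks run only for the interceptors that were already applied, which is a design choice of this model:

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.Iterator;
import java.util.List;

// Simplified, hypothetical model of an interceptor chain (not the Spring types).
class InterceptorChainSketch {

    interface Interceptor {
        String name();

        // Returning null vetoes the send; the default passes the message through.
        default String preSend(String message) {
            return message;
        }
    }

    // Records the order in which callbacks fire.
    final List<String> calls = new ArrayList<>();
    private final List<Interceptor> interceptors = new ArrayList<>();

    void addInterceptor(Interceptor interceptor) {
        interceptors.add(interceptor);
    }

    boolean send(String message) {
        Deque<Interceptor> applied = new ArrayDeque<>();
        try {
            for (Interceptor interceptor : interceptors) {
                calls.add("preSend:" + interceptor.name());
                message = interceptor.preSend(message);
                if (message == null) {
                    return false; // vetoed, nothing is delivered
                }
                applied.add(interceptor);
            }
            return true; // a real channel would deliver the message here
        } finally {
            // Completion callbacks run in the reverse order of the preSend calls.
            Iterator<Interceptor> reversed = applied.descendingIterator();
            while (reversed.hasNext()) {
                calls.add("afterSendCompletion:" + reversed.next().name());
            }
        }
    }

    public static void main(String[] args) {
        InterceptorChainSketch channel = new InterceptorChainSketch();
        channel.addInterceptor(() -> "first");
        channel.addInterceptor(() -> "second");
        channel.send("payload");
        // prints [preSend:first, preSend:second, afterSendCompletion:second, afterSendCompletion:first]
        System.out.println(channel.calls);
    }
}
```

Unwinding in reverse order means that the interceptor that acquired a resource last releases it first, much like nested try/finally blocks.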

Starting with version 5.1, global channel interceptors also apply to dynamically registered channels, such as beans that are initialized by using beanFactory.initializeBean() or registered through IntegrationFlowContext when using the Java DSL.

Previously, interceptors were not applied when beans were created after the application context was refreshed.

Also, starting with version 5.1, ChannelInterceptor.postReceive() is no longer called when no message is received; it is no longer necessary to check for a null Message<?>.

Previously, the method was called with a null message in that case.

If you have an interceptor that relies on the previous behavior, implement afterReceiveCompletion() instead, since that method is invoked regardless of whether a message is received.

Starting with version 5.2, the ChannelInterceptorAware is deprecated in favor of InterceptableChannel from the Spring Messaging module, which it extends now for backward compatibility.


8.1.4. MessagingTemplate

As becomes clear when the endpoints and their various configuration options are introduced, Spring Integration provides a foundation for messaging components that enables non-invasive invocation of your application code from the messaging system.

However, it is sometimes necessary to invoke the messaging system from your application code.

For convenience when implementing such use cases, Spring Integration provides a MessagingTemplate that supports a variety of operations across the message channels, including request and reply scenarios.

For example, it is possible to send a request and wait for a reply, as follows:

MessagingTemplate template = new MessagingTemplate();

Message<?> reply = template.sendAndReceive(someChannel, new GenericMessage<>("test"));

In the preceding example, a temporary anonymous channel would be created internally by the template. The 'sendTimeout' and 'receiveTimeout' properties may also be set on the template, and other exchange types are also supported. The following listing shows the signatures for such methods:

public boolean send(final MessageChannel channel, final Message<?> message) { ...

}

public Message<?> sendAndReceive(final MessageChannel channel, final Message<?> request) { ...

}

public Message<?> receive(final PollableChannel channel) { ...

}
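The request and reply exchange described above, in which a temporary channel is created for the reply and a receive timeout bounds the wait, can be pictured with a plain-JDK model. This sketch does not use MessagingTemplate and is not its implementation. The RequestReplySketch class, its Request type, and the upper-casing service are hypothetical names chosen for illustration:

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical plain-JDK model of request/reply over a temporary reply queue.
class RequestReplySketch {

    // A request carries its payload plus the queue on which the reply is expected.
    static final class Request {
        final String payload;
        final BlockingQueue<String> replyQueue;

        Request(String payload, BlockingQueue<String> replyQueue) {
            this.payload = payload;
            this.replyQueue = replyQueue;
        }
    }

    // Sends the payload and waits up to receiveTimeoutMillis for a reply; returns null on timeout.
    static String sendAndReceive(BlockingQueue<Request> requestQueue, String payload,
            long receiveTimeoutMillis) {
        // The reply queue exists only for the duration of this exchange.
        BlockingQueue<String> temporaryReplyQueue = new ArrayBlockingQueue<>(1);
        try {
            requestQueue.put(new Request(payload, temporaryReplyQueue));
            return temporaryReplyQueue.poll(receiveTimeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
    }

    // Starts a daemon thread that answers each request with its upper-cased payload.
    static void startUpperCaseService(BlockingQueue<Request> requestQueue) {
        Thread worker = new Thread(() -> {
            try {
                while (true) {
                    Request request = requestQueue.take();
                    request.replyQueue.offer(request.payload.toUpperCase());
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        worker.setDaemon(true);
        worker.start();
    }

    public static void main(String[] args) {
        BlockingQueue<Request> requests = new LinkedBlockingQueue<>();
        startUpperCaseService(requests);
        System.out.println(sendAndReceive(requests, "test", 5000));
    }
}
```

Because the reply queue travels with the request, the caller needs no knowledge of which component eventually answers. If no reply arrives within the timeout, the caller gets null rather than blocking forever.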

A less invasive approach that lets you invoke simple interfaces with payload or header values instead of Message instances is described in Enter the GatewayProxyFactoryBean.


8.1.5. Configuring Message Channels

To create a message channel instance, you can use the <channel/> element for XML configuration or a DirectChannel instance for Java configuration, as follows:

@Bean

public MessageChannel exampleChannel() {

return new DirectChannel();

}

<int:channel id="exampleChannel"/>

When you use the <channel/> element without any sub-elements, it creates a DirectChannel instance (a SubscribableChannel).

To create a publish-subscribe channel, use the <publish-subscribe-channel/> element (the PublishSubscribeChannel in Java), as follows:

@Bean

public MessageChannel exampleChannel() {

return new PublishSubscribeChannel();

}

<int:publish-subscribe-channel id="exampleChannel"/>

You can alternatively provide a variety of <queue/> sub-elements to create any of the pollable channel types (as described in Message Channel Implementations).

The following sections show examples of each channel type.

DirectChannel Configuration

As mentioned earlier, DirectChannel is the default type.

The following listing shows how to define one:

@Bean

public MessageChannel directChannel() {

return new DirectChannel();

}

<int:channel id="directChannel"/>

A default channel has a round-robin load-balancer and also has failover enabled (see DirectChannel for more detail).

To disable one or both of these, add a <dispatcher/> sub-element (or, in Java configuration, call setFailover() or use the DirectChannel constructor that takes a LoadBalancingStrategy) and configure the attributes as follows:

@Bean

public MessageChannel failFastChannel() {

DirectChannel channel = new DirectChannel();

channel.setFailover(false);

return channel;

}

@Bean

public MessageChannel channelWithFixedOrderSequenceFailover() {

return new DirectChannel(null);

}

<int:channel id="failFastChannel">

<int:dispatcher failover="false"/>

</int:channel>

<int:channel id="channelWithFixedOrderSequenceFailover">

<int:dispatcher load-balancer="none"/>

</int:channel>

Datatype Channel Configuration

Sometimes, a consumer can process only a particular type of payload, forcing you to ensure the payload type of the input messages. The first thing that comes to mind may be to use a message filter. However, all that a message filter can do is filter out messages that are not compliant with the requirements of the consumer. Another way would be to use a content-based router and route messages with non-compliant data types to specific transformers to enforce transformation and conversion to the required data type. This would work, but a simpler way to accomplish the same thing is to apply the Datatype Channel pattern. You can use separate datatype channels for each specific payload data type.

To create a datatype channel that accepts only messages that contain a certain payload type, provide the data type’s fully-qualified class name in the channel element’s datatype attribute, as the following example shows:

@Bean

public MessageChannel numberChannel() {

DirectChannel channel = new DirectChannel();

channel.setDatatypes(Number.class);

return channel;

}

<int:channel id="numberChannel" datatype="java.lang.Number"/>

Note that the type check passes for any type that is assignable to the channel’s datatype.

In other words, the numberChannel in the preceding example would accept messages whose payload is java.lang.Integer or java.lang.Double.

Multiple types can be provided as a comma-delimited list, as the following example shows:

@Bean

public MessageChannel stringOrNumberChannel() {

DirectChannel channel = new DirectChannel();

channel.setDatatypes(String.class, Number.class);

return channel;

}

<int:channel id="stringOrNumberChannel" datatype="java.lang.String,java.lang.Number"/>

So the 'numberChannel' defined earlier accepts only messages with a payload type of java.lang.Number.

But what happens if the payload of the message is not of the required type?

It depends on whether you have defined a bean named integrationConversionService that is an instance of Spring’s Conversion Service.

If not, then an Exception would be thrown immediately.

However, if you have defined an integrationConversionService bean, it is used in an attempt to convert the message’s payload to the acceptable type.

You can even register custom converters.

For example, suppose you send a message with a String payload to the 'numberChannel' we configured above.

You might handle the message as follows:

MessageChannel inChannel = context.getBean("numberChannel", MessageChannel.class);

inChannel.send(new GenericMessage<String>("5"));

Typically, this would be a perfectly legal operation. However, since we use a datatype channel, such an operation generates an exception similar to the following:

Exception in thread "main" org.springframework.integration.MessageDeliveryException:

Channel 'numberChannel'

expected one of the following datataypes [class java.lang.Number],

but received [class java.lang.String]

…

The exception happens because we require the payload type to be a Number, but we sent a String.

So we need something to convert a String to a Number.

For that, we can implement a converter similar to the following example:

public static class StringToIntegerConverter implements Converter<String, Integer> {

public Integer convert(String source) {

return Integer.parseInt(source);

}

}

Then we can register it as a converter with the Integration Conversion Service, as the following example shows:

@Bean

@IntegrationConverter

public StringToIntegerConverter strToInt() {

return new StringToIntegerConverter();

}

<int:converter ref="strToInt"/>

<bean id="strToInt" class="org.springframework.integration.util.Demo.StringToIntegerConverter"/>

Alternatively, you can place the @IntegrationConverter annotation on the StringToIntegerConverter class itself when that class is marked with the @Component annotation for auto-scanning.

When the 'converter' element is parsed, it creates the integrationConversionService bean if one is not already defined.

With that converter in place, the send operation would now be successful, because the datatype channel uses that converter to convert the String payload to an Integer.
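The overall decision (accept assignable payloads as-is, otherwise try a conversion, otherwise reject) can be summarized in a plain-JDK model. The following sketch is not Spring Integration's implementation. The DatatypeCheckSketch class is hypothetical, and a java.util.function.Function stands in for the conversion service, with null meaning that no conversion service is defined:

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Function;

// Hypothetical plain-JDK model of a datatype check with a conversion fallback.
class DatatypeCheckSketch {

    private final List<Class<?>> datatypes;
    // Stand-in for a conversion service; null means none is configured.
    private final Function<Object, Object> converter;

    DatatypeCheckSketch(Function<Object, Object> converter, Class<?>... datatypes) {
        this.converter = converter;
        this.datatypes = Arrays.asList(datatypes);
    }

    // Returns the payload to deliver, converted if necessary, or throws if it cannot be accepted.
    Object accept(Object payload) {
        if (matches(payload)) {
            return payload;
        }
        if (converter != null) {
            Object converted = converter.apply(payload);
            if (converted != null && matches(converted)) {
                return converted;
            }
        }
        throw new IllegalArgumentException(
                "expected one of " + datatypes + " but received " + payload.getClass());
    }

    // True if the payload is an instance of any accepted type, including subtypes.
    private boolean matches(Object payload) {
        for (Class<?> type : datatypes) {
            if (type.isInstance(payload)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        Function<Object, Object> stringToInteger =
                p -> p instanceof String ? Integer.valueOf((String) p) : null;
        DatatypeCheckSketch numberChannel = new DatatypeCheckSketch(stringToInteger, Number.class);
        System.out.println(numberChannel.accept(2.5)); // prints 2.5
        System.out.println(numberChannel.accept("5")); // prints 5
    }
}
```

Constructing the same model with a null converter makes accept("5") throw, which mirrors the exception shown earlier for a String payload sent to the 'numberChannel'.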