VertexAI Embeddings

The Generative Language PaLM API allows developers to build generative AI applications using the PaLM model. Large Language Models (LLMs) are a powerful, versatile type of machine learning model that enables computers to comprehend and generate natural language through a series of prompts. The PaLM API is based on Google’s next-generation LLM, PaLM, which excels at a variety of tasks such as code generation, reasoning, and writing. You can use the PaLM API to build generative AI applications for use cases like content generation, dialogue agents, summarization and classification systems, and more.

The Spring AI client is based on the Models REST API.

Prerequisites

To access the PaLM2 REST API, you need to obtain an API key from MakerSuite.

| Currently, the PaLM API is not available outside the US, but you can use a VPN for testing. |

The Spring AI project defines a configuration property named spring.ai.vertex.ai.api-key that you should set to the API key you obtained.

Exporting an environment variable is one way to set that configuration property:

```shell
export SPRING_AI_VERTEX_AI_API_KEY=<INSERT KEY HERE>
```

Add Repositories and BOM

Spring AI artifacts are published in Spring Milestone and Snapshot repositories. Refer to the Repositories section to add these repositories to your build system.

To help with dependency management, Spring AI provides a BOM (bill of materials) to ensure that a consistent version of Spring AI is used throughout the entire project. Refer to the Dependency Management section to add the Spring AI BOM to your build system.

Auto-configuration

Spring AI provides Spring Boot auto-configuration for the VertexAI Embedding Client.

To enable it, add the following dependency to your project’s Maven pom.xml file:

```xml
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-vertex-ai-palm2-spring-boot-starter</artifactId>
</dependency>
```

or to your Gradle build.gradle file:

```groovy
dependencies {
    implementation 'org.springframework.ai:spring-ai-vertex-ai-palm2-spring-boot-starter'
}
```

| Refer to the Dependency Management section to add the Spring AI BOM to your build file. |

Embedding Properties

The prefix spring.ai.vertex.ai is the property prefix that lets you connect to Vertex AI.

| Property | Description | Default |
|---|---|---|
| spring.ai.vertex.ai.base-url | The URL to connect to | |
| spring.ai.vertex.ai.api-key | The API key | - |

The prefix spring.ai.vertex.ai.embedding is the property prefix that lets you configure the embedding client implementation for Vertex AI.

| Property | Description | Default |
|---|---|---|
| spring.ai.vertex.ai.embedding.enabled | Enable the Vertex AI PaLM API embedding client. | true |
| spring.ai.vertex.ai.embedding.model | The Vertex AI embedding model to use. | embedding-gecko-001 |
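The Default column describes the value used when a property is left unset. The plain-Java sketch below models only that fallback, using java.util.Properties with a defaults table copied from the embedding properties above. It is not how Spring Boot binds configuration (Spring Boot also handles relaxed names, environment variables, and profiles), and EmbeddingPropertyDefaults and its methods are hypothetical names.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

public final class EmbeddingPropertyDefaults {

    // Defaults copied from the "Default" column of the embedding properties table.
    static Properties tableDefaults() {
        Properties defaults = new Properties();
        defaults.setProperty("spring.ai.vertex.ai.embedding.enabled", "true");
        defaults.setProperty("spring.ai.vertex.ai.embedding.model", "embedding-gecko-001");
        return defaults;
    }

    // Parses properties-file text. Keys the text does not set are
    // resolved from the defaults when read through getProperty(...).
    static Properties load(String propertiesText) {
        Properties props = new Properties(tableDefaults());
        try {
            props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            // StringReader does no real I/O, but Properties.load declares IOException.
            throw new UncheckedIOException(e);
        }
        return props;
    }

    public static void main(String[] args) {
        // Only the API key is set explicitly; the model and enabled flag fall back to defaults.
        Properties props = load("spring.ai.vertex.ai.api-key=YOUR_API_KEY\n");
        System.out.println(props.getProperty("spring.ai.vertex.ai.embedding.model")); // embedding-gecko-001
        System.out.println(props.getProperty("spring.ai.vertex.ai.embedding.enabled")); // true
    }
}
```

Note that Properties.getProperty consults the defaults, while the Map-style get does not.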

Sample Controller (Auto-configuration)

Create a new Spring Boot project and add the spring-ai-vertex-ai-palm2-spring-boot-starter to your pom (or Gradle) dependencies.

Add an application.properties file under the src/main/resources directory to enable and configure the Vertex AI embedding client:

```properties
spring.ai.vertex.ai.api-key=YOUR_API_KEY
spring.ai.vertex.ai.embedding.model=embedding-gecko-001
```

Replace YOUR_API_KEY with your Vertex AI API key.
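If you would rather not keep the key in application.properties, you can omit the spring.ai.vertex.ai.api-key line and export SPRING_AI_VERTEX_AI_API_KEY instead, as shown in the Prerequisites section. A small fail-fast lookup like the hypothetical ApiKeyCheck below (plain Java, not part of Spring AI) makes a missing key obvious at startup, and the same value can be passed to the VertexAiPaLm2Api constructor when configuring the client manually.

```java
import java.util.Map;

public final class ApiKeyCheck {

    // Environment variable name used in the Prerequisites section.
    static final String ENV_VAR = "SPRING_AI_VERTEX_AI_API_KEY";

    // Returns the trimmed key from the given environment map,
    // or throws with a clear message if it is missing or blank.
    static String requireApiKey(Map<String, String> env) {
        String value = env.get(ENV_VAR);
        if (value == null || value.isBlank()) {
            throw new IllegalStateException(ENV_VAR + " is not set; export it before starting the application");
        }
        return value.strip();
    }

    public static void main(String[] args) {
        // System.getenv() returns the process environment as an unmodifiable Map.
        String apiKey = requireApiKey(System.getenv());
        System.out.println("Found an API key of length " + apiKey.length());
    }
}
```

Taking the environment as a Map parameter, rather than calling System.getenv() inside the method, keeps the check easy to test.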


This will create a VertexAiPaLm2EmbeddingClient implementation that you can inject into your class.

Here is an example of a simple @RestController class that uses the embedding client to compute embeddings.

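```java
import java.util.List;
import java.util.Map;

import org.springframework.ai.embedding.EmbeddingClient;
import org.springframework.ai.embedding.EmbeddingResponse;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class EmbeddingController {

    private final EmbeddingClient embeddingClient;

    @Autowired
    public EmbeddingController(EmbeddingClient embeddingClient) {
        this.embeddingClient = embeddingClient;
    }

    // Embeds the "message" query parameter and returns the full embedding response.
    @GetMapping("/ai/embedding")
    public Map<String, EmbeddingResponse> embed(@RequestParam(value = "message", defaultValue = "Tell me a joke") String message) {
        EmbeddingResponse embeddingResponse = this.embeddingClient.embedForResponse(List.of(message));
        return Map.of("embedding", embeddingResponse);
    }
}
```

Manual Configuration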

The VertexAiPaLm2EmbeddingClient implements the EmbeddingClient and uses the low-level VertexAiPaLm2Api client to connect to the Vertex AI service.

Add the spring-ai-vertex-ai-palm2 dependency to your project’s Maven pom.xml file:

```xml
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-vertex-ai-palm2</artifactId>
</dependency>
```

or to your Gradle build.gradle file:

```groovy
dependencies {
    implementation 'org.springframework.ai:spring-ai-vertex-ai-palm2'
}
```

| Refer to the Dependency Management section to add the Spring AI BOM to your build file. |

Next, create a VertexAiPaLm2EmbeddingClient and use it to compute embeddings:

```java
// Replace the placeholder with your PaLM API key.
VertexAiPaLm2Api vertexAiApi = new VertexAiPaLm2Api("<YOUR_PALM_API_KEY>");

var embeddingClient = new VertexAiPaLm2EmbeddingClient(vertexAiApi);

EmbeddingResponse embeddingResponse = embeddingClient
        .embedForResponse(List.of("Hello World", "World is big and salvation is near"));
```

Low-level VertexAiPaLm2Api Client

The VertexAiPaLm2Api provides a lightweight Java client for the Vertex AI PaLM2 API.

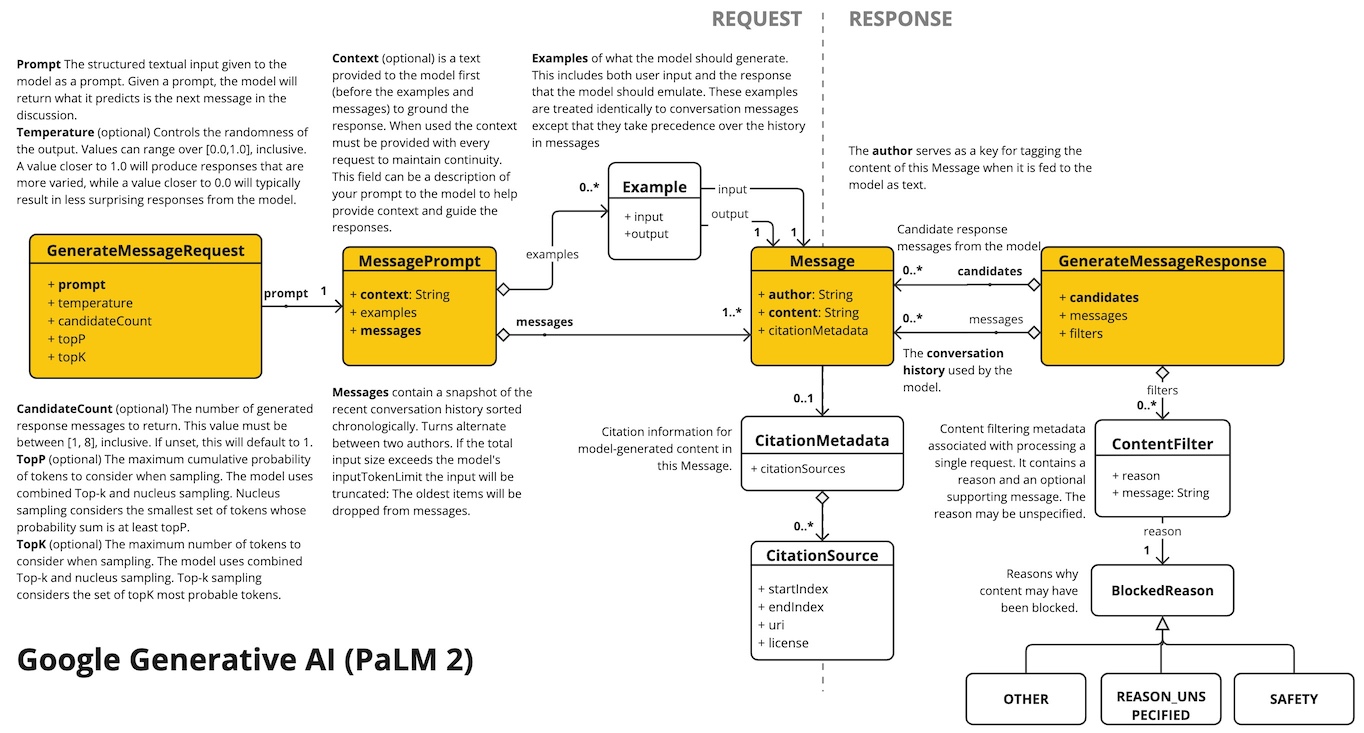

Following class diagram illustrates the VertexAiPaLm2Api embedding interfaces and building blocks:

Here is a simple snippet showing how to use the API programmatically:

```java
// Replace the placeholder with your PaLM API key.
VertexAiPaLm2Api vertexAiApi = new VertexAiPaLm2Api("<YOUR_PALM_API_KEY>");

// Generate a message response
var prompt = new MessagePrompt(List.of(new Message("0", "Hello, how are you?")));
GenerateMessageRequest request = new GenerateMessageRequest(prompt);
GenerateMessageResponse response = vertexAiApi.generateMessage(request);

// Embed a single text
Embedding embedding = vertexAiApi.embedText("Hello, how are you?");

// Embed several texts in one batch call
List<Embedding> embeddings = vertexAiApi.batchEmbedText(List.of("Hello, how are you?", "I am fine, thank you!"));
```
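Embeddings such as those returned by embedText or batchEmbedText are commonly compared with cosine similarity, the dot product of two vectors divided by the product of their lengths. The sketch below assumes each vector has already been extracted as a List<Double>; how the numbers are exposed on Embedding depends on your Spring AI version, so check that class before wiring this in. EmbeddingSimilarity and cosineSimilarity are hypothetical names, not part of Spring AI.

```java
import java.util.List;

public final class EmbeddingSimilarity {

    // Cosine similarity of two equal-length vectors: dot(a, b) / (|a| * |b|).
    // Returns a value in [-1, 1]; values near 1 mean the vectors point in a similar direction.
    static double cosineSimilarity(List<Double> a, List<Double> b) {
        if (a.size() != b.size()) {
            throw new IllegalArgumentException("Vectors must have the same dimension");
        }
        double dot = 0.0;
        double normA = 0.0;
        double normB = 0.0;
        for (int i = 0; i < a.size(); i++) {
            double x = a.get(i);
            double y = b.get(i);
            dot += x * y;
            normA += x * x;
            normB += y * y;
        }
        if (normA == 0.0 || normB == 0.0) {
            throw new IllegalArgumentException("Cosine similarity is undefined for a zero vector");
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    public static void main(String[] args) {
        // Toy 3-dimensional vectors; real embeddings have many more dimensions.
        List<Double> v1 = List.of(1.0, 0.0, 1.0);
        List<Double> v2 = List.of(1.0, 1.0, 0.0);
        System.out.println(cosineSimilarity(v1, v2));
    }
}
```

Which score counts as "similar enough" depends on the model and the data, so a threshold is best chosen empirically.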