
Function Calling API

| This page describes the previous version of the Function Calling API, which has been deprecated and marked for removal in the next release. The current version is available at Tool Calling. See the Migration Guide for more information. |

The integration of function support in AI models permits the model to request the execution of client-side functions, thereby accessing necessary information or performing tasks dynamically as required.

Spring AI currently supports tool/function calling for the following AI Models:

- Anthropic Claude: Refer to the Anthropic Claude tool/function calling.
- Azure OpenAI: Refer to the Azure OpenAI tool/function calling.
- Amazon Bedrock Converse: Refer to the Amazon Bedrock Converse tool/function calling.
- Google VertexAI Gemini: Refer to the Vertex AI Gemini tool/function calling.
- Groq: Refer to the Groq tool/function calling.
- Mistral AI: Refer to the Mistral AI tool/function calling.
- Ollama: Refer to the Ollama tool/function calling.
- OpenAI: Refer to the OpenAI tool/function calling.

You can register custom Java functions with the ChatClient and have the AI model intelligently choose to output a JSON object containing arguments to call one or many of the registered functions.

This allows you to connect the LLM capabilities with external tools and APIs.

The AI models are trained to detect when a function should be called and to respond with JSON that adheres to the function signature.

The API does not call the function directly; instead, the model generates JSON that you can use to call the function in your code and return the result back to the model to complete the conversation.

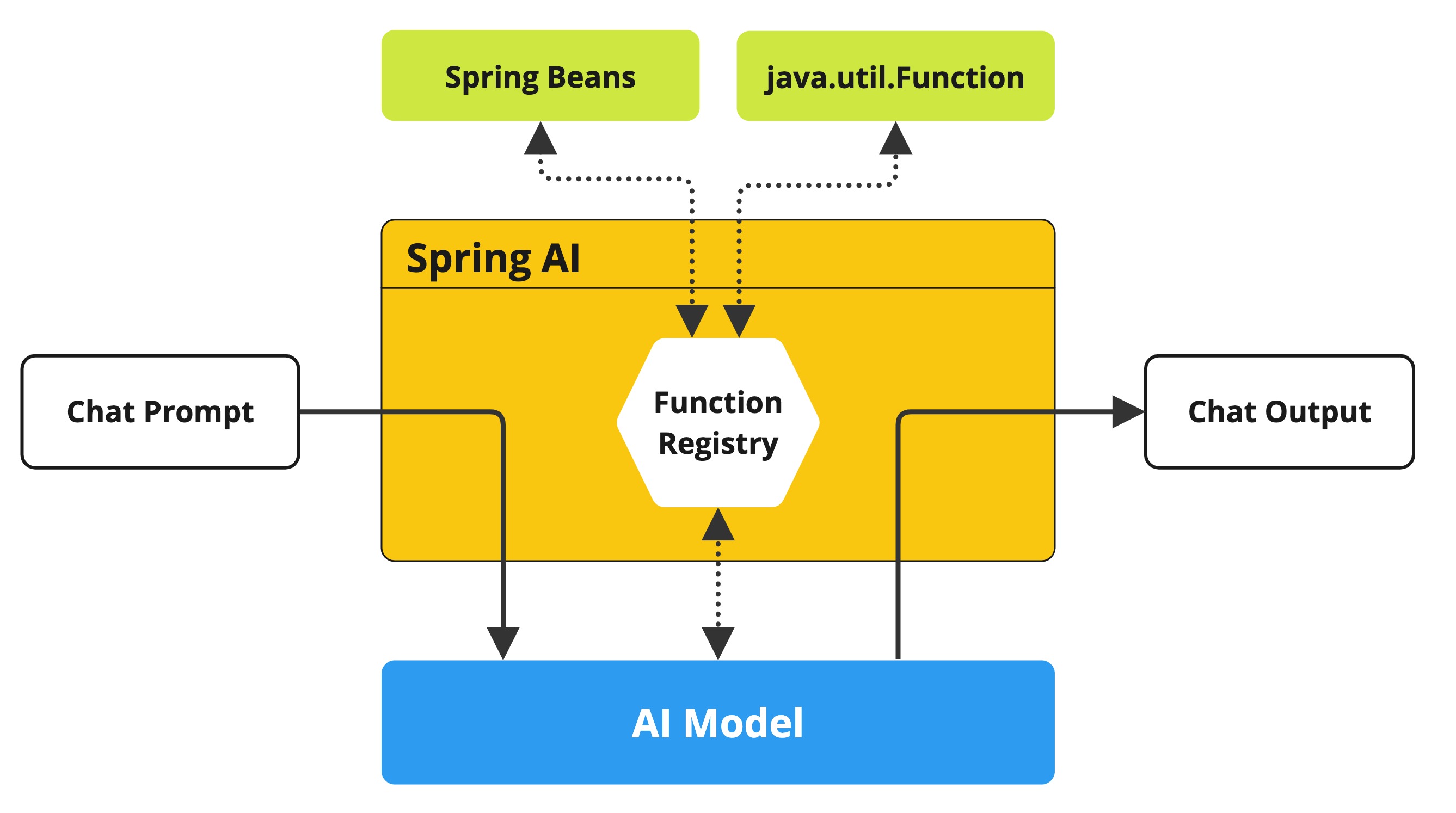

Spring AI provides flexible and user-friendly ways to register and call custom functions.

In general, the custom functions need to provide a function name, description, and the function call signature (as JSON schema) to let the model know what arguments the function expects. The description helps the model to understand when to call the function.

As a developer, you need to implement a function that takes the function call arguments sent from the AI model, and responds with the result back to the model. Your function can in turn invoke other 3rd party services to provide the results.

Spring AI makes this as easy as defining a @Bean that returns a java.util.function.Function and either supplying the bean name as an option when invoking the ChatClient or registering the function dynamically in your prompt request.

Under the hood, Spring wraps your POJO (the function) with the appropriate adapter code that enables interaction with the AI Model, saving you from writing tedious boilerplate code. The basis of the underlying infrastructure is the FunctionCallback.java interface and the companion Builder utility class to simplify the implementation and registration of Java callback functions.

How it works

Suppose we want the AI model to respond with information that it does not have, for example, the current temperature at a given location.

We can provide the AI model with metadata about our own functions that it can use to retrieve that information as it processes your prompt.

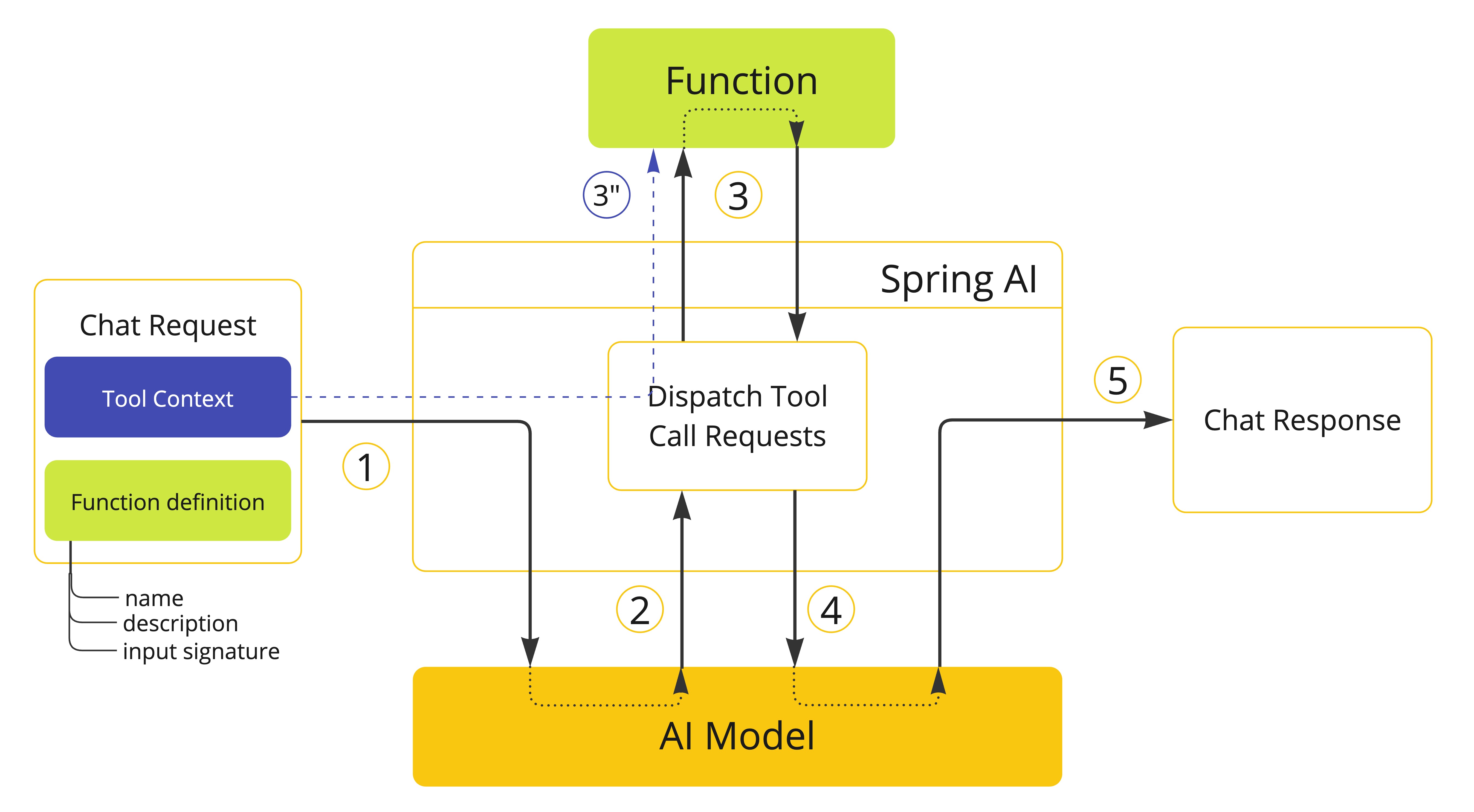

For example, if during the processing of a prompt the AI Model determines that it needs additional information about the temperature in a given location, it will start a server-side generated request/response interaction. Instead of returning the final response message, the AI Model returns a special Tool Call request, providing the function name and arguments (as JSON). It is the responsibility of the client to process this message, execute the named function, and return the response as a Tool Response message back to the AI Model.
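The round trip described above can be sketched without any framework. The following is a minimal illustration, not Spring AI's implementation: the `ToolCallLoopSketch` class, its `ToolCall` and `ToolResponse` records, and the `register`/`handle` methods are hypothetical names invented for this sketch, and the arguments are passed around as raw JSON strings rather than parsed.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical, framework-free sketch of the tool-call round trip.
final class ToolCallLoopSketch {

    // What the model sends back instead of a final answer: a function name plus raw JSON arguments.
    record ToolCall(String functionName, String arguments) {}

    // What the client returns to the model after executing the named function.
    record ToolResponse(String functionName, String result) {}

    // Client-side registry: function name -> implementation that takes the raw arguments.
    private final Map<String, Function<String, String>> registry = new LinkedHashMap<>();

    void register(String name, Function<String, String> function) {
        registry.put(name, function);
    }

    // Executes the function named in the tool call and wraps the result for the model.
    ToolResponse handle(ToolCall call) {
        Function<String, String> function = registry.get(call.functionName());
        if (function == null) {
            throw new IllegalArgumentException("Unknown function: " + call.functionName());
        }
        return new ToolResponse(call.functionName(), function.apply(call.arguments()));
    }

    public static void main(String[] args) {
        ToolCallLoopSketch client = new ToolCallLoopSketch();
        client.register("currentWeather", arguments -> "{\"temp\":30.0,\"unit\":\"C\"}");

        // Pretend the model answered the prompt with this tool call.
        ToolCall call = new ToolCall("currentWeather", "{\"location\":\"Boston\",\"unit\":\"C\"}");
        ToolResponse response = client.handle(call);
        System.out.println(response.functionName() + " -> " + response.result());
    }
}
```

In a real application this brokering is the part Spring AI performs for you; the sketch only makes the message flow visible.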

Spring AI greatly simplifies the code you need to write to support function invocation.

It brokers the function invocation conversation for you.

You can simply provide your function definition as a @Bean and then provide the bean name of the function in your prompt options or pass the function directly as a parameter in your prompt request options.

You can also reference multiple function bean names in your prompt.

Example Use Case

Let’s define a simple use case that we can use as an example to explain how function invocation works. Let’s create a chatbot that answers questions by calling our own function. To support the response of the chatbot, we will register our own function that takes a location and returns the current weather in that location.

When the model needs to answer a question such as "What’s the weather like in Boston?" the AI model will invoke the client providing the location value as an argument to be passed to the function. This RPC-like data is passed as JSON.

Our function calls some SaaS-based weather service API and returns the weather response back to the model to complete the conversation. In this example, we will use a simple implementation named MockWeatherService that hard-codes the temperature for various locations.

The following MockWeatherService class represents the weather service API:

Java

    import java.util.function.Function;

    public class MockWeatherService implements Function<MockWeatherService.Request, MockWeatherService.Response> {

        public enum Unit { C, F }

        public record Request(String location, Unit unit) {}

        public record Response(double temp, Unit unit) {}

        // Mock implementation: always reports 30.0 degrees Celsius
        @Override
        public Response apply(Request request) {
            return new Response(30.0, Unit.C);
        }

    }

Kotlin

    class MockWeatherService : Function1<Request, Response> {
        override fun invoke(request: Request) = Response(30.0, Unit.C)
    }

    enum class Unit { C, F }

    data class Request(val location: String, val unit: Unit) {}

    data class Response(val temp: Double, val unit: Unit) {}

Server-Side Registration

Functions as Beans

Spring AI provides multiple ways to register custom functions as beans in the Spring context.

We start by describing the most POJO-friendly options.

Plain Functions

In this approach, you define a @Bean in your application context as you would any other Spring managed object.

Internally, Spring AI ChatModel will create an instance of a FunctionCallback that adds the logic for it being invoked via the AI model.

The name of the @Bean is used as the function name.

Java

    @Configuration
    static class Config {

        @Bean
        @Description("Get the weather in location") // function description
        public Function<MockWeatherService.Request, MockWeatherService.Response> currentWeather() {
            return new MockWeatherService();
        }

    }

Kotlin

    @Configuration
    class Config {

        @Bean
        @Description("Get the weather in location") // function description
        fun currentWeather(): (Request) -> Response = MockWeatherService()

    }

The @Description annotation is optional and provides a function description that helps the model understand when to call the function. It is an important property to set to help the AI model determine what client side function to invoke.

Another option for providing the description of the function is to use the @JsonClassDescription annotation on the MockWeatherService.Request:

Java

    @Configuration
    static class Config {

        @Bean
        public Function<Request, Response> currentWeather() { // bean name as function name
            return new MockWeatherService();
        }

    }

    @JsonClassDescription("Get the weather in location") // function description
    public record Request(String location, Unit unit) {}

Kotlin

    @Configuration
    class Config {

        @Bean
        fun currentWeather(): (Request) -> Response { // bean name as function name
            return MockWeatherService()
        }

    }

    @JsonClassDescription("Get the weather in location") // function description
    data class Request(val location: String, val unit: Unit)

It is a best practice to annotate the request object with information such that the generated JSON schema of that function is as descriptive as possible to help the AI model pick the correct function to invoke.

FunctionCallback

Another way to register a function is to create a FunctionCallback like this:

Java

    @Configuration
    static class Config {

        @Bean
        public FunctionCallback weatherFunctionInfo() {
            return FunctionCallback.builder()
                .function("CurrentWeather", new MockWeatherService()) // (1) function name and instance
                .description("Get the weather in location") // (2) function description
                .inputType(MockWeatherService.Request.class) // (3) input type to build the JSON schema
                .build();
        }

    }

Kotlin

    import org.springframework.ai.model.function.withInputType

    @Configuration
    class Config {

        @Bean
        fun weatherFunctionInfo(): FunctionCallback {
            return FunctionCallback.builder()
                .function("CurrentWeather", MockWeatherService()) // (1) function name and instance
                .description("Get the weather in location") // (2) function description
                // (3) Required due to Kotlin SAM conversion being an opaque lambda
                .inputType<MockWeatherService.Request>()
                .build()
        }

    }

It wraps the 3rd party MockWeatherService function and registers it as a CurrentWeather function with the ChatClient.

It also provides a description (2) and an optional response converter to convert the response into a text as expected by the model.

| By default, the response converter performs a JSON serialization of the Response object. |

The FunctionCallback.Builder internally resolves the function call signature based on the MockWeatherService.Request class.
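To give a feel for what "resolving the call signature from the input type" can mean, here is a deliberately small sketch that derives a JSON-schema-like string from a record using reflection. It is not the generator Spring AI uses; `RecordSchemaSketch`, `typeSchema`, and `schemaFor` are hypothetical names, and only strings, enums, and a few numeric types are handled.

```java
import java.lang.reflect.RecordComponent;
import java.util.StringJoiner;

// Hypothetical sketch: derive a minimal JSON schema from a record's components.
final class RecordSchemaSketch {

    enum Unit { C, F }

    record Request(String location, Unit unit) {}

    // Maps a Java type to a schema fragment; covers only the types used in this sketch.
    static String typeSchema(Class<?> type) {
        if (type.isEnum()) {
            StringJoiner values = new StringJoiner(",", "[", "]");
            for (Object constant : type.getEnumConstants()) {
                values.add("\"" + constant + "\"");
            }
            return "{\"type\":\"string\",\"enum\":" + values + "}";
        }
        if (type == String.class) {
            return "{\"type\":\"string\"}";
        }
        if (type == double.class || type == Double.class || type == int.class || type == Integer.class) {
            return "{\"type\":\"number\"}";
        }
        // Fallback for anything else: treated as an opaque object.
        return "{\"type\":\"object\"}";
    }

    // Builds an object schema whose properties follow the record's declaration order.
    static String schemaFor(Class<? extends Record> recordType) {
        StringJoiner properties = new StringJoiner(",", "{", "}");
        for (RecordComponent component : recordType.getRecordComponents()) {
            properties.add("\"" + component.getName() + "\":" + typeSchema(component.getType()));
        }
        return "{\"type\":\"object\",\"properties\":" + properties + "}";
    }

    public static void main(String[] args) {
        System.out.println(schemaFor(Request.class));
    }
}
```

A schema of this kind, together with the function name and description, is what tells the model which arguments the function expects.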


Enable functions by bean name

To let the model know about and call your CurrentWeather function, you need to enable it in your prompt requests:

    ChatClient chatClient = ...

    ChatResponse response = chatClient.prompt("What's the weather like in San Francisco, Tokyo, and Paris?")
        .functions("CurrentWeather") // Enable the function
        .call()
        .chatResponse();

    logger.info("Response: {}", response);

The above user question will trigger 3 calls to the CurrentWeather function (one for each city) and the final response will be something like this:

    Here is the current weather for the requested cities:
    - San Francisco, CA: 30.0°C
    - Tokyo, Japan: 10.0°C
    - Paris, France: 15.0°C

The FunctionCallbackWithPlainFunctionBeanIT.java test demonstrates this approach.

Client-Side Registration

In addition to the auto-configuration, you can register callback functions dynamically.

You can use either the function invoking or method invoking approaches to register functions with your ChatClient or ChatModel requests.

The client-side registration enables you to register functions by default.

Function Invoking

    ChatClient chatClient = ...

    ChatResponse response = chatClient.prompt("What's the weather like in San Francisco, Tokyo, and Paris?")
        .functions(FunctionCallback.builder()
            .function("currentWeather", (Request request) -> new Response(30.0, Unit.C)) // (1) function name and instance
            .description("Get the weather in location") // (2) function description
            .inputType(MockWeatherService.Request.class) // (3) input type to build the JSON schema
            .build())
        .call()
        .chatResponse();

| Functions registered on the fly are enabled by default for the duration of this request. |

This approach lets you dynamically choose which functions can be called based on the user input.

The FunctionCallbackInPromptIT.java integration test provides a complete example of how to register a function with the ChatClient and use it in a prompt request.

Method Invoking

The MethodInvokingFunctionCallback enables method invocation through reflection while automatically handling JSON schema generation and parameter conversion.

It’s particularly useful for integrating Java methods as callable functions within AI model interactions.

The MethodInvokingFunctionCallback implements the FunctionCallback interface and provides:

- Automatic JSON schema generation for method parameters
- Support for both static and instance methods
- Any number of parameters (including none) and return values (including void)
- Any parameter/return types (primitives, objects, collections)
- Special handling for ToolContext parameters
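The reflection mechanics behind this kind of callback can be illustrated with plain `java.lang.reflect`. The sketch below is an assumption-laden illustration rather than the actual MethodInvokingFunctionCallback code: `ReflectiveInvokerSketch`, its `invoke` helper, and the `Thermostat` target class are hypothetical, and JSON conversion of the arguments is left out entirely.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;

// Hypothetical sketch: look up a method by name and parameter types, then invoke it.
final class ReflectiveInvokerSketch {

    // Example target with one static and one instance method.
    public static class Thermostat {
        private int target = 20;

        public static String describe(String city, int degrees) {
            return city + ": " + degrees + "C";
        }

        public int raise(int delta) {
            target += delta;
            return target;
        }
    }

    // For static methods the target object may be null; instance methods require one.
    static Object invoke(Class<?> type, Object target, String methodName,
            Class<?>[] parameterTypes, Object... arguments) {
        try {
            Method method = type.getMethod(methodName, parameterTypes);
            if (!Modifier.isStatic(method.getModifiers()) && target == null) {
                throw new IllegalArgumentException("Instance method requires a target object");
            }
            return method.invoke(target, arguments);
        } catch (ReflectiveOperationException e) {
            // Wrap checked reflection failures so callers see a single unchecked exception type.
            throw new IllegalStateException("Could not invoke " + methodName, e);
        }
    }

    public static void main(String[] args) {
        // Static method: no target object needed.
        System.out.println(invoke(Thermostat.class, null, "describe",
                new Class<?>[] {String.class, int.class}, "Paris", 15));
        // Instance method: the target object carries the state.
        System.out.println(invoke(Thermostat.class, new Thermostat(), "raise",
                new Class<?>[] {int.class}, 5));
    }
}
```

The split between a target class for static methods and a target object for instance methods mirrors the `targetClass` and `targetObject` options shown in the builder examples that follow.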

You need the FunctionCallback.Builder to create a MethodInvokingFunctionCallback like this:

    // Create using builder pattern
    FunctionCallback methodInvokingCallback = FunctionCallback.builder()
        .method("MethodName", Class<?>...argumentTypes) // The method to invoke and its argument types
        .description("Function calling description") // Hints the AI to know when to call this method
        .targetObject(targetObject) // Required for instance methods; for static methods use targetClass
        .build();

Here are a few usage examples:

Static Method Invocation

    public class WeatherService {
        public static String getWeather(String city, TemperatureUnit unit) {
            return "Temperature in " + city + ": 20" + unit;
        }
    }

    // Usage
    FunctionCallback callback = FunctionCallback.builder()
        .method("getWeather", String.class, TemperatureUnit.class)
        .description("Get weather information for a city")
        .targetClass(WeatherService.class)
        .build();

Instance Method with ToolContext

    public class DeviceController {
        public void setDeviceState(String deviceId, boolean state, ToolContext context) {
            Map<String, Object> contextData = context.getContext();
            // Implementation using context data
        }
    }

    // Usage
    DeviceController controller = new DeviceController();

    String response = ChatClient.create(chatModel).prompt()
        .user("Turn on the living room lights")
        .functions(FunctionCallback.builder()
            .method("setDeviceState", String.class, boolean.class, ToolContext.class)
            .description("Control device state")
            .targetObject(controller)
            .build())
        .toolContext(Map.of("location", "home"))
        .call()
        .content();

The OpenAiChatClientMethodInvokingFunctionCallbackIT integration test provides additional examples of how to use the FunctionCallback.Builder to create method invocation FunctionCallbacks.

Tool Context

Spring AI now supports passing additional contextual information to function callbacks through a tool context. This feature allows you to provide extra, user-provided data that can be used within the function execution along with the function arguments passed by the AI model.

The ToolContext class provides a way to pass additional context information.

Using Tool Context

For function invoking, the context information is passed in as the second argument of a java.util.function.BiFunction.

For method-invoking, the context information is passed as a method argument of type ToolContext.

Function Invoking

You can set the tool context when building your chat options and use a BiFunction for your callback:

    BiFunction<MockWeatherService.Request, ToolContext, MockWeatherService.Response> weatherFunction =
        (request, toolContext) -> {
            String sessionId = (String) toolContext.getContext().get("sessionId");
            String userId = (String) toolContext.getContext().get("userId");

            // Use sessionId and userId in your function logic
            double temperature = 0;
            if (request.location().contains("Paris")) {
                temperature = 15;
            }
            else if (request.location().contains("Tokyo")) {
                temperature = 10;
            }
            else if (request.location().contains("San Francisco")) {
                temperature = 30;
            }

            // Uses the two-field Response record defined earlier on this page
            return new MockWeatherService.Response(temperature, MockWeatherService.Unit.C);
        };

    ChatResponse response = chatClient.prompt("What's the weather like in San Francisco, Tokyo, and Paris?")
        .functions(FunctionCallback.builder()
            .function("getCurrentWeather", weatherFunction)
            .description("Get the weather in location")
            .inputType(MockWeatherService.Request.class)
            .build())
        .toolContext(Map.of("sessionId", "1234", "userId", "5678"))
        .call()
        .chatResponse();

In this example, the weatherFunction is defined as a BiFunction that takes both the request and the tool context as parameters. This allows you to access the context directly within the function logic.

This approach allows you to pass session-specific or user-specific information to your functions, enabling more contextual and personalized responses.
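The idea of a second, caller-supplied argument can be tried out in isolation with nothing but the JDK. In the sketch below a plain `Map<String, Object>` stands in for the tool context; `ToolContextSketch`, its `Request` and `Response` records, and the `WEATHER` function are hypothetical names used only for illustration, and the temperatures are hard-coded.

```java
import java.util.Map;
import java.util.function.BiFunction;

// Hypothetical sketch: a function that receives model arguments plus caller-supplied context.
final class ToolContextSketch {

    record Request(String location) {}

    record Response(String location, double temp, String servedFor) {}

    // First argument: what the model supplies. Second argument: data supplied by the
    // application, standing in for the tool context.
    static final BiFunction<Request, Map<String, Object>, Response> WEATHER =
            (request, context) -> {
                String userId = (String) context.getOrDefault("userId", "anonymous");
                double temp = request.location().contains("Paris") ? 15.0 : 30.0;
                return new Response(request.location(), temp, userId);
            };

    public static void main(String[] args) {
        Response response = WEATHER.apply(new Request("Paris, France"), Map.of("userId", "5678"));
        System.out.println(response);
    }
}
```

Keeping the context separate from the request means values such as a user or session identifier never have to be exposed to, or produced by, the model.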

Method Invoking

    public class DeviceController {
        public void setDeviceState(String deviceId, boolean state, ToolContext context) {
            Map<String, Object> contextData = context.getContext();
            // Implementation using context data
        }
    }

    // Usage
    DeviceController controller = new DeviceController();

    String response = ChatClient.create(chatModel).prompt()
        .user("Turn on the living room lights")
        .functions(FunctionCallback.builder()
            .method("setDeviceState", String.class, boolean.class, ToolContext.class)
            .description("Control device state")
            .targetObject(controller)
            .build())
        .toolContext(Map.of("location", "home"))
        .call()
        .content();